Quantum Error Correction for the Toric Code Using Deep Reinforcement Learning

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Lattice 2018, 28 July 2018. This talk has two parts: (1) near-term prospects for quantum computing; (2) opportunities in quantum simulation of quantum field theory. Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics. [Slide: Frontiers of Physics. Short distance: Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory. Long distance: large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves. Complexity: “More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime.] A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) [Slide images: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe.] Two fundamental ideas: (1) Quantum complexity: why we think quantum computing is powerful. (2) Quantum error correction: why we think quantum computing is scalable. A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe. Why we think quantum computing is powerful: we know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.

Painstakingly-Edited Typeset Lecture Notes

Physics 239: Topology from Physics, Winter 2021. Lecturer: McGreevy. These lecture notes live here. Please email corrections and questions to mcgreevy at physics dot ucsd dot edu. Last updated: May 26, 2021, 14:36:17. Contents: 0.1 Introductory remarks; 0.2 Conventions; 0.3 Sources. 1 The toric code and homology: 1.1 Cell complexes and homology; 1.2 p-form Z_N toric code; 1.3 Some examples; 1.4 Higgsing, change of coefficients, exact sequences; 1.5 Independence of cellulation; 1.6 Gapped boundaries and relative homology; 1.7 Duality. 2 Supersymmetric quantum mechanics and cohomology, index theory, Morse theory: 2.1 Supersymmetric quantum mechanics; 2.2 Differential forms consolidation; 2.3 Supersymmetric QM and Morse theory; 2.4 Global information from local information; 2.5 Homology and cohomology; 2.6 Cech cohomology; 2.7 Local reconstructability of quantum states. 3 Quantum Double Model and Homotopy: 3.1 Notions of 'same'; 3.2 Homotopy equivalence and cohomology; 3.3 Homotopy equivalence and homology; 3.4 Morse theory and homotopy

Quantum Machine Learning: Benefits and Practical Examples

Quantum Machine Learning: Benefits and Practical Examples. Frank Phillipson1[0000-0003-4580-7521] 1 TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands [email protected] Abstract. A quantum computer that is useful in practice is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected on run time, capacity and learning efficiency. In this paper, these benefits are presented and for each benefit an example application is presented. A quantum hybrid Helmholtz machine uses quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency. Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications. 1 Introduction. Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that uses even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms.

Superconducting Quantum Simulator for Topological Order and the Toric Code

Superconducting Quantum Simulator for Topological Order and the Toric Code. Mahdi Sameti,1 Anton Potočnik,2 Dan E. Browne,3 Andreas Wallraff,2 and Michael J. Hartmann1 1Institute of Photonics and Quantum Sciences, Heriot-Watt University Edinburgh EH14 4AS, United Kingdom 2Department of Physics, ETH Zürich, CH-8093 Zürich, Switzerland 3Department of Physics and Astronomy, University College London, Gower Street, London WC1E 6BT, United Kingdom (Dated: March 20, 2017) Topological order is now being established as a central criterion for characterizing and classifying ground states of condensed matter systems and complements categorizations based on symmetries. Fractional quantum Hall systems and quantum spin liquids are receiving substantial interest because of their intriguing quantum correlations, their exotic excitations and prospects for protecting stored quantum information against errors. Here we show that the Hamiltonian of the central model of this class of systems, the Toric Code, can be directly implemented as an analog quantum simulator in lattices of superconducting circuits. The four-body interactions, which lie at its heart, are in our concept realized via Superconducting Quantum Interference Devices (SQUIDs) that are driven by a suitably oscillating flux bias. All physical qubits and coupling SQUIDs can be individually controlled with high precision. Topologically ordered states can be prepared via an adiabatic ramp of the stabilizer interactions. Strings of qubit operators, including the stabilizers and correlations along non-contractible loops, can be read out via a capacitive coupling to read-out resonators. Moreover, the available single qubit operations allow to create and propagate elementary excitations of the Toric Code and to verify their fractional statistics.

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk- COVID-19 detection on IBM quantum computer with classical-quantum transfer learning. Erdi ACAR1*, İhsan YILMAZ2 1Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey 2Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey Received: .201 Accepted/Published Online: .201 Final Version: ..201 Abstract: Diagnosing infected patients as soon as possible in the coronavirus 2019 (COVID-19) outbreak, which has been declared a pandemic by the World Health Organization (WHO), is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated on classical computers and, for the first time, on quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection on different real quantum processors of IBM (IBMQx2, IBMQ-London and IBMQ-Rome), as well as on different simulators (Pennylane, Qiskit-Aer and Cirq). Using a small data set of 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success on classical computers, while we achieved a high success rate of 94-100% on quantum computers.

Quantum Inductive Learning and Quantum Logic Synthesis

Portland State University PDXScholar. Dissertations and Theses, 2009. Quantum Inductive Learning and Quantum Logic Synthesis. Martin Lukac, Portland State University. Follow this and additional works at: https://pdxscholar.library.pdx.edu/open_access_etds Part of the Electrical and Computer Engineering Commons. Let us know how access to this document benefits you. Recommended Citation: Lukac, Martin, "Quantum Inductive Learning and Quantum Logic Synthesis" (2009). Dissertations and Theses. Paper 2319. https://doi.org/10.15760/etd.2316 This Dissertation is brought to you for free and open access. It has been accepted for inclusion in Dissertations and Theses by an authorized administrator of PDXScholar. For more information, please contact [email protected]. QUANTUM INDUCTIVE LEARNING AND QUANTUM LOGIC SYNTHESIS by MARTIN LUKAC. A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in ELECTRICAL AND COMPUTER ENGINEERING. Portland State University 2009. DISSERTATION APPROVAL: The abstract and dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering were presented January 9, 2009, and accepted by the dissertation committee and the doctoral program. COMMITTEE APPROVALS: Marek Perkowski, Chair; Garrison Greenwood; George Lendaris; [name illegible]; Steven Bleiler, Representative of the Office of Graduate Studies. DOCTORAL PROGRAM APPROVAL: Malgorzata Chrzanowska-Jeske, Director, Electrical and Computer Engineering Ph.D. Program. ABSTRACT: An abstract of the dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering presented January 9, 2009. Title: Quantum Inductive Learning and Quantum Logic Synthesis. Since Quantum Computer is almost realizable on large scale and Quantum Technology is one of the main solutions to the Moore Limit, Quantum Logic Synthesis (QLS) has become a required theory and tool for designing Quantum Logic Circuits.

Nearest Centroid Classification on a Trapped Ion Quantum Computer

www.nature.com/npjqi ARTICLE OPEN. Nearest centroid classification on a trapped ion quantum computer. Sonika Johri1, Shantanu Debnath1, Avinash Mocherla2,3,4, Alexandros SINGK2,3,5, Anupam Prakash2,3, Jungsang Kim1 and Iordanis Kerenidis2,3,6. Quantum machine learning has seen considerable theoretical and practical developments in recent years and has become a promising area for finding real world applications of quantum computers. In pursuit of this goal, here we combine state-of-the-art algorithms and quantum hardware to provide an experimental demonstration of a quantum machine learning application with provable guarantees for its performance and efficiency. In particular, we design a quantum Nearest Centroid classifier, using techniques for efficiently loading classical data into quantum states and performing distance estimations, and experimentally demonstrate it on an 11-qubit trapped-ion quantum machine, matching the accuracy of classical nearest centroid classifiers for the MNIST handwritten digits dataset and achieving up to 100% accuracy for 8-dimensional synthetic data. npj Quantum Information (2021) 7:122; https://doi.org/10.1038/s41534-021-00456-5 INTRODUCTION: Quantum technologies promise to revolutionize the future of information and communication, in the form of quantum computing devices able to communicate and process massive amounts of data both efficiently and securely using quantum resources. Tremendous progress is continuously being made both [...] Thus, one might hope that noisy quantum computers are inherently better suited for machine learning computations than for other types of problems that need precise computations like factoring or search problems. However, there are significant challenges to be overcome to make QML practical.

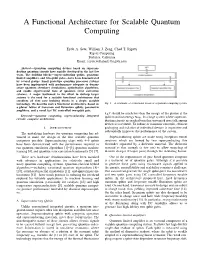

A Functional Architecture for Scalable Quantum Computing

A Functional Architecture for Scalable Quantum Computing. Eyob A. Sete, William J. Zeng, Chad T. Rigetti. Rigetti Computing, Berkeley, California. Email: feyob,will,[email protected] Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate. Keywords: quantum computing, superconducting integrated circuits, computer architecture. I. INTRODUCTION: The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to [...] [Fig. 1. A schematic of a functional layout of a quantum computing system.] [...] $k_B T$ should be much less than the energy of the photon at the qubit transition energy $\hbar\omega_{01}$. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system. Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.


Efficient Variational Simulation of Non-Trivial Quantum States (arXiv:1803.00026v4 [cond-mat.str-el], 8 Feb 2019)

SciPost Physics Submission. Efficient variational simulation of non-trivial quantum states. Wen Wei Ho1*, Timothy H. Hsieh2,3. 1 Department of Physics, Harvard University, Cambridge, Massachusetts 02138, USA 2 Kavli Institute for Theoretical Physics, University of California, Santa Barbara, California 93106, USA 3 Perimeter Institute for Theoretical Physics, Waterloo, Ontario N2L 2Y5, Canada *[email protected] February 12, 2019. Abstract: We provide an efficient and general route for preparing non-trivial quantum states that are not adiabatically connected to unentangled product states. Our approach is a hybrid quantum-classical variational protocol that incorporates a feedback loop between a quantum simulator and a classical computer, and is experimentally realizable on near-term quantum devices of synthetic quantum systems. We find explicit protocols which prepare with perfect fidelities (i) the Greenberger-Horne-Zeilinger (GHZ) state, (ii) a quantum critical state, and (iii) a topologically ordered state, with L variational parameters and physical runtimes T that scale linearly with the system size L. We furthermore conjecture and support numerically that our protocol can prepare, with perfect fidelity and similar operational costs, the ground state of every point in the one dimensional transverse field Ising model phase diagram. Besides being practically useful, our results also illustrate the utility of such variational ansätze as good descriptions of non-trivial states of matter. Contents: 1 Introduction; 2 Non-trivial quantum states

QIP 2010 Tutorial and Scientific Programmes

QIP 2010, 15th – 22nd January, Zürich, Switzerland. Tutorial and Scientific Programmes. Friday, 15th January. 10:00 – 17:10 Jiannis Pachos (Univ. Leeds): Why should anyone care about computing with anyons? This is a short course in topological quantum computation. The topics to be covered include: 1. Introduction to anyons and topological models. 2. Quantum Double Models. These are stabilizer codes, that can be described very much like quantum error correcting codes. They include the toric code and various extensions. 3. The Jones polynomials, their relation to anyons and their approximation by quantum algorithms. 4. Overview of current state of topological quantum computation and open questions. [...] asymptotically large number of channel uses. Such “regularized” formulas tell us very little. The purpose of this talk is to give an overview of what we know about this need for regularization, when and why it happens, and what it means. I will focus on the quantum capacity of a quantum channel, which is the case we understand best. 15:00 – 16:55 Daniel Nagaj (Slovak Academy of Sciences): Local Hamiltonians in quantum computation. This talk is about two Hamiltonian Complexity questions. First, how hard is it to compute the ground state properties of quantum systems with local Hamiltonians? Second, which spin systems with time-independent (and perhaps, translationally-invariant) local interactions could be used for universal computation? Aiming at a participant without previous understanding of complexity theory, we will discuss two locally-constrained quantum problems: k-local Hamiltonian and quantum k-SAT. Learning the techniques of Kitaev and others along the way, our first goal is the understanding of QMA-completeness of these problems.

Experimental Kernel-Based Quantum Machine Learning in Finite Feature

www.nature.com/scientificreports OPEN. Experimental kernel-based quantum machine learning in finite feature space. Karol Bartkiewicz1,2*, Clemens Gneiting3, Antonín Černoch2*, Kateřina Jiráková2, Karel Lemr2* & Franco Nori3,4. We implement an all-optical setup demonstrating kernel-based quantum machine learning for two-dimensional classification problems. In this hybrid approach, kernel evaluations are outsourced to projective measurements on suitably designed quantum states encoding the training data, while the model training is processed on a classical computer. Our two-photon proposal encodes data points in a discrete, eight-dimensional feature Hilbert space. In order to maximize the application range of the deployable kernels, we optimize feature maps towards the resulting kernels’ ability to separate points, i.e., their “resolution,” under the constraint of finite, fixed Hilbert space dimension. Implementing these kernels, our setup delivers viable decision boundaries for standard nonlinear supervised classification tasks in feature space. We demonstrate such kernel-based quantum machine learning using specialized multiphoton quantum optical circuits. The deployed kernel exhibits exponentially better scaling in the required number of qubits than a direct generalization of kernels described in the literature. Many contemporary computational problems (like drug design, traffic control, logistics, automatic driving, stock market analysis, automatic medical examination, material engineering, and others) routinely require optimization over huge amounts of data [1]. While these highly demanding problems can often be approached by suitable machine learning (ML) algorithms, in many relevant cases the underlying calculations would last prohibitively long. Quantum ML (QML) comes with the promise to run these computations more efficiently (in some cases exponentially faster) by complementing ML algorithms with quantum resources.

High Energy Physics Quantum Computing

High Energy Physics Quantum Computing
Quantum Information Science in High Energy Physics at the Large Hadron Collider. PI: O.K. Baker, Yale University.
Unraveling the quantum structure of QCD in parton shower Monte Carlo generators. PI: Christian Bauer, Lawrence Berkeley National Laboratory. Co-PIs: Wibe de Jong and Ben Nachman (LBNL).
The HEP.QPR Project: Quantum Pattern Recognition for Charged Particle Tracking. PI: Heather Gray, Lawrence Berkeley National Laboratory. Co-PIs: Wahid Bhimji, Paolo Calafiura, Steve Farrell, Wim Lavrijsen, Lucy Linder, Illya Shapoval (LBNL).
Neutrino-Nucleus Scattering on a Quantum Computer. PI: Rajan Gupta, Los Alamos National Laboratory. Co-PIs: Joseph Carlson (LANL); Alessandro Roggero (UW), Gabriel Perdue (FNAL).
Particle Track Pattern Recognition via Content-Addressable Memory and Adiabatic Quantum Optimization. PI: Lauren Ice, Johns Hopkins University. Co-PIs: Gregory Quiroz (Johns Hopkins); Travis Humble (Oak Ridge National Laboratory).
Towards practical quantum simulation for High Energy Physics. PI: Peter Love, Tufts University. Co-PIs: Gary Goldstein, Hugo Beauchemin (Tufts).
High Energy Physics (HEP) ML and Optimization Go Quantum. PI: Gabriel Perdue, Fermilab. Co-PIs: Jim Kowalkowski, Stephen Mrenna, Brian Nord, Aris Tsaris (Fermilab); Travis Humble, Alex McCaskey (Oak Ridge National Lab).
Quantum Machine Learning and Quantum Computation Frameworks for HEP (QMLQCF). PI: M. Spiropulu, California Institute of Technology. Co-PIs: Panagiotis Spentzouris (Fermilab), Daniel Lidar (USC), Seth Lloyd (MIT).
Quantum Algorithms for Collider Physics. PI: Jesse Thaler, Massachusetts Institute of Technology. Co-PI: Aram Harrow, Massachusetts Institute of Technology.
Quantum Machine Learning for Lattice QCD. PI: Boram Yoon, Los Alamos National Laboratory. Co-PIs: Nga T. T. Nguyen, Garrett Kenyon, Tanmoy Bhattacharya and Rajan Gupta (LANL).
Quantum Information Science in High Energy Physics at the Large Hadron Collider O.K.