Chapter 4: Vectors, Matrices, and Linear Algebra

Recommended publications

-

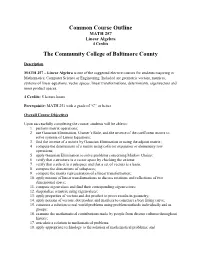

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description: MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. -

Introduction to Linear Bialgebra

University of New Mexico, UNM Digital Repository. Mathematics and Statistics Faculty and Staff Publications, Academic Department Resources, 2005.

INTRODUCTION TO LINEAR BIALGEBRA

Florentin Smarandache, University of New Mexico, [email protected]; W.B. Vasantha Kandasamy; K. Ilanthenral

Follow this and additional works at: https://digitalrepository.unm.edu/math_fsp

Part of the Algebra Commons, Analysis Commons, Discrete Mathematics and Combinatorics Commons, and the Other Mathematics Commons

Recommended Citation: Smarandache, Florentin; W.B. Vasantha Kandasamy; and K. Ilanthenral. "INTRODUCTION TO LINEAR BIALGEBRA." (2005). https://digitalrepository.unm.edu/math_fsp/232

This Book is brought to you for free and open access by the Academic Department Resources at UNM Digital Repository. It has been accepted for inclusion in Mathematics and Statistics Faculty and Staff Publications by an authorized administrator of UNM Digital Repository. For more information, please contact [email protected], [email protected], [email protected].

W. B. Vasantha Kandasamy, Department of Mathematics, Indian Institute of Technology, Madras, Chennai – 600036, India. e-mail: [email protected], web: http://mat.iitm.ac.in/~wbv

Florentin Smarandache, Department of Mathematics, University of New Mexico, Gallup, NM 87301, USA. e-mail: [email protected]

K. Ilanthenral, Editor, Maths Tiger, Quarterly Journal, Flat No.11, Mayura Park, 16, Kazhikundram Main Road, Tharamani, Chennai – 600 113, India. e-mail: [email protected]

HEXIS, Phoenix, Arizona, 2005

This book can be ordered in a paper bound reprint from: Books on Demand, ProQuest Information & Learning (University of Microfilm International), 300 N. -

A New Description of Space and Time Using Clifford Multivectors

James M. Chappell†, Nicolangelo Iannella†, Azhar Iqbal†, Mark Chappell‡, Derek Abbott†

†School of Electrical and Electronic Engineering, University of Adelaide, South Australia 5005, Australia
‡Griffith Institute, Griffith University, Queensland 4122, Australia

arXiv:1205.5195v2 [math-ph] 11 Oct 2012

Abstract: Following the development of the special theory of relativity in 1905, Minkowski proposed a unified space and time structure consisting of three space dimensions and one time dimension, with relativistic effects then being natural consequences of this spacetime geometry. In this paper, we illustrate how Clifford’s geometric algebra that utilizes multivectors to represent spacetime, provides an elegant mathematical framework for the study of relativistic phenomena. We show, with several examples, how the application of geometric algebra leads to the correct relativistic description of the physical phenomena being considered. This approach not only provides a compact mathematical representation to tackle such phenomena, but also suggests some novel insights into the nature of time.

Keywords: Geometric algebra, Clifford space, Spacetime, Multivectors, Algebraic framework

1. Introduction

The physical world, based on early investigations, was deemed to possess three independent freedoms of translation, referred to as the three dimensions of space. This naive conclusion is also supported by more sophisticated analysis such as the existence of only five regular polyhedra and the inverse square force laws. If we lived in a world with four spatial dimensions, for example, we would be able to construct six regular solids, and in five dimensions and above we would find only three [1]. -

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

- Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.
- The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else. -
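The inner and outer products of the standard basis vectors can be checked numerically. The following is a minimal sketch in plain Python, with vectors stored as lists; the helper names `standard_basis`, `dot`, and `outer` are illustrative and do not come from the excerpt.

```python
def standard_basis(n):
    """Return the standard basis vectors e_1, ..., e_n of R^n as lists."""
    return [[1 if row == i else 0 for row in range(n)] for i in range(n)]


def dot(v, w):
    """Inner product v^T w of two vectors of equal length."""
    return sum(a * b for a, b in zip(v, w))


def outer(v, w):
    """Outer product v w^T, returned as a list of rows."""
    return [[a * b for b in w] for a in v]


basis = standard_basis(3)

# The (i, j) entry is e_i^T e_j, which should equal the Kronecker delta.
gram = [[dot(ei, ej) for ej in basis] for ei in basis]

# Pi_i = e_i e_i^T has a single 1 in the ith diagonal position.
projections = [outer(e, e) for e in basis]

print(gram)
print(projections[0])
```

Here `gram` reproduces the identity matrix entry by entry, and each element of `projections` is one of the matrices $\Pi_i$ for $n = 3$.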

Determinant Notes

UNIT FIVE: DETERMINANTS

5.1 INTRODUCTION

In unit one the determinant of a 2 × 2 matrix was introduced and used in the evaluation of a cross product. In this chapter we extend the definition of a determinant to any size square matrix. The determinant has a variety of applications. The value of the determinant of a square matrix $A$ can be used to determine whether $A$ is invertible or noninvertible. An explicit formula for $A^{-1}$ exists that involves the determinant of $A$. Some systems of linear equations have solutions that can be expressed in terms of determinants.

5.2 DEFINITION OF THE DETERMINANT

Recall that in chapter one the determinant of the 2 × 2 matrix

$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$$

was defined to be the number $a_{11}a_{22} - a_{12}a_{21}$ and that the notation $\det(A)$ or $|A|$ was used to represent the determinant of $A$. For any given $n \times n$ matrix $A = [a_{ij}]_{n \times n}$, the notation $A_{ij}$ will be used to denote the $(n-1) \times (n-1)$ submatrix obtained from $A$ by deleting the $i$th row and the $j$th column of $A$. The determinant of any size square matrix $A = [a_{ij}]_{n \times n}$ is defined recursively as follows.

Definition of the Determinant. Let $A = [a_{ij}]_{n \times n}$ be an $n \times n$ matrix.

(1) If $n = 1$, that is $A = [a_{11}]$, then we define $\det(A) = a_{11}$.

(2) If $n > 1$, we define

$$\det(A) = \sum_{k=1}^{n} (-1)^{1+k} a_{1k} \det(A_{1k}).$$

Example. If $A = [5]$, then by part (1) of the definition of the determinant, $\det(A) = 5$. -
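The recursive definition translates almost directly into code. The sketch below is one possible Python rendering, assuming a matrix stored as a list of rows with 0-based indices; the function names `minor` and `det` are illustrative and are not taken from the notes.

```python
def minor(matrix, i, j):
    """Submatrix A_ij: delete row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(matrix) if r != i]


def det(matrix):
    """Determinant by cofactor expansion along the first row."""
    n = len(matrix)
    if n == 1:
        # Part (1) of the definition: det([a11]) = a11.
        return matrix[0][0]
    # Part (2): with 0-based k, the sign (-1)**k matches (-1)**(1 + column)
    # for the 1-based column number k + 1.
    return sum(
        (-1) ** k * matrix[0][k] * det(minor(matrix, 0, k)) for k in range(n)
    )


print(det([[5]]))
print(det([[1, 2], [3, 4]]))
print(det([[2, 0, 1], [1, 3, 2], [1, 1, 4]]))
```

This mirrors the definition rather than aiming for efficiency: the number of recursive calls grows factorially with $n$, so elimination-based methods are usually preferred for anything beyond small matrices.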

Vectors, Matrices and Coordinate Transformations

S. Widnall, 16.07 Dynamics, Fall 2009. Lecture notes based on J. Peraire, Version 2.0.

Lecture L3 - Vectors, Matrices and Coordinate Transformations

By using vectors and defining appropriate operations between them, physical laws can often be written in a simple form. Since we will be making extensive use of vectors in Dynamics, we will summarize some of their important properties.

Vectors

For our purposes we will think of a vector as a mathematical representation of a physical entity which has both magnitude and direction in a 3D space. Examples of physical vectors are forces, moments, and velocities. Geometrically, a vector can be represented as an arrow. The length of the arrow represents its magnitude. Unless indicated otherwise, we shall assume that parallel translation does not change a vector, and we shall call the vectors satisfying this property free vectors. Thus, two vectors are equal if and only if they are parallel, point in the same direction, and have equal length. Vectors are usually typed in boldface and scalar quantities appear in lightface italic type, e.g. the vector quantity $\mathbf{A}$ has magnitude, or modulus, $A = |\mathbf{A}|$. In handwritten text, vectors are often expressed using the arrow or underbar notation, e.g. $\vec{A}$, $\underline{A}$.

Vector Algebra

Here, we introduce a few useful operations which are defined for free vectors.

Multiplication by a scalar

If we multiply a vector $\mathbf{A}$ by a scalar $\alpha$, the result is a vector $\mathbf{B} = \alpha\mathbf{A}$, which has magnitude $B = |\alpha| A$. The vector $\mathbf{B}$ is parallel to $\mathbf{A}$ and points in the same direction if $\alpha > 0$. -
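The magnitude relation $B = |\alpha| A$ is easy to check on a concrete vector. This is a small sketch in plain Python; the function names `scale` and `magnitude` and the sample values are assumptions made for illustration only.

```python
import math


def scale(alpha, vector):
    """Multiply every component of the vector by the scalar alpha."""
    return [alpha * component for component in vector]


def magnitude(vector):
    """Euclidean length of a vector given by its Cartesian components."""
    return math.sqrt(sum(component * component for component in vector))


A = [3.0, 4.0, 0.0]
B = scale(-2.0, A)

# |B| should equal |alpha| * |A| regardless of the sign of alpha.
print(magnitude(A), magnitude(B))
```

With a negative scalar, as in this example, `B` is still parallel to `A` and its length is scaled by $|\alpha|$, but it points in the opposite direction.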

Multivector Differentiation and Linear Algebra, 17th Santaló

17th Santaló Summer School 2016, Santander

Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge. [email protected], www-sigproc.eng.cam.ac.uk/~jl

23 August 2016

Overview

- The Multivector Derivative.
- Examples of differentiation wrt multivectors.
- Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra.
- Functional Differentiation: very briefly...
- Summary

The Multivector Derivative

Recall our definition of the directional derivative in the $a$ direction:

$$a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$$

We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ ‘direction’, where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$). Let us use $*$ to denote taking the scalar part, i.e. $P * Q \equiv \langle PQ \rangle$. Then, provided $A$ has the same grades as $X$, it makes sense to define:

$$A * \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$$

Linear Algebra for Dummies

Jorge A. Menendez, October 6, 2017

Contents

1. Matrices and Vectors
2. Matrix Multiplication
3. Matrix Inverse, Pseudo-inverse
4. Outer products
5. Inner Products
6. Example: Linear Regression
7. Eigenstuff
8. Example: Covariance Matrices
9. Example: PCA
10. Useful resources

1 Matrices and Vectors

An $m \times n$ matrix is simply an array of numbers:

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

where we define the indexing $A_{ij} = a_{ij}$ to designate the component in the $i$th row and $j$th column of $A$. The transpose of a matrix is obtained by flipping the rows with the columns:

$$A^T = \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1} \\ a_{12} & a_{22} & \cdots & a_{m2} \\ \vdots & & & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$

which evidently is now an $n \times m$ matrix, with components $A^T_{ij} = A_{ji} = a_{ji}$. In other words, the transpose is obtained by simply flipping the row and column indices.

One particularly important matrix is called the identity matrix, which is composed of 1’s on the diagonal and 0’s everywhere else:

$$I = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix}$$

It is called the identity matrix because the product of any matrix with the identity matrix is identical to itself:

$$AI = A$$

In other words, $I$ is the equivalent of the number 1 for matrices.

For our purposes, a vector can simply be thought of as a matrix with one column, whose entries are $a_1, a_2, \ldots$ -
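The transpose and identity properties above can be illustrated with a small example. This is a sketch in plain Python using lists of rows; the helper names `transpose`, `identity`, and `matmul` are assumptions for illustration and are not part of the notes.

```python
def transpose(A):
    """Flip rows and columns: entry (i, j) of the result is A[j][i]."""
    return [list(column) for column in zip(*A)]


def identity(n):
    """n x n matrix with 1's on the diagonal and 0's everywhere else."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    """Matrix product of A (m x n) and B (n x p), both given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


A = [[1, 2, 3],
     [4, 5, 6]]  # a 2 x 3 matrix

print(transpose(A))               # a 3 x 2 matrix
print(matmul(A, identity(3)) == A)
```

Note that the identity matrix must have the matching size: multiplying the 2 × 3 matrix on the right requires the 3 × 3 identity, while multiplying on the left requires the 2 × 2 identity.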

Linear Independence, Span, and Basis of a Set of Vectors What Is Linear Independence?

Lecture notes · Purdue University MA 26500, Kyle Kloster, Linear Algebra, October 22, 2014

Linear Independence, Span, and Basis of a Set of Vectors

What is linear independence?

A set of vectors $S = \{v_1, \cdots, v_k\}$ is linearly independent if none of the vectors $v_i$ can be written as a linear combination of the other vectors.

Suppose the vector $v_j$ can be written as a linear combination of the other vectors, i.e. there exist scalars $\alpha_i$ such that $v_j = \alpha_1 v_1 + \cdots + \alpha_k v_k$ holds, where the sum on the right omits the $v_j$ term. (This is equivalent to saying that the vectors $v_1, \cdots, v_k$ are linearly dependent.) We can subtract $v_j$ to move it over to the other side to get an expression $0 = \alpha_1 v_1 + \cdots + \alpha_k v_k$ (where the term $v_j$ now appears on the right hand side, with coefficient $\alpha_j = -1$). In other words, the condition that "the set of vectors $S = \{v_1, \cdots, v_k\}$ is linearly dependent" is equivalent to the condition that there exist $\alpha_i$, not all of which are zero, such that

$$0 = \begin{bmatrix} v_1 & v_2 & \cdots & v_k \end{bmatrix} \begin{bmatrix} \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_k \end{bmatrix}.$$

More concisely, form the matrix $V$ whose columns are the vectors $v_i$. Then the set $S$ of vectors $v_i$ is a linearly dependent set if there is a nonzero solution $x$ such that $Vx = 0$. This means that the condition that "the set of vectors $S = \{v_1, \cdots, v_k\}$ is linearly independent" is equivalent to the condition that "the only solution $x$ to the equation $Vx = 0$ is the zero vector, i.e. $x = 0$."

How do you determine if a set is lin. -
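One way to test whether $Vx = 0$ has only the zero solution is to compare the rank of the matrix with the number of vectors. The sketch below is an assumption about how this could be done, not the method the notes go on to describe: it uses Gaussian elimination over exact fractions, stores the vectors as rows (which has the same rank as storing them as columns), and uses the illustrative names `rank` and `is_linearly_independent`.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (list of rows) by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_cols = len(m[0]) if m else 0
    rank_count = 0
    for col in range(n_cols):
        # Look for a row at or below rank_count with a nonzero entry in this column.
        pivot = next((r for r in range(rank_count, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank_count], m[pivot] = m[pivot], m[rank_count]
        # Eliminate the entries below the pivot.
        for r in range(rank_count + 1, len(m)):
            factor = m[r][col] / m[rank_count][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[rank_count])]
        rank_count += 1
    return rank_count


def is_linearly_independent(vectors):
    """True when the rank equals the number of vectors, i.e. V x = 0 forces x = 0."""
    return rank(vectors) == len(vectors)


print(is_linearly_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))
print(is_linearly_independent([[1, 0, 0], [0, 1, 0], [1, 1, 0]]))
```

In the second call the third vector is the sum of the first two, so a nonzero solution of $Vx = 0$ exists and the set is reported as dependent. Exact fractions are used to avoid the rounding issues a floating-point pivot test would introduce.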

Vector Analysis

DOING PHYSICS WITH MATLAB: VECTOR ANALYSIS

Ian Cooper, School of Physics, University of Sydney, [email protected]

DOWNLOAD DIRECTORY FOR MATLAB SCRIPTS

- cemVectorsA.m. Inputs: Cartesian components of the vector V. Outputs: cylindrical and spherical components and [3D] plot of vector.
- cemVectorsB.m. Inputs: Cartesian components of the vectors A B C. Outputs: dot products, cross products and triple products.
- cemVectorsC.m. Rotation of XY axes around Z axis to give new frame of reference X’Y’Z’. Inputs: rotation angle and vector (Cartesian components) in XYZ frame. Outputs: Cartesian components of vector in X’Y’Z’ frame. The mscript can be modified to calculate the rotation matrix for a [3D] rotation and give the Cartesian components of the vector in the X’Y’Z’ frame of reference.

SPECIFYING a [3D] VECTOR

A scalar is completely characterised by its magnitude, and has no associated direction (mass, time, direction, work). A scalar is given by a simple number. A vector has both a magnitude and direction (force, electric field, magnetic field). A vector can be specified in terms of its Cartesian or cylindrical (polar in [2D]) or spherical coordinates.

Cartesian coordinate system (XYZ right-handed rectangular: if we curl our fingers on the right hand so they rotate from the X axis to the Y axis then the Z axis is in the direction of the thumb). A vector V is specified in terms of its X, Y and Z Cartesian components

$$\mathbf{V} = (V_x, V_y, V_z) = V_x \hat{i} + V_y \hat{j} + V_z \hat{k}$$

where $\hat{i}$, $\hat{j}$, $\hat{k}$ are unit vectors parallel to the X, Y and Z axes respectively. -
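The conversion from Cartesian components to cylindrical and spherical components can be sketched as follows. This is a Python illustration rather than the cemVectorsA.m script itself, and it assumes a particular convention that the excerpt does not state: cylindrical components are $(\rho, \phi, z)$ and spherical components are $(r, \theta, \phi)$, with $\theta$ the polar angle measured from the Z axis and $\phi$ the azimuthal angle measured from the X axis. The function names are hypothetical.

```python
import math


def to_cylindrical(x, y, z):
    """Return (rho, phi, z), with phi measured from the X axis in the XY plane."""
    return math.hypot(x, y), math.atan2(y, x), z


def to_spherical(x, y, z):
    """Return (r, theta, phi): theta is the polar angle from the Z axis,
    phi the azimuthal angle from the X axis (assumed convention)."""
    r = math.sqrt(x * x + y * y + z * z)
    theta = math.acos(z / r) if r > 0 else 0.0
    return r, theta, math.atan2(y, x)


print(to_cylindrical(1.0, 1.0, 2.0))
print(to_spherical(0.0, 0.0, 3.0))
```

Using `atan2` rather than `atan(y / x)` keeps the azimuthal angle in the correct quadrant and avoids a division by zero when the vector lies along the Y axis.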

Determinants Math 122 Calculus III D Joyce, Fall 2012

Determinants. Math 122 Calculus III, D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general 2 × 2 matrix and a general 3 × 3 matrix.

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi.$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$. You can think of the rows of the determinant as being vectors. For the 3 × 3 matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$.

There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the 2 × 2 matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a 3 × 3 matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a 4 × 4 matrix is the signed sum of 24, which is 4!, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations. -
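The signed sum over all ways of choosing one entry per row and column can be written out directly. The following Python sketch assumes the standard sign rule, in which a permutation contributes +1 if it has an even number of inversions and -1 otherwise; the excerpt only says that the signs are determined by permutations, so this rule and the function names are supplied here for illustration.

```python
from itertools import permutations


def sign(perm):
    """+1 for an even permutation, -1 for an odd one, counted by inversions."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det_by_permutations(matrix):
    """Signed sum of n! products, each taking one entry from every row and column."""
    n = len(matrix)
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row in range(n):
            # perm[row] is the column chosen for this row.
            product *= matrix[row][perm[row]]
        total += sign(perm) * product
    return total


print(det_by_permutations([[1, 2], [3, 4]]))
print(det_by_permutations([[2, 0, 1], [1, 3, 2], [1, 1, 4]]))
```

For a 2 × 2 matrix the loop visits the two products $ad$ and $bc$, and for a 3 × 3 matrix it visits the six terms of the expanded formula above. The factorial growth in the number of terms makes this approach practical only for small matrices.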

Discover Linear Algebra Incomplete Preliminary Draft

Discover Linear Algebra. Incomplete Preliminary Draft. Date: November 28, 2017

László Babai in collaboration with Noah Halford

All rights reserved. Approved for instructional use only. Commercial distribution prohibited. © 2016 László Babai. Last updated: November 10, 2016

Preface

TO BE WRITTEN. This chapter last updated August 21, 2016.

Contents

- Notation
- I Matrix Theory
  - Introduction to Part I
  - 1 (F, R) Column Vectors
    - 1.1 (F) Column vector basics
      - 1.1.1 The domain of scalars
    - 1.2 (F) Subspaces and span
    - 1.3 (F) Linear independence and the First Miracle of Linear Algebra
    - 1.4 (F) Dot product
    - 1.5 (R) Dot product over R
    - 1.6 (F) Additional exercises
  - 2 (F) Matrices
    - 2.1 Matrix basics
    - 2.2 Matrix multiplication
    - 2.3 Arithmetic of diagonal and triangular matrices
    - 2.4 Permutation Matrices
    - 2.5 Additional exercises
  - 3 (F) Matrix Rank
    - 3.1 Column and row rank
    - 3.2 Elementary operations and Gaussian elimination
    - 3.3 Invariance of column and row rank, the Second Miracle of Linear Algebra
    - 3.4 Matrix rank and invertibility
    - 3.5 Codimension (optional)
    - 3.6 Additional exercises
  - 4 (F) Theory of Systems of Linear Equations I: Qualitative Theory
    - 4.1 Homogeneous systems of linear equations
    - 4.2 General systems of linear equations
  - 5 (F, R) Affine and Convex Combinations (optional)
    - 5.1 (F) Affine combinations