Programming on Unix.Pdf



Recommended publications


The Different Unix Contexts

The different Unix contexts
• User-level
• Kernel “top half”
  - System call, page fault handler, kernel-only process, etc.
• Software interrupt
• Device interrupt
• Timer interrupt (hardclock)
• Context switch code

Transitions between contexts
• User → top half: syscall, page fault
• User/top half → device/timer interrupt: hardware
• Top half → user/context switch: return
• Top half → context switch: sleep
• Context switch → user/top half

Top/bottom half synchronization
• Top half kernel procedures can mask interrupts: int x = splhigh (); /* ... */ splx (x);
• splhigh disables all interrupts, but there are also splnet, splbio, splsoftnet, ...
• Masking interrupts in hardware can be expensive
  - Optimistic implementation: set mask flag on splhigh, check interrupted flag on splx

Kernel Synchronization
• Need to relinquish CPU when waiting for events
  - Disk read, network packet arrival, pipe write, signal, etc.
• int tsleep(void *ident, int priority, ...);
  - Switches to another process
  - ident is an arbitrary pointer, e.g., a buffer address
  - priority is the priority at which to run when woken up
  - PCATCH, if ORed into priority, means wake up on signal
  - Returns 0 if awakened, or ERESTART/EINTR on signal
• int wakeup(void *ident);
  - Awakens all processes sleeping on ident
  - Restores SPL to what it was at the time they went to sleep (so fine to sleep at splhigh)

Process scheduling
• Goal: High throughput
  - Minimize context switches to avoid wasting CPU, TLB misses, cache misses, even page faults.
• Goal: Low latency
  - People typing at editors want fast response
  - Network services can be latency-bound, not CPU-bound
• BSD time quantum: 1/10 sec (since ∼1980)
  - Empirically longest tolerable latency
  - Computers now faster, but job queues also shorter

Scheduling algorithms
• Round-robin
• Priority scheduling
• Shortest process next (if you can estimate it)
• Fair-Share Schedule (try to be fair at level of users, not processes)

Multilevel feedback queues (BSD)
• Every runnable proc.
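The round-robin policy listed under "Scheduling algorithms" can be illustrated with a small simulation. This is a minimal sketch, not the BSD scheduler: the function name, job names, and CPU demands are hypothetical, and the only figure taken from the slides is the 1/10 sec (100 ms) time quantum.

```python
from collections import deque


def round_robin(jobs, quantum_ms=100):
    """Simulate round-robin scheduling.

    jobs maps a (hypothetical) job name to the CPU time it needs, in ms.
    Returns (finish_times, slices): the clock value at which each job
    completes, and the number of time slices handed out.
    """
    queue = deque(jobs.items())
    clock = 0
    slices = 0
    finish_times = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum_ms, remaining)  # a job never runs past its quantum
        clock += run
        slices += 1
        if remaining > run:
            # Quantum expired: go to the back of the run queue.
            queue.append((name, remaining - run))
        else:
            finish_times[name] = clock
    return finish_times, slices


# Made-up workload: a short interactive job and two longer ones.
finish_times, slices = round_robin({"editor": 50, "compile": 250, "mail": 100})
print(finish_times, slices)
```

With these made-up inputs the short editor job finishes first, while the long compile job needs three time slices. A shorter quantum would lower latency at the cost of more context switches, which is the throughput/latency trade-off the slides describe.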

Backup and Restore Cisco Prime Collaboration Provisioning

Backup and Restore Cisco Prime Collaboration Provisioning
This section explains the following:
• Perform Backup and Restore
• Back Up the Single-Machine Provisioning Database
• Restore the Single-Machine Provisioning Database
• Schedule Backup Using the Provisioning User Interface

Perform Backup and Restore
Cisco Prime Collaboration Provisioning allows you to back up your data and restore it. You can schedule periodic backups using the Provisioning UI (see Schedule Backup Using the Provisioning User Interface).
There are two backup and restore scenarios; select the set of procedures that matches your scenario:
• Backup and restore on a single machine, with the same installation or a new installation. For this scenario, see Schedule Backup Using the Provisioning User Interface.
Note: When backing up files, you should place the files on a different file server. Also, you should burn the backup data onto a CD.
Cisco Prime Collaboration Provisioning allows you to back up system data and restore it on a different system in the event of total system failure. To restore the backup from another system, the following prerequisites must be met:
• Ensure that the server to which data is restored has the same MAC address as that of the system that was backed up (the IP address and the hostname can be different).
• If you are unable to assign the MAC address of the original system (the one that was backed up) to another system, contact the Engineering Team for information on a new license file (for a new MAC address).
• The procedure to back up and restore data on a different system is the same as the procedure to back up and restore data on the same system.
Cisco Prime Collaboration Provisioning Install and Upgrade Guide, 12.3

FIFI-LS Highlights Robert Minchin FIFI-LS Highlights

FIFI-LS Highlights
Robert Minchin

FIFI-LS Highlights
• Nice face-on galaxies where [CII] traces star formation

[CII] traces star formation in M51
Pineda et al. 2018

[CII] in NGC 6946
Bigiel et al. 2020
[CII] particularly important as H2 & SFR tracer in inter-arm regions

FIFI-LS Highlights
• Nice face-on galaxies where [CII] traces star formation
• Galaxies where [CII] doesn’t trace star formation

[CII] from shocks & turbulence in NGC 4258
• [CII] seen along X-ray/radio ‘arms’ of NGC 4258, associated with past or present jet activity
• [CII] excited by shocks and turbulence associated with the jet impacting the disk
• NOT star formation
Appleton et al. 2018

Excess [CII] in HE 1353-1917
• HE 1353-1917 has a large excess in L[CII]/LFIR
• AGN ionization cone intercepts the cold galactic disk
[Figure: L[CII]/LFIR plot with 1σ, 2σ and 3σ excess levels; legend: SOFIA AGN hosts, high-z AGN hosts, LINERs, normal galaxies, LIRGs, ULIRGs, with literature samples Brisbin+15 and Decarli+18; labelled objects: HE 1353-1917, HE 0433-1028, 3C 326, HE 1029-1831, HE 1108-2813, HE 2211-3903. Inset: MUSE RGB images (i band, [OIII], Hα).]
Smirnova-Pinchukova et al. 2019

Shock-excited [CII] in NGC 2445
[CII] in the ring of NGC 2445 is enhanced compared to PAHs, showing a shock excitement origin rather than star formation
Fadda & Appleton, 2020, AAS Meeting 235

FIFI-LS Highlights
• Nice face-on galaxies where [CII] traces star formation
• Galaxies where [CII] doesn’t trace star formation
• Galaxies with gas away from the plane

Extraplanar [CII] in edge-on galaxies
• Edge-on galaxies NGC 891 & NGC 5907 observed by Reach et al.

Sleep 2.1 Manual

Sleep 2.1 Manual
"If you put a million monkeys at a million keyboards, one of them will eventually write a Java program. The rest of them will write Perl programs." -- Anonymous
Raphael Mudge
Sleep 2.1 Manual
Revision: 06.02.08
Released under a Creative Commons Attribution-ShareAlike 3.0 License (see http://creativecommons.org/licenses/by-sa/3.0/us/)
You are free:
• to Share -- to copy, distribute, display, and perform the work
• to Remix -- to make derivative works
Under the following conditions:
Attribution. You must attribute this work to Raphael Mudge with a link to http://sleep.dashnine.org/
Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under the same, similar or a compatible license.
• For any reuse or distribution, you must make clear to others the license terms of this work. The best way to do this is with a link to the license.
• Any of the above conditions can be waived if you get permission from the copyright holder.
• Apart from the remix rights granted under this license, nothing in this license impairs or restricts the author's moral rights.
Your fair use and other rights are in no way affected by the above.

Table of Contents
Introduction
I. What is Sleep?
II. Manual Conventions
III.

Parallel Processing Here at the School of Statistics

Parallel Processing here at the School of Statistics
Charles J. Geyer
School of Statistics, University of Minnesota
http://www.stat.umn.edu/~charlie/parallel/

• batch processing
• R package multicore
• R package rlecuyer
• R package snow
• grid engine (CLA)
• clusters (MSI)

Batch Processing
This is really old stuff (from 1975). But not everyone knows it. If you do the following at a unix prompt
    nohup nice -n 19 some job &
where “some job” is replaced by an actual job, then
• the job will run in background (because of &).
• the job will not be killed when you log out (because of nohup).
• the job will have low priority (because of nice -n 19).

Batch Processing (cont.)
For example, if foo.R is a plain text file containing R commands, then
    nohup nice -n 19 R CMD BATCH --vanilla foo.R &
executes the commands and puts the printout in the file foo.Rout. And
    nohup nice -n 19 R CMD BATCH --no-restore foo.R &
executes the commands, puts the printout in the file foo.Rout, and saves all created R objects in the file .RData.

Batch Processing (cont.)
    nohup nice -n 19 R CMD BATCH foo.R &
is a really bad idea! It reads in all the objects in the file .RData (if one is present) at the beginning. So you have no idea whether the results are reproducible. Always use --vanilla or --no-restore except when debugging.

Batch Processing (cont.)
This idiom has nothing to do with R. If foo is a compiled C or C++ or Fortran main program that doesn't have command line arguments (or a shell, Perl, Python, or Ruby script), then
    nohup nice -n 19 foo &
runs it.
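The batch idiom above is just a fixed prefix (nohup, nice -n 19) placed in front of an arbitrary job. As a minimal sketch, the helper below builds that command line from Python; the function name batch_command is hypothetical and not part of the slides, and the sketch only constructs the command rather than launching it.

```python
import shlex


def batch_command(job, niceness=19):
    """Return the argument list for running `job` under nohup and nice.

    job is the command to run, as a list of words.
    niceness follows `nice -n`: 19 is the lowest priority.
    (Hypothetical helper for illustration only.)
    """
    if not 0 <= niceness <= 19:
        raise ValueError("niceness must be between 0 and 19")
    return ["nohup", "nice", "-n", str(niceness), *job]


argv = batch_command(["R", "CMD", "BATCH", "--vanilla", "foo.R"])
# The trailing & is shell syntax for "run in background", so it is
# appended only when printing the line for a shell.
print(shlex.join(argv) + " &")
```

The printed line can be pasted at a Unix prompt. Keeping --vanilla in the job follows the advice in the slides about reproducibility.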

Unix Quickref.Dvi

Summary of UNIX commands
1994,1995,1996 Budi Rahardjo ([email protected])

This is a summary of UNIX commands available on most UNIX systems. Depending on the configuration, some of the commands may be unavailable on your site. These commands may be a commercial program, freeware or public domain program that must be installed separately, or probably just not in your search path. Check your local documentation or manual pages for more details (e.g. man programname). This reference card, obviously, cannot describe all UNIX commands in detail, but instead I picked commands that are useful and interesting from a user's point of view.

Disclaimer
The author makes no warranty of any kind, expressed or implied, including the warranties of merchantability or fitness for a particular purpose, with regard to the use of commands contained in this reference card.

Table of Contents
1. Directory and file commands
2. Print-related commands
3. Miscellaneous commands
4. Process management
5. File archive and compression
6. Text editors
7. Mail programs
8. Usenet news
9. File transfer and remote access
10. X window
11. Graph, Plot, Image processing tools

df [dirname]
    display free disk space. If dirname is omitted, display all available disks. The output may be in blocks or in Kbytes. Use df -k in Solaris.
du [dirname]
    display disk usage.
less filename
    display filename one screenful at a time. A pager similar to (better than) more.
ls [dirname]
    list the content of directory dirname. Options: -a display hidden files, -l display in long format
mkdir dirname
    make directory dirname
more filename
    view file filename one screenful at a time

Transcript of Interviews

UNIVERSITY OF OXFORD ANNUAL REVIEW 2013/14
REALISING THE POTENTIAL
The UNIQ scheme allows academically able students from state schools to experience student life at a top research-led university

Transcript of interviews

Alistair (mentor):
UNIQ’s been running five years now and I was a student on the very first year. I’ve now been a mentor for two years. There are lots of lectures, which are a bit like the ones you would have in Oxford as an undergraduate; lab work as well, with similar experiments; and a lot of social activities as well with the other students.

Fiona (mentor):
The summer school was in fact way more packed into a week than I actually find myself doing in a week at Oxford.

Selina Todd, lecturer in history:
The culmination of the week was finishing and handing in, and having marked, your first Oxford history essay.

The Humanities UNIQ course and other themed courses allow students to dip into history and English and whatever they want. Or general science UNIQ courses, where they can actually spend a week deciding which of these disciplines suits them most, or maybe they want to do a joint or combined honours course.

Fiona:
When I was 17 doing UNIQ I thought the 21-year-olds running the course, assisting on the course, were so old. I thought they’d got everything together, they were so mature, they were all going to go and have jobs. It’s quite nice that they must see me in the same way, as someone who’s been through it, and got their act together. I think the

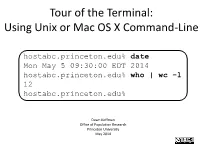

Tour of the Terminal: Using Unix Or Mac OS X Command-Line

Tour of the Terminal: Using Unix or Mac OS X Command-Line
    hostabc.princeton.edu% date
    Mon May 5 09:30:00 EDT 2014
    hostabc.princeton.edu% who | wc -l
    12
    hostabc.princeton.edu%
Dawn Koffman
Office of Population Research, Princeton University
May 2014

Tour of the Terminal: Using Unix or Mac OS X Command Line
• Introduction
• Files
• Directories
• Commands
• Shell Programs
• Stream Editor: sed

Introduction
• Operating Systems
• Command-Line Interface
• Shell
• Unix Philosophy
• Command Execution Cycle
• Command History

Command-Line Interface
[Diagram: user (human), operating system (software), computer (hardware). The operating system offers a command-line interface and a point-and-click (GUI) interface, and contains programs (text editors, compilers, commands for working with the file system, many other utilities) and the kernel (manages computing resources: memory, hard drive, time). The computer consists of cpu, memory, and hard drive.]

Comparison
command-line interface
- may have steeper learning curve, BUT provides constructs that can make many tasks very easy
- scales up very well when have lots of: data, programs, tasks to accomplish
point-and-click interface
- may be more intuitive, BUT can also be much more human-manual-labor intensive
- often does not scale up well when have lots of: data, programs, tasks to accomplish

Shell
Command-line interface provided by Unix and Mac OS X is called a shell
a shell:
- prompts user for commands
- interprets user commands
- passes them on to the rest of the operating system, which is hidden from the user
How do you access a shell?
- if you have an account on a machine running Unix or Linux, just log in. A default shell will be running.
- if you are using a Mac, run the Terminal app.
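The "interprets user commands" step of a shell can be made concrete with the who | wc -l example from the title slide. The following is a minimal sketch of that one step only, assuming the pipe symbol is surrounded by whitespace and is never quoted; parse_pipeline is a hypothetical name, and a real shell handles far more syntax.

```python
import shlex


def parse_pipeline(line):
    """Split a command line into pipeline stages.

    Each stage is a list of words (command name followed by arguments).
    Assumes every | is a separate, unquoted, whitespace-delimited token.
    """
    stages = []
    current = []
    for token in shlex.split(line):
        if token == "|":
            stages.append(current)  # end of one stage, start the next
            current = []
        else:
            current.append(token)
    stages.append(current)
    return stages


stages = parse_pipeline("who | wc -l")
print(stages)
```

After this step a shell would start one process per stage and connect the output of each stage to the input of the next, which is the part it "passes on to the rest of the operating system".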

The Linux Command Line

The Linux Command Line
Second Internet Edition
William E. Shotts, Jr.
A LinuxCommand.org Book

Copyright ©2008-2013, William E. Shotts, Jr.
This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.
Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners.
This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org.
This book is also available in printed form, published by No Starch Press and may be purchased wherever fine books are sold. No Starch Press also offers this book in electronic formats for most popular e-readers: http://nostarch.com/tlcl.htm

Release History
Version  Date                Description
13.07    July 6, 2013        Second Internet Edition.
09.12    December 14, 2009   First Internet Edition.
09.11    November 19, 2009   Fourth draft with almost all reviewer feedback incorporated and edited through chapter 37.
09.10    October 3, 2009     Third draft with revised table formatting, partial application of reviewers feedback and edited through chapter 18.
09.08    August 12, 2009     Second draft incorporating the first editing pass.
09.07    July 18, 2009       Completed first draft.

Table of Contents
Introduction

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

GNU Coreutils
Core GNU utilities for version 5.93, 2 November 2005
David MacKenzie et al.

This manual documents version 5.93 of the GNU core utilities, including the standard programs for text and file manipulation.
Copyright © 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc.
Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”.

1 Introduction
This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire GNU community will benefit.
The GNU utilities documented here are mostly compatible with the POSIX standard.
Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC.
This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering.

Processes in Linux/Unix

Processes in Linux/Unix
When a program or command is executed, the system provides it with a special instance: a process. This instance consists of all the services and resources that may be used by the process while it executes.
• Whenever a command is issued in Unix/Linux, it creates (starts) a new process. For example, when pwd is issued, which prints the directory the user is currently in, a process starts.
• Unix/Linux keeps track of processes through an ID number, traditionally of up to five digits, called the process ID or PID. Each process in the system has a unique PID.
• A used PID can be assigned again to a newer process once all the possible numbers have been used up.
• At any point in time, no two processes with the same PID exist in the system, because it is the PID that Unix uses to track each process.

Initializing a process
A process can be run in two ways:
1. Foreground process: Every process, when started, runs in the foreground by default; it receives input from the keyboard and sends output to the screen. For example, when issuing the pwd command:
    $ pwd
   Output:
    /home/geeksforgeeks/root
   When a command is running in the foreground and takes a long time, no other process can be started from that terminal, because the prompt is not available until the program finishes and exits.
2. Background process: It runs in the background without keyboard input; if it does need keyboard input, it waits. Thus, other processes can run in parallel with the background process, since they do not have to wait for it to complete.
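The difference between foreground and background execution, and the fact that every process gets its own PID, can be observed from a short script. This is a minimal sketch under stated assumptions: it starts a second Python interpreter as the child (via sys.executable) instead of a shell command, and the variable names are illustrative only.

```python
import os
import subprocess
import sys

# The child simply reports its own process ID.
child_code = "import os; print(os.getpid())"
child_argv = [sys.executable, "-c", child_code]

# Foreground style: run() blocks until the child has finished,
# just as the shell prompt is unavailable until a foreground job exits.
fg = subprocess.run(child_argv, capture_output=True, text=True, check=True)
fg_pid = int(fg.stdout)

# Background style: Popen() returns immediately, so the parent can
# carry on with other work while the child runs.
bg = subprocess.Popen(child_argv, stdout=subprocess.PIPE, text=True)
parent_pid = os.getpid()          # parent keeps working in the meantime
bg_output, _ = bg.communicate()   # later: collect the child's output
bg_pid = int(bg_output)

print("parent:", parent_pid, "foreground child:", fg_pid,
      "background child:", bg_pid)
```

The PIDs printed differ from run to run, but neither child ever shares the PID of the still-running parent, which matches the uniqueness rule described above.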

UNIQ: Uniform Noise Injection for Non-Uniform Quantization of Neural

UNIQ: Uniform Noise Injection for Non-Uniform Quantization of Neural Networks
Chaim Baskin * 1, Eli Schwartz * 2, Evgenii Zheltonozhskii 1, Natan Liss 1, Raja Giryes 2, Alex Bronstein 1, Avi Mendelson 1

Abstract
We present a novel method for neural network quantization that emulates a non-uniform k-quantile quantizer, which adapts to the distribution of the quantized parameters. Our approach provides a novel alternative to the existing uniform quantization techniques for neural networks. We suggest to compare the results as a function of the bit-operations (BOPS) performed, assuming a look-up table availability for the non-uniform case. In this setup, we show the advantages of our strategy in the low computational budget regime. While the proposed solution is harder to implement in hardware, we believe it sets a basis for new alternatives to neural networks quantization.

1 Introduction
Deep neural networks are widely used in many fields today including computer vision, signal processing, computational imaging, image processing, and speech and language processing (Hinton et al., 2012; Lai et al., 2015; Chen et al., 2016). Yet, a major drawback of these deep learning models is their storage and computational cost.

... of two reduces the amount of hardware by roughly a factor of four. (A more accurate analysis of the effects of quantization is presented in Section 4.2). Quantization allows to fit bigger networks into a device, which is especially critical for embedded devices and custom hardware. On the other hand, activations are passed between layers, and thus, if different layers are processed separately, activation size
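The abstract refers to a non-uniform k-quantile quantizer that adapts to the distribution of the quantized parameters. The sketch below illustrates only that general idea, not the UNIQ training method: thresholds are placed at the empirical k-quantiles so that every bin holds roughly the same number of values. Representing each bin by its median is an assumption made here for illustration, and the function names and synthetic Gaussian "weights" are hypothetical.

```python
import bisect
import random
import statistics


def fit_k_quantile(values, k):
    """Fit a k-level quantizer with equally populated bins.

    Returns (thresholds, levels): k - 1 cut points at the empirical
    k-quantiles, and one representative value per bin (the bin median,
    an illustrative choice).
    """
    thresholds = statistics.quantiles(values, n=k)  # k - 1 cut points
    bins = [[] for _ in range(k)]
    for v in values:
        bins[bisect.bisect_right(thresholds, v)].append(v)
    levels = [statistics.median(b) for b in bins]
    return thresholds, levels


def quantize(v, thresholds, levels):
    """Map a value to the representative level of the bin it falls in."""
    return levels[bisect.bisect_right(thresholds, v)]


# Synthetic stand-in for a layer's weights (an assumption, not real data).
rng = random.Random(0)
weights = [rng.gauss(0.0, 1.0) for _ in range(1000)]

thresholds, levels = fit_k_quantile(weights, k=4)
counts = [0] * 4
for w in weights:
    counts[bisect.bisect_right(thresholds, w)] += 1
print(counts)
```

Unlike a uniform quantizer, whose levels are evenly spaced, the levels here crowd together where the values are dense, which is what "adapts to the distribution" means in this context.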