Entropy: Is It What We Think It Is and How Should We Teach It?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Bluff Your Way in the Second Law of Thermodynamics

Bluff your way in the Second Law of Thermodynamics Jos Uffink Department of History and Foundations of Science Utrecht University, P.O.Box 80.000, 3508 TA Utrecht, The Netherlands e-mail: uffi[email protected] 5th July 2001 ABSTRACT The aim of this article is to analyse the relation between the second law of thermodynamics and the so-called arrow of time. For this purpose, a number of different aspects in this arrow of time are distinguished, in particular those of time-(a)symmetry and of (ir)reversibility. Next I review versions of the second law in the work of Carnot, Clausius, Kelvin, Planck, Gibbs, Caratheodory´ and Lieb and Yngvason, and investigate their connection with these aspects of the arrow of time. It is shown that this connection varies a great deal along with these formulations of the second law. According to the famous formulation by Planck, the second law expresses the irreversibility of natural processes. But in many other formulations irreversibility or even time-asymmetry plays no role. I therefore argue for the view that the second law has nothing to do with the arrow of time. KEY WORDS: Thermodynamics, Second Law, Irreversibility, Time-asymmetry, Arrow of Time. 1 INTRODUCTION There is a famous lecture by the British physicist/novelist C. P. Snow about the cul- tural abyss between two types of intellectuals: those who have been educated in literary arts and those in the exact sciences. This lecture, the Two Cultures (1959), characterises the lack of mutual respect between them in a passage: A good many times I have been present at gatherings of people who, by the standards of the traditional culture, are thought highly educated and who have 1 with considerable gusto been expressing their incredulity at the illiteracy of sci- entists. -

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science Fall 2005 Lecture 4: 09.16.05 Temperature, heat, and entropy Today: LAST TIME .........................................................................................................................................................................................2� State functions ..............................................................................................................................................................................2� Path dependent variables: heat and work..................................................................................................................................2� DEFINING TEMPERATURE ...................................................................................................................................................................4� The zeroth law of thermodynamics .............................................................................................................................................4� The absolute temperature scale ..................................................................................................................................................5� CONSEQUENCES OF THE RELATION BETWEEN TEMPERATURE, HEAT, AND ENTROPY: HEAT CAPACITY .......................................6� The difference between heat and temperature ...........................................................................................................................6� Defining heat capacity.................................................................................................................................................................6� -

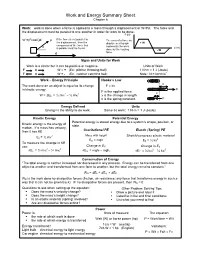

Work and Energy Summary Sheet Chapter 6

Work and Energy Summary Sheet Chapter 6 Work: work is done when a force is applied to a mass through a displacement or W=Fd. The force and the displacement must be parallel to one another in order for work to be done. F (N) W =(Fcosθ)d F If the force is not parallel to The area of a force vs. the displacement, then the displacement graph + W component of the force that represents the work θ d (m) is parallel must be found. done by the varying - W d force. Signs and Units for Work Work is a scalar but it can be positive or negative. Units of Work F d W = + (Ex: pitcher throwing ball) 1 N•m = 1 J (Joule) F d W = - (Ex. catcher catching ball) Note: N = kg m/s2 • Work – Energy Principle Hooke’s Law x The work done on an object is equal to its change F = kx in kinetic energy. F F is the applied force. 2 2 x W = ΔEk = ½ mvf – ½ mvi x is the change in length. k is the spring constant. F Energy Defined Units Energy is the ability to do work. Same as work: 1 N•m = 1 J (Joule) Kinetic Energy Potential Energy Potential energy is stored energy due to a system’s shape, position, or Kinetic energy is the energy of state. motion. If a mass has velocity, Gravitational PE Elastic (Spring) PE then it has KE 2 Mass with height Stretch/compress elastic material Ek = ½ mv 2 EG = mgh EE = ½ kx To measure the change in KE Change in E use: G Change in ES 2 2 2 2 ΔEk = ½ mvf – ½ mvi ΔEG = mghf – mghi ΔEE = ½ kxf – ½ kxi Conservation of Energy “The total energy is neither increased nor decreased in any process. -

The Theory of Dissociation”

76 Bull. Hist. Chem., VOLUME 34, Number 2 (2009) PRIMARY DOCUMENTS “The Theory of Dissociation” A. Horstmann Annalen der Chemie und Pharmacie, 1873, 170, 192-210. W. Thomson was the first to take note of one of (Received 11 October 1873) the consequences of the mechanical theory of heat (1) - namely that the entire world is continuously approaching, It is characteristic of dissociation phenomena that a via the totality of all natural processes, a limiting state in reaction, in which heat overcomes the force of chemical which further change is impossible. Repose and death attraction, occurs for only a portion of a substance, even will then reign over all and the end of the world will though all of its parts have been equally exposed to the have arrived. same influences. In the remaining portion, the forces of chemical attraction, which are the only reason for the Clausius (2) knew how to give this conclusion a reaction to proceed in the opposite direction, maintain mathematical form by constructing a quantity—the the upper hand. Hence, for such reactions there is a entropy—which increases during all natural changes limiting state which the molecular system in question but which cannot be decreased by any known force of approaches irrespective of the initial state and, once it is nature. The limiting state is, therefore, reached when reached, neither heat nor chemical forces can produce the entropy of the world is as large as possible. Then further change so long as the external conditions remain the only possible processes that can occur are those for constant. -

Entropy (Order and Disorder)

Entropy (order and disorder) Locally, the entropy can be lowered by external action. This applies to machines, such as a refrigerator, where the entropy in the cold chamber is being reduced, and to living organisms. This local decrease in entropy is, how- ever, only possible at the expense of an entropy increase in the surroundings. 1 History This “molecular ordering” entropy perspective traces its origins to molecular movement interpretations developed by Rudolf Clausius in the 1850s, particularly with his 1862 visual conception of molecular disgregation. Sim- ilarly, in 1859, after reading a paper on the diffusion of molecules by Clausius, Scottish physicist James Clerk Boltzmann’s molecules (1896) shown at a “rest position” in a solid Maxwell formulated the Maxwell distribution of molec- ular velocities, which gave the proportion of molecules having a certain velocity in a specific range. This was the In thermodynamics, entropy is commonly associated first-ever statistical law in physics.[3] with the amount of order, disorder, or chaos in a thermodynamic system. This stems from Rudolf Clau- In 1864, Ludwig Boltzmann, a young student in Vienna, sius' 1862 assertion that any thermodynamic process al- came across Maxwell’s paper and was so inspired by it ways “admits to being reduced to the alteration in some that he spent much of his long and distinguished life de- way or another of the arrangement of the constituent parts veloping the subject further. Later, Boltzmann, in efforts of the working body" and that internal work associated to develop a kinetic theory for the behavior of a gas, ap- with these alterations is quantified energetically by a mea- plied the laws of probability to Maxwell’s and Clausius’ sure of “entropy” change, according to the following dif- molecular interpretation of entropy so to begin to inter- ferential expression:[1] pret entropy in terms of order and disorder. -

Lecture 8: Maximum and Minimum Work, Thermodynamic Inequalities

Lecture 8: Maximum and Minimum Work, Thermodynamic Inequalities Chapter II. Thermodynamic Quantities A.G. Petukhov, PHYS 743 October 4, 2017 Chapter II. Thermodynamic Quantities Lecture 8: Maximum and Minimum Work, ThermodynamicA.G. Petukhov,October Inequalities PHYS 4, 743 2017 1 / 12 Maximum Work If a thermally isolated system is in non-equilibrium state it may do work on some external bodies while equilibrium is being established. The total work done depends on the way leading to the equilibrium. Therefore the final state will also be different. In any event, since system is thermally isolated the work done by the system: jAj = E0 − E(S); where E0 is the initial energy and E(S) is final (equilibrium) one. Le us consider the case when Vinit = Vfinal but can change during the process. @ jAj @E = − = −Tfinal < 0 @S @S V The entropy cannot decrease. Then it means that the greater is the change of the entropy the smaller is work done by the system The maximum work done by the system corresponds to the reversible process when ∆S = Sfinal − Sinitial = 0 Chapter II. Thermodynamic Quantities Lecture 8: Maximum and Minimum Work, ThermodynamicA.G. Petukhov,October Inequalities PHYS 4, 743 2017 2 / 12 Clausius Theorem dS 0 R > dS < 0 R S S TA > TA T > T B B A δQA > 0 B Q 0 δ B < The system following a closed path A: System receives heat from a hot reservoir. Temperature of the thermostat is slightly larger then the system temperature B: System dumps heat to a cold reservoir. Temperature of the system is slightly larger then that of the thermostat Chapter II. -

Lecture 6: Entropy

Matthew Schwartz Statistical Mechanics, Spring 2019 Lecture 6: Entropy 1 Introduction In this lecture, we discuss many ways to think about entropy. The most important and most famous property of entropy is that it never decreases Stot > 0 (1) Here, Stot means the change in entropy of a system plus the change in entropy of the surroundings. This is the second law of thermodynamics that we met in the previous lecture. There's a great quote from Sir Arthur Eddington from 1927 summarizing the importance of the second law: If someone points out to you that your pet theory of the universe is in disagreement with Maxwell's equationsthen so much the worse for Maxwell's equations. If it is found to be contradicted by observationwell these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of ther- modynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation. Another possibly relevant quote, from the introduction to the statistical mechanics book by David Goodstein: Ludwig Boltzmann who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics. There are many ways to dene entropy. All of them are equivalent, although it can be hard to see. In this lecture we will compare and contrast dierent denitions, building up intuition for how to think about entropy in dierent contexts. The original denition of entropy, due to Clausius, was thermodynamic. -

Work-Energy for a System of Particles and Its Relation to Conservation Of

Conservation of Energy, the Work-Energy Principle, and the Mechanical Energy Balance In your study of engineering and physics, you will run across a number of engineering concepts related to energy. Three of the most common are Conservation of Energy, the Work-Energy Principle, and the Mechanical Engineering Balance. The Conservation of Energy is treated in this course as one of the overarching and fundamental physical laws. The other two concepts are special cases and only apply under limited conditions. The purpose of this note is to review the pedigree of the Work-Energy Principle, to show how the more general Mechanical Energy Bal- ance is developed from Conservation of Energy, and finally to describe the conditions under which the Mechanical Energy Balance is preferred over the Work-Energy Principle. Work-Energy Principle for a Particle Consider a particle of mass m and velocity V moving in a gravitational field of strength g sub- G ject to a surface force Rsurface . Under these conditions, writing Conservation of Linear Momen- tum for the particle gives the following: d mV= R+ mg (1.1) dt ()Gsurface Forming the dot product of Eq. (1.1) with the velocity of the particle and rearranging terms gives the rate form of the Work-Energy Principle for a particle: 2 dV⎛⎞ d d ⎜⎟mmgzRV+=() surfacei G ⇒ () EEWK += GP mech, in (1.2) dt⎝⎠2 dt dt Gravitational mechanical Kinetic potential power into energy energy the system Recall that mechanical power is defined as WRmech, in= surfaceiV G , the dot product of the surface force with the velocity of its point of application. -

3. Energy, Heat, and Work

3. Energy, Heat, and Work 3.1. Energy 3.2. Potential and Kinetic Energy 3.3. Internal Energy 3.4. Relatively Effects 3.5. Heat 3.6. Work 3.7. Notation and Sign Convention In these Lecture Notes we examine the basis of thermodynamics – fundamental definitions and equations for energy, heat, and work. 3-1. Energy. Two of man's earliest observations was that: 1)useful work could be accomplished by exerting a force through a distance and that the product of force and distance was proportional to the expended effort, and 2)heat could be ‘felt’ in when close or in contact with a warm body. There were many explanations for this second observation including that of invisible particles traveling through space1. It was not until the early beginnings of modern science and molecular theory that scientists discovered a true physical understanding of ‘heat flow’. It was later that a few notable individuals, including James Prescott Joule, discovered through experiment that work and heat were the same phenomenon and that this phenomenon was energy: Energy is the capacity, either latent or apparent, to exert a force through a distance. The presence of energy is indicated by the macroscopic characteristics of the physical or chemical structure of matter such as its pressure, density, or temperature - properties of matter. The concept of hot versus cold arose in the distant past as a consequence of man's sense of touch or feel. Observations show that, when a hot and a cold substance are placed together, the hot substance gets colder as the cold substance gets hotter. -

V320140108.Pdf

1Department of Applied Science and Technology, Politecnico di Torino, Italy Abstract In this paper we are translating and discussing one of the scientific treatises written by Robert Grosseteste, the De Calore Solis, On the Heat of the Sun. In this treatise, the medieval philosopher analyses the nature of heat. Keywords: History of Science, Medieval Science 1. Introduction some features: he tells that Air is wet and hot, Fire is Robert Grosseteste (c. 1175 – 1253), philosopher and hot and dry, Earth is dry and cold, and the Water is bishop of Lincoln from 1235 AD until his death, cold and wet [5]. And, because the above-mentioned wrote several scientific papers [1]; among them, quite four elements are corruptible, Aristotle added the remarkable are those on optics and sound [2-4]. In incorruptible Aether as the fifth element, or essence, [5], we have recently proposed a translation and the quintessence, and since we do not see any discussion of his treatise entitled De Impressionibus changes in the heavenly regions, the stars and skies Elementorum, written shortly after 1220; there, above the Moon must be made of it. Grosseteste is talking about some phenomena involving the four classical elements (air, water, fire The Hellenistic period, a period between the death of and earth), in the framework of an Aristotelian Alexander the Great in 323 BC and the emergence of physics of the atmosphere. This treatise on elements ancient Rome, saw the flourishing of science. In shows the role of heat in phase transitions and in the Egypt of this Hellenistic tradition, under the atmospheric phenomena. -

Chemistry 130 Gibbs Free Energy

Chemistry 130 Gibbs Free Energy Dr. John F. C. Turner 409 Buehler Hall [email protected] Chemistry 130 Equilibrium and energy So far in chemistry 130, and in Chemistry 120, we have described chemical reactions thermodynamically by using U - the change in internal energy, U, which involves heat transferring in or out of the system only or H - the change in enthalpy, H, which involves heat transfers in and out of the system as well as changes in work. U applies at constant volume, where as H applies at constant pressure. Chemistry 130 Equilibrium and energy When chemical systems change, either physically through melting, evaporation, freezing or some other physical process variables (V, P, T) or chemically by reaction variables (ni) they move to a point of equilibrium by either exothermic or endothermic processes. Characterizing the change as exothermic or endothermic does not tell us whether the change is spontaneous or not. Both endothermic and exothermic processes are seen to occur spontaneously. Chemistry 130 Equilibrium and energy Our descriptions of reactions and other chemical changes are on the basis of exothermicity or endothermicity ± whether H is negative or positive H is negative ± exothermic H is positive ± endothermic As a description of changes in heat content and work, these are adequate but they do not describe whether a process is spontaneous or not. There are endothermic processes that are spontaneous ± evaporation of water, the dissolution of ammonium chloride in water, the melting of ice and so on. We need a thermodynamic description of spontaneous processes in order to fully describe a chemical system Chemistry 130 Equilibrium and energy A spontaneous process is one that takes place without any influence external to the system. -

Removing the Mystery of Entropy and Thermodynamics – Part II Harvey S

Table IV. Example of audit of energy loss in collision (with a mass attached to the end of the spring). Removing the Mystery of Entropy and Thermodynamics – Part II Harvey S. Leff, Reed College, Portland, ORa,b Part II of this five-part series is focused on further clarification of entropy and thermodynamics. We emphasize that entropy is a state function with a numerical value for any substance in thermodynamic equilibrium with its surroundings. The inter- pretation of entropy as a “spreading function” is suggested by the Clausius algorithm. The Mayer-Joule principle is shown to be helpful in understanding entropy changes for pure work processes. Furthermore, the entropy change when a gas expands or is compressed, and when two gases are mixed, can be understood qualitatively in terms of spatial energy spreading. The question- answer format of Part I1 is continued, enumerating main results in Key Points 2.1-2.6. • What is the significance of entropy being a state Although the linear correlation in Fig. 1 is quite striking, the function? two points to be emphasized here are: (i) the entropy value In Part I, we showed that the entropy of a room temperature of each solid is dependent on the energy added to and stored solid can be calculated at standard temperature and pressure by it, and (ii) the amount of energy needed for the heating using heat capacity data from near absolute zero, denoted by T process from T = 0 + K to T = 298.15 K differs from solid to = 0+, to room temperature, Tf = 298.15 K.