The Carnot Cycle, Reversibility and Entropy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


On Entropy, Information, and Conservation of Information

Article, Entropy. On Entropy, Information, and Conservation of Information. Yunus A. Çengel, Department of Mechanical Engineering, University of Nevada, Reno, NV 89557, USA. Abstract: The term entropy is used in different meanings in different contexts, sometimes in contradictory ways, resulting in misunderstandings and confusion. The root cause of the problem is the close resemblance of the defining mathematical expressions of entropy in statistical thermodynamics and information in the communications field, also called entropy, differing only by a constant factor with the unit ‘J/K’ in thermodynamics and ‘bits’ in information theory. The thermodynamic property entropy is closely associated with the physical quantities of thermal energy and temperature, while the entropy used in the communications field is a mathematical abstraction based on probabilities of messages. The terms information and entropy are often used interchangeably in several branches of science. This practice gives rise to the phrase conservation of entropy in the sense of conservation of information, which is in contradiction to the fundamental increase of entropy principle in thermodynamics as an expression of the second law. The aim of this paper is to clarify matters and eliminate confusion by putting things into their rightful places within their domains. The notion of conservation of information is also put into a proper perspective. Keywords: entropy; information; conservation of information; creation of information; destruction of information; Boltzmann relation. Citation: Çengel, Y.A. On Entropy, Information, and Conservation of Information. Entropy 2021, 23, 779. 1. Introduction: One needs to be cautious when dealing with information since it is defined differently

![Arxiv:2008.06405V1 [Physics.Ed-Ph] 14 Aug 2020 Fig.1 Shows Four Classical Cycles: (A) Carnot Cycle, (B) Stirling Cycle, (C) Otto Cycle and (D) Diesel Cycle](https://docslib.b-cdn.net/cover/0049/arxiv-2008-06405v1-physics-ed-ph-14-aug-2020-fig-1-shows-four-classical-cycles-a-carnot-cycle-b-stirling-cycle-c-otto-cycle-and-d-diesel-cycle-70049.webp)

Arxiv:2008.06405V1 [Physics.Ed-Ph] 14 Aug 2020 Fig.1 Shows Four Classical Cycles: (A) Carnot Cycle, (B) Stirling Cycle, (C) Otto Cycle and (D) Diesel Cycle

Investigating student understanding of heat engine: a case study of Stirling engine. Lilin Zhu and Gang Xiang*, Department of Physics, Sichuan University, Chengdu 610064, China (Dated: August 17, 2020). We report on a study of student difficulties regarding heat engines in the context of the Stirling cycle within an upper-division undergraduate thermal physics course. An in-class test about a Stirling engine with a regenerator was taken by three classes, and the students were asked to perform one of the most basic activities: calculate the efficiency of the heat engine. Our data suggest that quite a few students have not developed a robust conceptual understanding of basic engineering knowledge of the heat engine, including the function of the regenerator and the influence of piston movements on the heat and work involved in the engine. Most notably, although the science error ratios of the three classes were similar (∼10%), the engineering error ratios of the three classes were high (above 50%), and the class that was given a simple tutorial on engineering knowledge of the heat engine exhibited an engineering error ratio smaller by about 20% than the other two classes. In addition, both the written answers and post-test interviews show that most of the students can only associate Carnot’s theorem with the Carnot cycle, but not with other reversible cycles working between two heat reservoirs, probably because not enough cycles other than the Carnot cycle were covered in the traditional Thermodynamics textbook. Our results suggest that both scientific and engineering knowledge are important and should be included in instructional approaches, especially in the Thermodynamics course taught in countries and regions with a tradition of not paying much attention to experimental education or engineering training.

Practice Problems from Chapter 1-3 Problem 1 One Mole of a Monatomic Ideal Gas Goes Through a Quasistatic Three-Stage Cycle (1-2, 2-3, 3-1) Shown in V 3 the Figure

Practice Problems from Chapter 1-3

Problem 1. One mole of a monatomic ideal gas goes through a quasistatic three-stage cycle (1-2, 2-3, 3-1) shown in the figure. $T_1$ and $T_2$ are given.

[Figure: V–T diagram of the cycle. State 1 at $(T_1, V_1)$, state 2 at $(T_2, V_2)$, state 3 at $(T_1, V_2)$.]

(a) (10) Calculate the work done by the gas. Is it positive or negative?

(b) (20) Using two methods (Sackur-Tetrode eq. and $dQ/T$), calculate the entropy change for each stage and for the whole cycle, $\Delta S_{total}$. Did you get the expected result for $\Delta S_{total}$? Explain.

(c) (5) What is the heat capacity (in units of $R$) for each stage?

Problem 1 (cont.), part (a):

1 – 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process), $\delta W_{12} = P(V_2 - V_1) = R(T_2 - T_1) > 0$

2 – 3: $V = \text{const}$ (isochoric process), $\delta W_{23} = 0$

3 – 1: $T = \text{const}$ (isothermal process), $\delta W_{31} = \int_{V_2}^{V_1} P\,dV = R T_1 \int_{V_2}^{V_1} \frac{dV}{V} = R T_1 \ln\frac{V_1}{V_2} = R T_1 \ln\frac{T_1}{T_2} < 0$

$\delta W_{total} = \delta W_{12} + \delta W_{31} = R(T_2 - T_1) + R T_1 \ln\frac{T_1}{T_2} = R T_1\left[\frac{T_2}{T_1} - 1 - \ln\frac{T_2}{T_1}\right] > 0$

Problem 1 (cont.), part (b). Sackur-Tetrode equation:

$S(U, V, N) = R \ln\frac{V}{N} + \frac{3}{2} R \ln\frac{U}{N} + k_B \ln f(N, m)$

$\Delta S = R \ln\frac{V_f}{V_i} + \frac{3}{2} R \ln\frac{T_f}{T_i} = R\left(\ln\frac{V_f}{V_i} + \frac{3}{2}\ln\frac{T_f}{T_i}\right)$

1 – 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process), $\Delta S_{12} = \frac{5}{2} R \ln\frac{T_2}{T_1}$

2 – 3: $V = \text{const}$ (isochoric process), $\Delta S_{23} = \frac{3}{2} R \ln\frac{T_1}{T_2} = -\frac{3}{2} R \ln\frac{T_2}{T_1}$

3 – 1: $T = \text{const}$ (isothermal process), $\Delta S_{31} = R \ln\frac{V_1}{V_2} = -R \ln\frac{T_2}{T_1}$

$\Delta S_{cycle} = \frac{5}{2} R \ln\frac{T_2}{T_1} - R \ln\frac{T_2}{T_1} - \frac{3}{2} R \ln\frac{T_2}{T_1} = 0$, as it should be for a quasistatic cyclic process (quasistatic – reversible), because $S$ is a state function.
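The stage-by-stage results of Problem 1 can be checked numerically. The following is a minimal sketch, not part of the original problem set: the function name `cycle_work_and_entropy` and the sample temperatures (300 K and 600 K) are assumptions chosen for illustration, and the per-stage formulas are the ones quoted in the excerpt above.

```python
import math

R = 8.314  # gas constant in J/(mol*K); one mole of gas is assumed throughout


def cycle_work_and_entropy(T1, T2):
    """Per-stage work and entropy change for the 1-2-3-1 cycle of Problem 1.

    Hypothetical helper for illustration. Stage 1-2 is isobaric (V ~ T),
    stage 2-3 is isochoric, stage 3-1 is isothermal at T1.
    """
    ratio = T2 / T1
    work = {
        "1-2": R * (T2 - T1),               # P (V2 - V1) = R (T2 - T1)
        "2-3": 0.0,                         # no volume change
        "3-1": R * T1 * math.log(T1 / T2),  # R T1 ln(V1/V2), with V1/V2 = T1/T2
    }
    entropy = {
        "1-2": 2.5 * R * math.log(ratio),   # (5/2) R ln(T2/T1)
        "2-3": -1.5 * R * math.log(ratio),  # -(3/2) R ln(T2/T1)
        "3-1": -R * math.log(ratio),        # -R ln(T2/T1)
    }
    return work, entropy


# Sample temperatures (assumed values, not from the problem statement).
work, entropy = cycle_work_and_entropy(300.0, 600.0)
total_work = sum(work.values())
total_entropy = sum(entropy.values())

print("total work (J):", total_work)
print("total entropy change (J/K):", total_entropy)
```

The total work comes out positive and the entropy changes sum to zero within floating-point rounding, which matches the state-function argument in the solution.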

Bluff Your Way in the Second Law of Thermodynamics

Bluff your way in the Second Law of Thermodynamics. Jos Uffink, Department of History and Foundations of Science, Utrecht University, P.O.Box 80.000, 3508 TA Utrecht, The Netherlands. 5th July 2001. ABSTRACT: The aim of this article is to analyse the relation between the second law of thermodynamics and the so-called arrow of time. For this purpose, a number of different aspects in this arrow of time are distinguished, in particular those of time-(a)symmetry and of (ir)reversibility. Next I review versions of the second law in the work of Carnot, Clausius, Kelvin, Planck, Gibbs, Carathéodory and Lieb and Yngvason, and investigate their connection with these aspects of the arrow of time. It is shown that this connection varies a great deal along with these formulations of the second law. According to the famous formulation by Planck, the second law expresses the irreversibility of natural processes. But in many other formulations irreversibility or even time-asymmetry plays no role. I therefore argue for the view that the second law has nothing to do with the arrow of time. KEY WORDS: Thermodynamics, Second Law, Irreversibility, Time-asymmetry, Arrow of Time. 1 INTRODUCTION: There is a famous lecture by the British physicist/novelist C. P. Snow about the cultural abyss between two types of intellectuals: those who have been educated in literary arts and those in the exact sciences. This lecture, the Two Cultures (1959), characterises the lack of mutual respect between them in a passage: A good many times I have been present at gatherings of people who, by the standards of the traditional culture, are thought highly educated and who have with considerable gusto been expressing their incredulity at the illiteracy of scientists.

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science, Fall 2005. Lecture 4: 09.16.05 Temperature, heat, and entropy. Today: Last time (state functions; path dependent variables: heat and work). Defining temperature (the zeroth law of thermodynamics; the absolute temperature scale). Consequences of the relation between temperature, heat, and entropy: heat capacity (the difference between heat and temperature; defining heat capacity).

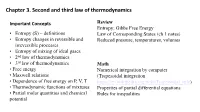

Chapter 3. Second and Third Law of Thermodynamics

Chapter 3. Second and third law of thermodynamics

Important Concepts
• Entropy (S) – definitions
• Entropy changes in reversible and irreversible processes
• Entropy of mixing of ideal gases
• 2nd law of thermodynamics
• 3rd law of thermodynamics
• Free energy
• Maxwell relations
• Dependence of free energy on P, V, T
• Thermodynamic functions of mixtures
• Partial molar quantities and chemical potential

Review
• Entropy; Gibbs Free Energy
• Law of Corresponding States (ch 1 notes): reduced pressure, temperatures, volumes

Math
• Numerical integration by computer (Trapezoidal integration, https://en.wikipedia.org/wiki/Trapezoidal_rule)
• Properties of partial differential equations
• Rules for inequalities

Major Concept Review
• Adiabats vs. isotherms: $p_1V_1 = p_2V_2$ (isotherm); $p_1V_1^{\gamma} = p_2V_2^{\gamma}$ with $\gamma = C_p/C_V$, and $V_1T_1^{c} = V_2T_2^{c}$ with $c = C_{V,m}/R$ (adiabat)
• Sign convention for work and heat: w done on system, q supplied to system: +; w done by system, q removed from system: −
• State variables depend on final & initial state; not path.
• Reversible change occurs in series of equilibrium states
• Adiabatic $q = 0$; Isothermal $\Delta T = 0$
• Equations of state for enthalpy, H and internal energy, U
• Joule-Thomson expansion ($\Delta H = 0$); Joule-Thomson coefficient, inversion temperature: $\left(\frac{\partial T}{\partial p}\right)_H = \frac{T\left(\frac{\partial V}{\partial T}\right)_P - V}{C_P}$
• Formation reaction; enthalpies of reaction, Hess’s Law; other changes: $\Delta_{rxn} H = \sum_i \nu_i \Delta_f H_i$; $\Delta_{rxn} H(T) = \Delta_{rxn} H(T_{ref}) + \int_{T_{ref}}^{T} \Delta_{rxn} C_p \, dT$
• Calorimetry

Spontaneous and Nonspontaneous Changes

First Law: when one form of energy is converted to another, the total energy in the universe is conserved.
• Does not give any other restriction on a process
• But many processes have a natural direction

Examples
• gas expands into a vacuum; not the reverse
• can burn paper; can't unburn paper
• heat never flows spontaneously from cold to hot

The reverse changes are called nonspontaneous changes.

Entropy: Ideal Gas Processes

Chapter 19: The Kinetic Theory of Gases

Thermodynamics = macroscopic picture. Gases: micro -> macro picture.

Avogadro’s Number: one mole is the number of atoms in a 12 g sample of carbon-12, $N_A = 6.02 \times 10^{23}\ \text{mol}^{-1}$. C(12): 6 protons, 6 neutrons and 6 electrons; 12 atomic units of mass assuming $m_p = m_n$.

Another way to do this is to know the mass of one molecule: then $N = N_A\, M_{sample}/m_{mole\text{-}mass}$. So the number of moles $n$ is given by $n = N/N_A$.

Ideal Gases, Ideal Gas Law

It was found experimentally that if 1 mole of any gas is placed in containers that have the same volume V and are kept at the same temperature T, approximately all have the same pressure p. The small differences in pressure disappear if lower gas densities are used. Further experiments showed that all low-density gases obey the equation $pV = nRT$. Here $R = 8.31$ J/(mol ⋅ K) and is known as the "gas constant." The equation itself is known as the "ideal gas law." The constant R can be expressed as $R = kN_A$. Here k is called the Boltzmann constant and is equal to $1.38 \times 10^{-23}$ J/K. If we substitute R as well as $n = N/N_A$ in the ideal gas law we get the equivalent form: $pV = NkT$. Here N is the number of molecules in the gas ($n$ = number of moles, $N$ = number of particles). The behavior of all real gases approaches that of an ideal gas at low enough densities. Low densities means that the gas molecules are far enough apart that they do not interact with one another, but only with the walls of the gas container.
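The equivalence of the two forms of the ideal gas law, pV = nRT and pV = NkT, can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the function names and the sample state (1 mol at 300 K in 0.0249 m³) are hypothetical, and the constants are the exact SI values rather than the rounded figures quoted in the excerpt.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)
N_A = 6.02214076e23  # Avogadro's number in 1/mol (exact SI value)
R = K_B * N_A        # gas constant in J/(mol*K), about 8.31


def pressure_from_moles(n, T, V):
    """Pressure in Pa from pV = nRT (n in mol, T in K, V in m^3)."""
    return n * R * T / V


def pressure_from_molecules(N, T, V):
    """Pressure in Pa from pV = NkT (N is the number of molecules)."""
    return N * K_B * T / V


# Sample state (assumed values): one mole at 300 K in 0.0249 m^3.
n, T, V = 1.0, 300.0, 0.0249
N = n * N_A  # number of molecules, from n = N / N_A

p_moles = pressure_from_moles(n, T, V)
p_molecules = pressure_from_molecules(N, T, V)

print("R (J/(mol*K)):", R)
print("p from nRT (Pa):", p_moles)
print("p from NkT (Pa):", p_molecules)
```

Both routes give the same pressure, close to one atmosphere for this sample state, because R = kN_A and n = N/N_A make the two expressions algebraically identical.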

The Theory of Dissociation

Bull. Hist. Chem., Volume 34, Number 2 (2009), p. 76. PRIMARY DOCUMENTS: “The Theory of Dissociation”, A. Horstmann, Annalen der Chemie und Pharmacie, 1873, 170, 192-210. (Received 11 October 1873)

It is characteristic of dissociation phenomena that a reaction, in which heat overcomes the force of chemical attraction, occurs for only a portion of a substance, even though all of its parts have been equally exposed to the same influences. In the remaining portion, the forces of chemical attraction, which are the only reason for the reaction to proceed in the opposite direction, maintain the upper hand. Hence, for such reactions there is a limiting state which the molecular system in question approaches irrespective of the initial state and, once it is reached, neither heat nor chemical forces can produce further change so long as the external conditions remain constant.

W. Thomson was the first to take note of one of the consequences of the mechanical theory of heat (1), namely that the entire world is continuously approaching, via the totality of all natural processes, a limiting state in which further change is impossible. Repose and death will then reign over all and the end of the world will have arrived.

Clausius (2) knew how to give this conclusion a mathematical form by constructing a quantity, the entropy, which increases during all natural changes but which cannot be decreased by any known force of nature. The limiting state is, therefore, reached when the entropy of the world is as large as possible. Then the only possible processes that can occur are those for

Entropy (Order and Disorder)

Entropy (order and disorder)

In thermodynamics, entropy is commonly associated with the amount of order, disorder, or chaos in a thermodynamic system. This stems from Rudolf Clausius' 1862 assertion that any thermodynamic process always “admits to being reduced to the alteration in some way or another of the arrangement of the constituent parts of the working body” and that internal work associated with these alterations is quantified energetically by a measure of “entropy” change, according to the following differential expression:[1]

[Figure: Boltzmann’s molecules (1896) shown at a “rest position” in a solid]

Locally, the entropy can be lowered by external action. This applies to machines, such as a refrigerator, where the entropy in the cold chamber is being reduced, and to living organisms. This local decrease in entropy is, however, only possible at the expense of an entropy increase in the surroundings.

1 History

This “molecular ordering” entropy perspective traces its origins to molecular movement interpretations developed by Rudolf Clausius in the 1850s, particularly with his 1862 visual conception of molecular disgregation. Similarly, in 1859, after reading a paper on the diffusion of molecules by Clausius, Scottish physicist James Clerk Maxwell formulated the Maxwell distribution of molecular velocities, which gave the proportion of molecules having a certain velocity in a specific range. This was the first-ever statistical law in physics.[3]

In 1864, Ludwig Boltzmann, a young student in Vienna, came across Maxwell’s paper and was so inspired by it that he spent much of his long and distinguished life developing the subject further. Later, Boltzmann, in efforts to develop a kinetic theory for the behavior of a gas, applied the laws of probability to Maxwell’s and Clausius’ molecular interpretation of entropy so as to begin to interpret entropy in terms of order and disorder.

Thermodynamic Entropy As an Indicator for Urban Sustainability?

Procedia Engineering 00 (2017) 000–000. Urban Transitions Conference, Shanghai, September 2016. Thermodynamic entropy as an indicator for urban sustainability? Ben Purvis*, Yong Mao, Darren Robinson, Laboratory of Urban Complexity and Sustainability, University of Nottingham, NG7 2RD, UK. Abstract: As foci of economic activity, resource consumption, and the production of material waste and pollution, cities represent both a major hurdle and yet also a source of great potential for achieving the goal of sustainability. Motivated by the desire to better understand and measure sustainability in quantitative terms we explore the applicability of thermodynamic entropy to urban systems as a tool for evaluating sustainability. Having comprehensively reviewed the application of thermodynamic entropy to urban systems we argue that the role it can hope to play in characterising sustainability is limited. We show that thermodynamic entropy may be considered as a measure of energy efficiency, but must be complemented by other indices to form part of a broader measure of urban sustainability. © 2017 The Authors. Published by Elsevier Ltd. Peer-review under responsibility of the organizing committee of the Urban Transitions Conference. Keywords: entropy; sustainability; thermodynamics; city; indicators; exergy; second law. 1. Introduction: The notion of using thermodynamic concepts as a tool for better understanding the problems relating to “sustainability” is not a new one. Ayres and Kneese (1969) [1] are credited with popularising the use of physical conservation principles in economic thinking. Georgescu-Roegen was the first to consider the relationship between the second law of thermodynamics and the degradation of natural resources [2].

Entropy: Is It What We Think It Is and How Should We Teach It?

Entropy: is it what we think it is and how should we teach it? David Sands, Dept. Physics and Mathematics, University of Hull, UK. Institute of Physics, December 2012.

We owe our current view of entropy to Gibbs: “For the equilibrium of any isolated system it is necessary and sufficient that in all possible variations of the system that do not alter its energy, the variation of its entropy shall vanish or be negative.” (Equilibrium of Heterogeneous Substances, 1875). And Maxwell: “We must regard the entropy of a body, like its volume, pressure, and temperature, as a distinct physical property of the body depending on its actual state.” (Theory of Heat, 1891).

Clausius: Was interested in what he called “internal work”, work done in overcoming inter-particle forces; sought to extend the theory of cyclic processes to cover non-cyclic changes; actively looked for an equivalent equation to the central result for cyclic processes, $\oint \frac{dQ}{T} \geq 0$.

Clausius: In modern thermodynamics the sign is negative, because heat must be extracted from the system to restore the original state if the cycle is irreversible. The positive sign arises because of Clausius’ view of heat; not caloric but still a property of a body. The transformation of heat into work was something that occurred within a body, which led to the notion of “equivalence value”, $Q/T$.

Clausius: Invented the concept “disgregation”, $Z$, to extend the ideas to irreversible, non-cyclic processes, $T\,dZ = dI + dW$; inserted disgregation into the First Law, $dQ + dH + T\,dZ = 0$.

Clausius: Changed the sign of $dQ$ (originally $dQ = dH + A\,dL$; $dL = dI + dW$); derived $\int \frac{dH + dQ}{T} + \int dZ \geq 0$; called $\int \frac{dH}{T} + \int dZ$ the entropy of a body.

Lecture 6: Entropy

Matthew Schwartz, Statistical Mechanics, Spring 2019. Lecture 6: Entropy. 1 Introduction. In this lecture, we discuss many ways to think about entropy. The most important and most famous property of entropy is that it never decreases: $\Delta S_{tot} \geq 0$ (1). Here, $\Delta S_{tot}$ means the change in entropy of a system plus the change in entropy of the surroundings. This is the second law of thermodynamics that we met in the previous lecture. There's a great quote from Sir Arthur Eddington from 1927 summarizing the importance of the second law: If someone points out to you that your pet theory of the universe is in disagreement with Maxwell's equations, then so much the worse for Maxwell's equations. If it is found to be contradicted by observation, well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation. Another possibly relevant quote, from the introduction to the statistical mechanics book by David Goodstein: Ludwig Boltzmann who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics. There are many ways to define entropy. All of them are equivalent, although it can be hard to see. In this lecture we will compare and contrast different definitions, building up intuition for how to think about entropy in different contexts. The original definition of entropy, due to Clausius, was thermodynamic.