Instruction Set Architecture of MIPS Processor

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

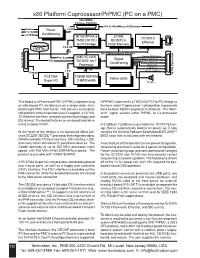

x86 Platform Coprocessor/PrPMC (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC)

[Block diagram: AMD SC2200 "GEODE (tm)" processor on a 32b/33MHz internal PCI bus; TMS2250 PCI-to-PCI bridge to the 32b/33MHz PCI bus (PN1/PN2); 1K100 FPGA; 512KB BIOS/PLA flash memory; 10/100TX Ethernet (RJ45); Compact Flash site (IDE); analog SVGA video; COM 1 and COM 2 (RXD/TXD only); USB ports 1 and 2; PC87364 Super I/O (LPC) with keyboard/mouse and floppy on a 36-pin connector; 128MB SDRAM (16MWx64b); status LEDs; PC speaker; rear I/O on PN4; power conditioning and sequencing.]

This board is a Processor PMC (PrPMC) implementing an x86-based PC architecture on a single-wide, standard height PMC form factor. This delivers a complete x86 platform with comprehensive I/O support, a 10/100-TX Ethernet interface, and disk access (both floppy and IDE drives). The board features an on-board hard drive using Compact Flash.

At the heart of the design is an Advanced Micro Devices SC2200 GEODE™ processor that integrates video, [...]

A PrPMC implements a TMS2250 PCI-to-PCI bridge to the host, and a “Coprocessor” configuration implements back-to-back 16550 compatible COM ports. The “Monarch” signal selects either PrPMC or Co-processor mode.

A 512Kbyte FLASH memory holds the 1K100 PLA image that is automatically loaded on power up. It also contains the General Software Embedded BIOS 2000™ BIOS code that is included with each board.

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING

Hybrid-core Computing
[Chart: application performance/power efficiency (Low to High) against ease of deployment (Difficult to Easy).]
• Convey HC-1: high performance of application-specific hardware; programmability and deployment ease of an x86 server
• Heterogeneous solutions: can be much more efficient; still hard to program
• Multicore solutions: don’t always scale well; parallel programming is hard

Hybrid-Core Computing
• Application-Specific Personalities: extend the x86 instruction set; implement key operations in hardware
• Personality examples: Life Sciences, CAE, Custom, Financial, Oil & Gas
• Applications are built with Convey compilers for the x86-64 ISA and a custom ISA
• Shared Virtual Memory: cache-coherent, shared memory; both ISAs address common memory
*ISA: Instruction Set Architecture

HC-1 Hardware
[Diagram: PCI I/O, Intel chipset, four FPGAs hosting personalities, memory links labeled 8 GB/s and 80 GB/s, cache-coherent shared virtual memory.]

Using Personalities
• Personalities are reloadable instruction sets
• Compiler generates x86 and coprocessor instructions from ANSI standard C/C++ & Fortran
• Executable can run on x86 nodes or Convey Hybrid-Core nodes
[Diagram: C/C++ and Fortran sources enter the Convey Software Development Suite together with personality instruction descriptions; the user specifies the personality at compile time; the output is a Hybrid-Core Executable containing x86-64 and coprocessor instructions; FPGA bitfiles for the personality are loaded onto the Convey HC-1 coprocessor at runtime by the OS.]

SYSTEM ARCHITECTURE
HC-1 Architecture: “Commodity” Intel Server; Convey FPGA-based coprocessor; Direct

Simple Computer Example Register Structure

Simple Computer Example Register Structure
Read pp. 27-85

Simple Computer
• To illustrate how a computer operates, let us look at the design of a very simple computer
• Specifications
1. Memory words are 16 bits in length
2. 2^12 = 4K words of memory
3. Memory can be accessed in one clock cycle
4. Single Accumulator for ALU (AC)
5. Registers are fully connected

Simple Computer (continued)
[Diagram: 4K x 16 memory; MAR (12 bits); MDR (16 bits); X; PC (12 bits); ALU; IR (16 bits); AC.]

Simple Computer Specifications (continued)
6. Control signals
• INCPC: causes PC to increment on clock edge - [PC] + 1 → PC
• ACin: causes output of ALU to be stored in AC
• GMDR2X: get memory data register to X - [MDR] → X
• Read (Write): Read (Write) contents of memory location whose address is in MAR

To implement instructions, the control unit must break down each instruction into a series of register transfers (just as a compiler must break down a C program into a series of machine-level instructions).

Simple Computer (continued)
• Typical microinstruction for reading memory
State: 1; Register Transfer: [[MAR]] → MDR; Control Line(s): Read; Next State: 2
• Timing (signals shown: CLK, Read, MDR, across State 1 and State 2)
- During State 1, Read is set by the control unit
- Data is read from memory
- MDR changes at the beginning of State 2
- Read is completed in one clock cycle

Simple Computer (continued)
• Study: how to write the microinstructions to implement 3 instructions
• ADD address
• ADD (address)
• JMP address

ADD address: add using direct addressing
0000 address: [AC] + [address] → AC
ADD (address): add using indirect addressing
0001 address: [AC] + [[address]] → AC
JMP address
0010 address: address → PC

Instruction Format for Simple Computer
IR: OP (4 bits), AD (12 bits); AD = address
- Two phases to implement instructions: 1.
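
The register transfers listed for ADD address, ADD (address), and JMP address can be traced in software. The following is a minimal Python sketch, not the course's own implementation. The 16-bit word, the 4K memory, the 4-bit OP / 12-bit AD format, and the three opcodes come from the excerpt; the class name `SimpleComputer`, the `step` method, the fetch sequence, and the sample program in `demo` are assumptions made for illustration.

```python
MEM_WORDS = 1 << 12   # 2^12 = 4K words of memory
WORD_MASK = 0xFFFF    # memory words are 16 bits
ADDR_MASK = 0x0FFF    # MAR and PC are 12 bits


class SimpleComputer:
    """Hypothetical model of the accumulator machine in the excerpt."""

    def __init__(self):
        self.mem = [0] * MEM_WORDS
        self.pc = self.ac = self.mar = self.mdr = self.ir = self.x = 0

    def read(self):
        # Read control line: [[MAR]] -> MDR
        self.mdr = self.mem[self.mar]

    def step(self):
        # Fetch phase (assumed sequence): [PC] -> MAR, read, [MDR] -> IR, INCPC
        self.mar = self.pc
        self.read()
        self.ir = self.mdr
        self.pc = (self.pc + 1) & ADDR_MASK

        op = self.ir >> 12          # OP: upper 4 bits of IR
        ad = self.ir & ADDR_MASK    # AD: lower 12 bits of IR

        if op == 0b0000:            # ADD address: [AC] + [address] -> AC
            self.mar = ad
            self.read()
        elif op == 0b0001:          # ADD (address): [AC] + [[address]] -> AC
            self.mar = ad
            self.read()
            self.mar = self.mdr & ADDR_MASK
            self.read()
        elif op == 0b0010:          # JMP address: address -> PC
            self.pc = ad
            return
        else:
            raise ValueError(f"opcode {op:04b} is not defined in this sketch")

        self.x = self.mdr                          # GMDR2X: [MDR] -> X
        self.ac = (self.ac + self.x) & WORD_MASK   # ALU output stored via ACin


def demo():
    cpu = SimpleComputer()
    cpu.mem[0x000] = 0x0010   # ADD 0x010   (direct)
    cpu.mem[0x001] = 0x1011   # ADD (0x011) (indirect)
    cpu.mem[0x002] = 0x2000   # JMP 0x000
    cpu.mem[0x010] = 5
    cpu.mem[0x011] = 0x020    # pointer to the operand
    cpu.mem[0x020] = 7
    for _ in range(3):
        cpu.step()
    return cpu.ac, cpu.pc


if __name__ == "__main__":
    print(demo())
```

The indirect form differs from the direct form only by one extra memory read, which is why the two branches share the final add.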

MIPS IV Instruction Set

MIPS IV Instruction Set
Revision 3.2, September, 1995
Charles Price
MIPS Technologies, Inc. All Rights Reserved

RESTRICTED RIGHTS LEGEND
Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and / or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor / manufacturer is MIPS Technologies, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311.

R2000, R3000, R6000, R4000, R4400, R4200, R8000, R4300 and R10000 are trademarks of MIPS Technologies, Inc. MIPS and R3000 are registered trademarks of MIPS Technologies, Inc.

The information in this document is preliminary and subject to change without notice. MIPS Technologies, Inc. (MTI) reserves the right to change any portion of the product described herein to improve function or design. MTI does not assume liability arising out of the application or use of any product or circuit described herein.

Information on MIPS products is available electronically:
(a) Through the World Wide Web. Point your WWW client to: http://www.mips.com
(b) Through ftp from the internet site “sgigate.sgi.com”. Login as “ftp” or “anonymous” and then cd to the directory “pub/doc”.
(c) Through an automated FAX service: Inside the USA toll free: (800) 446-6477 (800-IGO-MIPS). Outside the USA: (415) 688-4321 (call from a FAX machine)

MIPS Technologies, Inc.

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems

Peng Li (Seagate Technology, Shakopee, MN, 55379), Kevin Gomez (Seagate Technology, Shakopee, MN, 55379), David J. Lilja (University of Minnesota, Twin Cities, Minneapolis, MN, 55455)

Abstract: Energy consumption is a fundamental issue in today’s data centers as data continue growing dramatically. How to process these data in an energy-efficient way becomes more and more important. Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the performance gap between CPUs and main memory) by moving computing closer to the data. However, these methods have not been widely adopted due to high cost and limited performance improvements. In this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state drive (SSD) cost. By pre-processing the data using the SPU, the data that needs to be transferred to host CPUs for further processing [...]

A well-known solution to the memory wall issue is moving computing closer to the data. For example, Gokhale et al [2] proposed a processor-in-memory (PIM) chip by adding a processor into the main memory for computing. Riedel et al [9] proposed an active disk by using the processor inside the hard disk drive (HDD) for computing. With the evolution of other non-volatile memories (NVMs), such as phase-change memory (PCM) and spin-transfer torque (STT)-RAM, researchers also proposed to use these NVMs as the main memory for data-intensive applications [10] to improve the system energy-efficiency.

Advanced Computer Architecture

Computer Architecture MTIT C 103: MIPS ISA and Processor
Compiled by Afaq Alam Khan

Introduction
The MIPS architecture is one example of a RISC architecture. The MIPS architecture is a register architecture: all arithmetic and logical operations involve only registers (or constants that are stored as part of the instructions). The MIPS architecture also includes several simple instructions for loading data from memory into registers and storing data from registers in memory; for this reason, the MIPS architecture is called a load/store architecture.

Register
◦ MIPS has 32 integer registers ($0, $1, ....... $30, $31) and 32 floating point registers ($f0, $f1, ....... $f30, $f31)
◦ Size of each register is 32 bits
The $k0, $k1, $at, and $gp registers are reserved for the OS, the assembler, and global data, and are not used by the programmer.

Miscellaneous Registers
◦ $pc: The $pc or program counter register points to the next instruction to be executed and is automatically updated by the CPU after each instruction is executed. This register is not typically accessed directly by user programs.
◦ $status: The $status or status register is the processor status register and is updated after each instruction by the CPU. This register is not typically accessed directly by user programs.
◦ $cause: The $cause or exception cause register is used by the CPU in the event of an exception or unexpected interruption in program control flow. Examples of exceptions include division by 0, attempting to access an illegal memory address, or attempting to execute an invalid instruction (e.g., trying to execute a data item instead of code).
◦ $hi / $lo: The $hi and $lo registers are used by some specialized multiply and divide instructions.
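
To make the role of $hi and $lo concrete, here is a small Python sketch of how the signed MIPS `mult` and `div` instructions fill the two registers: `mult` leaves the upper 32 bits of the 64-bit product in $hi and the lower 32 bits in $lo, while `div` leaves the quotient in $lo and the remainder in $hi. The helper names (`to_signed32`, `mult`, `div`) are illustrative, and raising `ZeroDivisionError` is a choice made for this sketch only; it is not a statement about what the hardware does on a zero divisor.

```python
MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF


def to_signed32(value):
    """Interpret a 32-bit register pattern as a two's-complement integer."""
    value &= MASK32
    return value - (1 << 32) if value & 0x80000000 else value


def mult(rs, rt):
    """Signed 32x32 multiply; returns the (hi, lo) register contents."""
    product = (to_signed32(rs) * to_signed32(rt)) & MASK64
    return (product >> 32) & MASK32, product & MASK32


def div(rs, rt):
    """Signed divide; returns (hi, lo) = (remainder, quotient).

    The quotient is truncated toward zero, so the remainder takes the
    sign of the dividend.
    """
    dividend, divisor = to_signed32(rs), to_signed32(rt)
    if divisor == 0:
        # Sketch-only behavior; see the note in the lead-in.
        raise ZeroDivisionError("divisor register holds zero")
    quotient = abs(dividend) // abs(divisor)
    if (dividend < 0) != (divisor < 0):
        quotient = -quotient
    remainder = dividend - quotient * divisor
    return remainder & MASK32, quotient & MASK32


if __name__ == "__main__":
    hi, lo = mult(0x00010000, 0x00010000)   # 65536 * 65536 = 2^32
    print(f"mult: hi={hi:#010x} lo={lo:#010x}")
    hi, lo = div(7, -2 & MASK32)            # 7 / -2
    print(f"div:  hi={hi:#010x} lo={lo:#010x}")
```

A program would then copy the results into general-purpose registers with `mfhi` and `mflo`.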

CUDA What Is GPGPU

CUDA
Slides by David Kirk

What is GPGPU?
• General Purpose computation using GPU in applications other than 3D graphics
– GPU accelerates critical path of application
• Data parallel algorithms leverage GPU attributes
– Large data arrays, streaming throughput
– Fine-grain SIMD parallelism
– Low-latency floating point (FP) computation
• Applications – see //GPGPU.org
– Game effects (FX) physics, image processing
– Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting

Previous GPGPU Constraints
• Dealing with graphics API
– Working with the corner cases of the graphics API
• Addressing modes
– Limited texture size/dimension
• Shader capabilities
– Limited outputs
• Instruction sets
– Lack of Integer & bit ops
• Communication limited
– Between pixels
– Scatter a[i] = p
[Diagram: Fragment Program with Input Registers (per thread), Texture, Constants (per Shader, per Context), Temp Registers, Output Registers, FB Memory.]

CUDA
• “Compute Unified Device Architecture”
• General purpose programming model
– User kicks off batches of threads on the GPU
– GPU = dedicated super-threaded, massively data parallel co-processor
• Targeted software stack
– Compute oriented drivers, language, and tools
• Driver for loading computation programs into GPU
– Standalone Driver - Optimized for computation
– Interface designed for compute - graphics free API
– Data sharing with OpenGL buffer objects
– Guaranteed maximum download & readback speeds
– Explicit GPU memory management

Parallel Computing on a GPU
• NVIDIA GPU Computing Architecture
– Via a separate HW interface
– In laptops, desktops, workstations, servers
• 8-series GPUs deliver 50 to 200 GFLOPS on compiled parallel C applications
[Image: GeForce 8800.]

Extended C
• Declspecs
– global, device, shared, local, constant
• Keywords
– threadIdx, blockIdx
• Intrinsics
– __syncthreads ..

    __device__ float filter[N];
    __global__ void convolve (float *image) {
      __shared__ float region[M];
      ...
      region[threadIdx] = image[i];
      __syncthreads()
      ...

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel’s SCC to State-of-the-Art CPUs and GPUs

Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas
Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA

Abstract: Power dissipation and energy consumption are becoming increasingly important architectural design constraints in different types of computers, from embedded systems to large-scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the best use of exponentially increasing numbers of transistors within the power and energy budgets. Intel SCC is an appealing option for future many-core architectures. In this paper, we use various scalable applications to quantitatively compare and analyze the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. These platforms include the Intel Single-Chip Cloud Computer (SCC) many-core, the Intel Core i7 general-purpose multi-core, the Intel Atom low-power processor, and the Nvidia ION2 [...]

A key architectural challenge now is how to support increasing parallelism and scale performance, while being power and energy efficient. There are multiple options on the table, namely “heavy-weight” multi-cores (such as general purpose processors), “light-weight” many-cores (such as Intel’s Single-Chip Cloud Computer (SCC) [1]), low-power processors (such as embedded processors), and SIMD-like highly-parallel architectures (such as General-Purpose Graphics Processing Units (GPGPUs)).

The Intel SCC [1] is a research chip made by Intel Labs to explore future many-core architectures. It has 48 Pentium (P54C) cores in 24 tiles of two cores each.

The Central Processor Unit

Systems Architecture: The Central Processing Unit
The Central Processing Unit – p. 1/11

The Computer System
Layers, from top to bottom: Application; High-level Language; Operating System; Assembly Language; Machine level; Microprogram; Digital logic. The hardware/software interface lies within this stack.

CPU Structure
[Figure: external memory; MAR (Memory Address Register); MBR (Memory Buffer Register); address incrementer; user registers R0 to R15, with R13 / SP, R14 / LR, and R15 / PC; Booth’s multiplier; barrel shifter; IR; control unit; CPSR; 32-bit ALU.]

CPU Registers
Internal Registers
• PC: Program Counter
• IR: Instruction Register
• MAR: Memory Address Register
• MBR: Memory Buffer Register
• CPSR: Current Processor Status Register
Condition Flags
• C: Carry
• Z: Zero
• N: Negative
• V: Overflow
Internal Devices
• ALU: Arithmetic Logic Unit
• CU: Control Unit
• M: Memory Store
• MMU: Mem Management Unit
User Registers
• Rn: Register n, n = 0 . . 15
• SP: Stack Pointer
• LR: Link Register
Note that each CPU has a different set of User Registers.

Current Processor Status Register
• Holds a number of status flags:
N: True if result of last operation is Negative
Z: True if result of last operation was Zero or equal
C: True if an unsigned borrow (Carry over) occurred; value of last bit shifted
V: True if a signed borrow (oVerflow) occurred
• Current execution mode:
User: Normal “user” program execution mode
System: Privileged operating system tasks
Some operations can only be performed in a System mode.

Register Transfer Language

| Notation | Meaning | Example |
|---|---|---|
| NAME | Value of register or unit | |
| ← | Transfer of data | MAR ← PC |
| x: | Guard, only if x true | ⟨cc⟩: MAR ← PC |
| (field) | Specific field of unit (name), bit (n) or range (n:m) | ALU(C) ← 1; R0 ← MBR(0:7) |
| Rn | User Register n | R0 ← MBR |
| num | Decimal number | R0 ← 128 |
| 2_num | Binary number | R1 ← 2_0100 0001 |
| 0xnum | Hexadecimal number | R2 ← 0x40 |
| M(addr) | Memory Access (addr) | MBR ← M(MAR) |
| IR(field) | Specified field of IR | CU ← IR(op-code) |
| ALU(field) | Specified field of the Arithmetic and Logic Unit | ALU(C) ← 1 |
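
As an illustration of the N, Z, C, and V condition flags described for the CPSR, the following Python sketch computes them for a single 32-bit addition. It covers addition only and is a simplified model under that assumption; the function name `add_with_flags` and the dictionary it returns are hypothetical and do not come from the slides.

```python
MASK32 = 0xFFFFFFFF
SIGN_BIT = 0x80000000


def add_with_flags(a, b):
    """Add two 32-bit values and derive the four condition flags."""
    a &= MASK32
    b &= MASK32
    full = a + b                 # unbounded Python integer keeps the carry
    result = full & MASK32

    flags = {
        "N": bool(result & SIGN_BIT),   # result is negative when read as signed
        "Z": result == 0,               # result is zero
        "C": full > MASK32,             # carry out of bit 31 (unsigned overflow)
        # Signed overflow: operands share a sign and the result's sign differs.
        "V": bool(~(a ^ b) & (a ^ result) & SIGN_BIT),
    }
    return result, flags


if __name__ == "__main__":
    for x, y in [(0x7FFFFFFF, 1), (0xFFFFFFFF, 1), (2, 3)]:
        value, flags = add_with_flags(x, y)
        print(f"{x:#010x} + {y:#010x} = {value:#010x} {flags}")
```

The first pair sets V without C, and the second sets C and Z without V, which shows that carry and overflow are independent conditions.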

The Interrupt Program Status Register (IPSR) Contains the Exception Type Number of the Current ISR

Lab #4: POLLING & INTERRUPTS
CENG 255 - iHaz - 2016

Lab4 Objectives
• Recognize different kinds of exceptions.
• Understand the behavior of interrupts.
• Realize the advantages and disadvantages of interrupts relative to polling.
• Explain what polling is and write code for it.
• Explain what an Interrupt Service Routine (ISR) is and write code for it.

Pre-Lab4 Questions (Must be Done Individually Before the Lab.)
1. Describe the major difference between polling and interrupt.
2. Explain what APSR, IPSR and EPSR are.
3. Explain how PRIMASK is used.
4. Explain what an exception vector table is.
5. Explain what PORT Control registers are.

Polling vs. Interrupt
Describe the major difference between polling and interrupt.

Polling
• In polling, the processor continuously polls or tests a given device as to whether it requires attention. The polling is carried out by a polling program that shares processing time with the currently running task. A device indicates it requires attention by setting a bit in its device status register.
• Polling the device usually means reading its status register every so often until the device's status changes to indicate that it has completed the request.

Interrupt
• An interrupt is a signal to the microprocessor from a device that requires attention. Upon receiving an interrupt signal, the microcontroller interrupts whatever it is doing and serves the device. The program which is associated with the interrupt is called the interrupt service routine (ISR) or interrupt handler.
• When ISR is done, the microprocessor continues with its original task as if it had never been interrupted. This is achieved by pushing the contents of all of its internal registers on the
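
The contrast between the two approaches can be sketched in a few lines of Python. This is a software simulation under stated assumptions, not microcontroller code from the lab: the `Device` class, the `READY_BIT` position, the `tick` method that stands in for time passing, and the callback used as an ISR are all hypothetical.

```python
READY_BIT = 0x01   # hypothetical "needs attention" bit in the status register


class Device:
    """Simulated peripheral that becomes ready after a number of ticks."""

    def __init__(self, ticks_until_ready):
        self.status = 0
        self.isr = None                   # optional interrupt service routine
        self._remaining = ticks_until_ready

    def tick(self):
        """Advance simulated time by one step."""
        if self._remaining > 0:
            self._remaining -= 1
            if self._remaining == 0:
                self.status |= READY_BIT  # device sets its status bit
                if self.isr is not None:
                    self.isr(self)        # signal the processor


def poll_until_ready(device, max_polls=1000):
    """Polling: read the status register repeatedly until the bit is set."""
    polls = 0
    while not (device.status & READY_BIT):
        if polls >= max_polls:
            raise TimeoutError("device never became ready")
        polls += 1
        device.tick()
    return polls


def run_with_interrupt(ticks_until_ready, work_items):
    """Interrupt: the main task runs; the ISR fires only when needed."""
    serviced = []

    def isr(dev):
        serviced.append("serviced")
        dev.status &= ~READY_BIT          # acknowledge and clear the request

    device = Device(ticks_until_ready)
    device.isr = isr
    work_done = 0
    for _ in range(work_items):
        work_done += 1                    # main task keeps making progress
        device.tick()
    return work_done, serviced, device.status


if __name__ == "__main__":
    print(poll_until_ready(Device(3)))
    print(run_with_interrupt(2, 5))
```

In the polling version the loop does nothing useful while it waits, whereas in the interrupt version the main loop never inspects the status register at all.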

World's First High-Performance x86 with

World’s First High-Performance x86 with Integrated AI Coprocessor
Linley Spring Processor Conference 2020, April 8, 2020
G Glenn Henry, Chief AI Architect
Dr. Parviz Palangpour, AI Software

Deep Dive into Centaur’s New x86 AI Coprocessor (Ncore)
• Background
• Motivations
• Constraints
• Architecture
• Software
• Benchmarks
• Conclusion
Demonstrated Working Silicon For Video-Analytics Edge Server, Nov, 2019
©2020 Centaur Technology. All Rights Reserved

Centaur Technology Background
• 25-year-old startup in Austin, owned by Via Technologies
• We design, from scratch, low-cost x86 processors
• Everything to produce a custom x86 SoC with ~100 people
• Architecture, logic design and microcode
• Design, verification, and layout
• Physical build, fab interface, and tape-out
• Shipped by IBM, HP, Dell, Samsung, Lenovo…

Genesis of the AI Coprocessor (Ncore)
• Centaur was developing SoC (CHA) with new x86 cores
• Targeted at edge/cloud server market (high-end x86 features)
• Huge inference markets beyond hyperscale cloud, IOT and mobile
• Video analytics, edge computing, on-premise servers
• However, x86 isn’t efficient at inference
• High-performance inference requires external accelerator
• CHA has 44x PCIe to support GPUs, etc.
• But adds cost, power, another point of failure, etc.

Why not integrate a coprocessor?
• Very low cost
• Many components already on SoC (“free” to Ncore): caches, memory, clock, power, package, pins, busses, etc.
• There often is “free” space on complex SoCs due to I/O & pins
• Having many high-performance x86 cores allows flexibility
• The x86 cores can do some of the work, in parallel
• Didn’t have to implement all strange/new functions
• Allows fast prototyping of new things
• For customer: nothing extra to buy

Computer Organization and Architecture, Rajaram & Radhakrishan, PHI

CHAPTER I
CONTENTS: 1.1 INTRODUCTION; 1.2 STORED PROGRAM ORGANIZATION; 1.3 INDIRECT ADDRESS; 1.4 COMPUTER REGISTERS; 1.5 COMMON BUS SYSTEM; SUMMARY; SELF ASSESSMENT
OBJECTIVE: This chapter covers the basic architecture of the computer and the operations needed to explain how it functions. It also shows how those operations are specified with the help of different instructions.

CHAPTER II
CONTENTS: 2.1 REGISTER TRANSFER LANGUAGE; 2.2 REGISTER TRANSFER; 2.3 BUS AND MEMORY TRANSFERS; 2.4 ARITHMETIC MICRO OPERATIONS; 2.5 LOGIC MICRO OPERATIONS; 2.6 SHIFT MICRO OPERATIONS; SUMMARY; SELF ASSESSMENT
OBJECTIVE: This chapter discusses the concept of digital hardware modules. The size and complexity of the system can be varied as requirements dictate. The interconnection of the various modules is explained in the text. The way in which data is transferred from one register to another is called a micro operation, and the different micro operations are explained in the chapter.

CHAPTER III
CONTENTS: 3.1 INTRODUCTION; 3.2 TIMING AND CONTROL; 3.3 INSTRUCTION CYCLE; 3.4 MEMORY-REFERENCE INSTRUCTIONS; 3.5 INPUT-OUTPUT AND INTERRUPT; SUMMARY; SELF ASSESSMENT
OBJECTIVE: Various instructions let us transfer data from one place to another and manipulate it as required. This chapter covers these instructions, how the computer identifies them, what their formats are, and further details about them.

CHAPTER IV
CONTENTS: 4.1 INTRODUCTION; 4.2 ADDRESS SEQUENCING; 4.3 MICROPROGRAM EXAMPLE; 4.4 DESIGN OF CONTROL UNIT; SUMMARY; SELF ASSESSMENT
OBJECTIVE: Various examples of micro programs are discussed in this chapter.