The Prevalence and Impact of School Resource Officers in Connecticut Appendix A

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

11X17 Document

(Gregg Hunt - continued from page 3) Gregg has coached collegiate summer baseball in the New England Collegiate Baseball League for the Torrington Twisters and Manchester Silkworms. He has also coached the Torrington Titans in both the Atlantic Collegiate Baseball League and Futures Collegiate Baseball League. His teams made six appearances in the league finals over 15 years. He currently has the most career wins of any manager in NECBL history. Gregg now serves as a deputy commissioner of the NECBL. Gregg has been a member of the Western Connecticut Soccer Officials Association for 33 years, officiating at several state finals. He has two children, David, a second-year law student at Boston College, and Katie, a junior at the University of South Carolina.

Dedication Award: Paul R. Hoey, Associate Executive Director, CIAC

Paul Hoey, long-time principal of Newington High School, joined the CIAC central office staff in 2004 as associate executive director for the CIAC after a thirty-five year career as a teacher, coach, assistant principal, and principal. Paul is the liaison to the CIAC eligibility committee and the CIAC Eligibility Review Board. As such, he is responsible for processing and monitoring all appeals of Eligibility Committee decisions. He also serves as a consultant and recording secretary to the CIAC Board of Control and to general CIAC committees including the Seasons Limitations, Eligibility

Jackie DiNardo - Coach

Coach Jackie DiNardo is an exceptional woman who has been able to combine her love of sports and people to experience many happy and rewarding events. She has been involved in playing and coaching basketball for most of her life. -

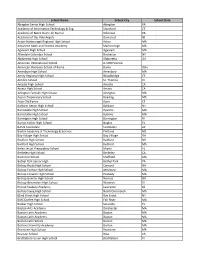

SSP SCHOOL TABLE 0607 1 District Code School Code District

SSP_SCHOOL_TABLE_0607

| District Code | School Code | District Name | School Name | School Type | Grade Range | Total Enrollment | Five Year Enrollment Change | Pct Eligible for Free Reduced Price Meals |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | Andover School District | Andover Elementary School | Traditional/Regular | PK-6 | 341 | -1.7 | 7.0 |
| 2 | 3 | Ansonia School District | Mead School | Traditional/Regular | 3-5 | 574 | -17.4 | 50.3 |
| 2 | 8 | Ansonia School District | Prendergast School | Traditional/Regular | PK-2 | 798 | 23.5 | 49.2 |
| 2 | 51 | Ansonia School District | Ansonia Middle School | Traditional/Regular | 6-8 | 619 | 0.5 | 49.1 |
| 2 | 61 | Ansonia School District | Ansonia High School | Traditional/Regular | 9-12 | 735 | 15.4 | 42.7 |
| 3 | 1 | Ashford School District | Ashford School | Traditional/Regular | PK-8 | 524 | -12.1 | 15.6 |
| 4 | 3 | Avon School District | Roaring Brook School | Traditional/Regular | PK-4 | 757 | -5.8 | 1.7 |
| 4 | 4 | Avon School District | Pine Grove School | Traditional/Regular | K-4 | 599 | -13.9 | 4.0 |
| 4 | 5 | Avon School District | Thompson Brook School | Traditional/Regular | 5-6 | 581 | | 1.5 |
| 4 | 51 | Avon School District | Avon Middle School | Traditional/Regular | 7-8 | 580 | -19.6 | 2.8 |
| 4 | 61 | Avon School District | Avon High School | Traditional/Regular | 9-12 | 989 | 28.4 | 2.0 |
| 5 | 1 | Barkhamsted School District | Barkhamsted Elementary School | Traditional/Regular | K-6 | 336 | 0.6 | 5.7 |
| 7 | 1 | Berlin School District | Richard D. Hubbard School | Traditional/Regular | K-5 | 270 | -13.7 | 4.8 |
| 7 | 4 | Berlin School District | Emma Hart Willard School | Traditional/Regular | PK-5 | 588 | 3.0 | 8.0 |
| 7 | 5 | Berlin School District | Mary E. - | | | | | |

Bulletin Bulletin

BULLETIN

The Connecticut Association of Schools
The Connecticut Interscholastic Athletic Conference

Affiliated with: National Federation of State High School Associations • National Assoc. of Secondary School Principals • National Middle School Assoc. • National Assoc. of Elementary School Principals

VOL. 82 / NO. 8 MAY/JUNE 2008

MANSFIELD EDUCATOR NAMED 2008 NDP

NORMA FISHER-DOIRON, 2008 National Distinguished Principal

CSAL STUDENT LEADERSHIP CONFERENCE A HIT
By David Maloney, Assistant Executive Director

Over 300 participants from twenty high schools descended on Wesleyan University during the last weekend in March for the Connecticut Student Activities Leadership Conference. One participant was a surprising addition. Housatonic Valley Regional High School Principal Dr. Gretchen Foster accompanied her team of student leaders for the two-day event. Foster was not disappointed. "The positive energy and enthusiasm from these leaders kept all of us completely focused and engaged in some wonderful activities. The weekend was an excellent opportunity for me to spend time and listen, not only to my own students, but to other school leaders from urban, suburban and rural schools.

...tially could be life changing for their school communities. Everything got started in electric fashion as national presenter, Keith Hawkins, kicked off the weekend by encouraging students "not to just consider what might be good for themselves and their school, but to take a wider lens and consider what might be good for the greater community." Hawkins instilled the ethos of service right from the "get go" and the students responded enthusiastically to his message. Newly elected state president Whitley Dozier noted, "Keith Hawkins was phenomenal. He motivated us to take stands on several difficult issues." The break-out presenters at the con-

Hartford Foundation Scholarship Program 2012-13 Scholarship

Promising Futures
Hartford Foundation Scholarship Program 2012-13 Scholarship Recipients

contents
Thank You!
Hartford Foundation College Scholarship Program
Alison Marinelli
Hartford Foundation College Scholarship Program Award Recipients
First-time recipients from funds held at the Hartford Foundation
Hartford Foundation Community College Scholarship Program
Graduate student recipients

VOLUNTEER SCHOLARSHIP COMMITTEE
Thank you to the members of the Hartford Foundation’s Volunteer Scholarship Committee for all of their hard work in interviewing finalists and recommending recipients for the Hartford Foundation College Scholarship awards.

Gemma Baker, Germán Bermúdez, Marla Byrnes, Luis Cabán, Britt-Marie Cole-Johnson, Nicholas Criscitelli, Booker DeVaughn, Elena DeVaughn, Kristen Horrigan, Thomas Mahoney, Susan Myers, Chris Pandolfe, Lucille Pelletier, Richard Pinder, Monique Polidoro, Tyler Polk, Elizabeth Reilly, Rosalie Roth, Sue Shepard, Gail Stafford, Kathy Steinwedell, Charles Teale Sr., Dougie Trumble, Anna Webersen, Sherwood Willard

Cover photo: Scholarship recipients with Hartford Foundation president, Linda J. Kelly
Above: Scholarship recipients during the 8th annual scholarship recipient reception
Photos by Jake Koteen Photography

Thank You!
“Higher education is a prerequisite to success in a knowledge-based society and economy,” according to the Lumina Foundation. We at the Hartford Foundation couldn’t agree more.

Gifts to the Hartford Foundation for Public Giving through a scholarship fund acknowledge the work of our students and help them with the rising costs of education. Higher education is necessary for our students to reach the middle class, live a quality life, and to be able to save for

thrive when the educational levels of the residents are increased. A scholarship has a positive snowball effect – the benefits keep growing, long after the award is received.

On behalf of the scholarship recipients, thank you! Your generosity has shown them how much the people of our community care and believe in them. -

List of AOIME Institutions

List of AOIME Institutions

| CEEB | School | City | State | Zip Code |
|---|---|---|---|---|
| 1001510 | Calgary Olympic Math School | Calgary | AB | T2X2E5 |
| 1001804 | ICUC Academy | Calgary | AB | T3A3W2 |
| 820138 | Renert School | Calgary | AB | T3R0K4 |
| 820225 | Western Canada High School | Calgary | AB | T2S0B5 |
| 996056 | WESTMOUNT CHARTER SCHOOL | CALGARY | AB | T2N 4Y3 |
| 820388 | Old Scona Academic | Edmonton | AB | T6E 2H5 |
| C10384 | University of Alberta | Edmonton | AB | T6G 2R3 |
| 1001184 | Vernon Barford School | Edmonton | AB | T6J 2C1 |
| 10326 | ALABAMA SCHOOL OF FINE ARTS | BIRMINGHAM | AL | 35203-2203 |
| 10335 | ALTAMONT SCHOOL | BIRMINGHAM | AL | 35222-4445 |
| C12963 | University of Alabama at Birmingham | Birmingham | AL | 35294 |
| 10328 | Hoover High School | Hoover | AL | 35244 |
| 11697 | BOB JONES HIGH SCHOOL | MADISON | AL | 35758-8737 |
| 11701 | James Clemens High School | Madison | AL | 35756 |
| 11793 | ALABAMA SCHOOL OF MATH/SCIENCE | MOBILE | AL | 36604-2519 |
| 11896 | Loveless Academic Magnet Program High School | Montgomery | AL | 36111 |
| 11440 | Indian Springs School | Pelham | AL | 35124 |
| 996060 | LOUIS PIZITZ MS | VESTAVIA HILLS | AL | 35216 |
| 12768 | VESTAVIA HILLS HS | VESTAVIA HILLS | AL | 35216-3314 |
| C07813 | University of Arkansas - Fayetteville | Fayetteville | AR | 72701 |
| 41148 | ASMSA | Hot Springs | AR | 71901 |
| 41422 | Central High School | Little Rock | AR | 72202 |
| 30072 | BASIS Chandler | Chandler | AZ | 85248-4598 |
| 30045 | CHANDLER HIGH SCHOOL | CHANDLER | AZ | 85225-4578 |
| 30711 | ERIE SCHOOL CAMPUS | CHANDLER | AZ | 85224-4316 |
| 30062 | Hamilton High School | Chandler | AZ | 85248 |
| 997449 | GCA - Gilbert Classical Academy | Gilbert | AZ | 85234 |
| 30157 | MESQUITE HS | GILBERT | AZ | 85233-6506 |
| 30668 | Perry High School | Gilbert | AZ | 85297 |
| 30153 | Mountain Ridge High School | Glendale | AZ | 85310 |
| 30750 | BASIS Mesa - | | | |

Connecticut Public Schools by Reason

Connecticut Public Schools Not Making Adequate Yearly Progress, by Category, for the 2008-09 School Year

As per No Child Left Behind Legislation and Connecticut's K-12 Accountability System. Based on the spring 2009 Connecticut Mastery Test (CMT) and the spring 2009 Connecticut Academic Performance Test (CAPT).

Whole School Math and Reading Academic Achievement
Number of Schools: 198

Ansonia School District: Ansonia High School

Bloomfield School District: Bloomfield High School

Bridgeport School District: Barnum School, Beardsley School, Bryant School, Columbus School, Edison School, Luis Munoz Marin School, Hooker School, Cesar Batalla School, Madison School, Classical Studies Academy, Jettie S. Tisdale School, Read School, Roosevelt School, Waltersville School, Geraldine Johnson School, Cross School, Blackham School, Dunbar School, Curiale School, Bassick High School, Central High School, Harding High School

Bristol School District: Clara T. O'Connell School

Danbury School District: Stadley Rough School, Danbury High School, Alternative Center For Excellence

Derby School District: Irving School

East Hartford School District: Hockanum School, Dr. Franklin H. Mayberry School, Anna E. Norris School, Dr. Thomas S. O'Connell School, Silver Lane School, Robert J. O'Brien School, Dr. John A. Langford School, Sunset Ridge School, East Hartford Middle School, East Hartford High School, Stevens Alternate High School

East Windsor School District: Broad Brook Elementary School

Greenwich School District: Hamilton Avenue School

Groton School District: Catherine Kolnaski Magnet School

Hamden School District: Church Street School, Dunbar Hill School, Helen Street School

Hartford School District: Sand School, Batchelder School, Burns Latino Studies Academy, M. D. Fox ComPACT School, Hooker School, Kennelly School, Kinsella Magnet School, McDonough School, Naylor School, Parkville Community School, M. -

Participating School List 2018-2019

| School Name | School City | School State |
|---|---|---|
| Abington Senior High School | Abington | PA |
| Academy of Information Technology & Eng. | Stamford | CT |
| Academy of Notre Dame de Namur | Villanova | PA |
| Academy of the Holy Angels | Demarest | NJ |
| Acton-Boxborough Regional High School | Acton | MA |
| Advanced Math and Science Academy | Marlborough | MA |
| Agawam High School | Agawam | MA |
| Allendale Columbia School | Rochester | NY |
| Alpharetta High School | Alpharetta | GA |
| American International School | A-1090 Vienna | |
| American Overseas School of Rome | Rome | Italy |
| Amesbury High School | Amesbury | MA |
| Amity Regional High School | Woodbridge | CT |
| Antilles School | St. Thomas | VI |
| Arcadia High School | Arcadia | CA |
| Arcata High School | Arcata | CA |
| Arlington Catholic High School | Arlington | MA |
| Austin Preparatory School | Reading | MA |
| Avon Old Farms | Avon | CT |
| Baldwin Senior High School | Baldwin | NY |
| Barnstable High School | Hyannis | MA |
| Barnstable High School | Hyannis | MA |
| Barrington High School | Barrington | RI |
| Barron Collier High School | Naples | FL |
| BASIS Scottsdale | Scottsdale | AZ |
| Baxter Academy of Technology & Science | Portland | ME |
| Bay Village High School | Bay Village | OH |
| Bedford High School | Bedford | NH |
| Bedford High School | Bedford | MA |
| Belen Jesuit Preparatory School | Miami | FL |
| Berkeley High School | Berkeley | CA |
| Berkshire School | Sheffield | MA |
| Bethel Park Senior High | Bethel Park | PA |
| Bishop Brady High School | Concord | NH |
| Bishop Feehan High School | Attleboro | MA |
| Bishop Fenwick High School | Peabody | MA |
| Bishop Guertin High School | Nashua | NH |
| Bishop Hendricken High School | Warwick | RI |
| Bishop Seabury Academy | Lawrence | KS |
| Bishop Stang High School | North Dartmouth | MA |
| Blind Brook High - | | |

Collecting Plymouth's History, Past and Present

The Terryville Public Library Presents: Collecting Plymouth’s History, Past and Present
A family-oriented town wide scavenger hunt!

How This Works: Families can spend time together exploring Plymouth, Terryville and Pequabuck! Visit at least 5 of the 20 stops, take a selfie at each and email or post it to our Facebook event.

WHERE TO VISIT:

Plymouth Reservoir Recreation Area: The recreation center located on North Street in Plymouth includes the Festa Forest Trails (map available at the Terryville Public Library). The Festa Forest Trails feature caves, stone walls, a natural swing and some beautiful views of fall foliage and the North Street reservoir.

A Time in History Mural: Located on the wall in the lower parking lot of the Terryville Public Library. This mural was made possible by The Main Street Community Foundation, Art in Motion, Plymouth Beautification Committee and kind volunteers. Come inside the library to grab a separate “I Spy” just about the mural itself.

Terryville High School Nature Trail: Located behind the Terryville High School, this blazed nature trail loops around a pond.

Tory Den: Located about one mile down the Tunxis Trail. The Tunxis Trail can be accessed about 1/2 mile north of St. Matthew’s Cemetery on East Plymouth Road. Tory’s Den is a cave that was a hideout for the “Tories” or those loyal to England during the Revolutionary War.

Plymouth Library: Located at 692 Main St Plymouth. The Plymouth Library hours are Monday, Wednesday & Friday 11:00 a.m. - 4:00 p.m., Wednesday evenings 6:00 p.m. - 8:00 p.m.,

Events:

Tour: On Saturday, May 6, 2017 from 11:00 a.m. – 1:00 p.m., Jerry Milne will be on hand to lead visi-

Conard High School Student Handbook 2013/2014

Conard High School Student Handbook 2013/2014

CONARD HIGH SCHOOL
110 Beechwood Rd.
West Hartford, Connecticut 06107
Voicemail (860) 231-5000
Switchboard (860) 231-5050
Absence Voicemail (860) 231-6080
Fax (860) 521-6699
Snowline (860) 233-2334
Website: http://whpsconard.sharpschool.net

As part of our core values and beliefs, we challenge and guide our students to be active learners and productive citizens.

Name: _______________________________________________________
Grade: ______________________________________________________

The information in this book was the best available at press time. Watch for additional information and changes.

©2013 School Datebooks, Inc. All rights reserved. No part of this publication may be reproduced, transmitted, transcribed, stored in any retrieval system, or translated in any form without the written permission of School Datebooks, Inc. 2880 U.S. Hwy. 231 S., Suite 200 • Lafayette, IN 47909 • (765) 471-8883 http://www.schooldatebooks.com • [email protected]

TABLE OF CONTENTS
2013-2014 School Bell Schedule
Academic Honor Code
Academic Honors
Academic Progress Notice
ACT -
Off Grounds
Offer and Distribution by Students
Office Detention
One-And-A-Half-Hour Delay

BULLETIN • National Association of Elementary School Principals

BULLETIN
JUNE 2011

The Connecticut Association of Schools
The Connecticut Interscholastic Athletic Conference

Affiliated with: • National Federation of State High School Associations • National Association of Secondary School Principals • National Middle School Association • National Association of Elementary School Principals

In This Issue:
Update on School in Haiti
“Arts in the Middle” Turns Ten
Connecticut Magnet Schools Receive National Honors
CAS President Kit Bishop Reflects on Arts Education
2011 CIAC Spring Sports Champions

59th ANNUAL BUSINESS MEETING SHORT AND SWEET

Photo: CAS President Kit Bishop (seated) and CAS Executive Director Karissa Niehoff preside over the 59th Annual Meeting.

On May 12th, member school principals and assistant principals gathered at the CAS Central Office for the association's 59th annual meeting. The

hiccup this year. Karissa has taken hold of the driving wheel and kept us on course," said President Bishop. "Not only did we not lose any programs, we somehow managed to expand and improve our services, especially in the area of student activities, thanks to the extraordinary leadership of Dave Maloney."

President Bishop concluded her remarks by praising the hundreds of loyal CAS members who devote countless hours to the association each year. "I want to thank all of you for your dedication to this association through your work on various boards and committees. This organization, even with its outstanding staff, would not exist if it were not for your interest and involvement. We have seen volunteerism decrease in our schools and community organizations because people are busy or apathetic.

ANSONIA PRINCIPAL -

Dear Members of the Public Health Committee

Dear Members of the Public Health Committee,

Typically during testimony, I would explain how this bill impacts my family specifically, but today I want to talk about the numbers. The impetus for this bill, which aims to remove the religious exemption, is not an emergency or a current public health crisis but the possibility of one in the future. The Department of Public Health released vaccination data that shows an increase in the use of the religious exemption from one year to the next and “dangerous pockets” of low vaccination rates. I’ve pored over the numbers like children’s lives depend on it because, frankly, they do. The data I’ve used is from the Department of Public Health immunization surveys and the Department of Education’s student enrollment numbers for the 2018-2019 school year. Unfortunately, there are many flaws in the data:

• The supplied data yields fewer than one child with a religious exemption (RE), or fractions of a child.
• Data was supplied even where enrollment was fewer than 30 students.
• Schools with only Pre-Kindergarten students are used in the averages.

I’m going to focus on the public school numbers since they are available from the State of CT EdSight database. For public schools, the increase from the previous school year to last school year averages one religious exemption per public school. ONE. In the 2018-2019 school year there were 530,612 students in public K-12. With an overall exemption rate of 1.8%, that accounts for 9,551 RE. -
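The enrollment and exemption-rate figures quoted in the testimony excerpt above can be checked with a few lines of arithmetic. The following is a minimal sketch and is not part of the original testimony: the function name `estimated_exemptions` and the variable names are hypothetical, and only the two input figures (530,612 students and a 1.8% rate) come from the text.

```python
# Figures quoted in the testimony excerpt (2018-2019 school year).
public_k12_enrollment = 530_612   # students in public K-12
overall_exemption_rate = 0.018    # 1.8% overall exemption rate


def estimated_exemptions(enrollment: int, rate: float) -> int:
    """Hypothetical helper: exemption count implied by an enrollment and a rate,
    rounded to the nearest whole student."""
    return round(enrollment * rate)


implied_exemptions = estimated_exemptions(public_k12_enrollment, overall_exemption_rate)
print(implied_exemptions)
```

Rounding to a whole student matters here, since one of the data flaws the excerpt lists is rates that imply fractions of a child.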

2012-13 CIAC Handbook

CIAC MISSION STATEMENT

The CIAC believes that interscholastic athletic programs and competition are an integral part of a student’s academic, social, emotional and physical development. The CIAC promotes the academic mission of schools and honorable competition. As such, the CIAC serves as the regulatory agency for high school interscholastic athletic programs and exists to assure quality experiences that reflect high ethical standards and expectations for fairness, equity and sportsmanship for all student-athletes and coaches. The CIAC provides leadership and support for member schools through the voluntary services of dedicated school administrators, athletic directors, coaches and consultants.

CONNECTICUT ASSOCIATION OF SCHOOLS
CONNECTICUT INTERSCHOLASTIC ATHLETIC CONFERENCE
HANDBOOK 2012-2013

30 Realty Drive
Cheshire, CT 06410
Telephone (203) 250-1111 / Fax (203) 250-1345
Web site – www.casciac.org

Member of the National Federation of State High School Associations
Member of the National Association of Secondary School Principals
Member of the New England Council of Secondary Schools
Member of the National Middle School Association
Member of the Council on Standards for International Educational Travel

ATTENTION
CIAC COMMUNICATION PROTOCOL
RULES, REGULATIONS AND INTERPRETATIONS

CIAC member principals and athletic directors are expected to know, understand and communicate the CIAC rules and regulations to their student-athletes, their parents/guardians and to other appropriate school personnel. The CIAC Board of Control is the official body charged with the responsibility of interpreting its rules and regulations. The CIAC Executive Director or his/her designee(s) will provide official decisions, where appropriate, specific to the CIAC Code of Eligibility to high school principals, assistant principals and athletic directors only.