Modeling and Adjustment of THEMIS IR Line Scanner Camera Image Measurements

Total pages: 16

File type: PDF. Size: 1020 KB


Recommended publications


Volcanic History of the Imbrium Basin: a Close-Up View from the Lunar Rover Yutu

Volcanic history of the Imbrium basin: A close-up view from the lunar rover Yutu

Jinhai Zhang (a), Wei Yang (a), Sen Hu (a), Yangting Lin (a,1), Guangyou Fang (b), Chunlai Li (c), Wenxi Peng (d), Sanyuan Zhu (e), Zhiping He (f), Bin Zhou (b), Hongyu Lin (g), Jianfeng Yang (h), Enhai Liu (i), Yuchen Xu (a), Jianyu Wang (f), Zhenxing Yao (a), Yongliao Zou (c), Jun Yan (c), and Ziyuan Ouyang (j)

(a) Key Laboratory of Earth and Planetary Physics, Institute of Geology and Geophysics, Chinese Academy of Sciences, Beijing 100029, China; (b) Institute of Electronics, Chinese Academy of Sciences, Beijing 100190, China; (c) National Astronomical Observatories, Chinese Academy of Sciences, Beijing 100012, China; (d) Institute of High Energy Physics, Chinese Academy of Sciences, Beijing 100049, China; (e) Key Laboratory of Mineralogy and Metallogeny, Guangzhou Institute of Geochemistry, Chinese Academy of Sciences, Guangzhou 510640, China; (f) Key Laboratory of Space Active Opto-Electronics Technology, Shanghai Institute of Technical Physics, Chinese Academy of Sciences, Shanghai 200083, China; (g) The Fifth Laboratory, Beijing Institute of Space Mechanics & Electricity, Beijing 100076, China; (h) Xi’an Institute of Optics and Precision Mechanics, Chinese Academy of Sciences, Xi’an 710119, China; (i) Institute of Optics and Electronics, Chinese Academy of Sciences, Chengdu 610209, China; and (j) Institute of Geochemistry, Chinese Academy of Science, Guiyang 550002, China

Edited by Mark H. Thiemens, University of California, San Diego, La Jolla, CA, and approved March 24, 2015 (received for review February 13, 2015)

We report the surface exploration by the lunar rover Yutu that landed on the young lava flow in the northeastern part of the Mare Imbrium, which is the largest basin on the nearside of the Moon and is filled with several basalt units estimated to date from [...]

[...] flows in Mare Imbrium was obtained only by remote sensing from orbit. On December 14, 2013, Chang’e-3 successfully landed on the young and high-Ti lava flow in the northeastern Mare Imbrium, about 10 km south from the old low-Ti basalt unit (Fig. [...]

Mission to Jupiter

Mission to Jupiter: A History of the Galileo Project. Michael Meltzer.

This book attempts to convey the creativity, leadership, and vision that were necessary for the mission’s success. It is a book about dedicated people and their scientific and engineering achievements. The Galileo mission faced many significant problems. Some of the most brilliant accomplishments and “work-arounds” of the Galileo staff occurred precisely when these challenges arose. Throughout the mission, engineers and scientists found ways to keep the spacecraft operational from a distance of nearly half a billion miles, enabling one of the most impressive voyages of scientific discovery.

Michael Meltzer is an environmental scientist who has been writing about science and technology for nearly 30 years. His books and articles have investigated topics that include designing solar houses, preventing pollution in electroplating shops, catching salmon with sonar and radar, and developing a sensor for examining Space Shuttle engines.

The Galileo mission to Jupiter explored an exciting new frontier, had a major impact on planetary science, and provided invaluable lessons for the design of spacecraft. This mission amassed so many scientific firsts and key discoveries that it can truly be called one of the most impressive feats of exploration of the 20th century. In the words of John Casani, the original project manager of the mission, “Galileo was a way of demonstrating ... just what U.S. technology was capable of doing.” An engineer on the Galileo team expressed more personal sentiments when she said, “I had never been a part of something with such great scope ... To know that the whole world was watching and hoping with us that this would work. We were doing something for all mankind.” When Galileo lifted off from Kennedy Space Center on 18 October 1989, it began an interplanetary voyage that took it to Venus, to [...]

A Summary of the Unified Lunar Control Network 2005 and Lunar Topographic Model B. A. Archinal, M. R. Rosiek, R. L. Kirk, and B

A Summary of the Unified Lunar Control Network 2005 and Lunar Topographic Model. B. A. Archinal, M. R. Rosiek, R. L. Kirk, and B. L. Redding, U. S. Geological Survey, 2255 N. Gemini Drive, Flagstaff, AZ 86001, USA.

Introduction: We have completed a new general unified lunar control network and lunar topographic model based on Clementine images. This photogrammetric network solution is the largest planetary control network ever completed. It includes the determination of the 3-D positions of 272,931 points on the lunar surface and the correction of the camera angles for 43,866 Clementine images, using 546,126 tie point measurements. The solution RMS is 20 µm (= 0.9 pixels) in the image plane, with the largest residual of 6.4 pixels. We are now documenting our solution [1] and plan to release the solution results soon [2].

Previous Networks: In recent years there have been two generally accepted lunar control networks. These are the Unified Lunar Control Network (ULCN) and the Clementine Lunar Control Network (CLCN), both derived by M. Davies and T. Colvin at RAND. The original ULCN was described in the last major publication about a lunar control network [3]. Images for this network are from the Apollo, Mariner 10, and Galileo missions, and Earth-based photographs. The CLCN was derived from Clementine images and measurements on Clementine 750-nm images. The purpose of this network was to determine the geometry for the Clementine Base Map [4]. The geometry of that mosaic was used to produce the Clementine UVVIS digital image model [5] and the Near-Infrared Global Multispectral Map of the Moon from Clementine [6].
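As a quick consistency check on the figures quoted in this summary, the stated RMS of 20 µm (= 0.9 pixels) implies a pixel pitch of roughly 22 µm, and the point, image, and tie point counts imply about two measurements per point and about twelve per image. The sketch below only redoes that arithmetic from the numbers in the excerpt; the variable names are illustrative and do not come from the source, and because the RMS values are rounded the derived pitch is approximate.

```python
# Figures quoted in the ULCN 2005 summary excerpt.
rms_um = 20.0             # solution RMS in the image plane, micrometres
rms_px = 0.9              # the same RMS expressed in pixels
n_points = 272_931        # 3-D points on the lunar surface
n_images = 43_866         # Clementine images with corrected camera angles
n_measurements = 546_126  # tie point measurements

# Derived quantities (illustrative only; inputs are rounded in the source).
pixel_pitch_um = rms_um / rms_px            # micrometres per pixel
meas_per_point = n_measurements / n_points  # average measurements per point
meas_per_image = n_measurements / n_images  # average measurements per image

print(f"implied pixel pitch: {pixel_pitch_um:.1f} um")
print(f"measurements per point: {meas_per_point:.2f}")
print(f"measurements per image: {meas_per_image:.2f}")
```

An average of about two measurements per point means most points are seen in only two images, which is the minimum needed to tie images together.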

The Economic Impact of Physics Research in the UK: Satellite Navigation Case Study

The economic impact of physics research in the UK: Satellite Navigation Case Study. A report for STFC, November 2012.

Contents

- Executive Summary
- 1 The science behind satellite navigation
  - 1.1 Introduction
  - 1.2 The science
  - 1.3 STFC’s role in satellite navigation
  - 1.4 Conclusions
- 2 Economic impact of satellite navigation
  - 2.1 Introduction
  - 2.2 Summary impact of GPS
  - 2.3 The need for Galileo
  - 2.4 Definition of the satellite navigation industry
  - 2.5 Methodological approach
  - 2.6 Upstream direct impacts
  - 2.7 Downstream direct impacts

Exploration of the Moon

The physical exploration of the Moon began when Luna 2, a space probe launched by the Soviet Union, made an impact on the surface of the Moon on September 14, 1959. Prior to that the only available means of exploration had been observation from Earth. The invention of the optical telescope brought about the first leap in the quality of lunar observations. Galileo Galilei is generally credited as the first person to use a telescope for astronomical purposes; having made his own telescope in 1609, he counted the mountains and craters on the lunar surface among his first observations with it. NASA's Apollo program was the first, and to date only, mission to successfully land humans on the Moon, which it did six times. The first landing took place in 1969, when astronauts placed scientific instruments and returned lunar samples to Earth.

Image caption: Apollo 12 Lunar Module Intrepid prepares to descend towards the surface of the Moon. NASA photo.

Early history: The ancient Greek philosopher Anaxagoras (d. 428 BC) reasoned that the Sun and Moon were both giant spherical rocks, and that the latter reflected the light of the former. His non-religious view of the heavens was one cause for his imprisonment and eventual exile.[1] In his little book On the Face in the Moon's Orb, Plutarch suggested that the Moon had deep recesses in which the light of the Sun did not reach and that the spots are nothing but the shadows of rivers or deep chasms.

Highlights in Space 2010

Highlights in Space 2010. Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law. Progress in space science, technology and applications, international cooperation and space law. United Nations Office for Outer Space Affairs, United Nations Office at Vienna. United Nations, New York, 2011.

The United Nations Office for Outer Space Affairs is responsible for promoting international cooperation in the peaceful uses of outer space and assisting developing countries in using space science and technology. United Nations Office for Outer Space Affairs, P. O. Box 500, 1400 Vienna, Austria. Tel: (+43-1) 26060-4950. Fax: (+43-1) 26060-5830. URL: www.unoosa.org

Cooperating organizations:

- International Astronautical Federation, 94 bis, Avenue de Suffren, 75015 Paris, France. Tel: +33 1 45 67 42 60. Fax: +33 1 42 73 21 20. URL: www.iafastro.com
- Committee on Space Research, c/o CNES, 2 place Maurice Quentin. Tel. +33 1 44 76 75 10. Fax. +33 1 44 76 74 37. URL: http://cosparhq.cnes.fr
- International Institute of Space Law, 94 bis, Avenue de Suffren, 75015 Paris, France. URL: www.iislweb.com

United Nations publication. Printed in Austria. USD 15. Sales No. E.11.I.3. ISBN 978-92-1-101236-1. ST/SPACE/57. V.11-80239, January 2011, 775.

Space Sector Brochure

SPACE: REVOLUTIONIZING THE WAY TO SPACE

SPACECRAFT TECHNOLOGIES. Moog is a proven leader in components, subsystems, and systems for spacecraft of all sizes, from smallsats to GEO spacecraft. Moog has been successfully providing spacecraft controls, in-space propulsion, and major subsystems for science, military, and commercial operations for more than 60 years.

PROPULSION. Moog provides components and subsystems for cold gas, chemical, and electric propulsion and designs, develops, and manufactures complete chemical propulsion systems, including tanks, to accelerate the spacecraft for orbit-insertion, station keeping, or attitude control. Moog makes thrusters from <1N to 500N to support the propulsion requirements for small to large spacecraft.

AVIONICS. Moog is a proven provider of high performance and reliable space-rated avionics hardware and software for command and data handling, power distribution, payload processing, memory, GPS receivers, motor controllers, and onboard computing.

POWER SYSTEMS. Moog leverages its proven spacecraft avionics and high-power control systems to supply hardware for telemetry, as well as solar array and battery power management and switching. Applications include bus line power to valves, motors, torque rods, and other end effectors. Moog has developed products for Power Management and Distribution (PMAD) Systems, such as high power DC converters, switching, and power stabilization.

MECHANISMS. Moog has produced spacecraft motion control products for more than 50 years, dating back to the historic Apollo and Pioneer programs. Today, we offer rotary, linear, and specialized mechanisms for spacecraft motion control needs. Moog is a world-class manufacturer of solar array drives, propulsion positioning gimbals, electric propulsion gimbals, antenna positioner mechanisms, docking and release mechanisms, and specialty payload positioners.

LUNAR REGOLITH PROPERTIES CONSTRAINED by LRO DIVINER and CHANG'e 2 MICROWAVE RADIOMETER DATA. J. Feng1, M. A. Siegler1, P. O

50th Lunar and Planetary Science Conference 2019 (LPI Contrib. No. 2132), 3176.pdf

LUNAR REGOLITH PROPERTIES CONSTRAINED BY LRO DIVINER AND CHANG’E 2 MICROWAVE RADIOMETER DATA. J. Feng1, M. A. Siegler1, P. O. Hayne2, D. T. Blewett3, 1Planetary Science Institute (1700 East Fort Lowell, Suite 106 Tucson, AZ 85719-2395), 2University of Colorado, Boulder. 3Johns Hopkins Univ. Applied Physics Lab, Maryland.

Introduction: The geophysical properties of the lunar regolith have been well-studied, but many uncertainties remain about their in-situ nature. Diviner Lunar Radiometer Experiment onboard LRO [1] is an instrument measuring the surface bolometric temperature of the regolith. It has been systematically mapping the Moon since July 5, 2009 at solar and infrared wavelengths covering a full range of latitudes, longitudes, local times and seasons. The Microwave Radiometer (MRM) carried by Chang’e 2 (CE-2) was used to derive the brightness temperature of the moon in frequen[...]

[...] results of the thermal model (which includes layer numbers, layer thickness, temperature profile and density profile) are input parameters for the microwave model. In contrast to [2, 3], we update the thermal parameters to match the surface temperature at noon in the equatorial region and diurnal temperature variation in high latitude (Fig. 1). The best fit of the data shows that for most highlands the regolith has a bond albedo of 0.09 at normal solar incidence, and the bond albedo at arbitrary solar incidence θ is represented by $A = 0.09 + 0.04\,(4\theta/\pi)^{3} + 0.05\,(2\theta/\pi)^{8}$.
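The incidence-angle dependence of the bond albedo quoted in this abstract can be evaluated directly. The following is a minimal sketch that assumes the exponents 3 and 8 (the flattened digits after each bracket in the extracted text) and takes the incidence angle in degrees, using the identities 4θ/π = θ°/45 and 2θ/π = θ°/90. The function name and parameter names are hypothetical, chosen here for illustration.

```python
def bond_albedo(theta_deg, a0=0.09, a=0.04, b=0.05):
    """Bond albedo at solar incidence angle theta_deg (degrees, 0 to 90).

    Evaluates A = a0 + a*(4*theta/pi)**3 + b*(2*theta/pi)**8 with theta in
    radians, rewritten in degrees as (theta_deg/45) and (theta_deg/90).
    Coefficient defaults are the highland values quoted in the abstract.
    """
    if not 0.0 <= theta_deg <= 90.0:
        raise ValueError("incidence angle must lie between 0 and 90 degrees")
    return a0 + a * (theta_deg / 45.0) ** 3 + b * (theta_deg / 90.0) ** 8


if __name__ == "__main__":
    # Albedo rises slowly at first, then steeply toward grazing incidence.
    for angle in (0, 30, 45, 60, 75, 90):
        print(f"theta = {angle:2d} deg  ->  A = {bond_albedo(angle):.4f}")
```

At normal incidence the expression reduces to the quoted value of 0.09, and the cubic and eighth-power terms only become significant at large incidence angles.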

Albert van Helden: Huygens’s Ring, Cassini’s Division & Saturn’s Children

Albert van Helden. Huygens’s Ring, Cassini’s Division & Saturn’s Children. Dibner Library Lecture. Text Copyright ©2006 Albert van Helden. All rights reserved.

Albert van Helden is Professor Emeritus at Rice University and the University of Utrecht, Netherlands, where he resides and teaches on a regular basis. He received his B.S. and M.S. from Stevens Institute of Technology, M.A. from the University of Michigan and Ph.D. from Imperial College, University of London. Van Helden is a renowned author who has published respected books and articles about the history of science, including the translation of Galileo’s “Sidereus Nuncius” into English. He has numerous periodical contributions to his credit and has served on the editorial boards of Air and Space, 1990-present; Journal for the History of Astronomy, 1988-present; Isis, 1989–1994; and Tractrix, 1989–1995. During his tenure at Rice University (1970–2001), van Helden was instrumental in establishing the “Galileo Project,” a Web-based source of information on the life and work of Galileo Galilei and the science of his time. A native of the Netherlands, Professor van Helden returned to his homeland in 2001 to join the faculty of Utrecht University.

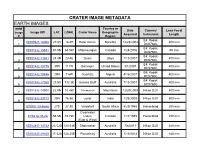

Crater Image Metadata

CRATER IMAGE METADATA: EARTH IMAGES

| Image ID# | LAT. | LONG. | Crater Name | Country or Geographic Region | Date Acquired | Camera/Instrument | Lens Focal Length | BMM Image # |
|---|---|---|---|---|---|---|---|---|
| ISS006-E-16068 | 27.8S | 16.4E | Roter Kamm | Namibia | 12/28/2002 | E4: Kodak DCS760C | 400 mm | 1 |
| ISS012-E-15881 | 51.5N | 68.5W | Manicouagan | Canada | 1/24/2006 | E4: Kodak DCS760C | 50 mm | 2 |
| ISS014-E-11841 | 24.4N | 24.4E | Oasis | Libya | 1/13/2007 | E4: Kodak DCS760C | 400 mm | 3 |
| ISS014-E-15775 | 35N | 111W | Barringer | United States | 3/1/2007 | E4: Kodak DCS760C | 400 mm | 4 |
| ISS014-E-19496 | 29N | 7.6W | Ouarkziz | Algeria | 4/16/2007 | E4: Kodak DCS760C | 800 mm | 5 |
| ISS015-E-17360 | 23.9S | 132.3E | Gosses Bluff | Australia | 7/13/2007 | E4: Kodak DCS760C | 400 mm | 6 |
| ISS018-E-14908 | 22.9N | 10.4W | Tenoumer | Mauritania | 12/20/2008 | Nikon D2X | 800 mm | 7 |
| ISS018-E-23713 | 20N | 76.5E | Lonar | India | 1/28/2009 | Nikon D2X | 800 mm | 8 |
| STS51I-33-56AA | 27S | 27.3E | Vredefort | South Africa | 8/29/1985 | Hasselblad | 250 mm | |
| STS61A-35-86 | 56.5N | 74.7W | Clearwater Lakes (East & West) | Canada | 11/1/1985 | Hasselblad | 250 mm | |
| ISS028-E-14782 | 25.52S | 120.53E | Shoemaker | Australia | 7/6/2011 | Nikon D2X | 200 mm | |
| ISS034-E-29105 | 17.32S | 128.25E | Piccaninny | Australia | 1/15/2013 | Nikon D2X | 180 mm | |

CRATER IMAGE METADATA: MARS IMAGES

| Image ID# | LAT. | LONG. | Crater Name | Geographic Region | *Date or Approx. YR Acquired | Camera/Instrument | Mission Name | BMM Image # |
|---|---|---|---|---|---|---|---|---|
| PIA14290 | 5.4S | 137.8E | Gale | Aeolis Mensae | 2000's | THEMIS IR | Odyssey | |
| THEMIS IR MOSAIC | 14.5S | 175.4E | Gusev | Aeolis Quadrangle | 2000's | THEMIS IR | Odyssey | |
| Colorized MOLA | 42S | 67E | Hellas Basin | Hellas Planitia | 2000's | Mars Orbiter Laser Altimeter (MOLA) | Global Surveyor | |

Viking Orbiter Margaritifer Visual [...]

Glossary Nov 08

NAVIGATION ACRONYMS, ABBREVIATIONS AND DEFINITIONS. Collected by David Broughton. These may be reproduced freely, but please inform the Royal Institute of Navigation of any amendments or additions via e-mail or fax (+44 20 7591 3131).

- ‘: minute (= 1°/60)
- 112: European emergency phone number (= 911 in US, 999 in UK)
- 1st4sport: Awarding body of the NCF Qualifications
- 2-AFC: two-alternative forced-choice (procedure used in animal studies)
- 2dRMS: twice distance root-mean-square. Twice the root-mean-square of the radial distances from the true position to the observed positions obtained from a number of trials. Should contain 95% of observed positions.
- 2SOPS: 2nd Space Operations Sqn (of 50th Space Wing) (USAF). Exercise command and control of GPS satellite constellation.
- 3DNC: Three-dimensional digital navigational chart
- 3G: third generation (of mobile phone technology or telematics). There is also 2G, 2.5G and 4G for phones.
- 3GPP: 3G Partnership Project
- 3GT: 3rd Generation Telematics (ERTICO and OSGi project)
- 802.11: a wireless interconnection standard with a range of 50 metres. Also known as WiFi. An IEEE standard.
- 911: US emergency phone number (= 112 in Europe, 999 in UK)
- a: atto (SI unit multiplier of $10^{-18}$)
- a: semi-major axis of ellipse (for WGS-84 = 6,378,137 m)
- A-band: NATO radar band 0-250 MHz = > 1.2 m
- A-GNSS (AGNSS): Assisted GNSS
- A-GPS (AGPS): Assisted GPS
- A-NPA: Advance Notice of Proposed Amendment (EU JAA)
- A-NPRM: Advance Notice of Proposed Rulemaking (FAA, FCC etc)
- A-S: anti-spoofing. In GPS the use of encryption to prevent a P-Code receiver locking to a bogus P-Code transmission.
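The 2dRMS definition in the glossary above (twice the root-mean-square of the radial distances from the true position to the observed positions) translates directly into a short computation. This is a minimal sketch assuming 2-D positions given as (x, y) pairs in a common planar unit such as metres; the function name `two_drms` and the sample fixes are hypothetical.

```python
import math


def two_drms(true_pos, observed):
    """Return 2dRMS: twice the RMS radial distance of observed fixes from true_pos.

    true_pos is an (x, y) pair; observed is a non-empty sequence of (x, y) pairs
    in the same planar units.
    """
    if not observed:
        raise ValueError("at least one observed position is required")
    tx, ty = true_pos
    # Squared radial distance of each observed fix from the true position.
    squared = [(x - tx) ** 2 + (y - ty) ** 2 for x, y in observed]
    return 2.0 * math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical fixes, each exactly 5 units from the true position.
    fixes = [(3.0, 4.0), (-3.0, -4.0), (0.0, 5.0), (5.0, 0.0)]
    print(two_drms((0.0, 0.0), fixes))
```

The glossary's remark that the 2dRMS circle should contain 95% of observed positions is a statistical expectation about the error distribution, not something this calculation enforces.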

Impact Craters) Into the Subsurface

SUBSURFACE MINERAL HETEROGENEITY IN THE MARTIAN CRUST AS SEEN BY THE THERMAL EMISSION IMAGING SYSTEM (THEMIS): VIEWS FROM NATURAL “WINDOWS” (IMPACT CRATERS) INTO THE SUBSURFACE. L. L. Tornabene1, J. E. Moersch1, H. Y. McSween Jr.1, J. A. Piatek1 and P. R. Christensen2; 1Department of Earth and Planetary Sciences, University of Tennessee, Knoxville, Tennessee 37996-1410, 2Department of Geological Sciences, Arizona State University, Tempe, Arizona 85287–6305, USA.

Introduction and Background: Impact craters have been effectively used as a “natural drill” into the crust of the moon, the Earth and on Mars, giving us a glimpse of the mineral and lithologic compositions that are otherwise not exposed or present on surfaces. A lunar study by Tompkins and Pieters [1] has demonstrated that Lunar Clementine data could be used to show that numerous craters on the Moon excavated distinct compositions within both the central uplift and craters walls/terraces of several complex craters with respect to the surrounding lunar surface composition. Later, Tornabene et al. [2] demonstrated how Haughton impact structure was an excellent terrestrial analog for demonstrating the utility of using craters for studying both near-subsurface and the shallow crust of Mars. Using ASTER Thermal infrared (TIR) data, as an analog for THEMIS TIR, three subsurface units were distinguished within the structure as units that were excavated and uplifted by the impact event. These units, if not for the regional tilt and erosion, would otherwise not be presently exposed if not for the Haughton event. Meanwhile, recent studies on Mars by the Opportunity rover [3] revealed our first clear view of outcropping bedrock of sedimentary layers within the walls of Eagle and Endurance craters.