Interactive Learning to Stimulate the Brain's Visual Center and To

Recommended publications

Compare and Contrast Two Models Or Theories of One Cognitive Process with Reference to Research Studies

The following sample is for the learning objective: Compare and contrast two models or theories of one cognitive process with reference to research studies. What is the question asking for? * A clear outline of two models of one cognitive process. The cognitive process may be memory, perception, decision-making, language or thinking. * Research is used to support the models as described. The research does not need to be outlined in a lot of detail, but understanding of the role of research in supporting the models should be apparent. * Both similarities and differences of the two models should be clearly outlined. Sample response The theory of memory is studied scientifically and several models have been developed to help describe and potentially explain how memory works. Two models that attempt to describe how memory works are the Multi-Store Model of Memory, developed by Atkinson & Shiffrin (1968), and the Working Memory Model of Memory, developed by Baddeley & Hitch (1974). [Margin note: The cognitive process (memory) and two models are clearly identified.] The Multi-Store Model explains that all memory is taken in through our senses; this is called sensory input. This information enters our sensory memory, where, if it is attended to, it will pass to short-term memory. If no attention is paid to it, it is displaced. Short-term memory is limited in duration and capacity. According to Miller, STM can hold only 7 plus or minus 2 pieces of information. [Margin note: Research.] Short-term memory lasts for six to twelve seconds. When information in the short-term memory is rehearsed, it enters the long-term memory store in a process called “encoding.” When we recall information, it is retrieved from LTM and moved back into STM. [Margin note: A satisfactory description of …] -

Types of Memory and Models of Memory

Inf1: Intro to Cognitive Science. Types of memory and models of memory. Alyssa Alcorn, Helen Pain and Henry Thompson. March 21, 2012, Intro to Cognitive Science. 1. In the lecture today: A review of short-term memory, and how much stuff fits in there anyway. 1. Whether or not the number 7 is magic 2. Working memory 3. The Baddeley-Hitch model of memory. http://www.cartoonstock.com/directory/s/short_term_memory.asp 2. Review of Short-Term Memory (STM): Short-term memory (STM) is responsible for storing small amounts of material over short periods of time. A short time really means a SHORT time: up to several seconds. Anything remembered for longer than this time is classified as long-term memory and involves different systems and processes. Note that this is different from what we mean by short-term memory in everyday speech. If someone cannot remember what you told them five minutes ago, this is actually a problem with long-term memory. While much STM research discusses verbal or visuo-spatial information, the distinction of short vs. long-term applies to other types of stimuli as well. 3. Memory span and magic numbers: The amount of information varies with the individual’s memory span, i.e. the largest number of items (e.g. digits) that can be immediately repeated back in correct order. Classic research by George Miller (1956) described the apparent limits of short-term memory span in one of the most-cited papers in all of psychology. -

Handbook of Metamemory and Memory Evolution of Metacognition

Handbook of Metamemory and Memory. John Dunlosky, Robert A. Bjork. Publisher: Routledge. Evolution of Metacognition, Janet Metcalfe. Published online on: 28 May 2008. How to cite: Janet Metcalfe. 28 May 2008, Evolution of Metacognition from: Handbook of Metamemory and Memory, Routledge. https://www.routledgehandbooks.com/doi/10.4324/9780203805503.ch3 Introduction The importance of metacognition, in the evolution of human consciousness, has been emphasized by thinkers going back hundreds of years. While it is clear that people have metacognition, even when it is strictly defined as it is here, whether any other animals share this capability is the topic of this chapter. -

How Trauma Impacts Four Different Types of Memory

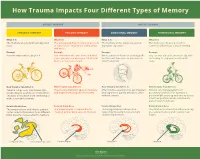

How Trauma Impacts Four Different Types of Memory

| | Semantic memory (explicit) | Episodic memory (explicit) | Emotional memory (implicit) | Procedural memory (implicit) |
| --- | --- | --- | --- | --- |
| What It Is | The memory of general knowledge and facts. | The autobiographical memory of an event or experience – including the who, what, and where. | The memory of the emotions you felt during an experience. | The memory of how to perform a common task without actively thinking |
| Example | You remember what a bicycle is. | You remember who was there and what street you were on when you fell off your bicycle in front of a crowd. | When a wave of shame or anxiety grabs you the next time you see your bicycle after the big fall. | You can ride a bicycle automatically, without having to stop and recall how it’s done. |
| How Trauma Can Affect It | Trauma can prevent information (like words, images, sounds, etc.) from different parts of the brain from combining to make a semantic memory. | Trauma can shut down episodic memory and fragment the sequence of events. | After trauma, a person may get triggered and experience painful emotions, often without context. | Trauma can change patterns of procedural memory. For example, a person might tense up and unconsciously alter their posture, which could lead to pain or even numbness. |
| Related Brain Area | The temporal lobe and inferior parietal cortex collect information from different | The hippocampus is responsible for creating and recalling episodic memory. | The amygdala plays a key role in | The striatum is associated with producing |

Memory-Modulation: Self-Improvement Or Self-Depletion?

HYPOTHESIS AND THEORY, published: 05 April 2018, doi: 10.3389/fpsyg.2018.00469. Memory-Modulation: Self-Improvement or Self-Depletion? Andrea Lavazza*, Neuroethics, Centro Universitario Internazionale, Arezzo, Italy. Edited by: Rossella Guerini, Università degli Studi Roma Tre, Italy. Reviewed by: Tillmann Vierkant, University of Edinburgh, United Kingdom; Antonella Marchetti, Università Cattolica del Sacro Cuore. Autobiographical memory is fundamental to the process of self-construction. Therefore, the possibility of modifying autobiographical memories, in particular with memory-modulation and memory-erasing, is a very important topic both from the theoretical and from the practical point of view. The aim of this paper is to illustrate the state of the art of some of the most promising areas of memory-modulation and memory-erasing, considering how they can affect the self and the overall balance of the “self and autobiographical memory” system. Indeed, different conceptualizations of the self and of personal identity in relation to autobiographical memory are what makes memory-modulation and memory-erasing more or less desirable. Because of the current limitations (both practical and ethical) to interventions on memory, I can only sketch some hypotheses. However, it can be argued that the choice to mitigate painful memories (or edit memories for other reasons) is somehow problematic, from an ethical point of view, according to some of the theories of the self and personal identity in relation to autobiographical memory, in particular for the so-called narrative theories of personal identity, chosen here as the main case of study. Other conceptualizations of the “self and autobiographical memory” system, namely the constructivist theories, do not have this sort of critical concerns. -

CHAPTER 6: MEMORY STRATEGIES: MNEMONIC DEVICES in Order to Remember Information, You First Have to Find It Somewhere in Your Memory

CHAPTER 6: MEMORY STRATEGIES: MNEMONIC DEVICES In order to remember information, you first have to find it somewhere in your memory. To be successful in college you need to use active learning strategies that help you store information and retrieve it. Mnemonic devices can help you do that. Mnemonics are techniques for remembering information that is otherwise difficult to recall. The idea behind using mnemonics is to encode complex information in a way that is much easier to remember. Two common types of mnemonic devices are acronyms and acrostics. Acronyms Acronyms are words made up of the first letters of other words. As a mnemonic device, acronyms help you remember the first letters of items in a list, which in turn helps you remember the list itself. Examples The following are examples of popular mnemonic acronyms: HOMES: Huron, Ontario, Michigan, Erie, Superior (names of the Great Lakes); FACE: the letters of the treble clef notes in the spaces, from bottom to top, spell “FACE”; ROY G. BIV: Red, Orange, Yellow, Green, Blue, Indigo, Violet (colors of the spectrum); MRS GREN: Movement, Respiration, Sensitivity, Growth, Reproduction, Excretion, Nutrition (common attributes of living things). Create Your Own Acronym Now think of a few words you need to remember. These could be related to your studies, your work, or just a subject of interest. Five steps to creating acronyms are: 1. List the information you need to learn in meaningful phrases. 2. Circle or underline a keyword in each phrase. 3. Write down the first letter of each keyword. -
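The letter-collecting step described above (take the first letter of each keyword) can be sketched in a few lines of Python. This is only an illustration: the function name `make_acronym` is hypothetical and does not come from the chapter, and the keyword lists are the HOMES and MRS GREN examples quoted in the excerpt.

```python
def make_acronym(keywords):
    """Join the first letter of each keyword into an uppercase string.

    Hypothetical helper for illustration only; it covers just the
    "write down the first letter of each keyword" step.
    """
    return "".join(word[0].upper() for word in keywords if word)


# Keyword lists taken from the examples in the excerpt.
great_lakes = ["Huron", "Ontario", "Michigan", "Erie", "Superior"]
living_things = ["Movement", "Respiration", "Sensitivity", "Growth",
                 "Reproduction", "Excretion", "Nutrition"]

print(make_acronym(great_lakes))    # HOMES
print(make_acronym(living_things))  # MRSGREN
```

The sketch keeps the keywords in the order given, so any rearranging needed to form a pronounceable word is left to the reader.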

Learning Styles and Memory

Learning Styles and Memory Sandra E. Davis Auburn University Abstract The purpose of this article is to examine the relationship between learning styles and memory. Two learning styles were addressed in order to increase the understanding of learning styles and how they are applied to the individual. Specifically, memory phases and layers of memory will also be discussed. In conclusion, an increased understanding of the relationship between learning styles and memory seems to help the learner gain a better understanding of how to maximize the benefits of the preferred learning style and how to retain the information in long-term memory. Introduction Learning styles, as identified in the Perceptual Learning Styles Theory, and memory, as identified in the Memletics Accelerated Learning, will be overviewed. Factors involving information being retained into memory will then be discussed. This article will explain how the relationship between learning styles and memory can help the learner maximize his or her learning potential. Learning Styles The Perceptual Learning Styles Theory lists seven different styles. They are print, aural, interactive, visual, haptic, kinesthetic, and olfactory (Institute for Learning Styles Research, 2003). This theory says that most of what we learn comes from our five senses. The Perceptual Learning Styles Theory defines the seven learning styles as follows: The print learning style individual prefers to see the written word (Institute for Learning Styles Research, 2003). They like taking notes, reading books, and seeing the written word, either on a chalkboard or through a media presentation such as Microsoft PowerPoint. The aural learner refers to listening (Institute for Learning Styles Research, 2003). -

Memory Formation, Consolidation and Transformation

Neuroscience and Biobehavioral Reviews 36 (2012) 1640–1645. Journal homepage: www.elsevier.com/locate/neubiorev. Review: Memory formation, consolidation and transformation. L. Nadel (a,∗), A. Hupbach (b), R. Gomez (a), K. Newman-Smith (a). (a) Department of Psychology, University of Arizona, United States; (b) Department of Psychology, Lehigh University, United States. Article history: Received 29 August 2011; received in revised form 20 February 2012; accepted 2 March 2012. Keywords: Memory consolidation; Transformation; Reconsolidation. Abstract: Memory formation is a highly dynamic process. In this review we discuss traditional views of memory and offer some ideas about the nature of memory formation and transformation. We argue that memory traces are transformed over time in a number of ways, but that understanding these transformations requires careful analysis of the various representations and linkages that result from an experience. These transformations can involve: (1) the selective strengthening of only some, but not all, traces as a function of synaptic rescaling, or some other process that can result in selective survival of some traces; (2) the integration (or assimilation) of new information into existing knowledge stores; (3) the establishment of new linkages within existing knowledge stores; and (4) the updating of an existing episodic memory. We relate these ideas to our own work on reconsolidation to provide some grounding to our speculations that we hope will spark some new thinking in an area that is in need of transformation. -

The Three Amnesias

The Three Amnesias Russell M. Bauer, Ph.D. Department of Clinical and Health Psychology College of Public Health and Health Professions Evelyn F. and William L. McKnight Brain Institute University of Florida PO Box 100165 HSC Gainesville, FL 32610-0165 USA Bauer, R.M. (in press). The Three Amnesias. In J. Morgan and J.E. Ricker (Eds.), Textbook of Clinical Neuropsychology. Philadelphia: Taylor & Francis/Psychology Press. During the past five decades, our understanding of memory and its disorders has increased dramatically. In 1950, very little was known about the localization of brain lesions causing amnesia. Despite a few clues in earlier literature, it came as a complete surprise in the early 1950’s that bilateral medial temporal resection caused amnesia. The importance of the thalamus in memory was hardly suspected until the 1970’s and the basal forebrain was an area virtually unknown to clinicians before the 1980’s. An animal model of the amnesic syndrome was not developed until the 1970’s. The famous case of Henry M. (H.M.), published by Scoville and Milner (1957), marked the beginning of what has been called the “golden age of memory”. Since that time, experimental analyses of amnesic patients, coupled with meticulous clinical description, pathological analysis, and, more recently, structural and functional imaging, have led to a clearer understanding of the nature and characteristics of the human amnesic syndrome. The amnesic syndrome does not affect all kinds of memory, and, conversely, memory-disordered patients without full-blown amnesia (e.g., patients with frontal lesions) may have impairment in those cognitive processes that normally support remembering. -

Models of Memory

To be published in H. Pashler & D. Medin (Eds.), Stevens’ Handbook of Experimental Psychology, Third Edition, Volume 2: Memory and Cognitive Processes. New York: John Wiley & Sons, Inc. MODELS OF MEMORY Jeroen G.W. Raaijmakers, University of Amsterdam; Richard M. Shiffrin, Indiana University. Introduction Sciences tend to evolve in a direction that introduces greater emphasis on formal theorizing. Psychology generally, and the study of memory in particular, have followed this prescription: The memory field has seen a continuing introduction of mathematical and formal computer simulation models, today reaching the point where modeling is an integral part of the field rather than an esoteric newcomer. Thus anything resembling a comprehensive treatment of memory models would in effect turn into a review of the field of memory research, and considerably exceed the scope of this chapter. We shall deal with this problem by covering selected approaches that introduce some of the main themes that have characterized model development. This selective coverage will emphasize our own work perhaps somewhat more than would have been the case for other authors, but we are far more familiar with our models than some of the alternatives, and we believe they provide good examples of the themes that we wish to highlight. The earliest attempts to apply mathematical modeling to memory probably date back to the late 19th century when pioneers such as Ebbinghaus and Thorndike started to collect empirical data on learning and memory. Given the obvious regularities of learning and forgetting curves, it is not surprising that the question was asked whether these regularities could be captured by mathematical functions. -

Working Memory Training: Assessing the Efficiency of Mnemonic Strategies

entropy Article Working Memory Training: Assessing the Efficiency of Mnemonic Strategies. Serena Di Santo (1,†), Vanni De Luca (2), Alessio Isaja (1) and Sara Andreetta (1,*). (1) Cognitive Neuroscience, Scuola Internazionale Superiore di Studi Avanzati, I-34136 Trieste, Italy; (2) Scuola Peripatetica d’Arte Mnemonica (S.P.A.M.), 10125 Turin, Italy. * Corresponding author. † Current address: Center for Theoretical Neuroscience, Columbia University, New York, NY 10027, USA. Received: 6 April 2020; Accepted: 18 May 2020; Published: 20 May 2020. Abstract: Recently, there has been increasing interest in techniques for enhancing working memory (WM), casting a new light on the classical picture of a rigid system. One reason is that WM performance has been associated with intelligence and reasoning, while its impairment showed correlations with cognitive deficits, hence the possibility of training it is highly appealing. However, results on WM changes following training are controversial, leaving it unclear whether it can really be potentiated. This study aims at assessing changes in WM performance by comparing it with and without training by a professional mnemonist. Two groups, experimental and control, participated in the study, organized in two phases. In the morning, both groups were familiarized with stimuli through an N-back task, and then attended a 2-hour lecture. For the experimental group, the lecture, given by the mnemonist, introduced memory encoding techniques; for the control group, it was a standard academic lecture about memory systems. In the afternoon, both groups were administered five tests, in which they had to remember the position of 16 items, when asked in random order. -

Brain-Based Teaching Strategies for Improving Students' Memory

Childhood Education. Publisher: Routledge. Review of Research: Brain-Based Teaching Strategies for Improving Students' Memory, Learning, and Test-Taking Success. Judy Willis MD, M.Ed, Santa Barbara Middle School, Santa Barbara, California, USA. To cite this article: Judy Willis MD, M.Ed (2007) Review of Research: Brain-Based Teaching Strategies for Improving Students' Memory, Learning, and Test-Taking Success, Childhood Education, 83:5, 310-315, DOI: 10.1080/00094056.2007.10522940 To link to this article: http://dx.doi.org/10.1080/00094056.2007.10522940