AWK Structure of an AWK Program

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

At—At, Batch—Execute Commands at a Later Time

at—at, batch—execute commands at a later time at [–csm] [–f script] [–qqueue] time [date] [+ increment] at –l [ job...] at –r job... batch at and batch read commands from standard input to be executed at a later time. at allows you to specify when the commands should be executed, while jobs queued with batch will execute when system load level permits. Executes commands read from stdin or a file at some later time. Unless redirected, the output is mailed to the user. Example A.1 1 at 6:30am Dec 12 < program 2 at noon tomorrow < program 3 at 1945 pm August 9 < program 4 at now + 3 hours < program 5 at 8:30am Jan 4 < program 6 at -r 83883555320.a EXPLANATION 1. At 6:30 in the morning on December 12th, start the job. 2. At noon tomorrow start the job. 3. At 7:45 in the evening on August 9th, start the job. 4. In three hours start the job. 5. At 8:30 in the morning of January 4th, start the job. 6. Removes previously scheduled job 83883555320.a. awk—pattern scanning and processing language awk [ –fprogram–file ] [ –Fc ] [ prog ] [ parameters ] [ filename...] awk scans each input filename for lines that match any of a set of patterns specified in prog. Example A.2 1 awk '{print $1, $2}' file 2 awk '/John/{print $3, $4}' file 3 awk -F: '{print $3}' /etc/passwd 4 date | awk '{print $6}' EXPLANATION 1. Prints the first two fields of file where fields are separated by whitespace. 2. Prints fields 3 and 4 if the pattern John is found. -

Wait, I Don't Want to Be the Linux Administrator for SAS VA

SESUG Paper 88-2017 Wait, I don’t want to be the Linux Administrator for SAS VA Jonathan Boase; Zencos Consulting ABSTRACT Whether you are a new SAS administrator or switching to a Linux environment, you have a complex mission. This job becomes even more formidable when you are working with a system like SAS Visual Analytics that requires multiple users loading data daily. Eventually a user will have data issues or create a disruption that causes the system to malfunction. When that happens, what do you do next? In this paper, we will go through the basics of a SAS Visual Analytics Linux environment and how to troubleshoot the system when issues arise. INTRODUCTION Many companies choose to implement SAS Visual Analytics in a Linux environment. With a distributed deployment, it’s the only choice but many chose this operating system because it reduces operating costs. If you are the newly chosen SAS platform administrator, you might be more versed in a Windows environment and feel intimidated by Linux. This paper introduces using basic Linux commands and methods for troubleshooting a SAS Visual Analytics environment. The paper assumes that SAS Visual Analytics is installed on a SAS 9.4 platform for Linux and that the reader has some familiarity with other operating systems, such as Windows. PLATFORM ADMINISTRATION 101 SAS platform administrators work with three main product areas. Each area provides a different functionality based on the task the administrator needs to perform. The following figure defines each area and provides a general overview of its purpose. Figure 1 Platform Administrator Tools Operating System SAS Management SAS Environment •Contains installed Console Manager software •Access and manage the •Monitor the •Contains logs used for metadata environment troubleshooting •Control database •Configure custom alerts •Administer host system connections users •Manage user accounts •Manage the LASR server With any operating system, there is always a lot to learn. -

Drilling Network Stacks with Packetdrill

Drilling Network Stacks with packetdrill NEAL CARDWELL AND BARATH RAGHAVAN Neal Cardwell received an M.S. esting and troubleshooting network protocols and stacks can be in Computer Science from the painstaking. To ease this process, our team built packetdrill, a tool University of Washington, with that lets you write precise scripts to test entire network stacks, from research focused on TCP and T the system call layer down to the NIC hardware. packetdrill scripts use a Web performance. He joined familiar syntax and run in seconds, making them easy to use during develop- Google in 2002. Since then he has worked on networking software for google.com, the ment, debugging, and regression testing, and for learning and investigation. Googlebot web crawler, the network stack in Have you ever had the experience of staring at a long network trace, trying to figure out what the Linux kernel, and TCP performance and on earth went wrong? When a network protocol is not working right, how might you find the testing. [email protected] problem and fix it? Although tools like tcpdump allow us to peek under the hood, and tools like netperf help measure networks end-to-end, reproducing behavior is still hard, and know- Barath Raghavan received a ing when an issue has been fixed is even harder. Ph.D. in Computer Science from UC San Diego and a B.S. from These are the exact problems that our team used to encounter on a regular basis during UC Berkeley. He joined Google kernel network stack development. Here we describe packetdrill, which we built to enable in 2012 and was previously a scriptable network stack testing. -

Bash Crash Course + Bc + Sed + Awk∗

Bash Crash course + bc + sed + awk∗ Andrey Lukyanenko, CSE, Aalto University Fall, 2011 There are many Unix shell programs: bash, sh, csh, tcsh, ksh, etc. The comparison of those can be found on-line 1. We will primary focus on the capabilities of bash v.4 shell2. 1. Each bash script can be considered as a text file which starts with #!/bin/bash. It informs the editor or interpretor which tries to open the file, what to do with the file and how should it be treated. The special character set in the beginning #! is a magic number; check man magic and /usr/share/file/magic on existing magic numbers if interested. 2. Each script (assume you created “scriptname.sh file) can be invoked by command <dir>/scriptname.sh in console, where <dir> is absolute or relative path to the script directory, e.g., ./scriptname.sh for current directory. If it has #! as the first line it will be invoked by this command, otherwise it can be called by command bash <dir>/scriptname.sh. Notice: to call script as ./scriptname.sh it has to be executable, i.e., call command chmod 555 scriptname.sh before- hand. 3. Variable in bash can be treated as integers or strings, depending on their value. There are set of operations and rules available for them. For example: #!/bin/bash var1=123 # Assigns value 123 to var1 echo var1 # Prints ’var1’ to output echo $var1 # Prints ’123’ to output var2 =321 # Error (var2: command not found) var2= 321 # Error (321: command not found) var2=321 # Correct var3=$var2 # Assigns value 321 from var2 to var3 echo $var3 # Prints ’321’ to output -

UNIX X Command Tips and Tricks David B

SESUG Paper 122-2019 UNIX X Command Tips and Tricks David B. Horvath, MS, CCP ABSTRACT SAS® provides the ability to execute operating system level commands from within your SAS code – generically known as the “X Command”. This session explores the various commands, the advantages and disadvantages of each, and their alternatives. The focus is on UNIX/Linux but much of the same applies to Windows as well. Under SAS EG, any issued commands execute on the SAS engine, not necessarily on the PC. X %sysexec Call system Systask command Filename pipe &SYSRC Waitfor Alternatives will also be addressed – how to handle when NOXCMD is the default for your installation, saving results, and error checking. INTRODUCTION In this paper I will be covering some of the basics of the functionality within SAS that allows you to execute operating system commands from within your program. There are multiple ways you can do so – external to data steps, within data steps, and within macros. All of these, along with error checking, will be covered. RELEVANT OPTIONS Execution of any of the SAS System command execution commands depends on one option's setting: XCMD Enables the X command in SAS. Which can only be set at startup: options xcmd; ____ 30 WARNING 30-12: SAS option XCMD is valid only at startup of the SAS System. The SAS option is ignored. Unfortunately, ff NOXCMD is set at startup time, you're out of luck. Sorry! You might want to have a conversation with your system administrators to determine why and if you can get it changed. -

Useful Commands in Linux and Other Tools for Quality Control

Useful commands in Linux and other tools for quality control Ignacio Aguilar INIA Uruguay 05-2018 Unix Basic Commands pwd show working directory ls list files in working directory ll as before but with more information mkdir d make a directory d cd d change to directory d Copy and moving commands To copy file cp /home/user/is . To copy file directory cp –r /home/folder . to move file aa into bb in folder test mv aa ./test/bb To delete rm yy delete the file yy rm –r xx delete the folder xx Redirections & pipe Redirection useful to read/write from file !! aa < bb program aa reads from file bb blupf90 < in aa > bb program aa write in file bb blupf90 < in > log Redirections & pipe “|” similar to redirection but instead to write to a file, passes content as input to other command tee copy standard input to standard output and save in a file echo copy stream to standard output Example: program blupf90 reads name of parameter file and writes output in terminal and in file log echo par.b90 | blupf90 | tee blup.log Other popular commands head file print first 10 lines list file page-by-page tail file print last 10 lines less file list file line-by-line or page-by-page wc –l file count lines grep text file find lines that contains text cat file1 fiel2 concatenate files sort sort file cut cuts specific columns join join lines of two files on specific columns paste paste lines of two file expand replace TAB with spaces uniq retain unique lines on a sorted file head / tail $ head pedigree.txt 1 0 0 2 0 0 3 0 0 4 0 0 5 0 0 6 0 0 7 0 0 8 0 0 9 0 0 10 -

The AWK Programming Language

The Programming ~" ·. Language PolyAWK- The Toolbox Language· Auru:o V. AHo BRIAN W.I<ERNIGHAN PETER J. WEINBERGER TheAWK4 Programming~ Language TheAWI(. Programming~ Language ALFRED V. AHo BRIAN w. KERNIGHAN PETER J. WEINBERGER AT& T Bell Laboratories Murray Hill, New Jersey A ADDISON-WESLEY•• PUBLISHING COMPANY Reading, Massachusetts • Menlo Park, California • New York Don Mills, Ontario • Wokingham, England • Amsterdam • Bonn Sydney • Singapore • Tokyo • Madrid • Bogota Santiago • San Juan This book is in the Addison-Wesley Series in Computer Science Michael A. Harrison Consulting Editor Library of Congress Cataloging-in-Publication Data Aho, Alfred V. The AWK programming language. Includes index. I. AWK (Computer program language) I. Kernighan, Brian W. II. Weinberger, Peter J. III. Title. QA76.73.A95A35 1988 005.13'3 87-17566 ISBN 0-201-07981-X This book was typeset in Times Roman and Courier by the authors, using an Autologic APS-5 phototypesetter and a DEC VAX 8550 running the 9th Edition of the UNIX~ operating system. -~- ATs.T Copyright c 1988 by Bell Telephone Laboratories, Incorporated. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopy ing, recording, or otherwise, without the prior written permission of the publisher. Printed in the United States of America. Published simultaneously in Canada. UNIX is a registered trademark of AT&T. DEFGHIJ-AL-898 PREFACE Computer users spend a lot of time doing simple, mechanical data manipula tion - changing the format of data, checking its validity, finding items with some property, adding up numbers, printing reports, and the like. -

An AWK to C++ Translator

An AWK to C++ Translator Brian W. Kernighan Bell Laboratories Murray Hill, NJ 07974 [email protected] ABSTRACT This paper describes an experiment to produce an AWK to C++ translator and an AWK class definition and supporting library. The intent is to generate efficient and read- able C++, so that the generated code will run faster than interpreted AWK and can also be modified and extended where an AWK program could not be. The AWK class and library can also be used independently of the translator to access AWK facilities from C++ pro- grams. 1. Introduction An AWK program [1] is a sequence of pattern-action statements: pattern { action } pattern { action } ... A pattern is a regular expression, numeric expression, string expression, or combination of these; an action is executable code similar to C. The operation of an AWK program is for each input line for each pattern if the pattern matches the input line do the action Variables in an AWK program contain string or numeric values or both according to context; there are built- in variables for useful values such as the input filename and record number. Operators work on strings or numbers, coercing the types of their operands as necessary. Arrays have arbitrary subscripts (‘‘associative arrays’’). Input is read and input lines are split into fields, called $1, $2, etc. Control flow statements are like those of C: if-else, while, for, do. There are user-defined and built-in functions for arithmetic, string, regular expression pattern-matching, and input and output to/from arbitrary files and processes. -

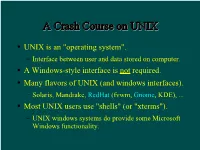

A Crash Course on UNIX

AA CCrraasshh CCoouurrssee oonn UUNNIIXX UNIX is an "operating system". Interface between user and data stored on computer. A Windows-style interface is not required. Many flavors of UNIX (and windows interfaces). Solaris, Mandrake, RedHat (fvwm, Gnome, KDE), ... Most UNIX users use "shells" (or "xterms"). UNIX windows systems do provide some Microsoft Windows functionality. TThhee SShheellll A shell is a command-line interface to UNIX. Also many flavors, e.g. sh, bash, csh, tcsh. The shell provides commands and functionality beyond the basic UNIX tools. E.g., wildcards, shell variables, loop control, etc. For this tutorial, examples use tcsh in RedHat Linux running Gnome. Differences are minor for the most part... BBaassiicc CCoommmmaannddss You need these to survive: ls, cd, cp, mkdir, mv. Typically these are UNIX (not shell) commands. They are actually programs that someone has written. Most commands such as these accept (or require) "arguments". E.g. ls -a [show all files, incl. "dot files"] mkdir ASTR688 [create a directory] cp myfile backup [copy a file] See the handout for a list of more commands. AA WWoorrdd AAbboouutt DDiirreeccttoorriieess Use cd to change directories. By default you start in your home directory. E.g. /home/dcr Handy abbreviations: Home directory: ~ Someone else's home directory: ~user Current directory: . Parent directory: .. SShhoorrttccuuttss To return to your home directory: cd To return to the previous directory: cd - In tcsh, with filename completion (on by default): Press TAB to complete filenames as you type. Press Ctrl-D to print a list of filenames matching what you have typed so far. Completion works with commands and variables too! Use ↑, ↓, Ctrl-A, & Ctrl-E to edit previous lines. -

Lex and Yacc

Lex and Yacc A Quick Tour HW8–Use Lex/Yacc to Turn this: Into this: <P> Here's a list: Here's a list: * This is item one of a list <UL> * This is item two. Lists should be <LI> This is item one of a list indented four spaces, with each item <LI>This is item two. Lists should be marked by a "*" two spaces left of indented four spaces, with each item four-space margin. Lists may contain marked by a "*" two spaces left of four- nested lists, like this: space margin. Lists may contain * Hi, I'm item one of an inner list. nested lists, like this:<UL><LI> Hi, I'm * Me two. item one of an inner list. <LI>Me two. * Item 3, inner. <LI> Item 3, inner. </UL><LI> Item 3, * Item 3, outer list. outer list.</UL> This is outside both lists; should be back This is outside both lists; should be to no indent. back to no indent. <P><P> Final suggestions: Final suggestions 2 if myVar == 6.02e23**2 then f( .. ! char stream LEX token stream if myVar == 6.02e23**2 then f( ! tokenstream YACC parse tree if-stmt == fun call var ** Arg 1 Arg 2 float-lit int-lit . ! 3 Lex / Yacc History Origin – early 1970’s at Bell Labs Many versions & many similar tools Lex, flex, jflex, posix, … Yacc, bison, byacc, CUP, posix, … Targets C, C++, C#, Python, Ruby, ML, … We’ll use jflex & byacc/j, targeting java (but for simplicity, I usually just say lex/yacc) 4 Uses “Front end” of many real compilers E.g., gcc “Little languages”: Many special purpose utilities evolve some clumsy, ad hoc, syntax Often easier, simpler, cleaner and more flexible to use lex/yacc or similar tools from the start 5 Lex: A Lexical Analyzer Generator Input: Regular exprs defining "tokens" my.flex Fragments of declarations & code Output: jflex A java program “yylex.java” Use: yylex.java Compile & link with your main() Calls to yylex() read chars & return successive tokens. -

AWK Tool in Unix Lecture Notes

Operating Systems/AWK Tool in Unix Lecture Notes Module 12: AWK Tool in Unix AWK was developed in 1978 at the famous Bell Laboratories by Aho, Weinberger and Kernighan [3]1 to process structured data files. In programming languages it is very common to have a definition of a record which may have one or more data fields. In this context, it is common to define a file as a collection of records. Records are structured data items arranged in accordance with some specification, basically as a pre-assigned sequence of fields. The data fields may be separated by a space or a tab. In a data processing environment it very common to have such record-based files. For instance, an organisation may maintain a personnel file. Each record may contain fields like employee name, gender, date of joining the organisation, designation, etc. Similarly, if we look at files created to maintain pay accounts, student files in universities, etc. all have structured records with a set of fields. AWK is ideal for the data processing of such structured set of records. AWK comes in many flavors [14]. There is gawk which is GNU AWK. Presently we will assume the availability of the standard AWK program which comes bundled with every flavor of the Unix OS. AWK is also available in the MS environment. 12.1 The Data to Process As AWK is used to process a structured set of records, we shall use a small file called awk.test given below. It has a structured set of records. The data in this file lists employee name, employee's hourly wage, and the number of hours the employee has worked. -

Lexical Analysis Lexeme Token Using Lex and Yacc Tools Examples

Lex & Yacc A compiler or an interpreter performs its task in 3 stages: 1) Lexical Analysis: scans the input stream and converts Example: Lexical analysis sequences of characters into tokens (token is a Lexeme Token classification of groups of characters) Sum identifier Lex is a tool for writing lexical analyzers. i identifier 2) Syntactic Analysis (Parsing): A parser reads tokens and := assignment_op assembles them into language constructs using the grammar rules of the language. = equal_sign Yacc is a tool for constructing parsers. 57 integer_constant 3) Actions: Acting upon input is done by code supplied by * multiplication_op the compiler writer. , comma ( left_paran input Lexical stream parse output Parser Actions stream Analyzer of tokens tree executable K. Dincer Programming Languages - Lex 1 K. Dincer Programming Languages - Lex 2 (4) (4) Using Lex and Yacc Tools *.l Lex Specification Yacc Specification *.y Lex: reads a spec file containing regular expressions and generates a C routine that performs lexical analysis. Matches sequences that identify tokens. lex yacc *.c Lex declares an external variable called yytext which lex.yy.c Custom C contains the matched string. yylex() yyparse() y.tab.c routines Yacc: reads a spec file that codifies the grammar of a libl.a cc cc Unix language and generates a parsing routine. Libraries liby.a cc -o scanner lex.yy.c -ll cc -o parser y.tab.c -ly -ll scanner parser K. Dincer Programming Languages - Lex 3 K. Dincer Programming Languages - Lex 4 (4) (4) Examples %% %% [A-Z]+[ \t\n] printf(“%s”, yytext); [+-]?[0-9]*(\.)?[0-9]+ printf(“FLOAT”); . ; /* no action specified */ digit [0-9] alphabetic [A-Za-z] %% digit [0 -9] [+-]?{digit}*( \.)?{digit}+ printf(“FLOAT”); alphanumeric ({alphabetic}|{digit}) %% digit [0-9] {alphabetic }{alphanumeric }* printf(“variable”); sign [+-] \, printf(“Comma”); %% \{ printf(“Left brace”); float val; \:\= printf(“Assignment”); {sign}?{digit}*( \.)?{digit}+ {sscanf(yytext, “%f”, &val); printf(“>%f<”, val); K.