Topic Modeling with Wasserstein Autoencoders

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Cheat Codes for Cars Mater National Ps2

Cheat codes for cars mater national ps2 Cheat Codes Enter the following in "Cheat Codes" at the Options menu. Code Effect Cars Mater-NationalMore guides, cheats and FAQS. For Cars Mater-National Championship on the PlayStation 2, GameFAQs has 8 cheat codes and secrets. Select the 'Options' mode at the 'Main' menu and enter any of the following codes to unlock the corresponding feature in the game. Unlock ALL Cars.., Cars. We have 4 questions and 1 answers for this game. Check them out to find answers or ask your own to get the exact game help you need. Why can't we use cars. Cars Mater National PS2 Cheats, Cheat Codes Interested in making money from gameplay videos of Cars. Get the latest Cars: Mater-National cheats, codes, unlockables, hints, Easter eggs, glitches, tips, tricks, hacks, downloads, hints, guides, FAQs, walkthroughs, and. Cars Mater-National Championship cheats, Passwords, and Codes for the (Options Mode) then enter one of the following Codes/Cheats. PS2, PS3, Xbox , Wii | Submitted by Blankazoid. Cheats. PAINTITAll alternate colors Besides Lightning McQueen Cars Mater-National Unlockables. Get all the inside info, cheats, hacks, codes, walkthroughs for Cars Mater-National Championship on GameSpot. Mar 15, Cars Mater-National Championship Cheats - Wii Cheats: This Cheat codes and cheat code devices for DS, Wii, PS2, XBOX. Cars Mater National PS2 Cheats, Cheat Codes Interested in making money from gameplay videos of Cars. PS2, PS3, Xbox, Wii | Submitted by Blankazoid. Cars Mater National PS2 Cheats, Cheat Codes Interested in making money from gameplay videos of Cars Mater National? Step by step instructions from. -

Boots Launches Christmas TV Advert Featuring the Sugababes Submitted By: Pr-Sending-Enterprises Tuesday, 18 November 2008

Boots launches Christmas TV advert featuring the Sugababes Submitted by: pr-sending-enterprises Tuesday, 18 November 2008 Boots' Christmas TV advert from 2007 won an industry Gold Award in The Campaign Magazine Big Awards. The company has now launched this year's seasonal advert, and it features the Sugababes' hit single 'Girls'. In 2007 the Boots (http://www.boots.com/) TV advert gave real insight into the lengthy preparations women make when getting ready for that all-important Christmas party. The music that accompanied it was 'Here Come the Girls' by Ernie K Doe, originally a hit in the 1970s. This year, Boots' new Christmas advert celebrates the art of choosing great gifts for your colleagues this season. The advert shows Boots knows that women want to get it right, finding the perfect present at a price they can afford. Boots has teamed up with the Sugababes, the UK's biggest girl band, for this year’s campaign. The advert features the trio’s recent single 'Girls', which includes a sample of 'Here Come the Girls' by Ernie K Doe. The Sugababes feel-good single has been selling fast in selected Boots stores across the nation, and Boots has matched the 10p donation to the White Ribbon Alliance for every copy it has rung through its tills. The theme of the song reflects the feminine traits highlighted in the advert. Set in an office, viewers will see women making lists, lovingly wrapping presents, and even offering themselves up as gifts in a bid to ensure they give the perfect 'Secret Santa' present. -

Video Game Trader Magazine & Price Guide

Winter 2009/2010 Issue #14 4 Trading Thoughts 20 Hidden Gems Blue‘s Journey (Neo Geo) Video Game Flashback Dragon‘s Lair (NES) Hidden Gems 8 NES Archives p. 20 19 Page Turners Wrecking Crew Vintage Games 9 Retro Reviews 40 Made in Japan Coin-Op.TV Volume 2 (DVD) Twinkle Star Sprites Alf (Sega Master System) VectrexMad! AutoFire Dongle (Vectrex) 41 Video Game Programming ROM Hacking Part 2 11Homebrew Reviews Ultimate Frogger Championship (NES) 42 Six Feet Under Phantasm (Atari 2600) Accessories Mad Bodies (Atari Jaguar) 44 Just 4 Qix Qix 46 Press Start Comic Michael Thomasson’s Just 4 Qix 5 Bubsy: What Could Possibly Go Wrong? p. 44 6 Spike: Alive and Well in the land of Vectors 14 Special Book Preview: Classic Home Video Games (1985-1988) 43 Token Appreciation Altered Beast 22 Prices for popular consoles from the Atari 2600 Six Feet Under to Sony PlayStation. Now includes 3DO & Complete p. 42 Game Lists! Advertise with Video Game Trader! Multiple run discounts of up to 25% apply THIS ISSUES CONTRIBUTORS: when you run your ad for consecutive Dustin Gulley Brett Weiss Ad Deadlines are 12 Noon Eastern months. Email for full details or visit our ad- Jim Combs Pat “Coldguy” December 1, 2009 (for Issue #15 Spring vertising page on videogametrader.com. Kevin H Gerard Buchko 2010) Agents J & K Dick Ward February 1, 2009(for Issue #16 Summer Video Game Trader can help create your ad- Michael Thomasson John Hancock 2010) vertisement. Email us with your requirements for a price quote. P. Ian Nicholson Peter G NEW!! Low, Full Color, Advertising Rates! -

Activision Acquires U.K. Game Developer Bizarre Creations

Activision Acquires U.K. Game Developer Bizarre Creations Activision Enters $1.4 Billion Racing Genre Market, Representing More than 10% of Worldwide Video Game Market SANTA MONICA, Calif., Sep 26, 2007 (BUSINESS WIRE) -- Activision, Inc. (Nasdaq:ATVI) today announced that it has acquired U.K.-based video game developer Bizarre Creations, one of the world's premier video game developers and a leader in the racing category, a $1.4 billion market that is the fourth most popular video game genre and represents more than 10% of the total video game market worldwide. This acquisition represents the latest step in Activision's ongoing strategy to enter new genres. Last year, Activision entered the music rhythm genre through its acquisition of RedOctane's Guitar Hero franchise, which is one of the fastest growing franchises in the video game industry. With more than 10 years' experience in the racing genre, Bizarre Creations is the developer of the innovative multi-million unit franchise Project Gotham Racing, a critically-acclaimed series for the Xbox® and Xbox 360®. The Project Gotham Racing franchise, which is owned by Microsoft, currently has an average game rating of 89%, according to GameRankings.com and has sold more than 4.5 million units in North America and Europe, according to The NPD Group, Charttrack and Gfk. Bizarre Creations is currently finishing development on the highly-anticipated third-person action game, The Club, for SEGA, which is due to be released early 2008. They are also the creators of the top-selling arcade game series Geometry Wars on Xbox Live Arcade®. -

Host's Master Artist List

Host's Master Artist List You can play the list in the order shown here, or any order you prefer. Tick the boxes as you play the songs. Feeder Lily Allen Steps John Legend Gerry & The Pacemakers Liberty X Georgie Fame Bobby Vee Buddy Holly Beach Boys Animals Vengaboys Johnny Tilloson Hollies Blue Troggs Supremes Akon Gorillaz Britney Spears Chemical Brothers Johnny Kidd & The Pirates Sonny & Cher Elvis Presley Peggy Lee Ken Dodd Beyonce Jeff Beck Avril Lavigne Chuck Berry Lulu Del Shannon Spencer Davis Group Sugababes Backstreet Boys Daft Punk Dion Warwick Pink Doors Robbie Williams Steely Dan Louis Armstrong Chubby Checker Shirley Bassey Abba Destiny's Child Andy Williams Nelly Furtado Paul Anka Blink 182 Copyright QOD Page 1/101 Team/Player Sheet 1 Good Luck! Spencer Davis Peggy Lee Nelly Furtado Steps Ken Dodd Group Shirley Bassey Dion Warwick Gorillaz Bobby Vee Blue Johnny Kidd & Blink 182 Sonny & Cher Lulu Avril Lavigne The Pirates Jeff Beck Elvis Presley Feeder Vengaboys Britney Spears Copyright QOD Page 2/101 Team/Player Sheet 2 Good Luck! Lily Allen Blue Avril Lavigne Liberty X John Legend Peggy Lee Backstreet Boys Lulu Sugababes Buddy Holly Troggs Beach Boys Sonny & Cher Robbie Williams Blink 182 Johnny Kidd & Louis Armstrong Akon Abba Chuck Berry The Pirates Copyright QOD Page 3/101 Team/Player Sheet 3 Good Luck! Johnny Kidd & Robbie Williams Troggs Paul Anka Shirley Bassey The Pirates Blink 182 Johnny Tilloson Lulu Hollies Britney Spears Dion Warwick Lily Allen Peggy Lee Pink Del Shannon Sugababes Destiny's Child Chubby Checker -

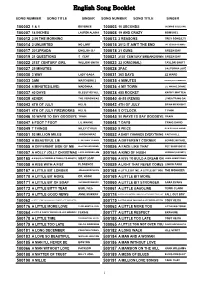

English Song Booklet

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 5 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 2 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

5 Potential Benefits of Golden Rice 16 6 Sensitivity Analysis 18 7 Conclusion 19 References 20 Appendix 24

A Service of Leibniz-Informationszentrum econstor Wirtschaft Leibniz Information Centre Make Your Publications Visible. zbw for Economics Zimmermann, Roukayatou; Ahmed, Faruk Working Paper Rice biotechnology and its potential to combat vitamin A deficiency: a case study of golden rice in Bangladesh ZEF Discussion Papers on Development Policy, No. 104 Provided in Cooperation with: Zentrum für Entwicklungsforschung / Center for Development Research (ZEF), University of Bonn Suggested Citation: Zimmermann, Roukayatou; Ahmed, Faruk (2006) : Rice biotechnology and its potential to combat vitamin A deficiency: a case study of golden rice in Bangladesh, ZEF Discussion Papers on Development Policy, No. 104, University of Bonn, Center for Development Research (ZEF), Bonn This Version is available at: http://hdl.handle.net/10419/32303 Standard-Nutzungsbedingungen: Terms of use: Die Dokumente auf EconStor dürfen zu eigenen wissenschaftlichen Documents in EconStor may be saved and copied for your Zwecken und zum Privatgebrauch gespeichert und kopiert werden. personal and scholarly purposes. Sie dürfen die Dokumente nicht für öffentliche oder kommerzielle You are not to copy documents for public or commercial Zwecke vervielfältigen, öffentlich ausstellen, öffentlich zugänglich purposes, to exhibit the documents publicly, to make them machen, vertreiben oder anderweitig nutzen. publicly available on the internet, or to distribute or otherwise use the documents in public. Sofern die Verfasser die Dokumente unter Open-Content-Lizenzen (insbesondere CC-Lizenzen) zur Verfügung gestellt haben sollten, If the documents have been made available under an Open gelten abweichend von diesen Nutzungsbedingungen die in der dort Content Licence (especially Creative Commons Licences), you genannten Lizenz gewährten Nutzungsrechte. may exercise further usage rights as specified in the indicated licence. -

Anaemia in Bangladesh: a Review of Prevalence and Aetiology

Public Health Nutrition: 3(4), 385±393 385 Anaemia in Bangladesh: a review of prevalence and aetiology Faruk Ahmed* Institute of Nutrition and Food Science, University of Dhaka, Dhaka-1000, Bangladesh Submitted 23 August 1999: Accepted 20 March 2000 Abstract Objective: This paper provides a comprehensive review of the changes in the prevalence and the extent of anaemia among different population groups in Bangladesh up to the present time. The report also focuses on various factors in the aetiology of anaemia in the country. Design and setting: All the available data have been examined in detail, including data from national nutrition surveys, as well as small studies in different population groups. Results: Over the past three decades a number of studies including four national nutrition surveys (1962/64; 1975/76; 1981/82 and 1995/96) have been carried out to investigate the prevalence of anaemia among different population groups in Bangladesh, and have demonstrated a signi®cant public health problem. Since the 1975/76 survey the average national prevalence of anaemia has not fallen; in 1995/96, 74% were anaemic (64% in urban areas and 77% in rural areas). However, age-speci®c comparisons suggest that the rates have fallen in most groups except adult men: in preschool children in rural areas it has decreased by about 30%, but the current level (53%) still falls within internationally agreed high risk levels. Among the rural population, the prevalence of anaemia is 43% in adolescent girls, 45% in non- pregnant women and 49% in pregnant women. The rates in the urban population are slightly lower compared with rural areas, but are high enough to pose a considerable problem. -

Dan Blaze's Karaoke Song List

Dan Blaze's Karaoke Song List - By Artist 112 Peaches And Cream 411 Dumb 411 On My Knees 411 Teardrops 911 A Little Bit More 911 All I Want Is You 911 How Do You Want Me To Love You 911 More Than A Woman 911 Party People (Friday Night) 911 Private Number 911 The Journey 10 cc Donna 10 cc I'm Mandy 10 cc I'm Not In Love 10 cc The Things We Do For Love 10 cc Wall St Shuffle 10 cc Dreadlock Holiday 10000 Maniacs These Are The Days 1910 Fruitgum Co Simon Says 1999 Man United Squad Lift It High 2 Evisa Oh La La La 2 Pac California Love 2 Pac & Elton John Ghetto Gospel 2 Unlimited No Limits 2 Unlimited No Limits 20 Fingers Short Dick Man 21st Century Girls 21st Century Girls 3 Doors Down Kryptonite 3 Oh 3 feat Katy Perry Starstrukk 3 Oh 3 Feat Kesha My First Kiss 3 S L Take It Easy 30 Seconds To Mars The Kill 38 Special Hold On Loosely 3t Anything 3t With Michael Jackson Why 4 Non Blondes What's Up 4 Non Blondes What's Up 5 Seconds Of Summer Don't Stop 5 Seconds Of Summer Good Girls 5 Seconds Of Summer She Looks So Perfect 5 Star Rain Or Shine Updated 08.04.2015 www.blazediscos.com - www.facebook.com/djdanblaze Dan Blaze's Karaoke Song List - By Artist 50 Cent 21 Questions 50 Cent Candy Shop 50 Cent In Da Club 50 Cent Just A Lil Bit 50 Cent Feat Neyo Baby By Me 50 Cent Featt Justin Timberlake & Timbaland Ayo Technology 5ive & Queen We Will Rock You 5th Dimension Aquarius Let The Sunshine 5th Dimension Stoned Soul Picnic 5th Dimension Up Up and Away 5th Dimension Wedding Bell Blues 98 Degrees Because Of You 98 Degrees I Do 98 Degrees The Hardest -

IGDA Online Games White Paper Full Version

IGDA Online Games White Paper Full Version Presented at the Game Developers Conference 2002 Created by the IGDA Online Games Committee Alex Jarett, President, Broadband Entertainment Group, Chairman Jon Estanislao, Manager, Media & Entertainment Strategy, Accenture, Vice-Chairman FOREWORD With the rising use of the Internet, the commercial success of certain massively multiplayer games (e.g., Asheron’s Call, EverQuest, and Ultima Online), the ubiquitous availability of parlor and arcade games on “free” game sites, the widespread use of matching services for multiplayer games, and the constant positioning by the console makers for future online play, it is apparent that online games are here to stay and there is a long term opportunity for the industry. What is not so obvious is how the independent developer can take advantage of this opportunity. For the two years prior to starting this project, I had the opportunity to host several roundtables at the GDC discussing the opportunities and future of online games. While the excitement was there, it was hard not to notice an obvious trend. It seemed like four out of five independent developers I met were working on the next great “massively multiplayer” game that they hoped to sell to some lucky publisher. I couldn’t help but see the problem with this trend. I knew from talking with folks that these games cost a LOT of money to make, and the reality is that only a few publishers and developers will work on these projects. So where was the opportunity for the rest of the developers? As I spoke to people at the roundtables, it became apparent that there was a void of baseline information in this segment. -

Sports and the Rhetorical Construction of the Citizen-Consumer

THE SPORTS MALL OF AMERICA: SPORTS AND THE RHETORICAL CONSTRUCTION OF THE CITIZEN-CONSUMER Cory Hillman A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY August 2012 Committee: Dr. Michael Butterworth, Advisor Dr. David Tobar Graduate Faculty Representative Dr. Clayton Rosati Dr. Joshua Atkinson © 2010 Cory Hillman All Rights Reserved iii ABSTRACT Dr. Michael Butterworth, Advisor The purpose of this dissertation was to investigate from a rhetorical perspective how contemporary sports both reflect and influence a preferred definition of democracy that has been narrowly conflated with consumption in the cultural imaginary. I argue that the relationship between fans and sports has become mediated by rituals of consumption in order to affirm a particular identity, similar to the ways that citizenship in America has become defined by one’s ability to consume under conditions of neoliberal capitalism. In this study, I examine how new sports stadiums are architecturally designed to attract upper income fans through the mobilization of spectacle and surveillance-based strategies such as Fan Code of Conducts. I also investigate the “sports gaming culture” that addresses advertising in sports video games and fantasy sports participation that both reinforce the burgeoning commercialism of sports while normalizing capitalism’s worldview. I also explore the area of licensed merchandise which is often used to seduce fans into consuming the sports brand by speaking the terms of consumer capitalism often naturalized in fan’s expectations in their engagement with sports. 
Finally, I address potential strategies of resistance that rely on a reassessment of the value of sports in American culture, predicated upon restoring citizens’ faith in public institutions that would simultaneously reclaim control of the sporting landscape from commercial entities exploiting them for profit. -

Prevalence and Predictors of Vitamin D Deficiency and Insufficiency

nutrients Article Prevalence and Predictors of Vitamin D Deficiency and Insufficiency among Pregnant Rural Women in Bangladesh Faruk Ahmed 1,*, Hossein Khosravi-Boroujeni 1, Moududur Rahman Khan 2, Anjan Kumar Roy 3 and Rubhana Raqib 3 1 Public Health, School of Medicine, Griffith University, Gold Coast Campus, Gold Coast, QLD 4220, Australia; [email protected] 2 Institute of Nutrition and Food Science, University of Dhaka, Dhaka 1000, Bangladesh; [email protected] 3 International Centre for Diarrhoeal Disease Research, Mohakhali, Dhaka 1212, Bangladesh; [email protected] (A.K.R.); [email protected] (R.R.) * Correspondence: f.ahmed@griffith.edu.au Abstract: Although adequate vitamin D status during pregnancy is essential for maternal health and to prevent adverse pregnancy outcomes, limited data exist on vitamin D status and associated risk factors in pregnant rural Bangladeshi women. This study determined the prevalence of vitamin D deficiency and insufficiency, and identified associated risk factors, among these women. A total of 515 pregnant women from rural Bangladesh, gestational age ≤ 20 weeks, participated in this cross-sectional study. A separate logistic regression analysis was applied to determine the risk factors of vitamin D deficiency and insufficiency. Overall, 17.3% of the pregnant women had vitamin D deficiency [serum 25(OH)D concentration <30.0 nmol/L], and 47.2% had vitamin D insufficiency [serum 25(OH)D concentration between 30–<50 nmol/L]. The risk of vitamin D insufficiency was significantly higher among nulliparous pregnant women (OR: 2.72; 95% CI: 1.75–4.23), those in their first trimester (OR: Citation: Ahmed, F.; 2.68; 95% CI: 1.39–5.19), anaemic women (OR: 1.53; 95% CI: 0.99–2.35; p = 0.056) and women whose Khosravi-Boroujeni, H.; Khan, M.R.; Roy, A.K.; Raqib, R.