Musings RIK FARROW EDITORIAL

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Oral History of Fernando Corbató

Oral History of Fernando Corbató Interviewed by: Steven Webber Recorded: February 1, 2006 West Newton, Massachusetts CHM Reference number: X3438.2006 © 2006 Computer History Museum Oral History of Fernando Corbató Steven Webber: Today the Computer History Museum Oral History Project is going to interview Fernando J. Corbató, known as Corby. Today is February 1 in 2006. We like to start at the very beginning on these interviews. Can you tell us something about your birth, your early days, where you were born, your parents, your family? Fernando Corbató: Okay. That’s going back a long ways of course. I was born in Oakland. My parents were graduate students at Berkeley and then about age 5 we moved down to West Los Angeles, Westwood, where I grew up [and spent] most of my early years. My father was a professor of Spanish literature at UCLA. I went to public schools there in West Los Angeles, [namely,] grammar school, junior high and [the high school called] University High. So I had a straightforward public school education. I guess the most significant thing to get to is that World War II began when I was in high school and that caused several things to happen. I’m meandering here. There’s a little bit of a long story. Because of the wartime pressures on manpower, the high school went into early and late classes and I cleverly saw that I could get a chance to accelerate my progress. I ended up taking both early and late classes and graduating in two years instead of three and [thereby] got a chance to go to UCLA in 1943. -

Oral History of Brian Kernighan

Oral History of Brian Kernighan Interviewed by: John R. Mashey Recorded April 24, 2017 Princeton, NJ CHM Reference number: X8185.2017 © 2017 Computer History Museum Oral History of Brian Kernighan Mashey: Well, hello, Brian. It’s great to see you again. Been a long time. So we’re here at Princeton, with Brian Kernighan, and we want to go do a whole oral history with him. So why don’t we start at the beginning. As I recall, you’re Canadian. Kernighan: That is right. I was born in Toronto long ago, and spent my early years in the city of Toronto. Moved west to a small town, what was then a small town, when I was in the middle of high school, and then went to the University of Toronto for my undergraduate degree, and then came here to Princeton for graduate school. Mashey: And what was your undergraduate work in? Kernighan: It was in one of these catch-all courses called Engineering Physics. It was for people who were kind of interested in engineering and math and science and didn’t have a clue what they wanted to actually do. Mashey: <laughs> So how did you come to be down here? Kernighan: I think it was kind of an accident. It was relatively unusual for people from Canada to wind up in the United States for graduate school at that point, but I thought I would try something different and so I applied to six or seven different schools in the United States, got accepted at some of them, and then it was a question of balancing things like, “Well, they promised that they would get you out in a certain number of years and they promised that they would give you money,” but the question is whether, either that was true or not and I don’t know. -

Turing Award • John Von Neumann Medal • NAE, NAS, AAAS Fellow

15-712: Advanced Operating Systems & Distributed Systems A Few Classics Prof. Phillip Gibbons Spring 2021, Lecture 2 Today’s Reminders / Announcements • Summaries are to be submitted via Canvas by class time • Announcements and Q&A are via Piazza (please enroll) • Office Hours: – Prof. Phil Gibbons: Fri 1-2 pm & by appointment – TA Jack Kosaian: Mon 1-2 pm – Zoom links: See canvas/Zoom 2 CS is a Fast Moving Field: Why Read/Discuss Old Papers? “Those who cannot remember the past are condemned to repeat it.” - George Santayana, The Life of Reason, Volume 1, 1905 See what breakthrough research ideas look like when first presented 3 The Rise of Worse is Better Richard Gabriel 1991 • MIT/Stanford style of design: “the right thing” – Simplicity in interface 1st, implementation 2nd – Correctness in all observable aspects required – Consistency – Completeness: cover as many important situations as is practical • Unix/C style: “worse is better” – Simplicity in implementation 1st, interface 2nd – Correctness, but simplicity trumps correctness – Consistency is nice to have – Completeness is lowest priority 4 Worse-is-better is Better for SW • Worse-is-better has better survival characteristics than the-right-thing • Unix and C are the ultimate computer viruses – Simple structures, easy to port, required few machine resources to run, provide 50-80% of what you want – Programmer conditioned to sacrifice some safety, convenience, and hassle to get good performance and modest resource use – First gain acceptance, condition users to expect less, later -

Interview with Barbara Liskov ACM Turing Award Recipient 2008 Done at MIT April 20, 2016 Interviewer Was Tom Van Vleck

B. Liskov Interview 1 Interview with Barbara Liskov ACM Turing Award Recipient 2008 Done at MIT April 20, 2016 Interviewer was Tom Van Vleck Interviewer is noted as “TVV” Dr. Liskov is noted as “BL” ?? = inaudible (with timestamp) or [ ] for phonetic [some slight changes have been made in this transcript to improve the readability, but no material changes were made to the content] TVV: Hello, I’m Tom Van Vleck, and I have the honor today to interview Professor Barbara Liskov, the 2008 ACM Turing Award winner. Today is April 20th, 2016. TVV: To start with, why don’t you tell us a little bit about your upbringing, where you lived, and how you got into the business? BL: I grew up in San Francisco. I was actually born in Los Angeles, so I’m a native Californian. When I was growing up, it was definitely not considered the thing to do for a young girl to be interested in math and science. In fact, in those days, the message that you got was that you were supposed to get married and have children and not have a job. Nevertheless, I was always encouraged by my parents to take academics seriously. My mother was a college grad as well as my father, so it was expected that I would go to college, which in those times was not that common. Maybe 20% of the high school class would go on to college and the rest would not. I was always very interested in math and science, so I took all the courses that were offered at my high school, which wasn’t a tremendous number compared to what is available today. -

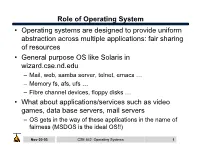

Role of Operating System

Role of Operating System • Operating systems are designed to provide uniform abstraction across multiple applications: fair sharing of resources • General purpose OS like Solaris in wizard.cse.nd.edu – Mail, web, samba server, telnet, emacs … – Memory fs, afs, ufs … – Fibre channel devices, floppy disks … • What about applications/services such as video games, data base servers, mail servers – OS gets in the way of these applications in the name of fairness (MSDOS is the ideal OS!!) Nov-20-03 CSE 542: Operating Systems 1 What is the role of OS? • Create multiple virtual machines that each user can control all to themselves – IBM 360/370 … • Monolithic kernel: Linux – One kernel provides all services. – New paradigms are harder to implement – May not be optimal for any one application • Microkernel: Mach – Microkernel provides minimal service – Application servers provide OS functionality • Nanokernel: OS is implemented as application level libraries Case study: Multics • Goal: Develop a convenient, interactive, useable time shared computer system that could support many users. – Bell Labs and GE in 1965 joined an effort underway at MIT (CTSS) on Multics (Multiplexed Information and Computing Service) mainframe timesharing system. • Multics was designed to be the Swiss army knife of OS • Multics achieved most of these goals, but it took a long time – One of the negative contributions was the development of simple yet powerful abstractions (UNIX) Multics: Designed to be the ultimate OS • “One of the overall design goals is to create a computing system which is capable of meeting almost all of the present and near-future requirements of a large computer utility. -

Corbató Transcript Final

A. M. Turing Award Oral History Interview with Fernando J. (“Corby”) Corbató by Steve Webber Plum Island, Massachusetts April 6, 2018 Webber: Hi. My name is Steve Webber. It’s April 6th, 2018. I’m here to interview Fernando Corbató – everyone knows him as “Corby” – the ACM Turing Award winner of 1990 for his contributions to computer science. So Corby, tell me about your early life, where you went to school, where you were born, your family, your parents, anything that’s interesting for the history of computer science. Corbató: Well, my parents met at University of California, Berkeley, and they… My father got his doctorate in Spanish literature – and I was born in Oakland, California – and proceeded to… he got his first position at UCLA, which was then a new campus in Los Angeles, as a professor of Spanish literature. So I of course wasn’t very conscious of much at those ages, but I basically grew up in West Los Angeles and went to public high schools, first grammar school, then junior high, and then I began high school. Webber: What were your favorite subjects in high school? Corbató: I tended to enjoy the mathematics type of subjects. As I recall, algebra was easy and enjoyable. And the… one of the results of that was that I was in high school at the time when World War II… when Pearl Harbor occurred and MIT was… rather the United States was drawn into the war. As I recall, Pearl Harbor occurred… I think it was on a Sunday, but one of the results was that it shocked the US into reacting to the war effort, and one of the results was that everything went into kind of a wartime footing. -

Notes on the History of Fork-And-Join Linus Nyman and Mikael Laakso Hanken School of Economics

Notes on the history of fork-and-join Linus Nyman and Mikael Laakso Hanken School of Economics As part of a PhD on code forking in open source software, Linus Nyman looked into the origins of how the practice came to be called forking.1 This search led back to the early history of the fork system call. Having not previously seen such a history published, this anecdote looks back at the birth of the fork system call to share what was learned, as remembered by its pioneers. The fork call allows a process (or running program) to create new processes. The original is deemed the parent process and the newly created one its child. On multiprocessor systems, these processes can run concurrently in parallel.2 Since its birth 50 years ago, the fork has remained a central element of modern computing, both with regards to software development principles and, by extension, to hardware design, which increasingly accommodates parallelism in process execution. The fork system call is imagined The year was 1962. Melvin Conway, later to become known for “Conway’s Law,”3 was troubled by what seemed an unnecessary inefficiency in computing. As Conway recalls:4 I was in the US Air Force at the time – involved with computer procurement – and I noticed that no vendor was distinguishing between “processor” and “process.” That is, when a proposal involved a multiprocessor design, each processor was committed to a single process. By late 1962, Conway had begun contemplating the idea of using a record to carry the status of a process. -

Memorial Gathering for Corby

Fernando J. Corbató Memorial Gathering November 4, 2019 MIT Stata Center Kirsch Auditorium MIT EECS MIT CSAIL 1926-2019 Gifts in memory of Corby may be made to MIT's ... Fernando Corbató Fellowship Fund via MIT Memorial Gifts Office, Bonny Kellermann 617-253-9722

Today we gather to commemorate Fernando J. Corbató, 'Corby,' Professor Emeritus in the Department of Electrical Engineering and Computer Science at MIT. Fernando Corbató was born on July 1, 1926 in Oakland, California. At 17, he enlisted as a technician in the U.S. Navy, where he first got the engineering bug working on a range of radar and sonar systems. After World War II, he earned his bachelor's degree at Caltech before heading to MIT to complete a PhD in Physics in 1956. Corbató then joined MIT's Computation Center as a research assistant, soon moving up to become deputy director of the entire center.

After CTSS, Corbató led a time-sharing effort called Multics (Multiplexed Information and Computing Service), which directly inspired operating systems like Linux and laid the foundation for many aspects of modern computing. Multics doubled as a fertile training ground for an emerging generation of programmers. Multics was available for general use at MIT in October 1969 and became a Honeywell product in 1973. Corbató and his colleagues also opened up communication between users with early versions of email, instant messaging, and word processing. Another legacy is "Corbató's Law," which states that the number of lines of code someone can write in a day is the same regardless of the language used.

He was appointed Associate Professor in 1962, promoted to -

An Interview with DAN BRICKLIN and BOB FRANKSTON OH 402

An Interview with DAN BRICKLIN AND BOB FRANKSTON OH 402 Conducted by Martin Campbell-Kelly and Paul Ceruzzi on 7 May 2004 Needham, Massachusetts Charles Babbage Institute Center for the History of Information Technology University of Minnesota, Minneapolis Copyright, Charles Babbage Institute Dan Bricklin and Bob Frankston Interview 7 May 2004 Oral History 402 Abstract ABSTRACT: Dan Bricklin and Bob Frankston discuss the creation of VisiCalc, the pioneering spreadsheet application. Bricklin and Frankston begin by discussing their educational backgrounds and experiences in computing, especially with MIT’s Multics system. Bricklin then worked for DEC on typesetting and word-processing computers and, after a short time with a small start-up company, went to Harvard Business School. After MIT Frankston worked for White Weld and Interactive Data. The interview examines many of the technical, design, and programming choices in creating VisiCalc as well as interactions with Dan Fylstra and several business advisors. Bricklin comments on entries from his dated notebooks about these interactions. The interview reviews the incorporation of Software Arts in 1979, then describes early marketing of VisiCalc and the value of product evangelizing. There is discussion of rising competition from Mitch Kapor’s 1-2-3 and the steps taken by Fylstra’s software publishing company Personal Software (later VisiCorp). Part II of the interview begins with Bricklin and Frankston’s use of a Prime minicomputer to compile VisiCalc’s code for the Apple II computer. There is discussion of connections to Apple Computer and DEC, as well as publicity at the West Coast Computer Faire. The two evaluate the Fylstra essay, reviewing the naming of VisiCalc and discussing the division of labor between software developers and Fylstra as a software publisher. -

Structure and Interpretation of Computer Programs Second Edition

Structure and Interpretation of Computer Programs second edition Harold Abelson and Gerald Jay Sussman with Julie Sussman foreword by Alan J. Perlis The MIT Press Cambridge, Massachusetts London, England McGraw-Hill Book Company New York St. Louis San Francisco Montreal Toronto This book is one of a series of texts written by faculty of the Electrical Engineering and Computer Science Department at the Massachusetts Institute of Technology. It was edited and produced by The MIT Press under a joint production-distribution arrangement with the McGraw-Hill Book Company. Ordering Information: North America Text orders should be addressed to the McGraw-Hill Book Company. All other orders should be addressed to The MIT Press. Outside North America All orders should be addressed to The MIT Press or its local distributor. © 1996 by The Massachusetts Institute of Technology Second edition All rights reserved. No part of this book may be reproduced in any form or by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher. This book was set by the authors using the LaTeX typesetting system and was printed and bound in the United States of America. Library of Congress Cataloging-in-Publication Data Abelson, Harold Structure and interpretation of computer programs / Harold Abelson and Gerald Jay Sussman, with Julie Sussman. -- 2nd ed. p. cm. -- (Electrical engineering and computer science series) Includes bibliographical references and index. -

Compatible Time-Sharing System (1961-1973) Fiftieth Anniversary

Compatible Time-Sharing System (1961-1973) Fiftieth Anniversary Commemorative Overview The Compatible Time Sharing System (1961–1973) Fiftieth Anniversary Commemorative Overview The design of the cover, half-title page (reverse side of this page), and main title page mimics the design of the 1963 book The Compatible Time-Sharing System: A Programmer’s Guide from the MIT Press. The Compatible Time Sharing System (1961–1973) Fiftieth Anniversary Commemorative Overview Edited by David Walden and Tom Van Vleck With contributions by Fernando Corbató Marjorie Daggett Robert Daley Peter Denning David Alan Grier Richard Mills Roger Roach Allan Scherr Copyright © 2011 David Walden and Tom Van Vleck All rights reserved. Single copies may be printed for personal use. Write to us at [email protected] for a PDF suitable for two-sided printing of multiple copies for non-profit academic or scholarly use. IEEE Computer Society 2001 L Street N.W., Suite 700 Washington, DC 20036-4928 USA First print edition June 2011 (web revision 03) The web edition at http://www.computer.org/portal/web/volunteercenter/history is being updated from the print edition to correct mistakes and add new information. The change history is on page 50. To Fernando Corbató and his MIT collaborators in creating CTSS Contents Preface . ix Acknowledgments . ix MIT nomenclature and abbreviations . x 1 Early history of CTSS . 1 2 The IBM 7094 and CTSS at MIT . 5 3 Uses . 17 4 Memories and views of CTSS . 21 Fernando Corbató . 21 Marjorie Daggett . 22 Robert Daley . 24 Richard Mills . 26 Tom Van Vleck . 31 Roger Roach . -

IBM PC [Aug 12]

feature to Multics, the Xerox PARC Alto [March 1], and the IBM PC [Aug 12]. He was also one of the developers of the token ring LAN [Oct 15], and helped with the IEEE 802.5 token ring standard. In 1984, Clark, Dave Reed [Jan 31], and Saltzer, wrote the first paper on the end-to-end principle which argued that host computers, rather than network nodes, should be responsible for application-specific functionality. This view of the network as being purely for data routing is at the heart of today’s net neutrality debate. Clark's other work includes the Clark-Wilson integrity model, extensions to the Internet to

was so certain, that he promised to "eat his words" if it didn't. As a result, during his keynote speech at the 6th World Wide Web Conference in 1997, he placed a printed copy of the article in a blender, along with some unspecified liquid, and consumed the pulpy mixture that resulted. Many of his other predictions have proved just as successful, such as the demise of open source software [Feb 3], the failure of wireless networking, and the inability of Windows and Linux to deal with video streaming. His articles on these matters were collected in the book, “Internet Collapses and Other InfoWorld Punditry” (2000).

April 7th Hoover on TV April 7, 1927 Dignitaries gathered at the AT&T Bell Labs auditorium in NYC to see the first American long distance TV demonstration. The centerpiece was a conversation between the US Secretary of Commerce, Herbert Hoover, in Washington and the AT&T President, Walter Gifford [Jan 7; April 15], in New York. Hoover’s live picture, rendered at 50 lines of resolution, and voice were transmitted over telephone lines using a system