Structure and Interpretation of Computer Programs Second Edition


Recommended publications


Reflexive Interpreters 1 the Problem of Control

Reset reproduction of a Ph.D. thesis proposal submitted June 8, 1978 to the Massachusetts Institute of Technology Electrical Engineering and Computer Science Department. Reset in LaTeX by Jon Doyle, December 1995. © 1978, 1995 Jon Doyle. All rights reserved. Current address: MIT Laboratory for Computer Science. Available via http://www.medg.lcs.mit.edu/doyle Reflexive Interpreters Jon Doyle [email protected] Massachusetts Institute of Technology, Artificial Intelligence Laboratory Cambridge, Massachusetts 02139, U.S.A. Abstract The goal of achieving powerful problem solving capabilities leads to the “advice taker” form of program and the associated problem of control. This proposal outlines an approach to this problem based on the construction of problem solvers with advanced self-knowledge and introspection capabilities. 1 The Problem of Control Self-reverence, self-knowledge, self-control, These three alone lead life to sovereign power. Alfred, Lord Tennyson, OEnone Know prudent cautious self-control is wisdom’s root. Robert Burns, A Bard’s Epitaph The woman that deliberates is lost. Joseph Addison, Cato A major goal of Artificial Intelligence is to construct an “advice taker”,1 a program which can be told new knowledge and advised about how that knowledge may be useful. Many of the approaches towards this goal have proposed constructing additive formalisms for transmitting knowledge to the problem solver.2 In spite of considerable work along these lines, formalisms for advising problem solvers about the uses and properties of knowledge are relatively undeveloped. As a consequence, the nondeterminism resulting from the uncontrolled application of many independent pieces of knowledge leads to great inefficiencies. -

Oral History of Fernando Corbató

Oral History of Fernando Corbató Interviewed by: Steven Webber Recorded: February 1, 2006 West Newton, Massachusetts CHM Reference number: X3438.2006 © 2006 Computer History Museum Oral History of Fernando Corbató Steven Webber: Today the Computer History Museum Oral History Project is going to interview Fernando J. Corbató, known as Corby. Today is February 1 in 2006. We like to start at the very beginning on these interviews. Can you tell us something about your birth, your early days, where you were born, your parents, your family? Fernando Corbató: Okay. That’s going back a long ways of course. I was born in Oakland. My parents were graduate students at Berkeley and then about age 5 we moved down to West Los Angeles, Westwood, where I grew up [and spent] most of my early years. My father was a professor of Spanish literature at UCLA. I went to public schools there in West Los Angeles, [namely,] grammar school, junior high and [the high school called] University High. So I had a straightforward public school education. I guess the most significant thing to get to is that World War II began when I was in high school and that caused several things to happen. I’m meandering here. There’s a little bit of a long story. Because of the wartime pressures on manpower, the high school went into early and late classes and I cleverly saw that I could get a chance to accelerate my progress. I ended up taking both early and late classes and graduating in two years instead of three and [thereby] got a chance to go to UCLA in 1943. -

A Critique of Abelson and Sussman Why Calculating Is Better Than

A critique of Abelson and Sussman - or - Why calculating is better than scheming Philip Wadler Programming Research Group 11 Keble Road Oxford, OX1 3QD Abelson and Sussman is taught [Abelson and Sussman 1985a, b]. Instead of emphasizing a particular programming language, they emphasize standard engineering techniques as they apply to programming. Still, their textbook is intimately tied to the Scheme dialect of Lisp. I believe that the same approach used in their text, if applied to a language such as KRC or Miranda, would result in an even better introduction to programming as an engineering discipline. My belief has strengthened as my experience in teaching with Scheme and with KRC has increased. This paper contrasts teaching in Scheme to teaching in KRC and Miranda, particularly with reference to Abelson and Sussman's text. Scheme is a lexically-scoped dialect of Lisp [Steele and Sussman 1978]; languages in a similar style are T [Rees and Adams 1982] and Common Lisp [Steele 1982]. KRC is a functional language in an equational style [Turner 1981]; its successor is Miranda [Turner 1985]; languages in a similar style are SASL [Turner 1976, Richards 1984], LML [Augustsson 1984], and Orwell [Wadler 1984b]. (Only readers who know that KRC stands for "Kent Recursive Calculator" will have understood the title of this paper.) There are four language features absent in Scheme and present in KRC/Miranda that are important: 1. Pattern-matching. 2. A syntax close to traditional mathematical notation. 3. A static type discipline and user-defined types. 4. Lazy evaluation. KRC and SASL do not have a type discipline, so point 3 applies only to Miranda, LML, and Orwell. -

The Evolution of Lisp

1 The Evolution of Lisp Guy L. Steele Jr. Richard P. Gabriel Thinking Machines Corporation Lucid, Inc. 245 First Street 707 Laurel Street Cambridge, Massachusetts 02142 Menlo Park, California 94025 Phone: (617) 234-2860 Phone: (415) 329-8400 FAX: (617) 243-4444 FAX: (415) 329-8480 E-mail: [email protected] E-mail: [email protected] Abstract Lisp is the world’s greatest programming language—or so its proponents think. The structure of Lisp makes it easy to extend the language or even to implement entirely new dialects without starting from scratch. Overall, the evolution of Lisp has been guided more by institutional rivalry, one-upsmanship, and the glee born of technical cleverness that is characteristic of the “hacker culture” than by sober assessments of technical requirements. Nevertheless this process has eventually produced both an industrial- strength programming language, messy but powerful, and a technically pure dialect, small but powerful, that is suitable for use by programming-language theoreticians. We pick up where McCarthy’s paper in the first HOPL conference left off. We trace the development chronologically from the era of the PDP-6, through the heyday of Interlisp and MacLisp, past the ascension and decline of special purpose Lisp machines, to the present era of standardization activities. We then examine the technical evolution of a few representative language features, including both some notable successes and some notable failures, that illuminate design issues that distinguish Lisp from other programming languages. We also discuss the use of Lisp as a laboratory for designing other programming languages. We conclude with some reflections on the forces that have driven the evolution of Lisp. -

Performance Engineering of Proof-Based Software Systems at Scale by Jason S

Performance Engineering of Proof-Based Software Systems at Scale by Jason S. Gross B.S., Massachusetts Institute of Technology (2013) S.M., Massachusetts Institute of Technology (2015) Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY February 2021 © Massachusetts Institute of Technology 2021. All rights reserved. Author: Department of Electrical Engineering and Computer Science, January 27, 2021 Certified by: Adam Chlipala, Associate Professor of Electrical Engineering and Computer Science, Thesis Supervisor Accepted by: Leslie A. Kolodziejski, Professor of Electrical Engineering and Computer Science, Chair, Department Committee on Graduate Students 2 Performance Engineering of Proof-Based Software Systems at Scale by Jason S. Gross Submitted to the Department of Electrical Engineering and Computer Science on January 27, 2021, in partial fulfillment of the requirements for the degree of Doctor of Philosophy Abstract Formal verification is increasingly valuable as our world comes to rely more on software for critical infrastructure. A significant and understudied cost of developing mechanized proofs, especially at scale, is the computer performance of proof generation. This dissertation aims to be a partial guide to identifying and resolving performance bottlenecks in dependently typed tactic-driven proof assistants like Coq. We present a survey of the landscape of performance issues in Coq, with micro- and macro-benchmarks. We describe various metrics that allow prediction of performance, such as term size, goal size, and number of binders, and note the occasional surprising lack of a bottleneck for some factors, such as total proof term size. -

Reading List

EECS 101 Introduction to Computer Science Dinda, Spring, 2009 An Introduction to Computer Science For Everyone Reading List Note: You do not need to read or buy all of these. The syllabus and/or class web page describes the required readings and what books to buy. For readings that are not in the required books, I will either provide pointers to web documents or hand out copies in class. Books David Harel, Computers Ltd: What They Really Can’t Do, Oxford University Press, 2003. Fred Brooks, The Mythical Man-month: Essays on Software Engineering, 20th Anniversary Edition, Addison-Wesley, 1995. Joel Spolsky, Joel on Software: And on Diverse and Occasionally Related Matters That Will Prove of Interest to Software Developers, Designers, and Managers, and to Those Who, Whether by Good Fortune or Ill Luck, Work with Them in Some Capacity, APress, 2004. Most content is available for free from Spolsky’s Blog (see http://www.joelonsoftware.com) Paul Graham, Hackers and Painters, O’Reilly, 2004. See also Graham’s site: http://www.paulgraham.com/ Martin Davis, The Universal Computer: The Road from Leibniz to Turing, W.W. Norton and Company, 2000. Ted Nelson, Computer Lib/Dream Machines, 1974. This book is now very rare and very expensive, which is sad given how visionary it was. Simon Singh, The Code Book: The Science of Secrecy from Ancient Egypt to Quantum Cryptography, Anchor, 2000. Douglas Hofstadter, Goedel, Escher, Bach: The Eternal Golden Braid, 20th Anniversary Edition, Basic Books, 1999. Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, 2nd Edition, Prentice Hall, 2003. -

Oral History of Brian Kernighan

Oral History of Brian Kernighan Interviewed by: John R. Mashey Recorded April 24, 2017 Princeton, NJ CHM Reference number: X8185.2017 © 2017 Computer History Museum Oral History of Brian Kernighan Mashey: Well, hello, Brian. It’s great to see you again. Been a long time. So we’re here at Princeton, with Brian Kernighan, and we want to go do a whole oral history with him. So why don’t we start at the beginning. As I recall, you’re Canadian. Kernighan: That is right. I was born in Toronto long ago, and spent my early years in the city of Toronto. Moved west to a small town, what was then a small town, when I was in the middle of high school, and then went to the University of Toronto for my undergraduate degree, and then came here to Princeton for graduate school. Mashey: And what was your undergraduate work in? Kernighan: It was in one of these catch-all courses called Engineering Physics. It was for people who were kind of interested in engineering and math and science and didn’t have a clue what they wanted to actually do. Mashey: <laughs> So how did you come to be down here? Kernighan: I think it was kind of an accident. It was relatively unusual for people from Canada to wind up in the United States for graduate school at that point, but I thought I would try something different and so I applied to six or seven different schools in the United States, got accepted at some of them, and then it was a question of balancing things like, “Well, they promised that they would get you out in a certain number of years and they promised that they would give you money,” but the question is whether, either that was true or not and I don’t know. -

Introduction to the Literature on Programming Language Design Gary T

Computer Science Technical Reports Computer Science 7-1999 Introduction to the Literature On Programming Language Design Gary T. Leavens Iowa State University Follow this and additional works at: http://lib.dr.iastate.edu/cs_techreports Part of the Programming Languages and Compilers Commons Recommended Citation Leavens, Gary T., "Introduction to the Literature On Programming Language Design" (1999). Computer Science Technical Reports. 59. http://lib.dr.iastate.edu/cs_techreports/59 This Article is brought to you for free and open access by the Computer Science at Iowa State University Digital Repository. It has been accepted for inclusion in Computer Science Technical Reports by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Introduction to the Literature On Programming Language Design Abstract This is an introduction to the literature on programming language design and related topics. It is intended to cite the most important work, and to provide a place for students to start a literature search. Keywords programming languages, semantics, type systems, polymorphism, type theory, data abstraction, functional programming, object-oriented programming, logic programming, declarative programming, parallel and distributed programming languages Disciplines Programming Languages and Compilers This article is available at Iowa State University Digital Repository: http://lib.dr.iastate.edu/cs_techreports/59 Introduction to the Literature On Programming Language Design Gary T. Leavens TR 93-01c Jan. 1993, revised Jan. 1994, Feb. 1996, and July 1999 Keywords: programming languages, semantics, type systems, polymorphism, type theory, data abstraction, functional programming, object-oriented programming, logic programming, declarative programming, parallel and distributed programming languages. -

Turing Award • John Von Neumann Medal • NAE, NAS, AAAS Fellow

15-712: Advanced Operating Systems & Distributed Systems A Few Classics Prof. Phillip Gibbons Spring 2021, Lecture 2 Today’s Reminders / Announcements • Summaries are to be submitted via Canvas by class time • Announcements and Q&A are via Piazza (please enroll) • Office Hours: – Prof. Phil Gibbons: Fri 1-2 pm & by appointment – TA Jack Kosaian: Mon 1-2 pm – Zoom links: See canvas/Zoom 2 CS is a Fast Moving Field: Why Read/Discuss Old Papers? “Those who cannot remember the past are condemned to repeat it.” - George Santayana, The Life of Reason, Volume 1, 1905 See what breakthrough research ideas look like when first presented 3 The Rise of Worse is Better Richard Gabriel 1991 • MIT/Stanford style of design: “the right thing” – Simplicity in interface 1st, implementation 2nd – Correctness in all observable aspects required – Consistency – Completeness: cover as many important situations as is practical • Unix/C style: “worse is better” – Simplicity in implementation 1st, interface 2nd – Correctness, but simplicity trumps correctness – Consistency is nice to have – Completeness is lowest priority 4 Worse-is-better is Better for SW • Worse-is-better has better survival characteristics than the-right-thing • Unix and C are the ultimate computer viruses – Simple structures, easy to port, required few machine resources to run, provide 50-80% of what you want – Programmer conditioned to sacrifice some safety, convenience, and hassle to get good performance and modest resource use – First gain acceptance, condition users to expect less, later -

Interview with Barbara Liskov ACM Turing Award Recipient 2008 Done at MIT April 20, 2016 Interviewer Was Tom Van Vleck

B. Liskov Interview 1 Interview with Barbara Liskov ACM Turing Award Recipient 2008 Done at MIT April 20, 2016 Interviewer was Tom Van Vleck Interviewer is noted as “TVV” Dr. Liskov is noted as “BL” ?? = inaudible (with timestamp) or [ ] for phonetic [some slight changes have been made in this transcript to improve the readability, but no material changes were made to the content] TVV: Hello, I’m Tom Van Vleck, and I have the honor today to interview Professor Barbara Liskov, the 2008 ACM Turing Award winner. Today is April 20th, 2016. TVV: To start with, why don’t you tell us a little bit about your upbringing, where you lived, and how you got into the business? BL: I grew up in San Francisco. I was actually born in Los Angeles, so I’m a native Californian. When I was growing up, it was definitely not considered the thing to do for a young girl to be interested in math and science. In fact, in those days, the message that you got was that you were supposed to get married and have children and not have a job. Nevertheless, I was always encouraged by my parents to take academics seriously. My mother was a college grad as well as my father, so it was expected that I would go to college, which in those times was not that common. Maybe 20% of the high school class would go on to college and the rest would not. I was always very interested in math and science, so I took all the courses that were offered at my high school, which wasn’t a tremendous number compared to what is available today. -

Role of Operating System

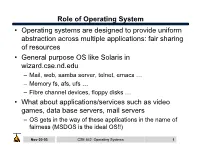

Role of Operating System • Operating systems are designed to provide uniform abstraction across multiple applications: fair sharing of resources • General purpose OS like Solaris in wizard.cse.nd.edu – Mail, web, samba server, telnet, emacs … – Memory fs, afs, ufs … – Fibre channel devices, floppy disks … • What about applications/services such as video games, database servers, mail servers – OS gets in the way of these applications in the name of fairness (MSDOS is the ideal OS!!) Nov-20-03 CSE 542: Operating Systems 1 What is the role of OS? • Create multiple virtual machines that each user can control all to themselves – IBM 360/370 … • Monolithic kernel: Linux – One kernel provides all services. – New paradigms are harder to implement – May not be optimal for any one application • Microkernel: Mach – Microkernel provides minimal service – Application servers provide OS functionality • Nanokernel: OS is implemented as application level libraries Case study: Multics • Goal: Develop a convenient, interactive, usable time-shared computer system that could support many users. – Bell Labs and GE in 1965 joined an effort underway at MIT (CTSS) on Multics (Multiplexed Information and Computing Service) mainframe timesharing system. • Multics was designed to be the Swiss army knife of OS • Multics achieved most of these goals, but it took a long time – One of the negative contributions was the development of simple yet powerful abstractions (UNIX) Multics: Designed to be the ultimate OS • “One of the overall design goals is to create a computing system which is capable of meeting almost all of the present and near-future requirements of a large computer utility. -

Corbató Transcript Final

A. M. Turing Award Oral History Interview with Fernando J. (“Corby”) Corbató by Steve Webber Plum Island, Massachusetts April 6, 2018 Webber: Hi. My name is Steve Webber. It’s April 6th, 2018. I’m here to interview Fernando Corbató – everyone knows him as “Corby” – the ACM Turing Award winner of 1990 for his contributions to computer science. So Corby, tell me about your early life, where you went to school, where you were born, your family, your parents, anything that’s interesting for the history of computer science. Corbató: Well, my parents met at University of California, Berkeley, and they… My father got his doctorate in Spanish literature – and I was born in Oakland, California – and proceeded to… he got his first position at UCLA, which was then a new campus in Los Angeles, as a professor of Spanish literature. So I of course wasn’t very conscious of much at those ages, but I basically grew up in West Los Angeles and went to public high schools, first grammar school, then junior high, and then I began high school. Webber: What were your favorite subjects in high school? Corbató: I tended to enjoy the mathematics type of subjects. As I recall, algebra was easy and enjoyable. And the… one of the results of that was that I was in high school at the time when World War II… when Pearl Harbor occurred and MIT was… rather the United States was drawn into the war. As I recall, Pearl Harbor occurred… I think it was on a Sunday, but one of the results was that it shocked the US into reacting to the war effort, and one of the results was that everything went into kind of a wartime footing.