A Neural Framework for Analyzing the Tweets of Dril

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Syllabus 2021

History 201: The History of Now Professor Patrick Iber Spring 2021 / TuTh 9:30-10:45AM / online Office Hours: Wednesday 11am-1pm, online [email protected] (608) 298-8758 TA: Meghan O’Donnell, [email protected] Sections: W 2:25-3:15; W 3:30-4:20; W 4:35-5:25 History is the study of change over time, and requires hindsight to generate insight. Most history courses stop short of the present, and historians are frequently wary of applying historical analysis to our own times, before we have access to private sources and before we have the critical distance that helps us see what matters and what is ephemeral. But recent years have given many people the sense of living through historic times, and left them clamoring for historical context that will help them to understand the momentous changes in politics, society and culture that they observe around them. This experimental course seeks to explore the last twenty years or so from a historical point of view, using the historian’s craft to gain perspective on the present. The course will consider major developments (primarily but not exclusively in U.S. history) of the last twenty years, including 9/11 and the War on Terror, the financial crisis of 2008 and its aftermath, social movements from the Tea Party to the Movement for Black Lives, and the political, cultural, and technological changes that have been created by and shaped by these events. This course is designed to be an introduction to historical reasoning, analysis, writing, and research. -

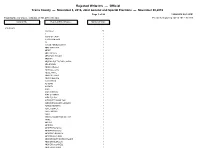

Rejected Write-Ins

Rejected Write-Ins, Official Travis County, November 8, 2016, Joint General and Special Elections, November 08, 2016 Page 1 of 28 12/08/2016 02:12 PM Total Number of Voters : 496,044 of 761,470 = 65.14% Precincts Reporting 247 of 268 = 92.16% Contest Title Rejected Write-In Names Number of Votes PRESIDENT <no name> 58 A 2 A BAG OF CRAP 1 A GIANT METEOR 1 AA 1 AARON ABRIEL MORRIS 1 ABBY MANICCIA 1 ABDEF 1 ABE LINCOLN 3 ABRAHAM LINCOLN 3 ABSTAIN 3 ABSTAIN DUE TO BAD CANDIA 1 ADA BROWN 1 ADAM CAROLLA 2 ADAM LEE CATE 1 ADELE WHITE 1 ADOLPH HITLER 2 ADRIAN BELTRE 1 AJANI WHITE 1 AL GORE 1 AL SMITH 1 ALAN 1 ALAN CARSON 1 ALEX OLIVARES 1 ALEX PULIDO 1 ALEXANDER HAMILTON 1 ALEXANDRA BLAKE GILMOUR 1 ALFRED NEWMAN 1 ALICE COOPER 1 ALICE IWINSKI 1 ALIEN 1 AMERICA DESERVES BETTER 1 AMINE 1 AMY IVY 1 ANDREW 1 ANDREW BASAIGO 1 ANDREW BASIAGO 1 ANDREW D BASIAGO 1 ANDREW JACKSON 1 ANDREW MARTIN ERIK BROOKS 1 ANDREW MCMULLIN 1 ANDREW OCONNELL 1 ANDREW W HAMPF 1 ANN WU 1 ANNA 1 ANNEMARIE 1 ANONOMOUS 1 ANONYMAS 1 ANONYMOS 1 ANONYMOUS 1 ANTHONY AMATO 1 ANTONIO FIERROS 1 ANYONE ELSE 7 ARI SHAFFIR 1 ARNOLD WEISS 1 ASHLEY MCNEILL 2 ASIKILIZAYE 1 AUSTIN PETERSEN 1 AUSTIN PETERSON 1 AZIZI WESTMILLER 1 B SANDERS 2 BABA BOOEY 1 BARACK OBAMA 5 BARAK -

In the Supreme Court of the United States

No. XX-XX In the Supreme Court of the United States KATHLEEN SEBELIUS, SECRETARY OF HEALTH AND HUMAN SERVICES, ET AL., PETITIONERS v. HOBBY LOBBY STORES, INC., ET AL. ON PETITION FOR A WRIT OF CERTIORARI TO THE UNITED STATES COURT OF APPEALS FOR THE TENTH CIRCUIT PETITION FOR A WRIT OF CERTIORARI DONALD B. VERRILLI, JR. Solicitor General Counsel of Record STUART F. DELERY Assistant Attorney General EDWIN S. KNEEDLER Deputy Solicitor General JOSEPH R. PALMORE Assistant to the Solicitor General MARK B. STERN ALISA B. KLEIN Attorneys Department of Justice Washington, D.C. 20530-0001 [email protected] (202) 514-2217 QUESTION PRESENTED The Religious Freedom Restoration Act of 1993 (RFRA), 42 U.S.C. 2000bb et seq., provides that the government “shall not substantially burden a person’s exercise of religion” unless that burden is the least restrictive means to further a compelling governmental interest. 42 U.S.C. 2000bb-1(a) and (b). The question presented is whether RFRA allows a for-profit corporation to deny its employees the health coverage of contraceptives to which the employees are otherwise entitled by federal law, based on the religious objections of the corporation’s owners. (I) PARTIES TO THE PROCEEDINGS Petitioners are Kathleen Sebelius, Secretary of Health and Human Services; the Department of Health and Human Services; Thomas E. Perez, Secretary of Labor; the Department of Labor; Jacob J. Lew, Secretary of the Treasury; and the Department of the Treasury. Respondents are Hobby Lobby Stores, Inc.; Mardel, Inc.; David Green; Barbara Green; Mart Green; Steve Green; and Darsee Lett. -

Hypersphere Anonymous

Hypersphere Anonymous This work is licensed under a Creative Commons Attribution 4.0 International License. ISBN 978-1-329-78152-8 First edition: December 2015 Fourth edition Part 1 Slice of Life Adventures in The Hypersphere The Hypersphere is a big fucking place, kid. Imagine the biggest pile of dung you can take and then double-- no, triple that shit and you still haven’t come close to one octingentillionth of a Hypersphere cornerstone. Hell, you probably don’t even know what the Hypersphere is, you goddamn fucking idiot kid. I bet you don’t know the first goddamn thing about the Hypersphere. If you were paying attention, you would have gathered that it’s a big fucking place, but one thing I bet you didn’t know about the Hypersphere is that it is filled with fucked up freaks. There are normal people too, but they just aren’t as interesting as the freaks. Are you a freak, kid? Some sort of fucking Hypersphere psycho? What the fuck are you even doing here? Get the fuck out of my face you fucking deviant. So there I was, chilling out in the Hypersphere. I’d spent the vast majority of my life there, in fact. It did contain everything in my observable universe, so it was pretty hard to leave, honestly. At the time, I was stressing the fuck out about a fight I had gotten in earlier. I’d been shooting some hoops when some no-good shithouses had waltzed up to me and tried to make a scene. -

View / Open Costello Oregon 0171A 12851.Pdf

HUMAN AND COMPUTERIZED PERSONALITY INFERENCES FROM DIGITAL FOOTPRINTS ON TWITTER by CORY K. COSTELLO A DISSERTATION Presented to the Department of Psychology and the Graduate School of the University of Oregon in partial fulfillment of the requirements for the degree of Doctor of Philosophy June 2020 DISSERTATION APPROVAL PAGE Student: Cory K. Costello Title: Human And Computerized Personality Inferences From Digital Footprints On Twitter This dissertation has been accepted and approved in partial fulfillment of the requirements for the Doctor of Philosophy degree in the Department of Psychology by: Sanjay Srivastava Chair Nicholas Allen Core Member Robert Chavez Core Member Ryan Light Institutional Representative and Kate Mondloch Interim Vice Provost and Dean of the Graduate School Original approval signatures are on file with the University of Oregon Graduate School. Degree awarded June 2020. ii © 2020 Cory K. Costello This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs (United States) License iii DISSERTATION ABSTRACT Cory K. Costello Doctor of Philosophy Department of Psychology June 2020 Title: Human And Computerized Personality Inferences From Digital Footprints On Twitter The increasing digitization of our social world has implications for personality, reputation, and their social consequences in online environments. The present dissertation is focused on how personality and reputation are reflected in digital footprints from the popular online social network Twitter, and the broader implications this has for the expression and perception of personality in online spaces. In three studies, I demonstrate that personality is reflected in the language people use in their tweets, the accounts they decide to follow, and how they construct their profile. -

Blake Deberry President And

BARCLAYS CEO ENERGY-POWER CONFERENCE SEPTEMBER 9, 2020 dril-quip.com | NYSE: DRQ CAUTIONARY STATEMENT Forward-Looking Statements The information furnished in this presentation contains “forward-looking statements” within the meaning of the federal securities laws. Forward-looking statements include the effects of the COVID-19 pandemic, and the effects of actions taken by third parties including, but not limited to, governmental authorities, customers, contractors and suppliers, in response to the COVID-19 pandemic, goals, projections, estimates, expectations, market outlook, forecasts, plans and objectives, including revenue and new product revenue, capital expenditures and other projections, project bookings, bidding and service activity, acquisition opportunities, forecasted supply and demand, forecasted drilling activity and subsea investment, liquidity, cost savings, and share repurchases and are based on assumptions, estimates and risk analysis made by management of Dril-Quip, Inc. (“Dril-Quip”) in light of its experience and perception of historical trends, current conditions, expected future developments and other factors. No assurance can be given that actual future results will not differ materially from those contained in the forward-looking statements in this presentation. Although Dril-Quip believes that all such statements contained in this presentation are based on reasonable assumptions, there are numerous variables of an unpredictable nature or outside of Dril-Quip’s control that could affect Dril-Quip’s future results and the value of its shares. Each investor must assess and bear the risk of uncertainty inherent in the forward-looking statements contained in this presentation. Please refer to Dril-Quip’s filings with the Securities and Exchange Commission (“SEC”) for additional discussion of risks and uncertainties that may affect Dril-Quip’s actual future results. -

This Relates to Agenda Item No. 2 Transportation Committee June 7, 2019

This Relates to Agenda Item No. 2 Transportation Committee June 7, 2019 From: PIO To: Clerk of the Board Subject: FW: South 125 to East 94 interchange Date: Friday, May 31, 2019 12:22:51 PM -----Original Message----- From: KEITH CLEMENTS <[email protected]> Sent: Friday, May 31, 2019 10:51 AM To: PIO <[email protected]> Subject: South 125 to East 94 interchange I would like to let you know that I am very much in favor of finishing the SR 125 south to SR 94 West interchange, so that we have a freeway to freeway connection. This is a LONG overdue project and needs to be completed as soon as possible. The congestion is a major problem during all hours of the day and really needs be addressed in the next year. I understand that funds are available to do this project and need to be earmarked to complete the connection. Thank you, Keith Clements La Mesa, CA This Relates to Agenda Item No. 2 Transportation Committee June 7, 2019 From: james rue To: Huntington, Susan; Clerk of the Board Cc: [email protected]; [email protected]; [email protected]; [email protected]; [email protected] Subject: SanDag miss use of our TAXes Date: Sunday, June 02, 2019 7:06:34 PM Hello SANDAG I'm in fear of misuse of our tax dollars by SANDAG, and I would like my opinion heard. For more than 30 years I commuted to/from downtown San Diego, I drove, I car pulled, and commuted using public transportation (bus & trolley). -

Environmental Law in New York

Developments in Federal and State Law Michael B. Gerrard, Editor ENVIRONMENTAL LAW IN NEW YORK Volume 30, No. 09 September 2019 IN THIS ISSUE: The Impossible Search for Perfect Land: Siting Renewable Generation Projects in New York State; LEGAL DEVELOPMENTS: ASBESTOS, CLIMATE CHANGE, ENERGY, HAZARDOUS SUBSTANCES, HISTORIC PRESERVATION, LAND USE, MINING The Impossible Search for Perfect Land: Siting Renewable Generation Projects in New York State Gene Kelly and Michelle Piasecki Introduction There is near universal acceptance in the United States that the Earth is warming, and that it has been doing so for at least several decades. Recognizing this fact, so-called “climate deniers” have largely shifted in recent years from a position of outright denial to a position of questioning the link between the warming of the planet and anthropogenic causes. Those who maintain that humans are not responsible for climate change, however, find themselves in a distinct minority, particularly in the world’s scientific community, as 97% of published scientists -

President - Presidential Seal (2)” of the Philip Buchen Files at the Gerald R

The original documents are located in Box 51, folder “President - Presidential Seal (2)” of the Philip Buchen Files at the Gerald R. Ford Presidential Library. Copyright Notice The copyright law of the United States (Title 17, United States Code) governs the making of photocopies or other reproductions of copyrighted material. Gerald R. Ford donated to the United States of America his copyrights in all of his unpublished writings in National Archives collections. Works prepared by U.S. Government employees as part of their official duties are in the public domain. The copyrights to materials written by other individuals or organizations are presumed to remain with them. If you think any of the information displayed in the PDF is subject to a valid copyright claim, please contact the Gerald R. Ford Presidential Library. Digitized from Box 51 of the Philip Buchen Files at the Gerald R. Ford Presidential Library THE WHITE HOUSE WASHINGTON March 17, 1975 Dear Mr. Crump: I regret the delay in giving you a final response to your letter of February 28 in which you requested permission on behalf of the National Geographic Society to use the Seal of the President on the dust jacket of Our Country's Presidents. While I appreciate the positions you set forth in our meeting as well as your letter, after conferring with Philip Buchen, Counsel to the President, we have concluded that the terms of Executive Order 11649 do not permit us to grant your request. This office has not granted permission to place the Seal on the cover of any books dealing with the Presidency. -

Predicting Mental Health from Followed Accounts

Predicting Mental Health from Followed Accounts 1 Running Head: Predicting Mental Health from Followed Accounts Predicting Mental Health from Followed Accounts on Twitter Cory K. Costello, Sanjay Srivastava, Reza Rejaie, & Maureen Zalewski University of Oregon Author note: Cory K. Costello, Sanjay Srivastava, and Maureen Zalewski, University of Oregon, Department of Psychology, 1227 University of Oregon, Eugene, OR, 97403. Reza Rejaie, University of Oregon, Department of Computer and Information Science, 1202 University of Oregon, Eugene, OR, 97403. Correspondence concerning this article should be addressed to Cory K. Costello, who is now at the Department of Psychology, University of Michigan, 530 Church Street, University of Michigan, Ann Arbor, MI 48109, email: [email protected] Study materials, analysis code, and registrations (including pre-registered Stage 1 protocol) can be found at the project site: https://osf.io/54qdm/ This material is based on work supported by the National Institute of Mental Health under Grant # 1 R21 MH106879-01 and the National Science Foundation under NSF GRANT# 1551817. Abstract The past decade has seen rapid growth in research linking stable psychological characteristics (i.e., traits) to digital records of online behavior in Online Social Networks (OSNs) like Facebook and Twitter, which has implications for basic and applied behavioral sciences. Findings indicate that a broad range of psychological characteristics can be predicted from various behavioral residue online, including language used in posts on Facebook (Park et al., 2015) and Twitter (Reece et al., 2017), and which pages a person ‘likes’ on Facebook (e.g., Kosinski, Stillwell, & Graepel, 2013). -

Conversation Contents

Conversation Contents Fwd: Shell Alaska - today NTSB will release its report on Kulluk incident "Colander, Brandi" <[email protected]> From: "Colander, Brandi" <[email protected]> Sent: Wed Aug 19 2015 16:16:49 GMT-0600 (MDT) To: Richard Cardinale <[email protected]> Subject: Fwd: Shell Alaska - today NTSB will release its report on Kulluk incident ---------- Forwarded message ---------- From: <[email protected]> Date: Thu, May 28, 2015 at 9:17 AM Subject: Shell Alaska - today NTSB will release its report on Kulluk incident To: [email protected], [email protected], [email protected] Folks - Perhaps you are aware, but just in case you aren’t. Today the National Transportation Safety Board (NTSB) is expected to release its report on the Kulluk tow incident. Sara Sara Glenn Director, Federal Government Relations & Senior Counsel ∙ Shell Oil Company ∙ 1050 K Street NW Suite 700 ∙ Washington DC 20001-4449 ∙ ph 202 466 1400 ∙ cell 202 299 6472 -- Brandi A. Colander Deputy Assistant Secretary Land & Minerals Management U.S. Department of the Interior Conversation Contents Fwd: Shell Alaska - Seattle /1. Fwd: Shell Alaska - Seattle/1.1 IMG_1958.jpg /1. Fwd: Shell Alaska - Seattle/1.2 image1.jpg "Colander, Brandi" <[email protected]> From: "Colander, Brandi" <[email protected]> Sent: Wed Aug 19 2015 16:17:43 GMT-0600 (MDT) To: Richard Cardinale <[email protected]> Subject: Fwd: Shell Alaska - Seattle Attachments: IMG_1958.jpg image1.jpg ---------- Forwarded message ---------- From: <[email protected]> Date: Thu, May 14, 2015 at 6:12 PM Subject: Shell Alaska - Seattle To: [email protected], [email protected], [email protected] Brandi, Mike, Celina - Brief update on Seattle. -

NYU Team 2.Pdf

Half-Packet by NYU Team 2 et al. 1. A player with this surname surpassed Greg Kelser for most rebounds with one college’s team. In the last seconds of overtime during game 6 of one series, Chris Bosh blocked a three-point shot taken by a player with this surname that would have tied the game 103-103. After playing in Slovenia during the 2011 lockout, that player with this surname became a starter after (*) Manu Ginóbili returned to one team as a sixth man. In a game against the Grizzlies, a player with this surname was the first in NBA history to score a triple-double without scoring 10 points. That shorter-than-average center with this surname notorious for his physicality has 9 seasons (?) played with the Splash Brothers. For 10 points, name this surname of the shooting guard who won back-to-back titles with the Raptors and Lakers, Danny, and of Warriors defensive all-star Draymond. ANSWER: Green 2. This author wrote a two-person play in which a parole officer talks with a woman in prison for killing a police officer during a robbery. At the end of Act I of a play by this author, a man receives a phone call telling him that she lied about a realtor failing to close the deal on a house just to get him to come to a surprise party. This author wrote a play in which Carol threatens to accuse her professor of (*) rape unless he removes his books from the curriculum. In a play by this author, Don and Teach try to steal back the title object that Don sold for ninety dollars.