Recommended publications

District Saharanpur 82

BASE LINE SURVEY IN THE MINORITY CONCENTRATED DISTRICTS OF UTTAR PRADESH (A Report of Saharanpur District)

Sponsored by: Ministry of Minority Affairs, Government of India, New Delhi

Study conducted by: Dr. R. C. Tyagi, Giri Institute of Development Studies, Sector-O, Aliganj Housing Scheme, Lucknow-226 024

CONTENTS

- DISTRICT MAP – SAHARANPUR
- EXECUTIVE SUMMARY
- CHAPTER I: OUTLINE OF THE STUDY
  - 1.1 About the study
  - 1.2 Objective of the study
  - 1.3 Methodology and Sample design
  - 1.4 Tools
- CHAPTER II: DEVELOPMENT STATUS IN SAHARANPUR DISTRICT
  - 2.1 Introduction
  - 2.2 Demographic Status
  - 2.3 Demographic Status by Religion
  - 2.4 Structure and Growth in Employment
  - 2.5 Unemployment
  - 2.6 Land Use Pattern
  - 2.7 Coverage of Irrigation and Sources
  - 2.8 Productivity of Major Crops
  - 2.9 Livestock
  - 2.10 Industrial Development
  - 2.11 Development of Economic Infrastructure
  - 2.12 Rural Infrastructure
  - 2.13 Educational Infrastructure
  - 2.14 Health Infrastructure
  - 2.15 Housing Amenities in Saharanpur District
  - 2.16 Sources of Drinking Water
  - 2.17 Sources of Cooking Fuel
  - 2.18 Income and Poverty Level
- CHAPTER III: DEVELOPMENT STATUS AT THE VILLAGE LEVEL
  - 3.1 Population
  - 3.2 Occupational Pattern
  - 3.3 Land use Pattern
  - 3.4 Sources of Irrigation
  - 3.5 Roads and Electricity
  - 3.6 Drinking Water
  - 3.7 Toilet Facility
  - 3.8 Educational Facility
  - 3.9 Students Enrollments
  - 3.10 Physical Structure of Schools
  - 3.11 Private Schools and Preferences of the People for Schools
  - 3.12 Health Facility

Internet Shutdowns in India Since 2012*

INTERNET SHUTDOWNS IN INDIA SINCE 2012*

| S. No. | Year | State | Region | Reason |
|---|---|---|---|---|
| 1 | 2017 | Jammu and Kashmir | Pulwama | Mobile Internet services were suspended yet again in Pulwama district of Kashmir on 16th August, 2017 to prevent rumour mongering after a Lashkar commander was killed in a gunfight with the security forces. |
| 2 | 2017 | Jammu and Kashmir | Kashmir Valley | Mobile and broadband Internet services were suspended in the Kashmir valley in the morning of 15th August, 2017 as a precautionary measure on Independence Day. Services were reportedly restored later in the day. |
| 3 | 2017 | Jammu and Kashmir | Shopian, Kulgam | Internet services were suspended in Shopian and Kulgam district of Kashmir on 13th August, 2017 to prevent the spreading of information after three Hizbul Mujahideen militants including the operations commander, and two army men were killed in an encounter. |
| 4 | 2017 | Jammu and Kashmir | Pulwama | Internet services were suspended in Pulwama district of Kashmir on 9th August, 2017 as a precautionary measure after three militants were killed in a gunfight with the Government forces in the Tral township. However, the services on state-owned Bharat Sanchar Nigam Limited remained functional. |
| 5 | 2017 | Jammu and Kashmir | Baramulla | Mobile Internet services were suspended in Baramulla district of Kashmir as a precautionary measure on 5th August, 2017 after three LeT militants were killed in an encounter with the security forces in the Sopore town of the district. |
| 6 | 2017 | Jammu and Kashmir | Kashmir Valley | Mobile Internet services were suspended yet again across Kashmir on 1st August, 2017 as a precautionary measure fearing clashes after the killing of Lashkar-e-Toiba commander Abu Dujana and his aide in an encounter with the security forces. |

Section-VIII : Laboratory Services

Section-VIII: Laboratory Services

8. Laboratory Services

8.1 Haemoglobin Test

State level: As can be seen from the graph, the haemoglobin (Hb) test is carried out at almost every FRU studied. However, 10 percent of medical colleges do not provide the basic Hb test.

Division wise: As the graph shows, 96 percent of the FRUs on average offer this service, and in as many as 13 divisions 100 percent of the FRUs contacted provide the basic Hb test. The haemoglobin test is not available at District Women Hospital (Mau), District Women Hospital (Budaun), CHC Partawal (Maharajganj), CHC Kasia (Kushinagar), CHC Ghatampur (Kanpur Nagar) and CHC Dewa (Barabanki).

8.2 CBC Test

State level: The Complete Blood Count (CBC) test is offered at very few FRUs. None of the sub-divisional hospitals has this facility, and only 25 percent of the BMCs, 42 percent of the CHCs and less than half of the DWHs contacted offer it.

Division wise: As per the graph above, only 46 percent of the 206 FRUs studied across the state offer the CBC test. None of the FRUs in Jhansi division has this service. While 29 percent of the health facilities in Moradabad division offer it, most others are only a shade better. Mirzapur (83%), followed by Gorakhpur (73%), has the highest share of FRUs with this facility. The CBC test is not available at Veerangna Jhalkaribai Mahila Hosp Lucknow (Lucknow), Sub Divisional Hospital Sikandrabad, Bullandshahar, M.K.R. HOSPITAL (Kanpur Nagar), LBS Combined Hosp (Varanasi), -

Missing Lawyer at Risk of Torture

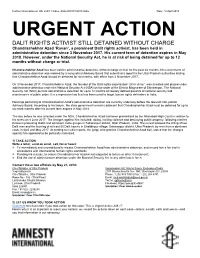

Further information on UA: 248/17 Index: ASA 20/8191/2018 India Date: 10 April 2018 URGENT ACTION DALIT RIGHTS ACTIVIST STILL DETAINED WITHOUT CHARGE Chandrashekhar Azad ‘Ravan’, a prominent Dalit rights activist, has been held in administrative detention since 3 November 2017. His current term of detention expires in May 2018. However, under the National Security Act, he is at risk of being detained for up to 12 months without charge or trial. Chandrashekhar Azad has been held in administrative detention, without charge or trial, for the past six months. His current term of administrative detention was ordered by a non-judicial Advisory Board that submitted a report to the Uttar Pradesh authorities stating that Chandrashekhar Azad should be detained for six months, with effect from 2 November 2017. On 3 November 2017, Chandrashekhar Azad, the founder of the Dalit rights organisation “Bhim Army”, was arrested and placed under administrative detention under the National Security Act (NSA) on the order of the District Magistrate of Saharanpur. The National Security Act (NSA) permits administrative detention for up to 12 months on loosely defined grounds of national security and maintenance of public order. It is a repressive law that has been used to target human rights defenders in India. Hearings pertaining to Chandrashekhar Azad’s administrative detention are currently underway before the relevant non-judicial Advisory Board. According to his lawyer, the state government remains adamant that Chandrashekhar Azad must be detained for up to six more months after his current term expires in May 2018. The day before he was arrested under the NSA, Chandrashekhar Azad had been granted bail by the Allahabad High Court in relation to his arrest on 8 June 2017. -

Current Condition of the Yamuna River - an Overview of Flow, Pollution Load and Human Use

Current condition of the Yamuna River - an overview of flow, pollution load and human use. Deepshikha Sharma and Arun Kansal, TERI University. Introduction: The Yamuna is a sub-basin of the Ganga river system. Out of the total catchment area of 861404 sq. km of the Ganga basin, the Yamuna River and its catchment together contribute a total area of 345848 sq. km, which is 40.14% of the total Ganga River Basin (CPCB, 1980-81; CPCB, 1982-83). It is a large basin covering seven Indian states. The river water is used for both abstractive and in-stream uses such as irrigation, domestic water supply and industrial supply. It has been subjected to over-exploitation, in both quantity and quality. Given that a large population is dependent on the river, it is important to preserve its water quality. The river is polluted by both point and non-point sources, where the National Capital Territory (NCT) of Delhi is the major contributor, followed by Agra and Mathura. Approximately 85% of the total pollution is from domestic sources. The condition deteriorates further due to significant water abstraction, which reduces the dilution capacity of the river. The stretch between Wazirabad barrage and the Chambal river confluence is critically polluted, and the 22 km Delhi stretch is the most polluted of all. In order to restore the quality of the river, the Government of India (GoI) initiated the Yamuna Action Plan (YAP) in 1993 and later YAP II in the year 2004 (CPCB, 2006-07). Yamuna river basin: River Yamuna (Figure 1) is the largest tributary of the River Ganga. The main stream of the river Yamuna originates from the Yamunotri glacier near Bandar Punch (38° 59' N 78° 27' E) in the Mussourie range of the lower Himalayas at an elevation of about 6320 meters above mean sea level in the district Uttarkashi (Uttaranchal). -

LOK SABHA UNSTARRED QUESTION NO. 1326 to BE ANSWERED on 10.02.2020 REGARDING PROMOTION of CNG and PNG List of Geographical Areas Covered Till 10Th CGD Bidding Round

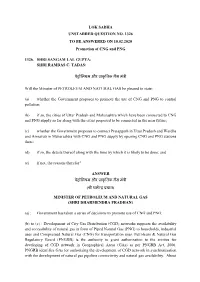

LOK SABHA UNSTARRED QUESTION NO. 1326 TO BE ANSWERED ON 10.02.2020 Promotion of CNG and PNG 1326. SHRI SANGAM LAL GUPTA: SHRI RAMDAS C. TADAS: Will the Minister of PETROLEUM AND NATURAL GAS be pleased to state: (a) whether the Government proposes to promote the use of CNG and PNG to control pollution; (b) if so, the cities of Uttar Pradesh and Maharashtra which have been connected to CNG and PNG supply so far along with the cities proposed to be connected in the near future; (c) whether the Government proposes to connect Pratapgarh in Uttar Pradesh and Wardha and Amravati in Maharashtra with CNG and PNG supply by opening CNG and PNG stations there; (d) if so, the details thereof along with the time by which it is likely to be done; and (e) if not, the reasons therefor? ANSWER MINISTER OF PETROLEUM AND NATURAL GAS (SHRI DHARMENDRA PRADHAN) (a): Government has taken a series of decisions to promote use of CNG and PNG. (b) to (e): Development of City Gas Distribution (CGD) networks supports the availability and accessibility of natural gas in the form of Piped Natural Gas (PNG) to households and industrial uses, and Compressed Natural Gas (CNG) for transportation uses. Petroleum & Natural Gas Regulatory Board (PNGRB) is the authority to grant authorization to entities for developing CGD networks in Geographical Areas (GAs) as per the PNGRB Act, 2006. PNGRB identifies GAs for authorizing the development of CGD networks in synchronization with the development of natural gas pipeline connectivity and natural gas availability. -

Copyright by Mohammad Raisur Rahman 2008

Copyright by Mohammad Raisur Rahman 2008

The Dissertation Committee for Mohammad Raisur Rahman certifies that this is the approved version of the following dissertation: Islam, Modernity, and Educated Muslims: A History of Qasbahs in Colonial India

Committee: Gail Minault, Supervisor; Cynthia M. Talbot; Denise A. Spellberg; Michael H. Fisher; Syed Akbar Hyder

Islam, Modernity, and Educated Muslims: A History of Qasbahs in Colonial India by Mohammad Raisur Rahman, B.A. Honors; M.A.; M.Phil. Dissertation Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. The University of Texas at Austin, August 2008

Dedication: This dissertation is dedicated to the fond memories of my parents, Najma Bano and Azizur Rahman, and to Kulsum

Acknowledgements: Many people have assisted me in the completion of this project. This work could not have taken its current shape in the absence of their contributions. I thank them all. First and foremost, I owe my greatest debt of gratitude to my advisor Gail Minault for her guidance and assistance. I am grateful for her useful comments, sharp criticisms, and invaluable suggestions on the earlier drafts, and for her constant encouragement, support, and generous time throughout my doctoral work. I must add that it was her path-breaking scholarship in South Asian Islam that inspired me to come to Austin, Texas all the way from New Delhi, India. While it brought me an opportunity to work under her supervision, I benefited myself further at the prospect of working with some of the finest scholars and excellent human beings I have ever known. -

Uttar Pradesh District Gazetteers: Muzaffarnagar

GAZETTEER OF INDIA, UTTAR PRADESH, District Muzaffarnagar

UTTAR PRADESH DISTRICT GAZETTEERS: MUZAFFARNAGAR

DANGLI PRASAD VARUN, I.A.S., State Editor

Published by the Government of Uttar Pradesh (Department of District Gazetteers, U. P., Lucknow) and Printed by Superintendent Printing & Stationery, U. P., at the Government Press, Rampur, 1989. Price Rs. 52.00

PREFACE

Earlier accounts regarding the Muzaffarnagar district are E. T. Atkinson's Statistical, Descriptive and Historical Account of the North-Western Provinces of India, Vol. II, (1875), various Settlement Reports of the region and H. R. Nevill's Muzaffarnagar : A Gazetteer (Allahabad, 1903), and its supplements. The present Gazetteer of the district is the twenty-eighth in the series of revised District Gazetteers of the State of Uttar Pradesh which are being published under a scheme jointly sponsored and financed by the Union and the State Governments. A bibliography of the published works used in the preparation of this Gazetteer appears at its end. The census data of 1961 and 1971 have been made the basis for the statistics mentioned in the Gazetteer. I am grateful to the Chairman and members of the State Advisory Board, Dr P. N. Chopra, Editor, Gazetteers, Central Gazetteers Unit, Ministry of Education and Social Welfare, Government of India, New Delhi, and to all those officials and non-officials who have helped in the bringing out of this Gazetteer.

D. P. VARUN, LUCKNOW : November 8, 1976

ADVISORY BOARD

1. Sri Swami Prasad Singh, Revenue Minister, Government of Uttar Pradesh (Chairman)
2. Sri G. C. Chaturvedi, Commissioner-cum-Secretary, Revenue Department (Vice-Chairman)
3. -

CUG No. / Email ID of JAIL OFFICIALS of up Sl.N Name of Institution Designation Mobile N0

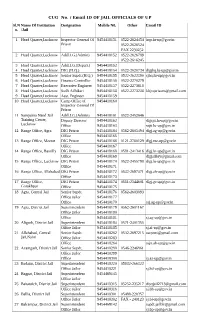

CUG No. / Email ID OF JAIL OFFICIALS OF UP

| Sl. No. | Name of Institution / Jail | Designation | Mobile No. | Other | Email ID |
|---|---|---|---|---|---|
| 1 | Head Quarter, Lucknow | Inspector General Of Prison | 9454418151 | 0522-2624454, 0522-2626524, FAX 2230252 | [email protected] |
| 2 | Head Quarter, Lucknow | Addl.I.G.(Admin) | 9454418152 | 0522-2626789, 0522-2616245 | |
| 3 | Head Quarter, Lucknow | Addl.I.G.(Depart.) | 9454418153 | | |
| 4 | Head Quarter, Lucknow | DIG (H.Q.) | 9454418154 | 0522-2620734 | [email protected] |
| 5 | Head Quarter, Lucknow | Senior Supdt.(H.Q.) | 9454418155 | 0522-2622390 | [email protected] |
| 6 | Head Quarter, Lucknow | Finance Controller | 9454418156 | 0522-2270279 | |
| 7 | Head Quarter, Lucknow | Executive Engineer | 9454418157 | 0522-2273618 | |
| 8 | Head Quarter, Lucknow | Sodh Adhikari | 9454418158 | 0522-2273238 | [email protected] |
| 9 | Head Quarter, Lucknow | Asst. Engineer | 9454418159 | | |
| 10 | Head Quarter, Lucknow | Camp Office of Inspector General Of Prison | 9454418160 | | |
| 11 | Sampurna Nand Jail Training Center, Lucknow | Addl.I.G.(Admin) | 9454418161 | 0522-2452646 | |
| | | Deputy Director | 9454418162 | | [email protected] |
| | | Office | 9454418163 | | [email protected] |
| 12 | Range Office, Agra | DIG Prison | 9454418164 | 0562-2605494 | [email protected] |
| | | Office | 9454418165 | | |
| 13 | Range Office, Meerut | DIG Prison | 9454418166 | 0121-2760129 | [email protected] |
| | | Office | 9454418167 | | |
| 14 | Range Office, Bareilly | DIG Prison | 9454418168 | 0581-2413416 | [email protected] |
| | | Office | 9454418169 | | [email protected] |
| 15 | Range Office, Lucknow | DIG Prison | 9454418170 | 0522-2455798 | [email protected] |
| | | Office | 9454418171 | | |
| 16 | Range Office, Allahabad | DIG Prison | 9454418172 | 0532-2697471 | [email protected] |
| | | Office | 9454418173 | | |
| 17 | Range Office, Gorakhpur | DIG Prison | 9454418174 | 0551-2344601 | [email protected] |
| | | Office | 9454418175 | | |
| 18 | Agra, Central Jail | Senior Supdt. | | | |

GENERAL SR Obtain Total Marks Date of Name Father's Name Address Mobileno Refno

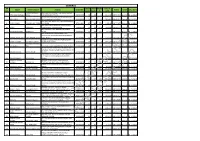

GENERAL

| SR NO. | Name | Father's Name | Address | MobileNo | Obtain Marks | Total Marks | Marks % | Date of Birth | RefNo. | Caste | CALL DATE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | ATUL KUMAR SINGH | VIKRAM BAHADUR SINGH | VILL MALTHUA SHAHJOT POST DARJI KUWAN DIST GONDA PIN 271123 | 8922028254 | 460 | 500 | 92 | 18.Jun.97 | MBD-21517 | General | 26-11-2018 |
| 3 | AMIT SINGH | RAM SUMRAN SINGH | vill and post musmariya distt jalaun pincode - 285123 mob.no.9454420654 | 9454420654 | 455 | 500 | 91 | 10.Feb.99 | MBD-23893 | General | 26-11-2018 |
| 8 | GAJRAJ SINGH | CHANDRA PAL SINGH | VILL ILNA POST BALKA DISTT BULANDSHAHR UP PIN 203409 | 9720110011 | 878 | 1000 | 87.8 | 14.Mar.92 | MBD-22687 | General | 26-11-2018 |
| 10 | Vikash | Jaibhagwan | Vpo Sasroli, tehsil Matanhail, district Jhajjar. State haryana. Pincode 124106. | 7988483198 | 349 | 400 | 87.25 | 01.Jan.01 | MBD-22439 | General | 26-11-2018 |
| 11 | ANURAG AWASTHI | MUNNU LAL | VILL-MIGHAULI POST HARPALPUR HARDOI 241402 | 7755066511 | 434 | 500 | 86.8 | 13.Jul.97 | MBD-20191 | General | 26-11-2018 |
| 12 | DHARMENDRA SINGH | UMASHANKAR SINGH | VILL PACHDEORA DIWAN NAGAR POST USAWAN TEH DATAGANJ DISTT BUDAUN PIN CODE 243631 | 9997056452 | 516 | 600 | 86 | 19.Jan.86 | MBD-20157 | General | 26-11-2018 |
| 13 | ASHISH SHARMA | AVDHESH SHARMA | HOUSE NO 232/C GAYATRI NAGAR LINEPAR MORADABAD 244001 | 9639585736 | 430 | 500 | 86 | 09.Aug.98 | MBD-31118 | General | 26-11-2018 |
| 15 | sheeba khan | mohammad shamim khan | h.no. 438k, sisora manjil lodhipur shahjahanpur | 7703074904 | 429 | 500 | 85.8 | 04.Sep.98 | MBD-21178 | General | 26-11-2018 |
| 16 | ARVIND KUMAR | RAJ BAHADUR | VILLAGE NAGLA HUSAIN POST KUNDI TEHSIL SAHABAD THANA PACHDEVRA DIST HARDOI PIN 241124 | 9410842633 | 428 | 500 | 85.6 | 01.Aug.94 | MBD-30935 | General | 26-11-2018 |
| 18 | MANOJ KUMAR | ATAR SINGH | VILL NAGLA AZAN AKBARBAS POST UMRARA TEH DIBAI DISTT BULANDSHAHR PIN CODE 202393 | 9719340030 | 426 | 500 | 85.2 | 01.Jul.93 | MBD-24597 | General | 26-11-2018 |
| 23 | SHRAVAN KUMAR JAISWAL | MADAN LAL | GANESH VIHAR COLONY NEAR RAILWAY STATION CIVIL LINES BUDAUN PIN 243601 | 8218208426 | 422 | 500 | 84.4 | 02.Feb.81 | MBD-34105 | General | 26-11-2018 |
| 25 | NARENDRA SINGH | SUGARPAL SINGH | KARAITHI TEH SHAHABAD DISTT RAMPUR 244922 | 9458734188 | 421 | 500 | 84.2 | 15.Jul.95 | MBD-33822 | General | 26-11-2018 |
| 27 | AKASH SHARMA | RISHIPAL SHARMA | VILL-LAKRI,LAKRI FAZALPUR MORADABAD. | | | | | | | | |

Curriculum Vitae

Dr. Sri Niwas, FNASc, FASc, FNA, Professor of Geophysics, Department of Earth Sciences, Indian Institute of Technology Roorkee, Roorkee – 247667, India. Tel. +91-1332 285570; Fax. +91-1332 273560

IITR Campus, Roorkee. Tel. +91-1332 275739 and 285579. Email: [email protected]

CURRICULUM VITAE

Father's Name: Late Sri Ram Adhar Pandey

Date of birth: July 04, 1946

Permanent address: Village & Post-Rakahat, District – Gorakhpur, UP

Academic Qualification: Ph.D. (Geophysics) 1974, M.Sc. (Geophysics) 1968, B.Sc. (Hons.) 1966, all from Banaras Hindu University, Varanasi; High School (10th) 1962, Intermediate (10+2) 1964, UP Board, Allahabad.

Position held: Professor of Geophysics (17.4.90 – contd.), Reader (17.4.80-6.11.89), Lecturer (14.2.77-16.4.80), Scientist (CSIR Pool, 21.12.76-13.3.77), University of Roorkee, Roorkee; Professor and Chairman (7.11.89-17.6.92, on leave from University of Roorkee), Kurukshetra University, Kurukshetra. Note: the period from 1968 to 20.12.76 was spent as Research Scholar, Junior Research Fellow, Senior Research Fellow and Post Doctoral Fellow at Banaras Hindu University and University of Roorkee.

Awards/Honors: Shanti Swarup Bhatnagar (SSB) Prize, CSIR, Government of India, 1991; Khosla Annual Research Prizes, University of Roorkee, 1987, 1988, 1990; Fellow, Indian National Science Academy (FNA), New Delhi; Fellow, Indian Academy of Science (FASc), Bangalore; Fellow, National Academy of Science (India) (FNASc), Allahabad; Fellow, Indian Geophysical Union, Hyderabad; Fellow, Association of Exploration Geophysicists, Hyderabad.

Specialization: Inversion of Geophysical Data; Geoelectromagnetism; Geohydrology (Exploration, development and management of groundwater); Exploration Geophysics.

Research Milestones: Research Papers (80)-Appendix B; Ph.D. -

Status of River Ganga and Action Plan to Improve Its Water Quality in Phase-II (District Unnao D/S to District Balia) for Chamber Meeting at NGT

Status of River Ganga and Action Plan to improve its water quality in Phase-II (District Unnao D/S to District Balia) for Chamber Meeting at NGT on 15 September, 2017. UP Pollution Control Board, TC-12V, Vibhuti Khand, Gomti Nagar, Lucknow. 01-09-17

Introduction: River Ganga enters U.P. in District Bijnor and, after passing through the major districts Meerut, Hapur, Bulandshahar, Aligarh, Kanpur, Allahabad, Varanasi and Balia, continues into Bihar. The Hon'ble National Green Tribunal, New Delhi is also monitoring the progress of improvement of river Ganga in a phased manner. After hearing the steps being taken by the different authorities responsible for improving the water quality of river Ganga, the Hon'ble NGT passed an order dated 13-07-2017 for Segment-B, from Haridwar D/s to Unnao. The main action required in this segment is to act on the 86 identified drains meeting the Ganga and its tributaries: treating the drains as per their quality, recycling the treated water, discharging the remaining treated water into the river as per the prescribed standards, and maintaining E-flow in every stretch of the Ganga and its tributaries. Similar steps will be required from the different stakeholders mentioned in the Hon'ble NGT order to improve river Ganga water quality in Phase-II, from Unnao D/s to Balia, a total length of approx. 600 km.

Main cities on the banks of river Ganga and its tributaries from D/s Unnao to the U.P. border (Phase-II): a) Ganga: Fatehpur, Raebareli, Allahabad, Mirzapur, Varanasi, Ghazipur, Balia. b) Yamuna and its tributaries (Hindon, Kali West): Yamuna: Saharanpur, Muzaffarnagar, Baghpat, Ghaziabad, Gautam Budh Nagar, Bulandshahar, Mathura, Agra, Firozabad, Etawah, Auraiya, Kalpi (Jalaun), Fatehpur, Allahabad, Hamirpur, Banda.