Notes for Math 450 Lecture Notes 2

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Math Preliminaries

Preliminaries

We establish here a few notational conventions used throughout the text.

Arithmetic with ∞. We shall sometimes use the symbols “∞” and “−∞” in simple arithmetic expressions involving real numbers. The interpretation given to such expressions is the usual, natural one; for example, for all real numbers x, we have −∞ < x < ∞, x + ∞ = ∞, x − ∞ = −∞, ∞ + ∞ = ∞, and (−∞) + (−∞) = −∞. Some such expressions have no sensible interpretation (e.g., ∞ − ∞).

Logarithms and exponentials. We denote by log x the natural logarithm of x. The logarithm of x to the base b is denoted log_b x. We denote by e^x the usual exponential function, where e ≈ 2.71828 is the base of the natural logarithm. We may also write exp[x] instead of e^x.

Sets and relations. We use the symbol ∅ to denote the empty set. For two sets A, B, we use the notation A ⊆ B to mean that A is a subset of B (with A possibly equal to B), and the notation A ⊊ B to mean that A is a proper subset of B (i.e., A ⊆ B but A ≠ B); further, A ∪ B denotes the union of A and B, A ∩ B the intersection of A and B, and A \ B the set of all elements of A that are not in B. For sets S_1, ..., S_n, we denote by S_1 × · · · × S_n the Cartesian product of S_1, ..., S_n, that is, the set of all n-tuples (a_1, ..., a_n), where a_i ∈ S_i for i = 1, ..., n. We use the notation S^{×n} to denote the Cartesian product of n copies of a set S, and for x ∈ S, we denote by x^{×n} the element of S^{×n} consisting of n copies of x.
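The conventions for arithmetic with ∞ can be checked informally with floating-point infinities. This is only a sketch: IEEE 754 floats are assumed here as a stand-in for the extended real numbers, and the sample value `x` is arbitrary. The expression with no sensible interpretation, ∞ − ∞, shows up as "not a number".

```python
import math

x = 42.0  # any finite real number; the value is an arbitrary choice

checks = [
    -math.inf < x < math.inf,            # −∞ < x < ∞
    x + math.inf == math.inf,            # x + ∞ = ∞
    x - math.inf == -math.inf,           # x − ∞ = −∞
    math.inf + math.inf == math.inf,     # ∞ + ∞ = ∞
    (-math.inf) + (-math.inf) == -math.inf,  # (−∞) + (−∞) = −∞
]

# ∞ − ∞ has no sensible value; floating point reports NaN for it.
undefined = math.inf - math.inf
```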

Sets, Functions

Sets

Informally: A set is a collection of (mathematical) objects, with the collection treated as a single mathematical object. Examples: • real numbers, • complex numbers, C • integers, • all students in our class

Defining Sets. Sets can be defined directly: e.g. {1,2,4,8,16,32,…}, {CSC1130,CSC2110,…}. Order and number of occurrences are not important, e.g. {A,B,C} = {C,B,A} = {A,A,B,C,B}. A set can be an element of another set: {1,{2},{3,{4}}}.

Defining Sets by Predicates. The set of elements, x, in A such that P(x) is true: {x ∈ A | P(x)}. The set of prime numbers:

Commonly Used Sets
• N = {0, 1, 2, 3, …}, the set of natural numbers
• Z = {…, -2, -1, 0, 1, 2, …}, the set of integers
• Z+ = {1, 2, 3, …}, the set of positive integers
• Q = {p/q | p ∈ Z, q ∈ Z, and q ≠ 0}, the set of rational numbers
• R, the set of real numbers

Special Sets
• Empty set (null set): a set that has no elements, denoted by ∅ or {}.
• Example: The set of all positive integers that are greater than their squares is an empty set.
• Singleton set: a set with one element.
• Compare ∅ and {∅}:
– ∅: an empty set. Think of this as an empty folder.
– {∅}: a set with one element. The element is an empty set. Think of this as a folder with an empty folder in it.

Venn Diagrams
• Represent sets graphically.
• The universal set U, which contains all the objects under consideration, is represented by a rectangle.
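The points above about order, repetition, the difference between ∅ and {∅}, and set-builder notation can be tried out with Python's built-in sets. This is a minimal sketch; the helper `is_prime` and the bound 50 are illustrative assumptions, not part of the excerpt.

```python
# Order and number of occurrences do not matter for set equality.
a = {"A", "B", "C"}
b = {"C", "B", "A"}
c = set(["A", "A", "B", "C", "B"])
all_equal = (a == b) and (b == c)

# The empty set versus the set whose only element is the empty set.
# frozenset is used because set elements must be hashable.
empty = frozenset()
folder_with_empty_folder = frozenset({empty})


def is_prime(n):
    """Hypothetical predicate P(x): True when n is a prime number."""
    if n < 2:
        return False
    return all(n % d != 0 for d in range(2, int(n ** 0.5) + 1))


# Set-builder notation {x in A | P(x)} as a set comprehension,
# with A taken to be {0, 1, ..., 49} for illustration.
A = range(50)
primes_below_50 = {x for x in A if is_prime(x)}
```

Here `len(empty)` is 0 while `len(folder_with_empty_folder)` is 1, which mirrors the empty-folder analogy.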

Random Variable = a Real-Valued Function of an Outcome X = F(Outcome)

Random Variables (Chapter 2)

Random variable = A real-valued function of an outcome, X = f(outcome). Domain of X: sample space of the experiment.

Ex: Consider an experiment consisting of 3 Bernoulli trials. Bernoulli trial = Only two possible outcomes: success (S) or failure (F).
• “IF” statement: if … then “S” else “F”.
• Examine each component. S = “acceptable”, F = “defective”.
• Transmit binary digits through a communication channel. S = “digit received correctly”, F = “digit received incorrectly”.

Suppose the trials are independent and each trial has a probability ½ of success. X = # successes observed in the experiment. Possible values:

Outcome | Value of X
(SSS) |
(SSF) |
(SFS) |
… | …
(FFF) |

Random variable:
• Assigns a real number to each outcome in S.
• Denoted by X, Y, Z, etc., and its values by x, y, z, etc.
• Its value depends on chance.
• Its value becomes available once the experiment is completed and the outcome is known.
• Probabilities of its values are determined by the probabilities of the outcomes in the sample space.

Probability distribution of X = A table, formula or graph that summarizes how the total probability of one is distributed over all the possible values of X. In the Bernoulli trials example, what is the distribution of X?

Two types of random variables:

Discrete rv = Takes a finite or countable number of values.
• Number of jobs in a queue
• Number of errors
• Number of successes, etc.

Continuous rv = Takes all values in an interval, i.e., it has an uncountable number of values.
• Execution time
• Waiting time
• Miles per gallon
• Distance traveled, etc.

Discrete random variables. X = A discrete rv.
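One way to answer the question about the distribution of X in the three-trial example is to enumerate the sample space and add up the probabilities of the outcomes that give each value. The sketch below assumes independent trials with success probability ½, as stated in the excerpt; the function name `success_count_distribution` is made up for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def success_count_distribution(n_trials=3, p=Fraction(1, 2)):
    """Distribution of X = number of successes in independent Bernoulli trials."""
    dist = Counter()
    # Each outcome is a tuple such as ('S', 'S', 'F').
    for outcome in product("SF", repeat=n_trials):
        k = outcome.count("S")  # value of X for this outcome
        # Independence: multiply the per-trial probabilities.
        dist[k] += p ** k * (1 - p) ** (n_trials - k)
    return dict(dist)


distribution = success_count_distribution()
# X takes the values 0, 1, 2, 3 with probabilities 1/8, 3/8, 3/8, 1/8.
```

Exact fractions are used instead of floats so that the probabilities can be checked to sum to exactly one.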

The Structure of the 3-Separations of 3-Connected Matroids

THE STRUCTURE OF THE 3-SEPARATIONS OF 3-CONNECTED MATROIDS

JAMES OXLEY, CHARLES SEMPLE, AND GEOFF WHITTLE

Abstract. Tutte defined a k-separation of a matroid M to be a partition (A, B) of the ground set of M such that |A|, |B| ≥ k and r(A) + r(B) − r(M) < k. If, for all m < n, the matroid M has no m-separations, then M is n-connected. Earlier, Whitney showed that (A, B) is a 1-separation of M if and only if A is a union of 2-connected components of M. When M is 2-connected, Cunningham and Edmonds gave a tree decomposition of M that displays all of its 2-separations. When M is 3-connected, this paper describes a tree decomposition of M that displays, up to a certain natural equivalence, all non-trivial 3-separations of M.

1. Introduction

One of Tutte’s many important contributions to matroid theory was the introduction of the general theory of separations and connectivity [10] defined in the abstract. The structure of the 1-separations in a matroid is elementary. They induce a partition of the ground set which in turn induces a decomposition of the matroid into 2-connected components [11]. Cunningham and Edmonds [1] considered the structure of 2-separations in a matroid. They showed that a 2-connected matroid M can be decomposed into a set of 3-connected matroids with the property that M can be built from these 3-connected matroids via a canonical operation known as 2-sum. Moreover, there is a labelled tree that gives a precise description of the way that M is built from the 3-connected pieces.

39 Section J Basic Probability Concepts Before We Can Begin To

Section J Basic Probability Concepts

Before we can begin to discuss inferential statistics, we need to discuss probability. Recall, inferential statistics deals with analyzing a sample from the population to draw conclusions about the population; therefore, since the data came from a sample, we can never be 100% certain the conclusion is correct. Probability is thus an integral part of inferential statistics and needs to be studied before starting the discussion on inferential statistics.

The theoretical probability of an event is the proportion of times the event occurs in the long run, as a probability experiment is repeated over and over again. The Law of Large Numbers says that as a probability experiment is repeated again and again, the proportion of times that a given event occurs will approach its probability.

A sample space contains all possible outcomes of a probability experiment. EX:

An event is an outcome or a collection of outcomes from a sample space. A probability model for a probability experiment consists of a sample space, along with a probability for each event. Note: If A denotes an event, then the probability of the event A is denoted P(A).

Probability models with equally likely outcomes. If a sample space has n equally likely outcomes, and an event A has k outcomes, then

P(A) = (Number of outcomes in A) / (Number of outcomes in the sample space) = k/n

The probability of an event is always between 0 and 1, inclusive.

Important probability characteristics:
1) For any event A, 0 ≤ P(A) ≤ 1.
2) If A cannot occur, then P(A) = 0.
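The rule P(A) = k/n for equally likely outcomes can be sketched in a few lines. The fair six-sided die used below is an illustrative assumption (the excerpt's own example is missing), and `classical_probability` is a made-up helper name.

```python
from fractions import Fraction


def classical_probability(event, sample_space):
    """P(A) = k / n for a sample space of n equally likely outcomes."""
    sample_space = set(sample_space)
    event = set(event)
    if not sample_space:
        raise ValueError("the sample space must contain at least one outcome")
    if not event <= sample_space:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(sample_space))


# Assumed example: one roll of a fair six-sided die.
die = {1, 2, 3, 4, 5, 6}
p_even = classical_probability({2, 4, 6}, die)    # k = 3, n = 6
p_impossible = classical_probability(set(), die)  # an event that cannot occur
```

An event that cannot occur contains no outcomes, so k = 0 and the function returns 0, in line with characteristic 2.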

Matroids with Nine Elements

Matroids with nine elements

Dillon Mayhew, School of Mathematics, Statistics & Computer Science, Victoria University, Wellington, New Zealand, [email protected]
Gordon F. Royle, School of Computer Science & Software Engineering, University of Western Australia, Perth, Australia, [email protected]

February 2, 2008

arXiv:math/0702316v1 [math.CO] 12 Feb 2007

Abstract. We describe the computation of a catalogue containing all matroids with up to nine elements, and present some fundamental data arising from this catalogue. Our computation confirms and extends the results obtained in the 1960s by Blackburn, Crapo & Higgs. The matroids and associated data are stored in an online database, and we give three short examples of the use of this database.

1 Introduction

In the late 1960s, Blackburn, Crapo & Higgs published a technical report describing the results of a computer search for all simple matroids on up to eight elements (although the resulting paper [2] did not appear until 1973). In both the report and the paper they said

“It is unlikely that a complete tabulation of 9-point geometries will be either feasible or desirable, as there will be many thousands of them. The recursion g(9) = g(8)^{3/2} predicts 29260.”

Perhaps this comment dissuaded later researchers in matroid theory, because their catalogue remained unextended for more than 30 years, which surely makes it one of the longest standing computational results in combinatorics. However, in this paper we demonstrate that they were in fact unduly pessimistic, and describe an orderly algorithm (see McKay [7] and Royle [11]) that confirms their computations and extends them by determining the 383172 pairwise non-isomorphic matroids on nine elements (see Table 1).

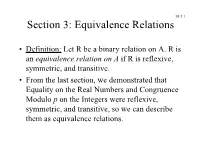

Section 3: Equivalence Relations

10.3.1 Section 3: Equivalence Relations
• Definition: Let R be a binary relation on A. R is an equivalence relation on A if R is reflexive, symmetric, and transitive.
• In the last section, we demonstrated that Equality on the Real Numbers and Congruence Modulo p on the Integers are reflexive, symmetric, and transitive, so we can describe them as equivalence relations.

10.3.2 Examples
• What is the “smallest” equivalence relation on a set A? R = {(a,a) | a ∈ A}, so that n(R) = n(A).
• What is the “largest” equivalence relation on a set A? R = A × A, so that n(R) = [n(A)]^2.

10.3.3 Equivalence Classes
• Definition: If R is an equivalence relation on a set A, and a ∈ A, then the equivalence class of a is defined to be: [a] = {b ∈ A | (a,b) ∈ R}.
• In other words, [a] is the set of all elements which relate to a by R.
• For example: If R is congruence mod 5, then [3] = {..., -12, -7, -2, 3, 8, 13, 18, ...}.
• Another example: If R is equality on Q, then [2/3] = {2/3, 4/6, 6/9, 8/12, 10/15, ...}.
• Observation: If b ∈ [a], then [b] = [a].

10.3.4 A String Example
• Let S = {0,1} and denote L(s) = length of s, for any string s ∈ S*. Consider the relation: R = {(s,t) | s,t ∈ S* and L(s) = L(t)}.
• R is an equivalence relation. Why?
• REF: For all s ∈ S*, L(s) = L(s); SYM: If L(s) = L(t), then L(t) = L(s); TRAN: If L(s) = L(t) and L(t) = L(u), then L(s) = L(u).
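Both examples above, congruence mod 5 and strings of equal length, are relations of the form "a and b have the same value under some function", so their equivalence classes on a finite set can be computed by grouping elements on that value. The sketch below makes that assumption explicit; the helper name `equivalence_classes` and the finite ranges are illustrative choices.

```python
from itertools import product


def equivalence_classes(elements, key):
    """Group elements so that a and b share a class exactly when key(a) == key(b)."""
    classes = {}
    for x in elements:
        classes.setdefault(key(x), set()).add(x)
    return classes


# Congruence mod 5, restricted to the finite window -12..18.
# Python's % returns a value in 0..4 for a positive modulus, even for negatives.
mod5 = equivalence_classes(range(-12, 19), key=lambda n: n % 5)
class_of_3 = mod5[3 % 5]
class_of_8 = mod5[8 % 5]  # 8 is in [3], so this is the same class

# Binary strings of length at most 2, related when they have equal length.
strings = ["".join(bits) for n in range(3) for bits in product("01", repeat=n)]
by_length = equivalence_classes(strings, key=len)
```

Within the chosen window, `class_of_3` is {-12, -7, -2, 3, 8, 13, 18}, matching the slice of [3] listed above, and `class_of_8` is the same set, as the observation "if b ∈ [a], then [b] = [a]" predicts.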

Probability and Counting Rules

blu03683_ch04.qxd 09/12/2005 12:45 PM Page 171

Chapter 4: Probability and Counting Rules

Objectives. After completing this chapter, you should be able to
1 Determine sample spaces and find the probability of an event, using classical probability or empirical probability.
2 Find the probability of compound events, using the addition rules.
3 Find the probability of compound events, using the multiplication rules.
4 Find the conditional probability of an event.
5 Find the total number of outcomes in a sequence of events, using the fundamental counting rule.
6 Find the number of ways that r objects can be selected from n objects, using the permutation rule.
7 Find the number of ways that r objects can be selected from n objects without regard to order, using the combination rule.
8 Find the probability of an event, using the counting rules.

Outline
4–1 Introduction
4–2 Sample Spaces and Probability
4–3 The Addition Rules for Probability
4–4 The Multiplication Rules and Conditional Probability
4–5 Counting Rules
4–6 Probability and Counting Rules
4–7 Summary

Statistics Today: Would You Bet Your Life?

Humans not only bet money when they gamble, but also bet their lives by engaging in unhealthy activities such as smoking, drinking, using drugs, and exceeding the speed limit when driving. Many people don’t care about the risks involved in these activities since they do not understand the concepts of probability.

Parity Systems and the Delta-Matroid Intersection Problem

Parity Systems and the Delta-Matroid Intersection Problem

André Bouchet ∗ and Bill Jackson †

Submitted: February 16, 1998; Accepted: September 3, 1999.

The Electronic Journal of Combinatorics 7 (2000), #R14

∗ Département d’informatique, Université du Maine, 72017 Le Mans Cedex, France. [email protected]
† Department of Mathematical and Computing Sciences, Goldsmiths’ College, London SE14 6NW, England. [email protected]

Abstract. We consider the problem of determining when two delta-matroids on the same ground-set have a common base. Our approach is to adapt the theory of matchings in 2-polymatroids developed by Lovász to a new abstract system, which we call a parity system. Examples of parity systems may be obtained by combining either two delta-matroids, or two orthogonal 2-polymatroids, on the same ground-sets. We show that many of the results of Lovász concerning ‘double flowers’ and ‘projections’ carry over to parity systems.

1 Introduction: the delta-matroid intersection problem

A delta-matroid is a pair (V, ℬ) with a finite set V and a nonempty collection ℬ of subsets of V, called the feasible sets or bases, satisfying the following axiom:

1.1 For B1 and B2 in ℬ and v1 in B1∆B2, there is v2 in B1∆B2 such that B1∆{v1, v2} belongs to ℬ.

Here P∆Q = (P \ Q) ∪ (Q \ P) is the symmetric difference of two subsets P and Q of V. If X is a subset of V and if we set ℬ∆X = {B∆X : B ∈ ℬ}, then we note that (V, ℬ∆X) is a new delta-matroid. The transformation (V, ℬ) → (V, ℬ∆X) is called a twisting.

Topic 1: Basic Probability Definition of Sets

Topic 1: Basic probability
• Review of sets
• Sample space and probability measure
• Probability axioms
• Basic probability laws
• Conditional probability
• Bayes' rules
• Independence
• Counting

ES150 – Harvard SEAS

Definition of Sets
• A set S is a collection of objects, which are the elements of the set.
– The number of elements in a set S can be finite, S = {x1, x2, ..., xn}, or infinite but countable, S = {x1, x2, ...}, or uncountably infinite.
– S can also contain elements with a certain property: S = {x | x satisfies P}.
• S is a subset of T if every element of S also belongs to T: S ⊂ T or T ⊃ S. If S ⊂ T and T ⊂ S then S = T.
• The universal set Ω is the set of all objects within a context. We then consider all sets S ⊂ Ω.

Set Operations and Properties
• Set operations
– Complement A^c: set of all elements not in A
– Union A ∪ B: set of all elements in A or B or both
– Intersection A ∩ B: set of all elements common to both A and B
– Difference A − B: set containing all elements in A but not in B
• Properties of set operations
– Commutative: A ∩ B = B ∩ A and A ∪ B = B ∪ A. (But A − B ≠ B − A.)
– Associative: (A ∩ B) ∩ C = A ∩ (B ∩ C) = A ∩ B ∩ C. (Also for ∪.)
– Distributive: A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C) and A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C).
– De Morgan's laws: (A ∩ B)^c = A^c ∪ B^c and (A ∪ B)^c = A^c ∩ B^c.

Elements of probability theory
A probabilistic model includes
• The sample space Ω of an experiment
– set of all possible outcomes
– finite or infinite
– discrete or continuous
– possibly multi-dimensional
• An event A is a set of outcomes
– a subset of the sample space, A ⊂ Ω.
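The set operations and their properties can be spot-checked on small finite sets with Python's set operators (`|` for union, `&` for intersection, `-` for difference). This is a sketch on one hand-picked example, not a proof; the universal set `omega` and the sets `A`, `B`, `C` are arbitrary assumptions.

```python
# Assumed universal set and three arbitrary subsets of it.
omega = set(range(10))
A = {1, 2, 3, 4}
B = {3, 4, 5, 6}
C = {4, 6, 8}


def complement(s):
    """Complement relative to the universal set omega."""
    return omega - s


properties = {
    "commutative_intersection": (A & B) == (B & A),
    "commutative_union": (A | B) == (B | A),
    "difference_not_commutative": (A - B) != (B - A),
    "associative_intersection": ((A & B) & C) == (A & (B & C)),
    "distributive_1": (A & (B | C)) == ((A & B) | (A & C)),
    "distributive_2": (A | (B & C)) == ((A | B) & (A | C)),
    "de_morgan_1": complement(A & B) == (complement(A) | complement(B)),
    "de_morgan_2": complement(A | B) == (complement(A) & complement(B)),
}
```

Every entry of `properties` evaluates to `True` for these sets; for example, A − B is {1, 2} while B − A is {5, 6}.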

Computing Partitions Within SQL Queries: a Dead End? Frédéric Dumonceaux, Guillaume Raschia, Marc Gelgon

Computing Partitions within SQL Queries: A Dead End?

Frédéric Dumonceaux, Guillaume Raschia, Marc Gelgon

To cite this version: Frédéric Dumonceaux, Guillaume Raschia, Marc Gelgon. Computing Partitions within SQL Queries: A Dead End?. 2013. hal-00768156

HAL Id: hal-00768156
https://hal.archives-ouvertes.fr/hal-00768156
Submitted on 20 Dec 2012

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

December 20, 2012

Abstract. The primary goal of relational databases is to provide efficient query processing on sets of tuples and thereafter, query evaluation and optimization strategies are a key issue in database implementation. Producing universally fast execution plans remains a challenging task since the underlying relational model has a significant impact on algebraic definition of the operators, thereby on their implementation in terms of space and time complexity. At least, it should prevent a quadratic behavior in order to consider scaling-up towards the processing of large datasets. The main purpose of this paper is to show that there is no trivial relational modeling for managing collections of partitions (i.e.

Probability Theory Review 1 Basic Notions: Sample Space, Events

Fall 2018 Probability Theory Review, Aleksandar Nikolov

1 Basic Notions: Sample Space, Events

A probability space (Ω, P) consists of a finite or countable set Ω called the sample space, and the probability function P : Ω → ℝ such that for all ω ∈ Ω, P(ω) ≥ 0 and Σ_{ω∈Ω} P(ω) = 1. We call an element ω ∈ Ω a sample point, or outcome, or simple event. You should think of a sample space as modeling some random “experiment”: Ω contains all possible outcomes of the experiment, and P(ω) gives the probability that we are going to get outcome ω. Note that we never speak of probabilities except in relation to a sample space. At this point we give a few examples:

1. Consider a random experiment in which we toss a single fair coin. The two possible outcomes are that the coin comes up heads (H) or tails (T), and each of these outcomes is equally likely. Then the probability space is (Ω, P), where Ω = {H, T} and P(H) = P(T) = 1/2.

2. Consider a random experiment in which we toss a single coin, but the coin lands heads with probability 2/3. Then, once again the sample space is Ω = {H, T} but the probability function is different: P(H) = 2/3, P(T) = 1/3.

3. Consider a random experiment in which we toss a fair coin three times, and each toss is independent of the others. The coin can come up heads all three times, or come up heads twice and then tails, etc.
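The three coin examples can be written down as finite probability spaces and checked against the two defining conditions, P(ω) ≥ 0 and Σ P(ω) = 1. The sketch below represents P as a dictionary from outcomes to exact fractions; the helper names are made up, and the construction of the three-toss space assumes independence means multiplying per-toss probabilities.

```python
from fractions import Fraction
from itertools import product


def is_probability_function(P):
    """Check P(w) >= 0 for every outcome and that the values sum to 1."""
    return all(p >= 0 for p in P.values()) and sum(P.values()) == 1


def independent_tosses(single_toss, n):
    """Probability space for n independent tosses of the same coin."""
    space = {}
    for outcome in product(single_toss, repeat=n):
        prob = Fraction(1)
        for face in outcome:
            prob *= single_toss[face]  # independence: multiply per-toss values
        space["".join(outcome)] = prob
    return space


fair_coin = {"H": Fraction(1, 2), "T": Fraction(1, 2)}    # example 1
biased_coin = {"H": Fraction(2, 3), "T": Fraction(1, 3)}  # example 2
three_fair_tosses = independent_tosses(fair_coin, 3)      # example 3
```

For three fair tosses the sample space has eight outcomes (HHH, HHT, and so on), each with probability 1/8.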