Deterministic Versus Indeterministic Descriptions: Not That Different After All?

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

An Introduction to Mathematical Modelling

An Introduction to Mathematical Modelling. Glenn Marion, Bioinformatics and Statistics Scotland. Given 2008 by Daniel Lawson and Glenn Marion.

Contents
1 Introduction
1.1 What is mathematical modelling?
1.2 What objectives can modelling achieve?
1.3 Classifications of models
1.4 Stages of modelling
2 Building models
2.1 Getting started
2.2 Systems analysis
2.2.1 Making assumptions
2.2.2 Flow diagrams
2.3 Choosing mathematical equations
2.3.1 Equations from the literature
2.3.2 Analogies from physics
2.3.3 Data exploration
2.4 Solving equations
2.4.1 Analytically
2.4.2 Numerically
3 Studying models
3.1 Dimensionless form
3.2 Asymptotic behaviour
3.3 Sensitivity analysis
3.4 Modelling model output
4 Testing models
4.1 Testing the assumptions
4.2 Model structure
4.3 Prediction of previously unused data
4.3.1 Reasons for prediction errors
4.4 Estimating model parameters
4.5 Comparing two models for the same system

Random Variable = a Real-Valued Function of an Outcome X = F(Outcome)

Random Variables (Chapter 2)

Random variable = a real-valued function of an outcome: X = f(outcome). Domain of X: the sample space of the experiment.

Ex: Consider an experiment consisting of 3 Bernoulli trials. Bernoulli trial = only two possible outcomes, success (S) or failure (F).
• "IF" statement: if … then "S" else "F".
• Examine each component. S = "acceptable", F = "defective".
• Transmit binary digits through a communication channel. S = "digit received correctly", F = "digit received incorrectly".

Suppose the trials are independent and each trial has a probability ½ of success. X = number of successes observed in the experiment. Possible values:

Outcome   Value of X
(SSS)
(SSF)
(SFS)
…         …
(FFF)

Random variable:
• Assigns a real number to each outcome in S.
• Denoted by X, Y, Z, etc., and its values by x, y, z, etc.
• Its value depends on chance.
• Its value becomes available once the experiment is completed and the outcome is known.
• Probabilities of its values are determined by the probabilities of the outcomes in the sample space.

Probability distribution of X = a table, formula or graph that summarizes how the total probability of one is distributed over all the possible values of X. In the Bernoulli trials example, what is the distribution of X?

Two types of random variables:

Discrete rv = takes a finite or countable number of values.
• Number of jobs in a queue
• Number of errors
• Number of successes, etc.

Continuous rv = takes all values in an interval, i.e., it has an uncountable number of values.
• Execution time
• Waiting time
• Miles per gallon
• Distance traveled, etc.

Discrete random variables: X = a discrete rv.
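The question posed in this excerpt (the distribution of X for 3 independent Bernoulli trials with success probability ½) can be worked out by enumerating the sample space. The following is a minimal Python sketch, not part of the excerpted notes; the function name `distribution_of_successes` and its parameters are hypothetical names chosen for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def distribution_of_successes(n_trials=3, p=Fraction(1, 2)):
    """Enumerate all S/F outcomes and accumulate P(X = k).

    Assumes independent trials, each with success probability p,
    as stated in the excerpt. Names here are illustrative only.
    """
    dist = Counter()
    for outcome in product("SF", repeat=n_trials):
        k = outcome.count("S")  # value of X for this outcome
        # Independence: multiply the per-trial probabilities.
        dist[k] += p**k * (1 - p) ** (n_trials - k)
    return dict(dist)


dist = distribution_of_successes()
for k in sorted(dist):
    print(f"P(X = {k}) = {dist[k]}")
```

Using exact fractions rather than floats keeps the check that the probabilities sum to one free of rounding error.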

Section J Basic Probability Concepts Before We Can Begin To

Section J: Basic Probability Concepts

Before we can begin to discuss inferential statistics, we need to discuss probability. Recall, inferential statistics deals with analyzing a sample from the population to draw conclusions about the population; therefore, since the data came from a sample, we can never be 100% certain the conclusion is correct. Probability is thus an integral part of inferential statistics and needs to be studied before starting the discussion on inferential statistics.

The theoretical probability of an event is the proportion of times the event occurs in the long run, as a probability experiment is repeated over and over again. The Law of Large Numbers says that as a probability experiment is repeated again and again, the proportion of times that a given event occurs will approach its probability.

A sample space contains all possible outcomes of a probability experiment. EX:

An event is an outcome or a collection of outcomes from a sample space. A probability model for a probability experiment consists of a sample space, along with a probability for each event. Note: If A denotes an event, then the probability of the event A is denoted P(A).

Probability models with equally likely outcomes: if a sample space has n equally likely outcomes, and an event A has k outcomes, then

P(A) = (Number of outcomes in A) / (Number of outcomes in the sample space) = k/n

The probability of an event is always between 0 and 1, inclusive.

Important probability characteristics:
1) For any event A, 0 ≤ P(A) ≤ 1.
2) If A cannot occur, then P(A) = 0.

Clustering and Classification of Multivariate Stochastic Time Series

Clemson University TigerPrints, All Dissertations, 5-2012. Clustering and Classification of Multivariate Stochastic Time Series in the Time and Frequency Domains. Ryan Schkoda, Clemson University.

Recommended Citation: Schkoda, Ryan, "Clustering and Classification of Multivariate Stochastic Time Series in the Time and Frequency Domains" (2012). All Dissertations. 907. https://tigerprints.clemson.edu/all_dissertations/907

A Dissertation Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy, Mechanical Engineering, by Ryan F. Schkoda, May 2012. Accepted by: Dr. John Wagner, Committee Chair; Dr. Robert Lund; Dr. Ardalan Vahidi; Dr. Darren Dawson.

Abstract: The dissertation primarily investigates the characterization and discrimination of stochastic time series with an application to pattern recognition and fault detection. These techniques supplement traditional methodologies that make overly restrictive assumptions about the nature of a signal by accommodating stochastic behavior. The assumption that the signal under investigation is either deterministic or a deterministic signal polluted with white noise excludes an entire class of signals: stochastic time series. The research is concerned with this class of signals almost exclusively. The investigation considers signals in both the time and the frequency domains and makes use of both model-based and model-free techniques.

Probability and Counting Rules

Chapter 4: Probability and Counting Rules

Objectives
After completing this chapter, you should be able to
1 Determine sample spaces and find the probability of an event, using classical probability or empirical probability.
2 Find the probability of compound events, using the addition rules.
3 Find the probability of compound events, using the multiplication rules.
4 Find the conditional probability of an event.
5 Find the total number of outcomes in a sequence of events, using the fundamental counting rule.
6 Find the number of ways that r objects can be selected from n objects, using the permutation rule.
7 Find the number of ways that r objects can be selected from n objects without regard to order, using the combination rule.
8 Find the probability of an event, using the counting rules.

Outline
4–1 Introduction
4–2 Sample Spaces and Probability
4–3 The Addition Rules for Probability
4–4 The Multiplication Rules and Conditional Probability
4–5 Counting Rules
4–6 Probability and Counting Rules
4–7 Summary

Statistics Today: Would You Bet Your Life?
Humans not only bet money when they gamble, but also bet their lives by engaging in unhealthy activities such as smoking, drinking, using drugs, and exceeding the speed limit when driving. Many people don't care about the risks involved in these activities since they do not understand the concepts of probability.

Random Matrix Eigenvalue Problems in Structural Dynamics: an Iterative Approach S

Mechanical Systems and Signal Processing 164 (2022) 108260

Random matrix eigenvalue problems in structural dynamics: An iterative approach

S. Adhikari a,*, S. Chakraborty b
a The Future Manufacturing Research Institute, Swansea University, Swansea SA1 8EN, UK
b Department of Applied Mechanics, Indian Institute of Technology Delhi, India

Communicated by J.E. Mottershead

Keywords: Random eigenvalue problem; Iterative methods; Galerkin projection; Statistical distributions; Stochastic systems

Abstract: Uncertainties need to be taken into account in the dynamic analysis of complex structures. This is because in some cases uncertainties can have a significant impact on the dynamic response and ignoring it can lead to unsafe design. For complex systems with uncertainties, the dynamic response is characterised by the eigenvalues and eigenvectors of the underlying generalised matrix eigenvalue problem. This paper aims at developing computationally efficient methods for random eigenvalue problems arising in the dynamics of multi-degree-of-freedom systems. There are efficient methods available in the literature for obtaining eigenvalues of random dynamical systems. However, the computation of eigenvectors remains challenging due to the presence of a large number of random variables within a single eigenvector. To address this problem, we project the random eigenvectors on the basis spanned by the underlying deterministic eigenvectors and apply a Galerkin formulation to obtain the unknown coefficients. The overall approach is simplified using an iterative technique. Two numerical examples are provided to illustrate the proposed method. Full-scale Monte Carlo simulations are used to validate the new results.

Topic 1: Basic Probability Definition of Sets

Topic 1: Basic probability (ES150, Harvard SEAS)
• Review of sets
• Sample space and probability measure
• Probability axioms
• Basic probability laws
• Conditional probability
• Bayes' rules
• Independence
• Counting

Definition of Sets
• A set S is a collection of objects, which are the elements of the set.
  – The number of elements in a set S can be finite, S = {x1, x2, …, xn}, or infinite but countable, S = {x1, x2, …}, or uncountably infinite.
  – S can also contain elements with a certain property: S = {x | x satisfies P}.
• S is a subset of T if every element of S also belongs to T: S ⊂ T or T ⊃ S. If S ⊂ T and T ⊂ S then S = T.
• The universal set Ω is the set of all objects within a context. We then consider all sets S ⊂ Ω.

Set Operations and Properties
• Set operations
  – Complement A^c: set of all elements not in A
  – Union A ∪ B: set of all elements in A or B or both
  – Intersection A ∩ B: set of all elements common in both A and B
  – Difference A − B: set containing all elements in A but not in B
• Properties of set operations
  – Commutative: A ∩ B = B ∩ A and A ∪ B = B ∪ A. (But A − B ≠ B − A.)
  – Associative: (A ∩ B) ∩ C = A ∩ (B ∩ C) = A ∩ B ∩ C. (Also for ∪.)
  – Distributive: A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C) and A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C)
  – DeMorgan's laws: (A ∩ B)^c = A^c ∪ B^c and (A ∪ B)^c = A^c ∩ B^c

Elements of probability theory
A probabilistic model includes
• The sample space Ω of an experiment
  – set of all possible outcomes
  – finite or infinite
  – discrete or continuous
  – possibly multi-dimensional
• An event A is a set of outcomes
  – a subset of the sample space, A ⊂ Ω.
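The set laws listed in this excerpt can be spot-checked on small finite sets. The following is a minimal Python sketch, not taken from the excerpted slides; the universe and the sets `A`, `B`, `C` are arbitrary illustrative choices, and `check_set_laws` is a hypothetical helper name. A passing check on one example illustrates the laws but does not prove them.

```python
def check_set_laws(A, B, C, universe):
    """Return True if the distributive and DeMorgan laws hold for A, B, C."""

    def complement(s):
        # Complement is taken relative to the chosen universal set.
        return universe - s

    distributive = (
        A & (B | C) == (A & B) | (A & C)
        and A | (B & C) == (A | B) & (A | C)
    )
    de_morgan = (
        complement(A & B) == complement(A) | complement(B)
        and complement(A | B) == complement(A) & complement(B)
    )
    return distributive and de_morgan


universe = set(range(10))          # illustrative universal set
A, B, C = {1, 2, 3, 4}, {3, 4, 5}, {4, 6}

print(check_set_laws(A, B, C, universe))
print(A - B, B - A)                # set difference is not commutative
```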

Arxiv:2103.07954V1 [Math.PR] 14 Mar 2021 Suppose We Ask a Randomly Chosen Person Two Questions

Contents, Contexts, and Basics of Contextuality. Ehtibar N. Dzhafarov, Purdue University.

Abstract: This is a non-technical introduction into theory of contextuality. More precisely, it presents the basics of a theory of contextuality called Contextuality-by-Default (CbD). One of the main tenets of CbD is that the identity of a random variable is determined not only by its content (that which is measured or responded to) but also by contexts, systematically recorded conditions under which the variable is observed; and the variables in different contexts possess no joint distributions. I explain why this principle has no paradoxical consequences, and why it does not support the holistic "everything depends on everything else" view. Contextuality is defined as the difference between two differences: (1) the difference between content-sharing random variables when taken in isolation, and (2) the difference between the same random variables when taken within their contexts. Contextuality thus defined is a special form of context-dependence rather than a synonym for the latter. The theory applies to any empirical situation describable in terms of random variables. Deterministic situations are trivially noncontextual in CbD, but some of them can be described by systems of epistemic random variables, in which random variability is replaced with epistemic uncertainty. Mathematically, such systems are treated as if they were ordinary systems of random variables.

1 Contents, contexts, and random variables

The word contextuality is used widely, usually as a synonym of context-dependence. Here, however, contextuality is taken to mean a special form of context-dependence, as explained below. Historically, this notion is derived from two independent lines of research: in quantum physics, from studies of existence or nonexistence of the so-called hidden variable models with context-independent mapping [1–10], and in psychology, from studies of the so-called selective influences [11–18].

Probability Theory Review 1 Basic Notions: Sample Space, Events

Fall 2018, Probability Theory Review, Aleksandar Nikolov

1 Basic Notions: Sample Space, Events

A probability space (Ω, P) consists of a finite or countable set Ω called the sample space, and the probability function P: Ω → ℝ such that for all ω ∈ Ω, P(ω) ≥ 0 and Σ_{ω∈Ω} P(ω) = 1. We call an element ω ∈ Ω a sample point, or outcome, or simple event. You should think of a sample space as modeling some random "experiment": Ω contains all possible outcomes of the experiment, and P(ω) gives the probability that we are going to get outcome ω. Note that we never speak of probabilities except in relation to a sample space. At this point we give a few examples:

1. Consider a random experiment in which we toss a single fair coin. The two possible outcomes are that the coin comes up heads (H) or tails (T), and each of these outcomes is equally likely. Then the probability space is (Ω, P), where Ω = {H, T} and P(H) = P(T) = 1/2.
2. Consider a random experiment in which we toss a single coin, but the coin lands heads with probability 2/3. Then, once again the sample space is Ω = {H, T} but the probability function is different: P(H) = 2/3, P(T) = 1/3.
3. Consider a random experiment in which we toss a fair coin three times, and each toss is independent of the others. The coin can come up heads all three times, or come up heads twice and then tails, etc.

Sample Space, Events and Probability

Sample Space, Events and Probability

Sample Space and Events

There are lots of phenomena in nature, like tossing a coin or tossing a die, whose outcomes cannot be predicted with certainty in advance, but the set of all the possible outcomes is known. These are what we call random phenomena or random experiments. Probability theory is concerned with such random phenomena or random experiments. Consider a random experiment. The set of all the possible outcomes is called the sample space of the experiment and is usually denoted by S. Any subset E of the sample space S is called an event. Here are some examples.

Example 1: Tossing a coin. The sample space is S = {H, T}. E = {H} is an event.
Example 2: Tossing a die. The sample space is S = {1, 2, 3, 4, 5, 6}. E = {2, 4, 6} is an event, which can be described in words as "the number is even".
Example 3: Tossing a coin twice. The sample space is S = {HH, HT, TH, TT}. E = {HH, HT} is an event, which can be described in words as "the first toss results in Heads".
Example 4: Tossing a die twice. The sample space is S = {(i, j): i, j = 1, 2, …, 6}, which contains 36 elements. "The sum of the results of the two tosses is equal to 10" is an event.
Example 5: Choosing a point from the interval (0, 1). The sample space is S = (0, 1). E = (1/3, 1/2) is an event.
Example 6: Measuring the lifetime of a lightbulb.
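Example 4 in this excerpt (two tosses of a die, with the event that the sum equals 10) can be enumerated directly. The following is a minimal Python sketch, not part of the excerpted text; it additionally assumes a fair die, so that all 36 outcomes are equally likely, which the excerpt does not state. The variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Sample space S = {(i, j): i, j = 1, ..., 6} for two tosses of a die.
sample_space = list(product(range(1, 7), repeat=2))

# Event E: the sum of the two results equals 10.
event = [(i, j) for (i, j) in sample_space if i + j == 10]

# Assumption (not in the excerpt): the die is fair, so every outcome is
# equally likely and P(E) = |E| / |S|.
probability = Fraction(len(event), len(sample_space))

print(len(sample_space))  # number of outcomes in S
print(event)              # outcomes that make up E
print(probability)        # P(E) under the fair-die assumption
```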

Notes for Math 450 Lecture Notes 2

Notes for Math 450, Lecture Notes 2. Renato Feres.

1 Probability Spaces

We first explain the basic concept of a probability space, (Ω, F, P). This may be interpreted as an experiment with random outcomes. The set Ω is the collection of all possible outcomes of the experiment; F is a family of subsets of Ω called events; and P is a function that associates to an event its probability. These objects must satisfy certain logical requirements, which are detailed below. A random variable is a function X: Ω → S of the output of the random system. We explore some of the general implications of these abstract concepts.

1.1 Events and the basic set operations on them

Any situation where the outcome is regarded as random will be referred to as an experiment, and the set of all possible outcomes of the experiment comprises its sample space, denoted by S or, at times, Ω. Each possible outcome of the experiment corresponds to a single element of S. For example, rolling a die is an experiment whose sample space is the finite set {1, 2, 3, 4, 5, 6}. The sample space for the experiment of tossing three (distinguishable) coins is {HHH, HHT, HTH, HTT, THH, THT, TTH, TTT}, where HTH indicates the ordered triple (H, T, H) in the product set {H, T}³. The delay in departure of a flight scheduled for 10:00 AM every day can be regarded as the outcome of an experiment in this abstract sense, where the sample space now may be taken to be the interval [0, ∞) of the real line.

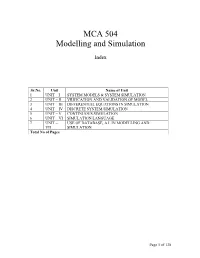

MCA 504 Modelling and Simulation

MCA 504 Modelling and Simulation

Index

| Sr. No. | Unit | Name of Unit |
|---|---|---|
| 1 | UNIT – I | SYSTEM MODELS & SYSTEM SIMULATION |
| 2 | UNIT – II | VERIFICATION AND VALIDATION OF MODEL |
| 3 | UNIT – III | DIFFERENTIAL EQUATIONS IN SIMULATION |
| 4 | UNIT – IV | DISCRETE SYSTEM SIMULATION |
| 5 | UNIT – V | CONTINUOUS SIMULATION |
| 6 | UNIT – VI | SIMULATION LANGUAGE |
| 7 | UNIT – VII | USE OF DATABASE, A.I. IN MODELLING AND SIMULATION |

Subject: System Simulation and Modeling. Author: Jagat Kumar. Paper Code: MCA 504. Vetter: Dr. Pradeep Bhatia. Lesson: System Models and System Simulation. Lesson No.: 01.

Structure
1.0 Objective
1.1 Introduction
1.1.1 Formal Definitions
1.1.2 Brief History of Simulation
1.1.3 Application Area of Simulation
1.1.4 Advantages and Disadvantages of Simulation
1.1.5 Difficulties of Simulation
1.1.6 When to use Simulation?
1.2 Modeling Concepts
1.2.1 System, Model and Events
1.2.2 System State Variables
1.2.2.1 Entities and Attributes
1.2.2.2 Resources
1.2.2.3 List Processing
1.2.2.4 Activities and Delays
1.2.2.5
1.2.3 Model Classifications
1.2.3.1 Discrete-Event Simulation Model
1.2.3.2 Stochastic and Deterministic Systems
1.2.3.3 Static and Dynamic Simulation
1.2.3.4 Discrete vs Continuous Systems
1.2.3.5 An Example
1.3 Computer Workload and Preparation of its Models
1.3.1 Steps of the Modeling Process
1.4 Summary
1.5 Key words
1.6 Self Assessment Questions
1.7 References/Suggested Reading

1.0 Objective

The main objective of this module is to gain knowledge about a system and its behavior, so that a person can transform the physical behavior of a system into a mathematical model, which can in turn be transformed into an efficient algorithm for simulation purposes.