State of the Art Control of Atari Games Using Shallow Reinforcement Learning

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Retro Gaming

ATARI - RETRO FLASHBACK 8 GOLD ACTIVISION CONSOLE – 130 GAMES

Bring your best memories back to life! With 130 video game classics, including 39 Activision games, this Atari Flashback 8 Gold Activision Edition will take you back to the good old days of retro gaming. Using the wireless controllers or your original classic Atari controllers, just plug the console into your television and you're ready for action!

FEATURES:
• 130 classic games, including the greatest hits of the Atari 2600 console and 39 Activision titles
• Plug & Play
• Includes two 2.4G wireless controllers
• Save, Resume, and Rewind functions
• 720p HD output
• HDMI port
• FULL HD screen

Includes the cult classics:
• Space Invaders
• Centipede
• Millipede
• Pitfall!
• River Raid
• Kaboom!
• Spider Fighter

LIST OF INCLUDED GAMES / LIST OF ACTIVISION GAMES (REF: JVCRETR0124)
Adventure Black Jack Football Radar Lock Stellar Track™ Video Chess Beamrider Laser Blast Asteroids® Bowling Frog Pond Realsports® Baseball Street Racer Video Pinball Boxing Megamania Centipede® Breakout® Frogs and Flies Realsports® Basketball Submarine Commander Warlords® Bridge Oink! Kaboom! Canyon Bomber™ Fun with Numbers Realsports® Soccer Super Baseball Yars’ Return Checkers Pitfall! Missile Command® Championship Golf Realsports® Volleyball Super Breakout® Chopper Command Plaque Attack Pitfall! Soccer™ Gravitar® Return to Haunted Save Mary Cosmic Commuter Pressure Cooker River Raid Circus Atari™ Hangman House Super Challenge™ Football Crackpots Private Eye Yars’ Revenge® Combat®

Electronic Games (Formerly Arcade Express)

Electronic Games (Formerly Arcade Express)
THE BI-WEEKLY ELECTRONIC GAMES NEWSLETTER
VOLUME TWO, NUMBER NINE, DECEMBER 4, 1983, SINGLE ISSUE PRICE $2.00

ODYSSEY EXITS VIDEOGAMING
The company that created the home arcading field back in 1970 has decided to pull in its horns while it takes its future marketing plans back to the drawing board. The Odyssey division of North American Philips has announced that it will no longer produce hardware for its Odyssey standard programmable videogame system. The publisher is expected to play out the string by marketing the already completed "War Room" and "Power Lords" cartridges for ColecoVision this Christmas season, but after that, it's plug-pulling time. Is this the end of Odyssey as a force in the gaming world? Only temporarily. The publisher plans to keep a low profile for a little while until its R&D department pushes forward with "Operation Leapfrog", the creation of N.A.P.'s first true computer.

20TH CENTURY FOX THROWS IN THE TOWEL
20th Century Fox Videogames has left the game business, saying, "Enough is enough!" According to Fox spokesmen, the canny company didn't actually experience any heavy losses. However, the foxy powers-that-be decided that the future didn't look too bright for their brand of video games, and that this is a good time to get out. The company, whose hit games included "M*A*S*H", "MegaForce", "Flash Gordon" and "Alien", reportedly does not have a large inventory of stock on hand, and expects to experience no large financial losses as it exits the gaming business.

A Page 1 CART TITLE MANUFACTURER LABEL RARITY Atari Text

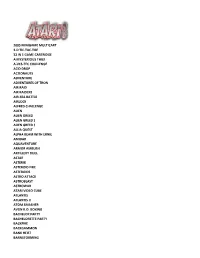

A

| Cart Title | Manufacturer | Label | Rarity |
|---|---|---|---|
| 3D Tic-Tac Toe | Atari | Text | 2 |
| 3D Tic-Tac Toe | Sears | Text | 3 |
| Action Pak | Atari | | 6 |
| Adventure | Sears | Text | 3 |
| Adventure | Sears | Picture | 4 |
| Adventures of Tron | INTV | White | 3 |
| Adventures of Tron | M Network | Black | 3 |
| Air Raid | MenAvision | | 10 |
| Air Raiders | INTV | White | 3 |
| Air Raiders | M Network | Black | 2 |
| Air Wolf | Unknown Taiwan | Cooper | ? |
| Air-Sea Battle | Atari | Text #02 | 3 |
| Air-Sea Battle | Atari | Picture | 2 |
| Airlock | Data Age | Standard | 3 |
| Alien | 20th Century Fox | Standard | 4 |
| Alien | Xante | | 10 |
| Alpha Beam with Ernie | Atari | Children's | 4 |
| Arcade Golf | Sears | Text | 3 |
| Arcade Pinball | Sears | Text | 3 |
| Arcade Pinball | Sears | Picture | 3 |
| Armor Ambush | INTV | White | 4 |
| Armor Ambush | M Network | Black | 3 |
| Artillery Duel | Xonox | Standard | 5 |
| Artillery Duel/Chuck Norris Superkicks | Xonox | Double Ender | 5 |
| Artillery Duel/Ghost Master | Xonox | Double Ender | 5 |
| Artillery Duel/Spike's Peak | Xonox | Double Ender | 6 |
| Assault | Bomb | Standard | 9 |
| Asterix | Atari | | 10 |
| Asteroids | Atari | Silver | 3 |
| Asteroids | Sears | Text “66 Games” | 2 |
| Asteroids | Sears | Picture | 2 |
| Astro War | Unknown Taiwan | Cooper | ? |
| Astroblast | Telegames | Silver | 3 |
| Atari Video Cube | Atari | Silver | 7 |
| Atlantis | Imagic | Text | 2 |
| Atlantis | Imagic | Picture – Day Scene | 2 |
| Atlantis | Imagic | Blue | 4 |
| Atlantis II | Imagic | Picture – Night Scene | 10 |

B

| Cart Title | Manufacturer | Label | Rarity |
|---|---|---|---|
| Bachelor Party | Mystique | Standard | 5 |
| Bachelor Party/Gigolo | Playaround | Standard | 5 |
| Bachelorette Party/Burning Desire | Playaround | Standard | 5 |
| Back to School Pak | Atari | | 6 |
| Backgammon | Atari | Text | 2 |
| Backgammon | Sears | Text | 3 |
| Bank Heist | 20th Century Fox | Standard | 5 |
| Barnstorming | Activision | Standard | 2 |
| Baseball | Sears | Text 49-75108 | |

80 PLUS 4983 Horizontal Game List

4 player + 4983 Horizontal

| Game | System | Players |
|---|---|---|
| 10-Yard Fight (Japan) | advmame | 2P |
| 10-Yard Fight (USA, Europe) | nintendo | |
| 1941 - Counter Attack (Japan) | supergrafx | |
| 1941: Counter Attack (World 900227) | mame172 | 2P sim |
| 1942 (Japan, USA) | nintendo | |
| 1942 (set 1) | advmame | 2P alt |
| 1943 Kai (Japan) | pcengine | |
| 1943 Kai: Midway Kaisen (Japan) | mame172 | 2P sim |
| 1943: The Battle of Midway (Euro) | mame172 | 2P sim |
| 1943 - The Battle of Midway (USA) | nintendo | |
| 1944: The Loop Master (USA 000620) | mame172 | 2P sim |
| 1945k III | advmame | 2P sim |
| 19XX: The War Against Destiny (USA 951207) | mame172 | 2P sim |
| 2010 - The Graphic Action Game (USA, Europe) | colecovision | |
| 2020 Super Baseball (set 1) | fba | 2P sim |
| 2 On 2 Open Ice Challenge (rev 1.21) | mame078 | 4P sim |
| 36 Great Holes Starring Fred Couples (JU) (32X) [!] | sega32x | |
| 3 Count Bout / Fire Suplex (NGM-043)(NGH-043) | fba | 2P sim |
| 3D Crazy Coaster | vectrex | |
| 3D Mine Storm | vectrex | |
| 3D Narrow Escape | vectrex | |
| 3-D WorldRunner (USA) | nintendo | |
| 3 Ninjas Kick Back (U) [!] | megadrive | |
| 3 Ninjas Kick Back (U) | supernintendo | |
| 4-D Warriors | advmame | 2P alt |
| 4 Fun in 1 | advmame | 2P alt |
| 4 Player Bowling Alley | advmame | 4P alt |
| 600 | advmame | 2P alt |
| 64th. Street - A Detective Story (World) | advmame | 2P sim |
| 688 Attack Sub (UE) [!] | megadrive | |
| 720 Degrees (rev 4) | advmame | 2P alt |
| 720 Degrees (USA) | nintendo | |
| 7th Saga | supernintendo | |
| 800 Fathoms | mame172 | 2P alt |
| '88 Games | mame172 | 4P alt / 2P sim |
| 8 Eyes (USA) | nintendo | |
| '99: The Last War | advmame | 2P alt |
| AAAHH!!! Real Monsters (E) [!] | supernintendo | |
| AAAHH!!! Real Monsters (UE) [!] | megadrive | |
| Abadox - The Deadly Inner War (USA) | nintendo | |
| A.B. | | |

ColecoVision

ColecoVision
Last Updated on September 30, 2021

| Title | Publisher | Qty | Box | Man | Comments |
|---|---|---|---|---|---|
| 1942 | Team Pixelboy | | | | |
| 2010: The Graphic Action Game | Coleco | | | | |
| A.E. | CollectorVision | | | | |
| Activision Decathlon, The | Activision | | | | |
| Alcazar: The Forgotten Fortress | Telegames | | | | |
| Alphabet Zoo | Spinnaker | | | | |
| Amazing Bumpman | Telegames | | | | |
| Antarctic Adventure | Coleco | | | | |
| Aquattack | Interphase | | | | |
| Armageddon | CollectorVision | | | | |
| Artillery Duel | Xonox | | | | |
| Artillery Duel / Chuck Norris Superkicks | Xonox | | | | |
| Astro Invader | AtariAge | | | | |
| B.C.'s Quest for Tires | Sierra | | | | |
| B.C.'s Quest for Tires: White Label | Sierra | | | | |
| B.C.'s Quest for Tires: Upside-Down Label | Sierra | | | | |
| B.C.'s Quest for Tires II: Grog's Revenge | Coleco | | | | |
| Bank Panic | Team Pixelboy | | | | |
| Bankruptcy Builder | Team Pixelboy | | | | |
| Beamrider | Activision | | | | |
| Blockade Runner | Interphase | | | | |
| Bomb 'N Blast | CollectorVision | | | | |
| Bomber King | Team Pixelboy | | | | |
| Bosconian | Opcode Games | | | | |
| Boulder Dash | Telegames | | | | |
| Brain Strainers | Coleco | | | | |
| Buck Rogers Super Game | Team Pixelboy | | | | |
| Buck Rogers: Planet of Zoom | Coleco | | | | |
| Bump 'n' Jump | Coleco | | | | |
| Burgertime | Coleco | | | | |
| Burgertime: Telegames Rerelease | Telegames | | | | |
| Burn Rubber | CollectorVision | | | | |
| Cabbage Patch Kids: Picture Show | Coleco | | | | |
| Cabbage Patch Kids: Adventures in the Park | Coleco | | | | |
| Campaign '84 | Sunrise | | | | |
| Carnival | Coleco | | | | |
| Cat Scheduled Oil Sampling Game, The | Caterpillar | | | | |
| Centipede | Atarisoft | | | | |
| Chack'n Pop | CollectorVision | | | | |
| Children of the Night | Team Pixelboy | | | | |
| Choplifter | Coleco | | | | |
| Choplifter: Telegames Rerelease | Telegames | | | | |
| Chuck Norris Superkicks | Xonox | | | | |
| Circus Charlie | Team Pixelboy | | | | |
| Congo Bongo | Coleco | | | | |
| Cosmic Avenger | Coleco | | | | |
| Cosmic Crisis | Telegames | | | | |
| Cosmo Fighter 2 | Red Bullet Software | | | | |
| Cosmo Fighter 3 | Red Bullet Software | | | | |
| CVDRUM | E-Mancanics | | | | |
| Dam Busters | Coleco | | | | |
| Dance Fantasy | Fisher Price | | | | |
| Defender | Atarisoft | | | | |
| Deflektor Kollection | AtariAge | | | | |

This checklist is generated using RF Generation's Database. This checklist is updated daily, and its completeness is dependent on the completeness of the database.

What Games Are on a 2600 Console with 160 Games Built-In? Author: Graham.J.Percy

******* WHAT GAMES ARE ON A 2600 CONSOLE WITH 160 GAMES BUILT-IN? ******
AUTHOR: Graham.J.Percy
EMAIL: [email protected]
PHONE: +61 (0) 432 606 491
FAQ Version 1.0a, 10th June, 2014.

Copyright (c) 2014 Graham.J.Percy. All rights reserved. This document may be reproduced, in whole or in part, provided the copyright notice remains intact and no fee is charged. The data contained herein is provided for informational purposes only. No warranty is made with regards to the accuracy of the information.

This is the best info I can give on the ATARI console with 160 GAMES BUILT IN! If you see any errors or omissions or wish to comment, please email me at the above address.

WHERE PURCHASED:-
This unit was purchased second-hand at a flea market around the early 90s.

DESCRIPTION:-
The 160 game unit is based on the later 4-switch variant of the original Atari 2600 VCS with "blackout" treatment. The Atari logo does not appear anywhere on the unit. Although still identified as "channel 2-3", the channel select switch has been replaced with a 3-position game-group select switch. As well as being soldered directly to the motherboard, the game select switch is connected to the EPROM pins and motherboard by a number of patch wires. The following words are printed in the lower right corner of the switch/socket fascia:-
160 GAMES BUILT IN

Internally the motherboard is a fright! Apart from the switch and socket locations, the layout of the board bears little resemblance to the Atari product. There is no shielding over the CPU or VLSIs, only a small metal shield over the RF modulator.

GAME LISTS – FLASHBACK 8 GOLD and ACTIVISION GOLD

| Regular F8G | Activision F8G | Regular F8G Exclusives | Activision F8G Exclusives | On Both F8G Systems |
|---|---|---|---|---|
| 3D Tic-Tac-Toe | 3D Tic-Tac-Toe | Chase It | Atlantis | 3D Tic-Tac-Toe |
| Adventure | Adventure | Escape It | Boxing | Adventure |
| Adventure II | Adventure II | Frogger | Bridge | Adventure II |
| Air Raiders | Air Raiders | Front Line | Checkers | Air Raiders |
| Air•Sea Battle | Air•Sea Battle | Jungle Hunt | Demon Attack | Air•Sea Battle |
| Aquaventure | Aquaventure | Miss It | Dolphin | Aquaventure |
| Armor Ambush | Armor Ambush | Polaris | DragonFire | Armor Ambush |
| Asteroids | Asteroids | Shield Shifter | Freeway | Asteroids |
| Astroblast | Astroblast | Space Invaders | Grand Prix | Astroblast |
| Atari Climber | Atari Climber | | Ice Hockey | Atari Climber |
| Backgammon | Atlantis | | Laser Blast | Backgammon |
| Basketball | Backgammon | | Plaque Attack | Basketball |
| Beamrider | Basketball | | Private Eye | Beamrider |
| Black Jack | Beamrider | | River Raid II | Black Jack |
| Bowling | Black Jack | | Skiing | Bowling |
| Breakout | Bowling | | Sky Jinks | Breakout |
| Canyon Bomber | Boxing | | Space Shuttle | Canyon Bomber |
| Centipede | Breakout | | Spider Fighter | Centipede |
| Championship Soccer | Bridge | | Tennis | Championship Soccer |
| Chase It | Canyon Bomber | | | Chopper Command |
| Chopper Command | Centipede | | | Circus Atari |
| Circus Atari | Championship Soccer | | | Combat |
| Combat | Checkers | | | Combat Two |
| Combat Two | Chopper Command | | | Cosmic Commuter |
| Cosmic Commuter | Circus Atari | | | Crackpots |
| Crackpots | Combat | | | Crystal Castles |
| Crystal Castles | Combat Two | | | Dark Cavern |
| Dark Cavern | Cosmic Commuter | | | Decathlon |
| Decathlon | Crackpots | | | Demons to Diamonds |
| Demons to Diamonds | Crystal Castles | | | Desert Falcon |
| Desert Falcon | Dark Cavern | | | Dodge 'Em |
| Dodge 'Em | Decathlon | | | Double Dunk |
| Double Dunk | Demon Attack | | | Dragster |
| Dragster | Demons to Diamonds | | | Enduro |
| Enduro | Desert Falcon | | | Fatal Run |
| Escape It | Dodge 'Em | | | Fishing Derby |
| Fatal Run | Dolphin | | | Flag Capture |
| Fishing Derby | Double Dunk | | | Football |
| Flag Capture | DragonFire | | | Frog Pond |
| Football | Dragster | | | Frogs and Flies |
| Frog Pond | Enduro | | | Frostbite |
| Frogger | Fatal Run | | | Fun with Numbers |
| Frogs and Flies | Fishing Derby | | | Golf |
| Front Line | Flag Capture | | | Gravitar |
| Frostbite | Football | | | H.E.R.O. |

Premiere Issue Monkeying Around Game Reviews: Special Report

Classic Gamer Magazine, Premiere Issue, Fall 1999 (U.S.), www.classicgamer.com
Atari · Coleco · Intellivision · Computers · Vectrex · Arcade
"Because Newer Isn't Necessarily Better!"

Special Report: Classic Videogames at E3
Monkeying Around: Revisiting Donkey Kong
Game Reviews: Atari, Intellivision, etc...
Lost Arcade Classic: Warp Warp
Deep Thaw: Chris Lion Rediscovers His Atari
Plus! Latest News · Guide to Halloween Games · Win the book "Phoenix"

"As long as you enjoy the system you own and the software made for it, there's no reason to mothball your equipment just because its manufacturer's stock dropped." - Arnie Katz, Editor of Electronic Games Magazine, 1984

Volume 1, Version 1.2, Fall 1999
PUBLISHER/EDITOR-IN-CHIEF: Chris Cavanaugh - [email protected]
ASSOCIATE EDITOR: Sarah Thomas - [email protected]
STAFF WRITERS: Kyle Snyder - [email protected]; Chris Lion - [email protected]; Patrick Wong - [email protected]; Darryl Guenther - [email protected]; Mike Genova - [email protected]; Damien Quicksilver - [email protected]; Frank Traut - [email protected]; Lee Seitz - [email protected]
LAYOUT/DESIGN: Chris Cavanaugh
PHOTO CREDITS: Sarah Thomas - Staff Photographer; Pong Machine scan (page 3) courtesy Sean Kelly - Digital Press CD-ROM; BIRA BIRA photos courtesy Robert Batina
CONTACT INFORMATION: Classic Gamer Magazine, 7770 Regents Road #113-293, San Diego, Ca 92122; e-mail: [email protected]; on the web: http://www.classicgamer.com
SPECIAL THANKS: To Sarah.

CONTENTS
Reset! 5
Raves 'N Rants: Letters from our readers 6
Classic Gamer Newswire: All the latest news 8
Book Bytes: Joystick Nation 12
Classic Advertisement: Arcadia Supercharger 14
Atari 5200 15
The "New" Classic Gamer: Opinion Column 16
Lost Arcade Classics: "Warp Warp" 17
Focus on Intellivision Cartridge Reviews 18
Doin' The Donkey Kong: A closer look at our favorite monkey 20
Atari 2600 Cartridge Reviews 23

Dancing Wings Butterfly Garden Exhibit Page 4 Opening 8 New

PLAYTime: News and Events for Members, Donors, and Friends
Spring 2017 • Volume 7 • Issue 3
Raceway Arcade Exhibit 3 • Princess Palooza 6 • Over the Rainbow 7 • World Video Game Hall of Fame 7 • Have a Ball Dancing Wings Butterfly Garden Exhibit Page 4 Opening 8

A New Era of Leadership: Get to Know Steve Dubnik

What can you tell us about The Strong Neighborhood of Play?
I've been working for nearly three years with my predecessor, G. Rollie Adams, on The Strong Neighborhood of Play. Along with partners Konar Properties and Indus Hospitality Group, The Strong proposed a plan [...]

What can you tell us about your family?
My wife, Claire, and I have a college-aged son and daughter, who have been coming to the museum since they were children. We became members of the museum in the late 1990s, and I have fond memories [...]

NEW EXHIBIT: Raceway Arcade Now Open Through September 4
Play your way through the history of electronic driving games at Raceway Arcade, now open in the museum's Central Gallery, and learn about America's long fascination with the need for speed. Start your engine, zip through the evolution of driving games, and see rare artifacts from The Strong's [...] inner-workings of Chicago Coin's Drive Master (1969). Explore the first racing arcade video game, Atari's Gran Trak 10 (1974), and the rare and once controversial Death Race (1976), inspired by the 1975 satirical cult film Death Race 2000 and criticized at the time for its depictions of violence.

DP Guide Lite US

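Atari 2600 USA, Digital Press

| Title/Publisher | Rarity | G | B | I |
|---|---|---|---|---|
| 3-D Tic-Tac-Toe/Atari | R2 | | | |
| 3-D Tic-Tac-Toe/Sears | R3 | | | |
| A Game of Concentration/Atari | R3 | | | |
| Action Pak/Atari | R6 | | | |
| Adventure/Atari | R1 | | | |
| Adventure/Sears | R3 | | | |
| Adventures of Tron/M Network | R2 | | | |
| Air Raid/Men-a-Vision | R10 | | | |
| Air Raiders/M Network | R2 | | | |
| Airlock/Data Age | R2 | | | |
| Air-Sea Battle/Atari | R1 | | | |
| Alien/20th Cent Fox | R3 | | | |
| Alpha Beam with Ernie/Atari | R3 | | | |
| Amidar/Parker Bros | R2 | | | |
| Arcade Golf/Sears | R3 | | | |
| Arcade Pinball/Sears | R3 | | | |
| Armor Ambush/M Network | R2 | | | |
| Artillery Duel/Xonox | R6 | | | |
| Artillery Duel/Chuck Norris SuperKi | R4 | | | |
| Artillery Duel/Ghost Manor/Xonox | R7 | | | |
| Beat 'Em & Eat 'Em/Lady in Wadin | R7 | | | |
| Berenstain Bears/Coleco | R6 | | | |
| Bermuda Triangle/Data Age | R2 | | | |
| Berzerk/Atari | R1 | | | |
| Berzerk/Sears | R3 | | | |
| Big Bird's Egg Catch/Atari | R2 | | | |
| Blackjack/Atari | R1 | | | |
| Blackjack/Sears | R2 | | | |
| Blue Print/CBS Games | R3 | | | |
| BMX Airmaster/Atari | R10 | | | |
| BMX Airmaster/TNT Games | R4 | | | |
| Bogey Blaster/Telegames | R3 | | | |
| Boing!/First Star | R7 | | | |
| Bowling/Atari | R1 | | | |
| Bowling/Sears | R2 | | | |
| Boxing/Activision | R1 | | | |
| Brain Games/Atari | R2 | | | |
| Brain Games/Sears | R3 | | | |
| Breakaway IV/Sears | R3 | | | |
| Breakout/Atari | R1 | | | |
| Chuck Norris Superkicks/Spike's Pe | R7 | | | |
| Circus/Sears | R3 | | | |
| Circus Atari/Atari | R1 | | | |
| Coconuts/Telesys | R3 | | | |
| Codebreaker/Atari | R2 | | | |
| Codebreaker/Sears | R3 | | | |
| Color Bar Generator/Videosoft | R9 | | | |
| Combat/Atari | R1 | | | |
| Commando/Activision | R3 | | | |
| Commando Raid/US Games | R3 | | | |
| Communist Mutants From Space/St | R3 | | | |
| Condor Attack/Ultravision | R8 | | | |
| Congo Bongo/Sega | R2 | | | |
| Cookie Monster Munch/Atari | R2 | | | |
| Copy Cart/Vidco | R8 | | | |
| Cosmic Ark/Imagic | R2 | | | |
| Cosmic Commuter/Activision | R4 | | | |
| Cosmic Corridor/Zimag | R6 | | | |
| Cosmic Creeps/Telesys | R3 | | | |
| Cosmic Swarm/CommaVid | | | | |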

Genealogies of Capture and Evasion: a Radical Black Feminist Meditation for Neoliberal Times by Lydia M. Kelow-Bennett B.A

Genealogies of Capture and Evasion: A Radical Black Feminist Meditation for Neoliberal Times
By Lydia M. Kelow-Bennett
B.A., University of Puget Sound, 2002
M.A., Georgetown University, 2011
A.M., Brown University, 2016

A dissertation submitted in partial fulfillment of the requirements for the Doctorate of Philosophy in the Department of Africana Studies at Brown University
Providence, RI
May 2018

© Copyright 2018 by Lydia Marie Kelow-Bennett

This dissertation by Lydia M. Kelow-Bennett is accepted in its present form by the Department of Africana Studies as satisfying the dissertation requirement for the degree of Doctor of Philosophy.

Date________________ ______________________________ Matthew Guterl, Advisor

Recommended to the Graduate Council

Date________________ ______________________________ Anthony Bogues, Reader
Date________________ ______________________________ Françoise Hamlin, Reader

Approved by the Graduate Council

Date________________ ____________________________________ Andrew G. Campbell, Dean of the Graduate School

[CURRICULUM VITAE]

Lydia M. Kelow-Bennett was born May 13, 1980 in Denver, Colorado, the first of four children to Jerry and Judy Kelow. She received a B.A. in Communication Studies with a minor in Psychology from the University of Puget Sound in 2002. Following several years working in education administration, she received an M.A. in Communication, Culture and Technology from Georgetown University in 2011. She received an A.M. in Africana Studies from Brown University in 2016. Lydia has been a committed advocate for students of color at Brown and served as a Graduate Coordinator for the Sarah Doyle Women's Center for a number of years. She was a 2017-2018 Pembroke Center Interdisciplinary Opportunity Fellow and will begin as an Assistant Professor in the Department of Afroamerican and African Studies at the University of Michigan in Fall 2018.

2005 Minigame Multicart 3-D Tic-Tac-Toe 32 in 1 Game Cartridge a Mysterious Thief A-Vcs-Tec Challenge Acid Drop Actionauts Adven

2005 MINIGAME MULTICART 3-D TIC-TAC-TOE 32 IN 1 GAME CARTRIDGE A MYSTERIOUS THIEF A-VCS-TEC CHALLENGE ACID DROP ACTIONAUTS ADVENTURE ADVENTURES OF TRON AIR RAID AIR RAIDERS AIR-SEA BATTLE AIRLOCK ALFRED CHALLENGE ALIEN ALIEN GREED ALIEN GREED 2 ALIEN GREED 3 ALLIA QUEST ALPHA BEAM WITH ERNIE AMIDAR AQUAVENTURE ARMOR AMBUSH ARTILLERY DUEL ASTAR ASTERIX ASTEROID FIRE ASTEROIDS ASTRO ATTACK ASTROBLAST ASTROWAR ATARI VIDEO CUBE ATLANTIS ATLANTIS II ATOM SMASHER AVGN K.O. BOXING BACHELOR PARTY BACHELORETTE PARTY BACKFIRE BACKGAMMON BANK HEIST BARNSTORMING BASE ATTACK BASIC MATH BASIC PROGRAMMING BASKETBALL BATTLEZONE BEAMRIDER BEANY BOPPER BEAT 'EM & EAT 'EM BEE-BALL BERENSTAIN BEARS BERMUDA TRIANGLE BIG BIRD'S EGG CATCH BIONIC BREAKTHROUGH BLACKJACK BLOODY HUMAN FREEWAY BLUEPRINT BMX AIR MASTER BOBBY IS GOING HOME BOGGLE BOING! BOULDER DASH BOWLING BOXING BRAIN GAMES BREAKOUT BRIDGE BUCK ROGERS - PLANET OF ZOOM BUGS BUGS BUNNY BUMP 'N' JUMP BUMPER BASH BURGERTIME BURNING DESIRE CABBAGE PATCH KIDS - ADVENTURES IN THE PARK CAKEWALK CALIFORNIA GAMES CANYON BOMBER CARNIVAL CASINO CAT TRAX CATHOUSE BLUES CAVE IN CENTIPEDE CHALLENGE CHAMPIONSHIP SOCCER CHASE THE CHUCK WAGON CHECKERS CHEESE CHINA SYNDROME CHOPPER COMMAND CHUCK NORRIS SUPERKICKS CIRCUS CLIMBER 5 COCO NUTS CODE BREAKER COLONY 7 COMBAT COMBAT TWO COMMANDO COMMANDO RAID COMMUNIST MUTANTS FROM SPACE COMPUMATE COMPUTER CHESS CONDOR ATTACK CONFRONTATION CONGO BONGO CONQUEST OF MARS COOKIE MONSTER MUNCH COSMIC ARK COSMIC COMMUTER COSMIC CREEPS COSMIC INVADERS COSMIC SWARM CRACK'ED CRACKPOTS