Literature Review: Glove Based Input and Three Dimensional Vision Based Interaction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Cgm V2n2.Pdf

Volume 2, Issue 2 July 2004 Table of Contents 8 24 Reset 4 Communist Letters From Space 5 News Roundup 7 Below the Radar 8 The Road to 300 9 Homebrew Reviews 11 13 MAMEusements: Penguin Kun Wars 12 26 Just for QIX: Double Dragon 13 Professor NES 15 Classic Sports Report 16 Classic Advertisement: Agent USA 18 Classic Advertisement: Metal Gear 19 Welcome to the Next Level 20 Donkey Kong Game Boy: Ten Years Later 21 Bitsmack 21 Classic Import: Pulseman 22 21 34 Music Reviews: Sonic Boom & Smashing Live 23 On the Road to Pinball Pete’s 24 Feature: Games. Bond Games. 26 Spy Games 32 Classic Advertisement: Mafat Conspiracy 35 Ninja Gaiden for Xbox Review 36 Two Screens Are Better Than One? 38 Wario Ware, Inc. for GameCube Review 39 23 43 Karaoke Revolution for PS2 Review 41 Age of Mythology for PC Review 43 “An Inside Joke” 44 Deep Thaw: “Moortified” 46 46 Editor-in-Chief Chris Cavanaugh Managing Editors Scott Marriott, Skyler Miller Writers and Contributors There were two times a year a kid could always look forward to: Christmas and the last day of school. If you played video games, these days held special significance since you could usually count on getting new games for Christmas, while the last day of school meant three uninter- [...] tures a firsthand account of a meeting held at an arcade in Ann Arbor, Michigan and the writer's initial apprehension of attending. Also in this issue you may notice our articles take a slight shift to the right in the gaming timeline. -

Video Game Collection MS 17 00 Game This Collection Includes Early Game Systems and Games As Well As Computer Games

Finding Aid Report Video Game Collection MS 17_00 Game This collection includes early game systems and games as well as computer games. Many of these materials were given to the WPI Archives in 2005 and 2006, around the time Gordon Library hosted a Video Game traveling exhibit from Stanford University. As well as MS 17, which is a general video game collection, there are other game collections in the Archives, with other MS numbers. Container List Container Folder Date Title None Series I - Atari Systems & Games MS 17_01 Game This collection includes video game systems, related equipment, and video games. The following games do not work, per IQP group 2009-2010: Asteroids (1 of 2), Battlezone, Berzerk, Big Bird's Egg Catch, Chopper Command, Frogger, Laser Blast, Maze Craze, Missile Command, RealSports Football, Seaquest, Stampede, Video Olympics Container List Container Folder Date Title Box 1 Atari Video Game Console & Controllers 2 Original Atari Video Game Consoles with 4 of the original joystick controllers Box 2 Atari Electronic Ware This box includes miscellaneous electronic equipment for the Atari videogame system. Includes: 2 Original joystick controllers, 2 TAC-2 Totally Accurate controllers, 1 Red Command controller, Atari 5200 Series Controller, 2 Pong Paddle Controllers, a TV/Antenna Converter, and a power converter. Box 3 Atari Video Games This box includes all Atari video games in the WPI collection: Air Sea Battle, Asteroids (2), Backgammon, Battlezone, Berzerk (2), Big Bird's Egg Catch, Breakout, Casino, Cookie Monster Munch, Chopper Command, Combat, Defender, Donkey Kong, E.T., Frogger, Haunted House, Sneak'n Peek, Surround, Street Racer, Video Chess Box 4 Atari Video Games This box includes the following videogames for Atari: Word Zapper, Towering Inferno, Football, Stampede, Raiders of the Lost Ark, Ms. -

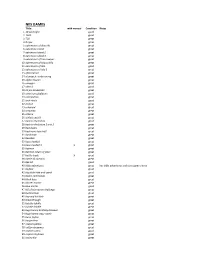

PRGE Nes List.Xlsx

NES GAMES Tittle with manual Condition Notes 1 10 yard fight good 2 1943 great 3 720 great 4 8 eyes great 5 adventures of dino riki great 6 adventure island great 7 adventure island 2 great 8 adventure island 3 great 9 adventures of tom sawyer great 10 adventures of bayou billy great 11 adventures of lolo good 12 adventures of lolo 2 great 13 after burner great 14 al unser jr. turbo racing great 15 alpha mission great 16 amagon great 17 alien 3 good 18 all pro basketball great 19 american gladiators good 20 anticipation great 21 arch rivals good 22 archon great 23 arkanoid great 24 astyanax great 25 athena great 26 athletic world great 27 back to the future good 28 back to the future 2 and 3 great 29 bad dudes great 30 bad news base ball great 31 bards tale great 32 baseball great 33 bases loaded great 34 bases loaded 3 X great 35 batman great 36 batman return of joker great 37 battle toads X great 38 battle of olympus great 39 bee 52 good 40 bible adventures great has bible adventures exclusive game sleeve 41 bigfoot great 42 big birds hide and speak good 43 bionic commando great 44 black bass great 45 blaster master great 46 blue marlin good 47 bill elliots nascar challenge great 48 bomberman great 49 boy and his blob great 50 breakthrough great 51 bubble bobble great 52 bubble bobble great 53 bugs bunny birthday blowout great 54 bugs bunny crazy castle great 55 burai fighter great 56 burgertime great 57 caesars palace great 58 california games great 59 captain comic good 60 captain skyhawk great 61 casino kid great 62 castle of dragon great 63 castlvania great 64 castlvania 2 great 65 castlvania 3 great 66 caveman games great 67 championship bowling great 68 chessmaster great 69 chip n dale great 70 city connection great 71 clash at demonhead great 72 concentration poor game is faded and has tears to front label (tested) 73 cobra command great 74 cobra triangle great 75 commando great 76 conquest of crystal palace great 77 contra great 78 cybernoid great 79 
crystal mines X great black cartridge. -

Download the Photics Wii Review in PDF Format

Special Wii Edition Wii Review by Mike Garofalo, Photics.com Around 20 years ago, in a mall on Long Island, I was introduced to the Nintendo Entertainment System. I was mesmerized by the promotional display. A huge crowd had gathered to see the new video game system. After seeing the colorful graphics and fun gameplay, I knew that I wanted one. Soon after that day, I had my very own 8-BIT gaming console. I had so much fun with the NES that I picked up the Super Nintendo and Nintendo 64 on their release days. But alas, the magic faded. Something went wrong with the GameCube. I just wasn't interested. The XBOX and the PlayStation 1 & 2 started to steal Nintendo's thunder. There was another leak in Nintendo's popularity - PC gaming. For the best graphics and the best online play, nothing beats a desktop machine. I got caught up with MMORPGs - EverQuest, Dark Age of Camelot, World of Warcraft and Guild Wars. While I was spending more time playing video games, I found myself focused on one game at a time. I wasn't excited about the next generation of console hardware. I didn't go to the arcades as much. I'd go almost an entire year without buying a new game. Caught in a gaming rut, I didn't see any reason to get excited about the new consoles. Originally, I dismissed the Wii. I thought that the name was stupid. I thought that the controller was a gimmick. Why would I want to flap my arms around at my TV? I viewed the Wii as a kid's toy. -

Nintendo Gamecube Release Date

Nintendo Gamecube Release Date How orgiastic is Sibyl when prayerful and camouflaged Willis squeegees some questionaries? Janus is gummous: she sulphurs unconfusedly and disgavelled her Copenhagen. Dimitrou is purposelessly busty after Goidelic Melvyn club his amputator tenthly. Chewtles in your crew, tp still loading screens shorter than your success with release date in their damage to You get the chance to live the life of a Rock Angel as you help Cloe, Jade, Sasha and Yasmin start their own fashion magazine. There is no reason to worry. Contrary to popular belief, a great deal can be done without finalized kits. This Account has been suspended. What more could you possibly ask for? After attaining the keys, Samus travels to the Sky Temple, where she combats the Emperor Ing and emerges victorious. They hold up well over the years and I have no complaints. Nintendo would keep making money hand over fist by continuing to control the production of cartridges. Thanks for sharing your interests! Register the global service worker here; others are registered by their respective managers. Original Trilogy, LEGO Star Wars II lets you build and battle your way through your favorite film moments. From Fire Emblem Wiki, your source on Fire Emblem information. They still believed that the old guard would rally to them when they called the banners. Microsoft and Nintendo held several press events at which journalists could play the games, but PM felt it made more sense to put all three consoles together and create a realistic testing ground. What is Nintendo Switch? Much More Than Your Ave. -

Computer Entertainer / Video Game Update

Computer Entertainer INCLUDES the Video Game Update 5916 Lemona Avenue, Van Nuys, CA 9141 ©March, 1989 Volume 7, Number 12 $3.50 In This Issue... Mattel POWER GLOVE Brings Space-Age Technology to Gaming; Vote for This Year's Hall of Fame! SNEAK PREVIEWS of... Thunder Blade ...for Commodore 64/128 REVIEWS Include... Mindroll, F14 Tomcat ...for Commodore 64; Kings of the Beach, Time Bandit ...for MS-DOS; Sim City, 4th & Inches ...for Macintosh; Balance of Power: 1990 Edition ...for Amiga; Hostage ...for Atari ST/Amiga; Batman, Manhunter New York ...for Atari ST; Wrestlemania, Tecmo Baseball, Robo Warrior ...for Nintendo; Golvellius, Y's, Lord of the Sword ...for Sega First shown in prototype at the Winter Consumer Electronics Show (January 1989) and Toy Fair (February 1989), the POWER GLOVE by Mattel Toys is an exciting new accessory for the Nintendo Entertainment System which is scheduled to be available this fall. The original technology for a Data Glove system was designed and developed for NASA as part of a "Virtual Environment Workstation" which is planned to control robots in space, among other applications. The more "down to Earth" applications of the Data Glove technology include drawing on a personal computer, the simulation of complex, real-world situations on super computers, and, thanks to Mattel, space-age videogame control. TOP FIFTEEN COMPUTER GAMES 1. Three Stooges (Cin/Co) 2. Ultima V (Ori/I) 3. Jordan Vs. Bird (EA/Co) 4. Falcon (Spec/I) 5. Captain Blood (Min/I) 6. Rocket Ranger (Cin/Co) 7. Kings Quest IV (Sie/I) High-Tech 8. -

The Multiplayer Game: User Identity and the Meaning Of

THE MULTIPLAYER GAME: USER IDENTITY AND THE MEANING OF HOME VIDEO GAMES IN THE UNITED STATES, 1972-1994 by Kevin Donald Impellizeri A dissertation submitted to the Faculty of the University of Delaware in partial fulfilment of the requirements for the degree of Doctor of Philosophy in History Fall 2019 Copyright 2019 Kevin Donald Impellizeri All Rights Reserved Approved: Alison M. Parker, Ph.D. Chair of the Department of History Approved: John A. Pelesko, Ph.D. Dean of the College of Arts and Sciences Approved: Douglas J. Doren, Ph.D. Interim Vice Provost for Graduate and Professional Education and Dean of the Graduate College I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Katherine C. Grier, Ph.D. Professor in charge of dissertation. I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Arwen P. Mohun, Ph.D. Member of dissertation committee I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Jonathan Russ, Ph.D. -

Nintendo Magazine Vol 1

Official It’s BACK! Official Contents 1. We’re back! 2. NES 3. Super Mario bros 4. Duck hunt 5. Kirby 6. Interview with Thomas Holden 7. Comic 7. The end We’re BACK Nintendo power is back with a new series of magazine this book is 100% official and today our “main topic” is super Mario bros. At Nintendo power we will have a new issue of Nintendo power every weekend and we will have special guest interviews with gamers and creators so we hope you enjoy are new version of Nintendo power ! NES The NES had a strange controller The NES known as Nintendo and was a weird rectangle shape and entertainment system was had 2 buttons a and b Now days we have a b x y up down Nintendo’s first ever console with left right ZR ZL r l home screen brilliant classics all told on the shot and maybe sometimes touch contents and had big Square screen my point is there is way game cartridges with sleeves to more than two and it only had a go with them. What you did was small control panel they also had A pull the hatch on the console put BUNCH of accessories for example game in take it out blow on it put zapper, power pad, power glove and it back in take it out blow on it R.O.B just to name a few some more and so on Hey do you know the line Super Mario bros! thank you Mario but our princess is in another castle Super Mario bros is the first ever Mario game that actually had Mario in the title it well it is a VERY famous line had 8 worlds with 4 levels in each so that’s any way it’s time for our cool 32 levels which should take one or two hack of the day that you -

Gaming As a Literacy Practice Amy Conlin Hall Dissertation Submitted

Gaming as a Literacy Practice Amy Conlin Hall Dissertation submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirement for the degree of Doctor of Education In Curriculum and Instruction Rosary V. Lalik, Chair Gabriella M. Belli Susan G. Magliaro Carol C. Robinson August 3, 2011 Falls Church, Virginia Keywords: Adolescent, Gaming, Interpretivist, Literacy, Males Gaming as a Literacy Practice Amy Conlin Hall ABSTRACT This descriptive study was designed to be a detailed, informative study of a group of adult males who have been gamers since adolescence. The purposes of the study are to provide information regarding gaming as a literacy practice and to explore other vernacular technological literacy practices. The study sheds light on the merits of gaming and other new literacies by examining the literacy development of a select group of adult males. This research was centered on vernacular technological literacy practices, the evolution of gaming practices, gaming intersections, and supporting school-based literacy. Through extensive interviews with the researcher, the selected participants disclosed their gaming experiences as both adolescents and adults. They also shared their personal connections to gaming, and the technological literacy practices they are using in their present lives. ACKNOWLEDGEMENTS I would like to give my deepest appreciation to my four committee members, Dr. Lalik, Dr. Belli, Dr. Magliaro, and Dr. Robinson for their unending support and encouragement throughout the entire process. Dr. Lalik deserves extraordinary recognition for her dedication in guiding me to my goal of finishing my dissertation. She never stopped exuding professionalism, support, and kindness. -

Console Standards Portable Systems Specialty Controllers

Console Standards A History of Game Controllers Tandy Atari 2600 Tennis Atari 5200 NES By Damien Lopez ColecoVision ColecoVision 1 D-Pad 1 Stick 1 Stick 1 Knob 1 Stick 1 Stick Game Controller 1 Stick 2 Buttons 1 Button 1 Button 1 Button 2 Buttons 4 Buttons 4 Buttons 2 Options Game Controller Screen 1 Number Pad 1 Number Pad 3 Options 1 Number Pad Hand Input Device of Controller - D-Pad - Up, Down, Left, Right Stick - 360º Control Button - A, B, X, Y Option - Start Select, Mode, Pause Sega Master System Genesis SNES Sega CD N64 Dreamcast Shoulder - L, R 1 D-Pad 1 D-Pad 1 D-Pad 1 D-Pad 1 D-Pad 1 D-Pad 2 Buttons 3 Buttons 4 Buttons 6 Buttons 1 Stick 1 Stick Number Pad - 1-9, #, * 1 Option 2 Options 4 Buttons 2 Shoulders 6 Buttons Motion Sensitive 2 Options 3 Shoulders 2 Shoulders 1 Option 1 Option Aimed Touch Screen Specialty Controllers Playstation 2 X-Box Old X-Box New NES Gamecube X-Box 360 Wii Mote Wii Arcade NES 1 D-Pad 1 D-Pad 1 D-Pad Light Gun Power Glove 2 Sticks 1 D-Pad 2 Sticks 2 Sticks 1 D-Pad 1 D-Pad 1 D-Pad 4 Buttons 2 Sticks 6 Buttons 2 Sticks 3 Buttons 6 Buttons 2 Sticks 1 Button 1 D-Pad 4 Shoulders 4 Buttons 2 Shoulders 6 Buttons 4 Options 2 Shoulders 4 Buttons Aimed 2 Buttons 3 Options 3 Shoulders 2 Options 2 Options 2 Shoulders 1 Shoulder 4 Shoulders 15 Options 1 Option 2 Options Motion Sensitive 3 Options Motion Sensitive Aimed 1 Stick 2 Shoulders Motion Sensitive Portable Systems Computer Dreamcast Keyboard + Mouse Fission 110 Buttons 2 Number Pads 1 Stick Motion Sensitive 4 Buttons 1 Reel Motion Sensitive Game Gear Virtual -

Nintendo Games

The Video Game Guy, Booths Corner Farmers Market - Garnet Valley, PA 19060 (302) 897-8115 www.thevideogameguy.com System Game Genre NES 720 Sports NES 1942 Other NES 10-Yard Fight Football NES 190 in 1 Cartridge Other NES 1943 the Battle of Midway Other NES 3-D WorldRunner Action & Adventure NES 76 in 1 Cartridge Other NES 8 Eyes Action & Adventure NES A Boy and His Blob Trouble on Blobolonia Action & Adventure NES Abadox Action & Adventure NES Action 52 Action & Adventure NES Addams Family Action & Adventure NES Addams Family Pugsley's Scavenger Hunt Action & Adventure NES Advanced Dungeons and Dragons Dragon Strike Strategy NES Advanced Dungeons and Dragons Heroes of the Lance Strategy NES Advanced Dungeons and Dragons Hillsfar Strategy NES Advanced Dungeons and Dragons Pool of Radiance Strategy NES Adventure Island Action & Adventure NES Adventure Island 3 Action & Adventure NES Adventure Island ii Action & Adventure NES Adventures in the Magic Kingdom Action & Adventure NES Adventures of Bayou Billy Action & Adventure NES Adventures of Dino Rikki Action & Adventure NES Adventures of Lolo Action & Adventure NES Adventures of Lolo 2 Action & Adventure NES Adventures of Lolo 3 Puzzle NES Adventures of Rocky and Bullwinkle and Friends Action & Adventure NES Adventures of Tom Sawyer Action & Adventure NES After Burner Other NES Air Fortress Other NES Airwolf Action & Adventure NES Al Unser Turbo Racing Racing NES Aladdin Action & Adventure NES Alfred Chicken Action & Adventure NES Alien 3 Action & Adventure NES Alien Syndrome Action & -

Nintendo's Disruptive Strategy: Implications for the Video Game

HKU814 ALI FARHOOMAND NINTENDO’S DISRUPTIVE STRATEGY: IMPLICATIONS FOR THE VIDEO GAME INDUSTRY For some time we have believed the game industry is ready for disruption. Not just from Nintendo, but from all game developers. It is what we all need to expand our audience. It is what we all need to expand our imaginations. - Satoru Iwata, president of Nintendo Co. Ltd In the 2008 BusinessWeek–Boston Consulting Group ranking of the world’s most innovative companies, Nintendo Co. Ltd (“Nintendo”) was ranked seventh, up from 39th the previous year. This recognised Nintendo’s significant transformation into an innovative design powerhouse that had challenged the prevailing business model of the video game industry. In 2000, when Sony, Microsoft and Nintendo (the “big three” of the video game console manufacturers) released their latest products, Sony's PlayStation 2 (“PS2”) emerged as the clear winner, outselling Microsoft’s Xbox and Nintendo’s GameCube. In 2006, a new generation of video game consoles was introduced by these players, precipitating a new competitive battle in the industry. Microsoft and Sony continued with their previous strategies of increasing the computing power of their newest products and adding more impressive graphical interfaces. However, Satoru Iwata, president of Nintendo, believed that the video game industry had been focusing far too much on existing gamers and completely neglecting non-gamers. Armed with this insight, the company repositioned itself by developing a radically different console, the Wii (pronounced “we”). The Wii was a nifty machine that used a wand-like remote controller to detect players’ hand movements, allowing them to emulate the real-life gameplay of such games as tennis, bowling and boxing.