Zak Middleton STS 145 March 18, 2002



Recommended publications


General Game Design: Strategy Games

Chapter 3. General Game Design: Strategy Games

The experts: Sid Meier, Firaxis • Bill Roper, Blizzard North • Brian Reynolds, Big Huge Games • Bruce C. Shelley, Ensemble Studios • Peter Molyneux, Lionhead Studios • Alex Garden, Relic Entertainment • Louis Castle, Electronic Arts/Westwood Studios • Chris Sawyer, Freelance • Rick Goodman, Stainless Steel Studios • Phil Steinmeyer, PopTop Software • Ed Del Castillo, Liquid Entertainment

Do you like to use some brains along with (or instead of) brawn when gaming? This chapter is for you: how to create breathtaking strategy games. And do we have a roundtable of celebrities for you!

Sid Meier, Firaxis

There’s a very good reason why Sid Meier is one of the most accomplished and respected game designers in the business. He pioneered the industry with a number of unprecedented instant classics, such as the very first combat flight simulator, F-15 Strike Eagle; then Pirates, Railroad Tycoon, and of course, a game often voted the number one game of all time, Civilization. Meier has contributed to a number of chapters in this book, but here he offers a few words on game inspiration.

“Find something you as a designer are excited about,” begins Meier. “If not, it will likely show through your work.” Meier also reminds designers that this is a project that they’ll be working on for about two years, and designers have to ask themselves whether this is something they want to work on every day for that length of time. From a practical point of view, Meier says, “You probably don’t want to get into a genre that’s overly exhausted.” For me, working on SimGolf is a fine example, and Gettysburg is another: something I’ve been fascinated with all my life, and it wasn’t mainstream, but was a lot of fun to write, a fun game to put together.

UvA-DARE (Digital Academic Repository)

UvA-DARE (Digital Academic Repository). Engineering emergence: applied theory for game design. Dormans, J. Publication date: 2012. Citation for published version (APA): Dormans, J. (2012). Engineering emergence: applied theory for game design. Creative Commons.

General rights: It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons).

Disclaimer/Complaints regulations: If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl). Download date: 26 Sep 2021.

Ludography: Agricola (2007, board game). Lookout Games, Uwe Rosenberg. America's Army (2002, PC). U.S. Army. Angry Birds (2009, iOS, Android, others). Rovio Mobile Ltd. Ascent (2009, PC). J. Dormans. Assassin's Creed (2007, PS3, Xbox 360). Ubisoft, Inc., Ubisoft Divertissements Inc. Baldur's Gate (1998, PC). Interplay Productions, Inc. BioWare Corporations. Bejeweled (2000, PC). PopCap Games, Inc. Bewbees (2011, Android, iOS). GewGawGames. Blood Bowl, third edition (1994, board game).

Peder Larson

Command and Conquer, by Peder Larson. Professor Henry Lowood, STS 145, March 18, 2002.

Introduction: It was impossible to be a computer gamer in the mid-1990s and not be aware of Command and Conquer. During 1995 and the following couple of years, Command and Conquer mania was at its peak, and the game spawned a whole series that sold over 15 million copies worldwide, making it "The best selling Computer Strategy Game Series of All Time" (Westwood Studios). Sales reached $450 million before Command and Conquer: Tiberian Sun, the third major title in the series, was released in 1999 (Romaine). The game introduced many to the genre of real-time strategy (RTS) games, which also includes Starcraft, Warcraft, Age of Empires, and Total Annihilation. Command and Conquer, however, was extremely similar to Dune 2, a 1992 release by Westwood Studios. The interface, controls, and "harvest, build, and destroy" style were all borrowed, with some minor improvements. Yet Command and Conquer was much more successful. The graphics were better and the plot was new, but the most important improvement was the integration of network play. This greatly increased the time spent playing the game and built up a community surrounding the game. These multiplayer capabilities made Command and Conquer different from, and more successful than, previous RTS games.

History: In 1983, there was only one store in Las Vegas that sold Apple hardware and software: Century 23. Louis Castle had just finished majoring in fine arts and computer science at the University of Nevada, Las Vegas, and was working there as a salesman.

Disruptive Innovation and Internationalization Strategies: The Case of the Videogame Industry, by Shoma Patnaik

HEC MONTRÉAL. Disruptive Innovation and Internationalization Strategies: The Case of the Videogame Industry, by Shoma Patnaik. Management Sciences (International Business option). Thesis submitted in view of obtaining the degree of Master of Science in Management (M. Sc.). December 2017. © Shoma Patnaik, 2017.

Abstract (Résumé): This thesis analyzes two highly relevant trends in today's business world: disruptive innovation and internationalization. Disruptive innovation in particular has become a buzzword. However, it has not been studied enough in academic research, especially in the context of international business. Moreover, the theory of disruptive innovation is frequently misunderstood and misapplied. This thesis therefore aims to fill these gaps, not only by examining in detail the theory of disruptive innovation, its theoretical antecedents, and its links with internationalization, but also, by situating the study in the video game industry, by uncovering new industry trends and practices through an examination of the rise of mobile and online games. The thesis begins by outlining the links between disruptive innovation and internationalization, on the basis that the search for new markets is a critical element of the theory of disruptive innovation. Formulating propositions drawn from the academic literature, I posit that "disruptive" firms will internationalize faster than traditional firms. In addition, they will find it easier to overcome the obstacle of distance between markets and will enter unknown and untapped domains.

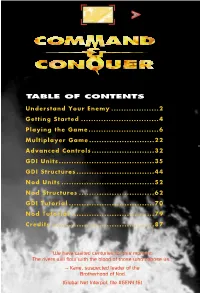

CC-Manual.Pdf

TABLE OF CONTENTS: Understand Your Enemy; Getting Started; Playing the Game; Multiplayer Game; Advanced Controls; GDI Units; GDI Structures; Nod Units; Nod Structures; GDI Tutorial; Nod Tutorial; Credits.

"We have waited centuries for this moment. The rivers will flow with the blood of those who oppose us." -- Kane, suspected leader of the Brotherhood of Nod (Global Net Interpol, file #GEN4:16)

THE BROTHERHOOD OF NOD. Commonly: The Brotherhood, The Ways of Nod, Sha'Seer among the tribes of Godan; see INTERPOL File ARK936, Aliases of the Brotherhood, for more.

HISTORY

FOUNDED: Date unknown: exaggerated reports place the Brotherhood’s founding before 1,800 BC.

IDEOLOGY: To unite third-world nations under a pseudo-religious political platform with imperialist tendencies. In actuality it is an aggressive and popular neo-fascist, anti-West movement vying for total domination of the world’s peoples and resources. Operates under the popular mantra, “Brotherhood, unity, peace”.

CURRENT HEAD OF STATE: Kane; also known as Caine, Jacob (INTERPOL, File TRX11-12Q); al-Quayym, Amir (MI6 DR-416.52).

BASE OF OPERATIONS: Global. Command posts previously identified at Kuantan, Malaysia; somewhere in Ar-Rub’ al-Khali, Saudi Arabia; Tokyo; Caen, France.

MILITARY STRENGTH: Previously believed to be only a smaller terrorist operation, a recent scandal involving United States defense contractors confirms that the Brotherhood is well-equipped and supports significant land, sea, and air military operations.

NOX UK Manual 2

NOX™ PCCD MANUAL

Warning: To Owners Of Projection Televisions. Still pictures or images may cause permanent picture-tube damage or mark the phosphor of the CRT. Avoid repeated or extended use of video games on large-screen projection televisions.

Epilepsy Warning: Please Read Before Using This Game Or Allowing Your Children To Use It. Some people are susceptible to epileptic seizures or loss of consciousness when exposed to certain flashing lights or light patterns in everyday life. Such people may have a seizure while watching television images or playing certain video games. This may happen even if the person has no medical history of epilepsy or has never had any epileptic seizures. If you or anyone in your family has ever had symptoms related to epilepsy (seizures or loss of consciousness) when exposed to flashing lights, consult your doctor prior to playing. We advise that parents should monitor the use of video games by their children. If you or your child experience any of the following symptoms: dizziness, blurred vision, eye or muscle twitches, loss of consciousness, disorientation, any involuntary movement or convulsion, while playing a video game, IMMEDIATELY discontinue use and consult your doctor.

Precautions To Take During Use

• Do not stand too close to the screen. Sit a good distance away from the screen, as far away as the length of the cable allows.
• Preferably play the game on a small screen.
• Avoid playing if you are tired or have not had much sleep.
• Make sure that the room in which you are playing is well lit.
• Rest for at least 10 to 15 minutes per hour while playing a video game.

Creating Immersive 2D World Visuals for Platform Games – Post-Mortem: Skytails

Creating Immersive 2D World Visuals for Platform Games – Post-Mortem: Skytails. Eveliina Aalto. Master’s Thesis, Master’s Programme in New Media, Game Design and Production, Aalto University, 2020.

“Although an image is clearly visible to the eye, it takes the mind to develop it into a rich, tangible brilliance, into an outstanding painting.” (Jeanne Dobie, Making color sing)

Author: Eveliina Aalto. Title of thesis: Creating immersive 2D world visuals for platform games – Skytails Post-Mortem. Department: School of Arts, Design and Architecture. Degree programme: New Media: Game Design & Production. Supervisor: Miikka Junnila. Advisor: Heikka Valja. Year: 2020. Number of pages: 64. Language: English.

Abstract: Game visuals are a crucial part of the game aesthetics – art evokes emotions, gives information, and creates immersion. Creating visual assets for a game is a complex subject that can be approached in multiple ways. However, the visual choices made in games have many common qualities that can be observed and analyzed. This thesis aims to identify the benefits of applying art theory principles to the visuals of two-dimensional platform games and their impact on the player’s aesthetic experience. The first chapter of the study introduces a brief history of platform games, screen spaces, and the method of parallax scrolling. Platform games, commonly known as platformers, are action games in which the player advances by climbing and jumping between platforms. In 1981, the first platform arcade game, Donkey Kong, was introduced. Since then, the genre has expanded into various sub-genres and more complex graphics as hardware has advanced. The second chapter demonstrates the core principles of art theory regarding color, composition, and shape language.

Electronic Arts: Equity Valuation

Electronic Arts: Equity Valuation. David Nobre Pinto Cardoso. Advisor: Dr. José Carlos Tudela Martins. April 26th, 2019. Dissertation submitted in partial fulfilment of requirements of the Master in Finance, at Católica Lisbon, School of Business and Economics.

Abstract: The following master's thesis was written by David Cardoso under the supervision of Professor Dr. José Carlos Tudela Martins. Its main objective is the valuation of Electronic Arts, a US-based gaming/entertainment company. The Company was founded in 1982 and has operated in that sector ever since, making game software for platforms such as personal computers, consoles and, more recently, smartphones. The Company has enjoyed success through its game franchises, mainly in the sports category. The valuation was carried out using two methods: Discounted Cash Flows (DCF) and Multiples relative valuation. In the DCF method, the Company’s future cash flows are estimated and discounted to the present day. The discounted cash flows are then summed to yield the Enterprise value. Next, the cash on the balance sheet is added and the Company’s debt is subtracted to yield the Equity value. Dividing the Equity value by the number of shares outstanding gives the estimated value per share. In the Multiples relative valuation process, the financials of similar companies are used to compare how those companies are valued with how the Company should be valued. Finally, after both processes, a final recommendation on buying and/or holding the Company is given.
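The DCF steps described in the abstract above can be sketched in a few lines of Python. This is a minimal illustration, not the thesis's actual model: the function name `dcf_value_per_share`, its parameters, and all figures are hypothetical, and the excerpt does not say how cash flows beyond the forecast horizon (for example, a terminal value) are handled, so none is included here.

```python
from typing import Sequence


def dcf_value_per_share(
    cash_flows: Sequence[float],
    discount_rate: float,
    cash: float,
    debt: float,
    shares_outstanding: float,
) -> float:
    """Estimate value per share from forecast cash flows (hypothetical helper).

    cash_flows[0] is assumed to arrive one year from today,
    cash_flows[1] two years from today, and so on.
    """
    if shares_outstanding <= 0:
        raise ValueError("shares_outstanding must be positive")

    # Discount each future cash flow to the present day and sum them:
    # this is the Enterprise value.
    enterprise_value = sum(
        cf / (1.0 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )

    # Add balance-sheet cash and subtract debt to get the Equity value.
    equity_value = enterprise_value + cash - debt

    # Divide by the share count to get the estimated value per share.
    return equity_value / shares_outstanding


if __name__ == "__main__":
    # Made-up inputs chosen only to keep the arithmetic easy to follow.
    value = dcf_value_per_share(
        cash_flows=[110.0, 121.0],
        discount_rate=0.10,
        cash=50.0,
        debt=30.0,
        shares_outstanding=10.0,
    )
    print(f"Estimated value per share: {value:.2f}")
```

With these made-up inputs, each cash flow discounts to roughly 100, so the Enterprise value is about 200, the Equity value about 220, and the per-share estimate about 22.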

Westwood: the Building of a Legacy

Westwood: The Building of a Legacy. “What became Dune II started out as a challenge I made for myself.” (Brett Sperry) David Keh, SUID: 4832754, STS 145, March 18, 2002.

As Will Wright, founder of Maxis and creator of the Sim game phenomenon, said in a presentation about game design, the realm of all video games can be visualized as a game space, with each successful game sitting on a peak. Once a peak is discovered, other game designers do not venture far from it, but rather cling tightly to it. Mr. Wright points out, however, that there are an infinite number of undiscovered peaks out there. Unfortunately, most game designers do not make the jump, and the majority of these peaks are left undiscovered. Only on very rare occasions does a pioneer leap into the game space in hopes of landing on a new peak. This case study examines one such occasion, when a designer made that leap and found himself on a completely unexplored, unlimited, and astounding peak, a leap that changed the landscape of gaming forever. This case history will explore how Westwood Studios contributed to the creation of the real-time strategy (RTS) genre through its pioneering game Dune II: The Building of an Empire. Although there have been several other RTS games from Westwood Studios, I have decided to focus on Dune II specifically, because it was in this project that Westwood Studios contributed most to the RTS genre and the future of computer gaming.

Before the U.S. Copyright Office, Library of Congress

Before the U.S. COPYRIGHT OFFICE, LIBRARY OF CONGRESS. In the Matter of Exemption to Prohibition on Circumvention of Copyright Protection Systems for Access Control Technologies. Docket No. 2014-07. Reply Comments of the Electronic Frontier Foundation.

1. Commenter Information

Mitchell Stoltz Kendra Albert Corynne McSherry (203) 424-0382 Kit Walsh [email protected] Electronic Frontier Foundation 815 Eddy St San Francisco, CA 94109 (415) 436-9333 [email protected]

The Electronic Frontier Foundation (EFF) is a member-supported, nonprofit public interest organization devoted to maintaining the traditional balance that copyright law strikes between the interests of copyright owners and the interests of the public. Founded in 1990, EFF represents over 25,000 dues-paying members, including consumers, hobbyists, artists, writers, computer programmers, entrepreneurs, students, teachers, and researchers, who are united in their reliance on a balanced copyright system that ensures adequate incentives for creative work while facilitating innovation and broad access to information in the digital age. In filing these reply comments, EFF represents the interests of gaming communities, archivists, and researchers who seek to preserve the functionality of video games abandoned by their manufacturers.

2. Proposed Class Addressed

Proposed Class 23: Abandoned Software (video games requiring server communication). Literary works in the form of computer programs, where circumvention is undertaken for the purpose of restoring access to single-player or multiplayer video gaming on consoles, personal computers or personal handheld gaming devices when the developer and its agents have ceased to support such gaming. We propose an exemption to 17 U.S.C. § 1201(a)(1) for users who wish to modify lawfully acquired copies of computer programs for the purpose of continuing to play videogames that are no longer supported by the developer, and that require communication with a server.

Electronic Arts 2001 AR

ELECTRONIC ARTS, 209 REDWOOD SHORES PARKWAY, REDWOOD CITY, CA 94065-1175, www.ea.com. AR 2001.

TO OUR STOCKHOLDERS

Fiscal year 2001 was both a challenging and exciting period for our company and for the industry. The transition from the current generation of game consoles to next generation systems continued, marked by the debut of the first entry in the next wave of new technology – the PlayStation®2 computer entertainment system from Sony. Nintendo and Microsoft will launch new platforms later this year, creating unprecedented levels of competition, creativity and excitement for consumers. Most importantly, this provides a unique opportunity for Electronic Arts to drive revenue and profit growth as we move through the next technology cycle. In conjunction with steady increases in the PC market, plus the significant new opportunity in online gaming, we believe Electronic Arts is poised to achieve new levels of success and leadership in the interactive entertainment category.

In FY01, consolidated net revenue decreased 6.9 percent to $1,322 million. As a result of our investment in building the leading Internet gaming website, consolidated net income decreased $128 million to a consolidated net loss of $11 million, while consolidated diluted earnings per share decreased $0.96 to a diluted loss per share of $0.08. Pro forma consolidated net income and earnings per share, excluding goodwill, non-cash and one-time charges, were $6 million and $0.04, respectively, for the year.

View Annual Report

EA 2000 AR

2000 CONSOLIDATED FINANCIAL HIGHLIGHTS

Fiscal years ended March 31 (in millions, except per share data)

| | 2000 | 1999 | % change |
|---|---|---|---|
| Net Revenues | $1,420 | $1,222 | 16% |
| Operating Income | 154 | 105 | 47% |
| Net Income | 117 | 73 | 60% |
| Diluted Earnings Per Share | 1.76 | 1.15 | 53% |
| Operating Income* | 172 | 155 | 11% |
| Pro-Forma Net Income* | 130 | 114 | 14% |
| Pro-Forma Diluted EPS* | 1.95 | 1.81 | 8% |
| Working Capital | 440 | 333 | 32% |
| Total Assets | 1,192 | 902 | 32% |
| Total Stockholders’ Equity | 923 | 663 | 39% |

2000 PRO-FORMA CORE FINANCIAL HIGHLIGHTS

Fiscal years ended March 31 (in millions, except per share data)

| | 2000 | 1999 | % change |
|---|---|---|---|
| Net Revenues | $1,401 | $1,206 | 16% |
| Operating Income | 208 | 114 | 82% |
| Net Income | 154 | 79 | 95% |
| Pro-Forma Net Income* | 164 | 120 | 37% |

[Bar charts for fiscal 1998, 1999 and 2000: Consolidated Net Revenues (in millions); Consolidated Pro-Forma Net Income* (in millions); Consolidated Pro-Forma Diluted EPS*; Pro-Forma Core Net Revenues (in millions); Pro-Forma Core Net Income* (in millions)]

FISCAL 2000 OPERATING HIGHLIGHTS

• 16 percent increase in EA core net revenues
• 37 percent increase in EA core net income*
• 69 titles released: 30 PC, 30 PlayStation, 8 N64, 1 Mac
• Signed agreement to be exclusive provider of games on AOL properties
• Acquired: DreamWorks Interactive, Kesmai, PlayNation

* EXCLUDES GOODWILL AND ONE-TIME ITEMS IN EACH YEAR.

CHAIRMAN’S LETTER TO OUR STOCKHOLDERS

During fiscal year 2000 Electronic Arts (EA) significantly enhanced its strategic position in the interactive entertainment category.