User Guide 2.5

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Expression Rematerialization for VLIW DSP Processors with Distributed Register Files

Expression Rematerialization for VLIW DSP Processors with Distributed Register Files Chung-Ju Wu, Chia-Han Lu, and Jenq-Kuen Lee Department of Computer Science, National Tsing-Hua University, Hsinchu 30013, Taiwan {jasonwu,chlu}@pllab.cs.nthu.edu.tw,[email protected] Abstract. Spill code is the overhead of memory load/store behavior if the available registers are not sufficient to map live ranges during the process of register allocation. Previously, works have been proposed to reduce spill code for the unified register file. For reducing power and cost in design of VLIW DSP processors, distributed register files and multi-bank register architectures are being adopted to eliminate the amount of read/write ports between functional units and registers. This presents new challenges for devising compiler optimization schemes for such architectures. This paper aims at addressing the issues of reducing spill code via rematerialization for a VLIW DSP processor with distributed register files. Rematerialization is a strategy for register allocator to determine if it is cheaper to recompute the value than to use memory load/store. In the paper, we propose a solution to exploit the characteristics of distributed register files where there is the chance to balance or split live ranges. By heuristically estimating register pressure for each register file, we are going to treat them as optional spilled locations rather than spilling to memory. The choice of spilled location might preserve an expression result and keep the value alive in different register file. It increases the possibility to do expression rematerialization which is effectively able to reduce spill code.

User-Directed Loop-Transformations in Clang

User-Directed Loop-Transformations in Clang Michael Kruse, Hal Finkel, Argonne Leadership Computing Facility, Argonne National Laboratory, Argonne, USA [email protected] hfi[email protected] Abstract: Directives for the compiler such as pragmas can help programmers to separate an algorithm’s semantics from its optimization. This keeps the code understandable and easier to optimize for different platforms. Simple transformations such as loop unrolling are already implemented in most mainstream compilers. We recently submitted a proposal to add generalized loop transformations to the OpenMP standard. We are also working on an implementation in LLVM/Clang/Polly to show its feasibility and usefulness. The current prototype allows applying patterns common to matrix-matrix multiplication optimizations. Index Terms: OpenMP, Pragma, Loop Transformation, C/C++, Clang, LLVM, Polly I. MOTIVATION Almost all processor time is spent in some kind of loop, and [...] Only #pragma unroll has broad support. #pragma ivdep made popular by icc and Cray to help vectorization is mimicked by other compilers as well, but with different interpretations of its meaning. However, no compiler allows applying multiple transformations on a single loop systematically. In addition to straightforward trial-and-error execution time optimization, code transformation pragmas can be useful for machine-learning assisted autotuning. The OpenMP approach is to make the programmer responsible for the semantic correctness of the transformation. This unfortunately makes it hard for an autotuner which only measures the timing difference without understanding the code. Such an autotuner would therefore likely suggest transformations that make the program return wrong results or crash.

Elimination of Memory-Based Dependences For

Elimination of Memory-Based Dependences for Loop-Nest Optimization and Parallelization: Evaluation of a Revised Violated Dependence Analysis Method on a Three-Address Code Polyhedral Compiler Konrad Trifunovic (1), Albert Cohen (1), Razya Ladelsky (2), and Feng Li (1) (1) INRIA Saclay - Île-de-France and LRI, Paris-Sud 11 University, Orsay, France falbert.cohen, konrad.trifunovic, [email protected] (2) IBM Haifa Research, Haifa, Israel [email protected] Abstract In order to preserve the legality of loop nest transformations and parallelization, data-dependences need to be analyzed. Memory dependences come in two varieties: they are either data-flow dependences or memory-based dependences. While data-flow dependences must be satisfied in order to preserve the correct order of computations, memory-based dependences are induced by the reuse of a single memory location to store multiple values. Memory-based dependences reduce the degrees of freedom for loop transformations and parallelization. While systematic array expansion techniques exist to remove all memory-based dependences, the overhead on memory footprint and the detrimental impact on register-level reuse can be catastrophic. Much care is needed when eliminating memory-based dependences, and this is particularly essential for polyhedral compilation on three-address code representation like the GRAPHITE pass of GCC. We propose and evaluate a technique allowing a compiler to ignore some memory-based dependences while checking for the legality of a given affine transformation. This technique does not involve any array expansion. When this technique is not sufficient to expose data parallelism, it can be used to compute the minimal set of scalars and arrays that should be privatized.

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result. Copyright 1999-2004, Standard Performance Evaluation Corporation. Sun Microsystems, Sun Java Workstation W1100z. SPECfp2000 = 1787, SPECfp_base2000 = 1637. SPEC license #: 6. Tested by: Sun Microsystems, Santa Clara. Test date: Jul-2004. Hardware Avail: Jul-2004. Software Avail: Jul-2004.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 168.wupwise | 1600 | 92.3 | 1733 | 71.8 | 2229 |
| 171.swim | 3100 | 134 | 2310 | 123 | 2519 |
| 172.mgrid | 1800 | 124 | 1447 | 103 | 1755 |
| 173.applu | 2100 | 150 | 1402 | 133 | 1579 |
| 177.mesa | 1400 | 76.1 | 1839 | 70.1 | 1998 |
| 178.galgel | 2900 | 104 | 2789 | 94.4 | 3073 |
| 179.art | 2600 | 146 | 1786 | 106 | 2464 |
| 183.equake | 1300 | 93.7 | 1387 | 89.1 | 1460 |
| 187.facerec | 1900 | 80.1 | 2373 | 80.1 | 2373 |
| 188.ammp | 2200 | 158 | 1397 | 154 | 1425 |
| 189.lucas | 2000 | 121 | 1649 | 122 | 1637 |
| 191.fma3d | 2100 | 135 | 1552 | 135 | 1552 |
| 200.sixtrack | 1100 | 148 | 742 | 148 | 742 |
| 301.apsi | 2600 | 170 | 1529 | 168 | 1549 |

Hardware: CPU: AMD Opteron (TM) 150; CPU MHz: 2400; FPU: Integrated; CPU(s) enabled: 1 core, 1 chip, 1 core/chip; CPU(s) orderable: 1; Parallel: No; Primary Cache: 64KBI + 64KBD on chip; Secondary Cache: 1024KB (I+D) on chip; L3 Cache: N/A; Other Cache: N/A; Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered; Disk Subsystem: IDE, 80GB, 7200RPM; Other Hardware: None.

Software: Operating System: Red Hat Enterprise Linux WS 3 (AMD64); Compiler: PathScale EKO Compiler Suite, Release 1.1; Red Hat gcc 3.5 ssa (from RHEL WS 3); PGI Fortran 5.2 (build 5.2-0E); AMD Core Math Library (Version 2.0) for AMD64; File System: Linux/ext3; System State: Multi-user, Run level 3.

Notes/Tuning Information: A two-pass compilation method is
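The figures in the result listing above can be cross-checked with a few lines of arithmetic. The sketch below assumes the usual SPEC CPU2000 convention that a benchmark's ratio is 100 times its reference time divided by its runtime, and that the overall metric is the geometric mean of the per-benchmark ratios. The function names `spec_ratio` and `geometric_mean` are illustrative and not part of any SPEC tool. Because the listing shows rounded runtimes, recomputed ratios match the published ones only approximately.

```python
from math import exp, log

# Base ratios copied from the CFP2000 listing above, in benchmark order.
BASE_RATIOS = [1733, 2310, 1447, 1402, 1839, 2789, 1786,
               1387, 2373, 1397, 1649, 1552, 742, 1529]


def spec_ratio(reference_time, runtime):
    """Assumed convention: ratio = 100 * reference time / measured runtime."""
    return 100.0 * reference_time / runtime


def geometric_mean(values):
    """Geometric mean computed via logarithms to avoid a huge product."""
    values = list(values)
    return exp(sum(log(v) for v in values) / len(values))


if __name__ == "__main__":
    # 168.wupwise: reference time 1600 s, base runtime 92.3 s, published ratio 1733.
    print(round(spec_ratio(1600, 92.3)))
    # Should land close to the published SPECfp_base2000 figure of 1637.
    print(round(geometric_mean(BASE_RATIOS)))
```

Running the same calculation on the second runtime/ratio column pair gives a comparable check against the SPECfp2000 figure.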

A General Compilation Algorithm to Parallelize and Optimize Counted Loops with Dynamic Data-Dependent Bounds Jie Zhao, Albert Cohen

A general compilation algorithm to parallelize and optimize counted loops with dynamic data-dependent bounds Jie Zhao, Albert Cohen To cite this version: Jie Zhao, Albert Cohen. A general compilation algorithm to parallelize and optimize counted loops with dynamic data-dependent bounds. IMPACT 2017 - 7th International Workshop on Polyhedral Compilation Techniques, Jan 2017, Stockholm, Sweden. pp.1-10. hal-01657608 HAL Id: hal-01657608 https://hal.inria.fr/hal-01657608 Submitted on 7 Dec 2017 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Jie Zhao, Albert Cohen, INRIA & DI, École Normale Supérieure, 45 rue d’Ulm, 75005 Paris fi[email protected] ABSTRACT We study the parallelizing compilation and loop nest optimization of an important class of programs where counted loops have a dynamically computed, data-dependent upper bound. Such loops are amenable to a wider set of trans- [...] iterating until a dynamically computed, data-dependent upper bound. Such bounds are loop invariants, but often recomputed in the immediate vicinity of the loop they control; for example, their definition may take place in the immediately enclosing loop.

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview
What are libraries for?
● Building blocks for writing scientific applications
● Historically – allowed the first forms of code re-use
● Later – became ways of running optimized code
● Today the complexity of the hardware is very high
● The Cray PE insulates users from this complexity
  • Cray module environment
  • CCE
  • Performance tools
  • Tuned MPI libraries (+PGAS)
  • Optimized Scientific libraries
Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort.
Scientific libraries on XC – functional view: FFT, Sparse, Dense; Trilinos, BLAS, FFTW, LAPACK, PETSc, ScaLAPACK, CRAFFT, CASK, IRT
What makes Cray libraries special
1. Node performance
● Highly tuned routines at the low-level (ex. BLAS)
2. Network performance
● Optimized for network performance
● Overlap between communication and computation
● Use the best available low-level mechanism
● Use adaptive parallel algorithms
3. Highly adaptive software
● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime
4. Productivity features
● Simple interfaces into complex software
LibSci usage
● LibSci
  ● The drivers should do it all for you – no need to explicitly link
  ● For threads, set OMP_NUM_THREADS
  ● Threading is used within LibSci
  ● If you call within a parallel region, single thread used
● FFTW
  ● module load fftw (there are also wisdom files available)
● PETSc
  ● module load petsc (or module load petsc-complex)
  ● Use as you would your normal PETSc build
● Trilinos

Introduction to Arm for Network Stack Developers

Introduction to Arm for network stack developers Pavel Shamis/Pasha, Principal Research Engineer, Mvapich User Group 2017, Columbus, OH © 2017 Arm Limited Outline • Arm Overview • HPC Software Stack • Porting on Arm • Evaluation Arm Overview An introduction to Arm Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990. Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instruction set architecture (ISA), such as “ARMv8-A”, or a specific implementation, such as “Cortex-A72”. Partners who license an ISA can create their own implementation, as long as it passes the compliance tests. …and our IP extends beyond the CPU. A partnership business model A business model that shares success • Everyone in the value chain benefits • Long term sustainability Design once and reuse is fundamental • Spread the cost amongst many partners • Technology reused across multiple applications • Creates market for ecosystem to target – Re-use is also fundamental to the ecosystem Upfront license fee • Covers the development cost Ongoing royalties • Typically based on a percentage of chip price [Diagram: licence fee and royalty flow between Arm (IP provider), SemiCo partner, and OEM customer]

Foundations of Scientific Research

2012 FOUNDATIONS OF SCIENTIFIC RESEARCH N. M. Glazunov National Aviation University 25.11.2012
CONTENTS
Preface
Introduction
1. General notions about scientific research (SR)
1.1. Scientific method
1.2. Basic research
1.3. Information supply of scientific research
2. Ontologies and upper ontologies
2.1. Concepts of Foundations of Research Activities
2.2. Ontology components
2.3. Ontology for the visualization of a lecture
3. Ontologies of object domains
3.1. Elements of the ontology of spaces and symmetries
3.1.1. Concepts of electrodynamics and classical gauge theory
4. Examples of Research Activity
4.1. Scientific activity in arithmetics, informatics and discrete mathematics
4.2. Algebra of logic and functions of the algebra of logic
4.3. Function of the algebra of logic
5. Some Notions of the Theory of Finite and Discrete Sets
6. Algebraic Operations and Algebraic Structures
7. Elements of the Theory of Graphs and Nets
8. Scientific activity on the example “Information and its investigation”
9. Scientific research in Artificial Intelligence
10. Compilers and compilation
11. Objective, Concepts and History of Computer security
12. Methodological and categorical apparatus of scientific research
13. Methodology and methods of scientific research
13.1. Methods of theoretical level of research
13.1.1. Induction
13.1.2. Deduction
13.2. Methods of empirical level of research
14. Scientific idea and significance of scientific research
15. Forms of scientific knowledge organization and principles of SR
15.1. Forms of scientific knowledge
15.2.

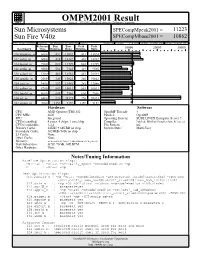

Sun Microsystems

OMPM2001 Result. Copyright 1999-2002, Standard Performance Evaluation Corporation. Sun Microsystems, Sun Fire V40z. SPECompMpeak2001 = 11223, SPECompMbase2001 = 10862. SPEC license #: HPG0010. Tested by: Sun Microsystems, Santa Clara. Test site: Menlo Park. Test date: Feb-2005. Hardware Avail: Apr-2005. Software Avail: Apr-2005.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 310.wupwise_m | 6000 | 432 | 13882 | 431 | 13924 |
| 312.swim_m | 6000 | 424 | 14142 | 401 | 14962 |
| 314.mgrid_m | 7300 | 622 | 11729 | 622 | 11729 |
| 316.applu_m | 4000 | 536 | 7463 | 419 | 9540 |
| 318.galgel_m | 5100 | 483 | 10561 | 481 | 10593 |
| 320.equake_m | 2600 | 240 | 10822 | 240 | 10822 |
| 324.apsi_m | 3400 | 297 | 11442 | 282 | 12046 |
| 326.gafort_m | 8700 | 869 | 10011 | 869 | 10011 |
| 328.fma3d_m | 4600 | 608 | 7566 | 608 | 7566 |
| 330.art_m | 6400 | 226 | 28323 | 226 | 28323 |
| 332.ammp_m | 7000 | 1359 | 5152 | 1359 | 5152 |

Hardware: CPU: AMD Opteron (TM) 852; CPU MHz: 2600; FPU: Integrated; CPU(s) enabled: 4 cores, 4 chips, 1 core/chip; CPU(s) orderable: 1,2,4; Primary Cache: 64KBI + 64KBD on chip; Secondary Cache: 1024KB (I+D) on chip; L3 Cache: None; Other Cache: None; Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered); Disk Subsystem: SCSI, 73GB, 10K RPM; Other Hardware: None.

Software: OpenMP Threads: 4; Parallel: OpenMP; Operating System: SUSE LINUX Enterprise Server 9; Compiler: PathScale EKOPath Compiler Suite, Release 2.1; File System: ufs; System State: Multi-User.

Notes/Tuning Information: Baseline Optimization Flags: Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp; C : -Ofast -mp. Peak Optimization Flags: 310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3

Best Practice Guide - Generic X86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013

Best Practice Guide - Generic x86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013
Table of Contents
1. Introduction
2. x86 - Basic Properties
2.1. Basic Properties
2.2. Simultaneous Multithreading
3. Programming Environment
3.1. Modules
3.2. Compiling
3.2.1. Compilers
3.2.2. General Compiler Flags
3.2.2.1. GCC
3.2.2.2. Intel

Pathscale ENZO - 2011-04-15 by Vincent - Streamcomputing

PathScale ENZO - 2011-04-15 by vincent - StreamComputing - http://www.streamcomputing.eu PathScale ENZO by vincent – Friday, 15 April 2011 http://www.streamcomputing.eu/blog/2011-04-15/pathscale-enzo/ My todo-list gets too large, because there seem to be so many things going on in the GPGPU world. Therefore the following article is not really complete, but I hope it gives you an idea of the product. ENZO was presented as the alternative to CUDA and OpenCL. In that light I compared it to Intel Array Building Blocks a few weeks ago, although the two techniques are not technically comparable. PathScale’s CTO mailed me and tried to explain what ENZO really is. This article consists mainly of what he (Mr. C. Bergström) told me. Any questions you have, I will make sure he receives them. ENZO ENZO is a complete GPGPU solution and ecosystem of tools that provide full support for NVIDIA Tesla (kernel driver, runtime, assembler, code generation, front-end programming model and various other things to make a developer’s life easier). Right now it supports the HMPP C/Fortran programming model. HMPP is an open standard jointly developed by CAPS and PathScale. I’ve mentioned HMPP before, as it can translate Fortran and C code to OpenCL, CUDA and other languages. ENZO’s implementation differs from HMPP by using native front-ends and generating hardware-optimised code. You can learn ENZO in 5 minutes if you’ve done any OpenMP-like programming in the past. For example the Fortran code (sorry for the missing indenting): !$hmpp simple codelet, target=TESLA1

Polyhedral-Model Guided Loop-Nest Auto-Vectorization Konrad Trifunović, Dorit Nuzman, Albert Cohen, Ayal Zaks, Ira Rosen

Polyhedral-Model Guided Loop-Nest Auto-Vectorization Konrad Trifunović, Dorit Nuzman, Albert Cohen, Ayal Zaks, Ira Rosen To cite this version: Konrad Trifunović, Dorit Nuzman, Albert Cohen, Ayal Zaks, Ira Rosen. Polyhedral-Model Guided Loop-Nest Auto-Vectorization. The 18th International Conference on Parallel Architectures and Compilation Techniques, Sep 2009, Raleigh, United States. hal-00645325 HAL Id: hal-00645325 https://hal.inria.fr/hal-00645325 Submitted on 27 Nov 2011 Konrad Trifunovic †, Dorit Nuzman ∗, Albert Cohen †, Ayal Zaks ∗ and Ira Rosen ∗ ∗IBM Haifa Research Lab, {dorit, zaks, irar}@il.ibm.com †INRIA Saclay, {konrad.trifunovic, albert.cohen}@inria.fr Abstract: Optimizing compilers apply numerous interdependent optimizations, leading to the notoriously difficult phase-ordering problem: that of deciding which transformations to apply and in which order. Fortunately, new infrastructures such as the polyhedral compilation framework host a variety of transformations, facilitating the efficient exploration and configuration of multiple transformation sequences. Modern architectures must exploit multiple forms of parallelism provided by platforms while using the memory hierarchy efficiently. Systematic solutions to harness the interplay of multi-level parallelism and locality are emerging, by advances in automatic parallelization and loop nest