Enea® Blackbox Recorder

Recommended publications

A Practical UNIX Capability System

A Practical UNIX Capability System Adam Langley <[email protected]> 22nd June 2005 Abstract This report seeks to document the development of a capability security system based on a Linux kernel and to follow through the implications of such a system. After defining terms, several other capability systems are discussed and found to be excellent, but to have too high a barrier to entry. This motivates the development of the above system. The capability system decomposes traditionally monolithic applications into a number of communicating actors, each of which is a separate process. Actors may only communicate using the capabilities given to them and so the impact of a vulnerability in a given actor can be reasoned about. This design pattern is demonstrated to be advantageous in terms of security, comprehensibility and modularity and with an acceptable performance penalty. From this, following through a few of the further avenues which present themselves is the two hours traffic of our stage. Acknowledgments I would like to thank my supervisor, Dr Kelly, for all the time he has put into cajoling and persuading me that the rest of the world might have a trick or two worth learning. Also, I’d like to thank Bryce Wilcox-O’Hearn for introducing me to capabilities many years ago. Contents: 1 Introduction; 2 Terms; 2.1 POSIX ‘Capabilities’; 2.2 Password Capabilities; 3 Motivations; 3.1 Ambient Authority; 3.2 Confused Deputy; 3.3 Pervasive Testing; 3.4 Clear Auditing of Vulnerabilities; 3.5 Easy Configurability -

Beej's Guide to Unix IPC

Beej's Guide to Unix IPC Brian “Beej Jorgensen” Hall [email protected] Version 1.1.3 December 1, 2015 Copyright © 2015 Brian “Beej Jorgensen” Hall This guide is written in XML using the vim editor on a Slackware Linux box loaded with GNU tools. The cover “art” and diagrams are produced with Inkscape. The XML is converted into HTML and XSL-FO by custom Python scripts. The XSL-FO output is then munged by Apache FOP to produce PDF documents, using Liberation fonts. The toolchain is composed of 100% Free and Open Source Software. Unless otherwise mutually agreed by the parties in writing, the author offers the work as-is and makes no representations or warranties of any kind concerning the work, express, implied, statutory or otherwise, including, without limitation, warranties of title, merchantability, fitness for a particular purpose, noninfringement, or the absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not discoverable. Except to the extent required by applicable law, in no event will the author be liable to you on any legal theory for any special, incidental, consequential, punitive or exemplary damages arising out of the use of the work, even if the author has been advised of the possibility of such damages. This document is freely distributable under the terms of the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 License. See the Copyright and Distribution section for details. Contents: 1. Intro; 1.1. Audience; 1.2. Platform and Compiler; 1.3. -

An Introduction to Linux IPC

An introduction to Linux IPC Michael Kerrisk © 2013 linux.conf.au 2013, Canberra, Australia, 2013-01-30 http://man7.org/ http://lwn.net/ [email protected] [email protected] Goal ● Limited time! ● Get a flavor of main IPC methods Me ● Programming on UNIX & Linux since 1987 ● Linux man-pages maintainer ● http://www.kernel.org/doc/man-pages/ ● Kernel + glibc API ● Author of: Further info: http://man7.org/tlpi/ You ● Can read a bit of C ● Have a passing familiarity with common syscalls ● fork(), open(), read(), write() There’s a lot of IPC ● Pipes ● FIFOs ● Pseudoterminals ● Sockets – Stream vs Datagram (vs Seq. packet) – UNIX vs Internet domain ● POSIX message queues ● POSIX shared memory ● POSIX semaphores – Named, Unnamed ● System V message queues ● System V shared memory ● System V semaphores ● Shared memory mappings – File vs Anonymous ● Cross-memory attach – process_vm_readv() / process_vm_writev() ● Signals – Standard, Realtime ● Eventfd ● Futexes ● Record locks ● File locks ● Mutexes ● Condition variables ● Barriers ● Read-write locks It helps to classify -

Linux and Free Software: What and Why?

Linux and Free Software: What and Why? (What are Linux and Free Software, and how does their use help companies achieve economic productivity and technical advantages?) Jugo Creativo Michael Kerrisk Universidad de Santander UDES © 2012 Bucaramanga, Colombia [email protected] 7 June 2012 http://man7.org/ Who am I? ● Programmer, educator, and writer ● UNIX since 1987; Linux since late 1990s ● Linux man-pages maintainer since 2004 ● Author of a book on Linux programming Overview ● What is Linux? ● How are Linux and Free Software created? ● History ● Where is Linux used today? ● What is Free Software? ● Source code; Software licensing ● Importance and advantages of Free Software and Software Freedom ● Concluding remarks What is Linux? ● An operating system (sistema operativo) ● (Operating System = OS) ● Examples of other operating systems: ● Windows ● Mac OS X [Image caption: Penguins are the Linux mascot] But, what's an operating system? ● Two definitions: ● Kernel ● Kernel + package of common programs OS Definition 1: Kernel ● Computer scientists' definition: ● Operating System = Kernel (núcleo) ● Kernel = fundamental program on which all other programs depend Programs can live -

Linux Kernel and Driver Development Training Slides

Linux Kernel and Driver Development Training © Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Latest update: October 9, 2021. Document updates and sources: https://bootlin.com/doc/training/linux-kernel Corrections, suggestions, contributions and translations are welcome! Send them to [email protected] Bootlin: embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com Rights to copy © Copyright 2004-2021, Bootlin License: Creative Commons Attribution - Share Alike 3.0 https://creativecommons.org/licenses/by-sa/3.0/legalcode You are free: ● to copy, distribute, display, and perform the work ● to make derivative works ● to make commercial use of the work Under the following conditions: ● Attribution. You must give the original author credit. ● Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. ● For any reuse or distribution, you must make clear to others the license terms of this work. ● Any of these conditions can be waived if you get permission from the copyright holder. Your fair use and other rights are in no way affected by the above. Document sources: https://github.com/bootlin/training-materials/ Hyperlinks in the document There are many hyperlinks in the document ● Regular hyperlinks: https://kernel.org/ ● Kernel documentation links: dev-tools/kasan ● Links to kernel source files and directories: drivers/input/ include/linux/fb.h ● Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations Company at a glance ● Engineering company created in 2004, named “Free Electrons” until Feb. -

Sockets in the Kernel

Linux Kernel Networking – advanced topics (6) Sockets in the kernel Rami Rosen [email protected] Haifux, August 2009 www.haifux.org All rights reserved. ● Note: This lecture is a sequel to the following 5 lectures I gave in Haifux: 1) Linux Kernel Networking lecture – http://www.haifux.org/lectures/172/ – Slides: http://www.haifux.org/lectures/172/netLec.pdf 2) Advanced Linux Kernel Networking - Neighboring Subsystem and IPSec lecture – http://www.haifux.org/lectures/180/ – Slides: http://www.haifux.org/lectures/180/netLec2.pdf 3) Advanced Linux Kernel Networking - IPv6 in the Linux Kernel lecture – http://www.haifux.org/lectures/187/ – Slides: http://www.haifux.org/lectures/187/netLec3.pdf 4) Wireless in Linux – http://www.haifux.org/lectures/206/ – Slides: http://www.haifux.org/lectures/206/wirelessLec.pdf 5) Sockets in the Linux Kernel – http://www.haifux.org/lectures/217/ – Slides: http://www.haifux.org/lectures/217/netLec5.pdf ● Note: This is the second part of the “Sockets in the Linux Kernel” lecture, which was given in Haifux on 27.7.09. You may find some background material for this lecture in its slides: http://www.haifux.org/lectures/217/netLec5.pdf ● TOC: – RAW Sockets – UNIX Domain Sockets – Netlink sockets – SCTP sockets – Appendices ● Note: All code examples in this lecture refer to the recent 2.6.30 version of the Linux kernel. RAW Sockets ● There are cases when there is no interface to create sockets of a certain protocol (ICMP protocol, NETLINK protocol) => use Raw sockets. -

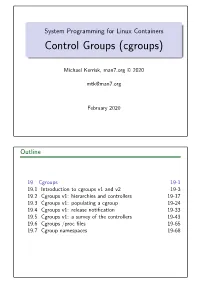

Control Groups (Cgroups)

System Programming for Linux Containers Control Groups (cgroups) Michael Kerrisk, man7.org © 2020 [email protected] February 2020 Outline: 19 Cgroups; 19.1 Introduction to cgroups v1 and v2; 19.2 Cgroups v1: hierarchies and controllers; 19.3 Cgroups v1: populating a cgroup; 19.4 Cgroups v1: release notification; 19.5 Cgroups v1: a survey of the controllers; 19.6 Cgroups /proc files; 19.7 Cgroup namespaces. Goals: Cgroups is a big topic (many controllers; v1 versus v2 interfaces). Our goal: understand fundamental semantics of cgroup filesystem and interfaces. Useful from a programming perspective: How do I build container frameworks? What else can I build with cgroups? And useful from a system engineering perspective: What’s going on underneath my container’s hood? Focus: We’ll focus on: general principles of operation; goals of cgroups; the cgroup filesystem; interacting with the cgroup filesystem using shell commands; problems with cgroups v1, motivations for cgroups v2; differences between cgroups v1 and v2. We’ll look briefly at some of the controllers. -

Flatpak a Desktop Version of Containers

Flatpak a desktop version of containers Alexander Larsson, Red Hat What is Flatpak? A distribution-independent, Linux-based application distribution and deployment mechanism for desktop applications distribution-independent ● run on any distribution ● build on any distribution ● Any version of the distribution Linux-based ● Flatpak runs only on Linux ● Uses linux-specific features ● However, needs to run on older kernel ● Current minimum target – RHEL 7 – Ubuntu 16.04 (Xenial) – Debian 9 (Stretch) Distribution mechanism ● Built in support for install ● Built in support for updates ● Anyone can set up a repository Deployment mechanism ● Run apps in a controlled environment – “container” ● Sandbox for improved security – Default sandbox is very limited – Apps can ask for more permissions Desktop application ● Focus on GUI apps ● No root permissions ● Automatically integrates with desktop ● App lifetimes are ad-hoc and transient ● Nothing assumes a “sysadmin” being available How is flatpak different from containers Filesystem layout Docker requirements ● Examples: – REST API micro-service – Website back-end ● Few dependencies, all hand-picked ● Runs as a daemon user ● Writes to nonstandard locations in file-system ● Not a lot of integration with host – DNS – Port forwarding – Volumes for data ● No access to host filesystem ● Updates are managed Docker layout ● One image for the whole fs – Bring your own dependencies – Layout up to each app ● Independent of host filesystem layout Flatpak requirements ● Examples – Firefox – Spotify – gedit -

Open Source Software

OPEN SOURCE SOFTWARE Product AVD9x0x0-0010 Firmware and version 3.4.57 Notes on Open Source software The TCS device uses Open Source software that has been released under specific licensing requirements such as the “General Public License” (GPL) Version 2 or 3, the “Lesser General Public License” (LGPL), the “Apache License” or similar licenses. If any accompanying material such as instruction manuals, handbooks etc. contains copyright notes, conditions of use or licensing requirements that contradict any applicable Open Source license, these conditions are inapplicable. The use and distribution of any Open Source software used in the product is exclusively governed by the respective Open Source license. The programmers provided their software without ANY WARRANTY, whether implied or expressed, of any fitness for a particular purpose. Further, the programmers DECLINE ALL LIABILITY for damages, direct or indirect, that result from using this software. This disclaimer does not affect the responsibility of TCS. AVAILABILITY OF SOURCE CODE: Where the applicable license entitles you to the source code of such software and/or other additional data, you may obtain it for a period of three years after purchasing this product, and, if required by the license conditions, for as long as we offer customer support for the device. If this document is available at the website of TCS and TCS offers a download of the firmware, the offer to receive the source code is valid for a period of three years and, if required by the license conditions, for as long as TCS offers customer support for the device. TCS provides an option for obtaining the source code: You may obtain any version of the source code that has been distributed by TCS for the cost of reproduction of the physical copy. -

Greg Kroah-Hartman [email protected] Github.Com/Gregkh/Presentation-Kdbus

kdbus IPC for the modern world Greg Kroah-Hartman [email protected] github.com/gregkh/presentation-kdbus Interprocess Communication ● signal ● synchronization ● communication [Diagram: signal facilities: standard signals, realtime signals. Source: The Linux Programming Interface, Michael Kerrisk, page 878] [Diagram: synchronization facilities: semaphore (POSIX named and unnamed, System V), futex, eventfd, file lock (“record” lock, file lock), threads (mutex, condition variables, barrier, read/write lock). Source: The Linux Programming Interface, Michael Kerrisk, page 878] [Diagram: communication facilities: data transfer (pipe, FIFO, stream socket, pseudoterminal; message: POSIX message queue, System V message queue) and shared memory (System V shared memory, POSIX shared memory, memory mapping: anonymous mapping, mapped file). Source: The Linux Programming Interface, Michael Kerrisk, page 878] Android ● ashmem ● pmem ● binder ashmem ● POSIX shared memory for the lazy ● Uses virtual memory ● Can discard segments under pressure ● Unknown future pmem ● shares memory between kernel and user ● uses physically contiguous memory ● GPUs ● Unknown future binder ● IPC bus for Android system ● Like D-Bus, but “different” ● Came from system without SysV types ● Works on object / message level ● Needs large userspace library ● File descriptor passing ● Used for Intents and application separation ● Good for small messages ● Not for streams of data ● NEVER use outside an Android system QNX message passing ● Tight coupling to microkernel ● Send message and control, to another process ● Used to build complex messages -

Analyzing a Decade of Linux System Calls

Analyzing a Decade of Linux System Calls Mojtaba Bagherzadeh · Nafiseh Kahani · Cor-Paul Bezemer · Ahmed E. Hassan · Juergen Dingel · James R. Cordy Abstract Over the past 25 years, thousands of developers have contributed more than 18 million lines of code (LOC) to the Linux kernel. As the Linux kernel forms the central part of various operating systems that are used by millions of users, the kernel must be continuously adapted to changing demands and expectations of these users. The Linux kernel provides its services to an application through system calls. The set of all system calls combined forms the essential Application Programming Interface (API) through which an application interacts with the kernel. In this paper, we conduct an empirical study of the 8,770 changes that were made to Linux system calls during the last decade (i.e., from April 2005 to December 2014). In particular, we study the size of the changes, and we manually identify the type of changes and bug fixes that were made. Our analysis provides an overview of the evolution of the Linux system calls over the last decade. We find that there was a considerable amount of technical debt in the kernel, which was addressed by adding a number of sibling calls (i.e., 26% of all system calls). In addition, we find that by far, the ptrace() and signal handling system calls are the most difficult to maintain and fix. Our study can be used by developers who want to improve the design and ensure the successful evolution of their own kernel APIs. -

Systems Programming, Lecture 9: UNIX Domain Sockets

Systems Programming, Lecture 9: UNIX Domain Sockets Stefano Zacchiroli [email protected] Laboratoire PPS, Université Paris Diderot 2013–2014 URL http://upsilon.cc/zack/teaching/1314/progsyst/ Copyright © 2013 Stefano Zacchiroli License Creative Commons Attribution-ShareAlike 4.0 International License http://creativecommons.org/licenses/by-sa/4.0/deed.en_US Outline 1 Sockets 2 Stream sockets 3 UNIX domain sockets 4 Datagram sockets Sockets Sockets are IPC objects that allow data to be exchanged between processes running either on the same machine (host) or on different ones over a network. The UNIX socket API first appeared in 1983 with BSD 4.2. It was first standardized in POSIX.1g (2000), but has been ubiquitous in every UNIX implementation since the 80s. Disclaimer The socket API is best discussed in a network programming course, which this one is not. We will only address enough general socket concepts to describe how to use a specific socket family: UNIX domain sockets. Client-server setup Let’s consider a typical client-server application scenario, no matter whether the two applications are located on the same or different hosts. Sockets are used as follows: ● each application: create a socket – idea: communication between the two applications will flow through an imaginary “pipe” that will connect the two sockets together ● server: bind its socket to a well-known address – we have done the same to set up rendez-vous points for other IPC objects, e.g.