The Perception of Natural, Cell Phone, and Computer-Synthesized Speech During the Performance of Simultaneous Visual-Motor Tasks

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Download Article (PDF)

Libri. Phonetica 1999;56:105–107. Winifred Strange: Speech Perception and Linguistic Experience: Issues in Cross-Language Research. York Press, Timonium, 1995. 492 pp.; $ 59. ISBN 0–912752–36–X. How is speech perception shaped by experience with our native language and by exposure to subsequent languages? This is a central research question in language perception, which emphasizes the importance of crosslinguistic studies. Speech Perception and Linguistic Experience: Issues in Cross-Language Research contains the contributions to a Workshop in Cross-Language Perception held at the University of South Florida, Tampa, Fla., USA, in May 1992. This text may be said to represent the first compilation strictly focused on theoretical and methodological issues of cross-language perception research.

Part I, ‘Introduction’, includes a single chapter written by the editor. It is an excellent historical review of cross-language studies in speech perception providing a clear conceptual framework of the topic. Strange presents a selective history of research that highlights the main theoretical themes and methodological paradigms. It begins with a brief description of the basic phenomena that are the starting points for the investigation: the constancy problem and the question of units of analysis in speech perception. Next, the author presents the principal theories, methods, findings and limitations in early cross-language research, focused on categorical perception as the dominant paradigm in the study of adult and infant perception in the 1960s and 1970s. Finally, Strange reviews the most important findings and conclusions in recent cross-language research (1980s and early 1990s), which yielded a large amount of new information from a wider ...

Relations Between Speech Production and Speech Perception: Some Behavioral and Neurological Observations

14 Relations between Speech Production and Speech Perception: Some Behavioral and Neurological Observations. Willem J. M. Levelt. One Agent, Two Modalities. There is a famous book that never appeared: Bever and Weksel (shelved). It contained chapters by several young Turks in the budding new psycholinguistics community of the mid-1960s. Jacques Mehler’s chapter (coauthored with Harris Savin) was entitled “Language Users.” A normal language user “is capable of producing and understanding an infinite number of sentences that he has never heard before. The central problem for the psychologist studying language is to explain this fact—to describe the abilities that underlie this infinity of possible performances and state precisely how these abilities, together with the various details ... of a given situation, determine any particular performance.” There is no hesitation here about the psycholinguist’s core business: it is to explain our abilities to produce and to understand language. Indeed, the chapter’s purpose was to review the available research findings on these abilities and it contains, correspondingly, a section on the listener and another section on the speaker. This balance was quickly lost in the further history of psycholinguistics. With the happy and important exceptions of speech error and speech pausing research, the study of language use was factually reduced to studying language understanding. For example, Philip Johnson-Laird opened his review of experimental psycholinguistics in the 1974 Annual Review of Psychology with the statement: “The fundamental problem of psycholinguistics is simple to formulate: what happens if we understand sentences?” And he added, “Most of the other problems would be half way solved if only we had the answer to this question.” One major other ...

Colored-Speech Synaesthesia Is Triggered by Multisensory, Not Unisensory, Perception

PSYCHOLOGICAL SCIENCE. Research Report. Colored-Speech Synaesthesia Is Triggered by Multisensory, Not Unisensory, Perception. Gary Bargary (1,2,3), Kylie J. Barnett (1,2,3), Kevin J. Mitchell (2,3), and Fiona N. Newell (1,2). (1) School of Psychology, (2) Institute of Neuroscience, and (3) Smurfit Institute of Genetics, Trinity College Dublin.

ABSTRACT—Although it is estimated that as many as 4% of people experience some form of enhanced cross talk between (or within) the senses, known as synaesthesia, very little is understood about the level of information processing required to induce a synaesthetic experience. In work presented here, we used a well-known multisensory illusion called the McGurk effect to show that synaesthesia is driven by late, perceptual processing, rather than early, unisensory processing. Specifically, we tested 9 linguistic-color synaesthetes and found that the colors induced by spoken words are related to what is perceived (i.e., the illusory combination of audio and visual inputs) and not to the auditory component alone.

... sistent terminology reflects an underlying lack of understanding about the amount of information processing required for synaesthesia to be induced. For example, several studies have found that synaesthesia can occur very rapidly (Palmeri, Blake, Marois, & Whetsell, 2002; Ramachandran & Hubbard, 2001; Smilek, Dixon, Cudahy, & Merikle, 2001) and is sensitive to changes in low-level properties of the inducing stimulus, such as contrast (Hubbard, Manoha, & Ramachandran, 2006) or font (Witthoft & Winawer, 2006). These findings suggest that synaesthesia is an automatic association driven by early, unisensory input. However, attention, semantic information, and feature-binding processes (Dixon, Smilek, Duffy, Zanna, & Merikle, ...

Perception and Awareness in Phonological Processing: the Case of the Phoneme

Cognition, 50 (1994) 287-297. DOI: 10.1016/0010-0277(94)90032-9. 0010-0277/94/$07.00 © 1994 - Elsevier Science B.V. All rights reserved. Perception and awareness in phonological processing: the case of the phoneme. José Morais*, Régine Kolinsky. Laboratoire de Psychologie expérimentale, Université Libre de Bruxelles, Av. Ad. Buyl 117, B-1050 Bruxelles, Belgium. Abstract: The necessity of a “levels-of-processing” approach in the study of mental representations is illustrated by the work on the psychological reality of the phoneme. On the basis of both experimental studies of human behavior and functional imaging data, it is argued that there are unconscious representations of phonemes in addition to conscious ones. These two sets of mental representations are functionally distinct: the former intervene in speech perception and (presumably) production; the latter are developed in the context of learning alphabetic literacy for both reading and writing purposes. Moreover, among phonological units and properties, phonemes may be the only ones to present a neural dissociation at the macro-anatomic level. Finally, it is argued that even if the representations used in speech perception and those used in assembling and in conscious operations are distinct, they may entertain dependency relations.

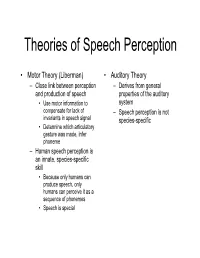

Theories of Speech Perception

• Motor Theory (Liberman)
– Close link between perception and production of speech
  • Use motor information to compensate for lack of invariants in speech signal
  • Determine which articulatory gesture was made, infer phoneme
– Human speech perception is an innate, species-specific skill
  • Because only humans can produce speech, only humans can perceive it as a sequence of phonemes
  • Speech is special
• Auditory Theory
– Derives from general properties of the auditory system
– Speech perception is not species-specific

Wilson & friends, 2004
• Perception: /pa/, /gi/, bell, burst of white noise
• Production: /pa/, /gi/, tap alternate thumbs

Wilson et al., 2004
• Black areas are premotor and primary motor cortex activated when subjects produced the syllables
• White arrows indicate central sulcus
• Orange represents areas activated by listening to speech
• Extensive activation in superior temporal gyrus
• Activation in motor areas involved in speech production (!)

Is categorical perception innate?
Manipulate VOT, monitor sucking. 4-month-old infants: Eimas et al. (1971). Conditions: 20 ms (Different Sides), 20 ms (Same Side), 0 ms (Control).

Is categorical perception species specific?
• Chinchillas exhibit categorical perception as well

Chinchilla experiment (Kuhl & Miller experiment)
“ba…ba…ba…ba…” “pa…pa…pa…pa…”
• Train on end-point “ba” (good), “pa” (bad)
• Test on intermediate stimuli
• Results:
– Chinchillas switched over from staying to running at about the same location as the English b/p ...

Effects of Language on Visual Perception

Effects of Language on Visual Perception. Gary Lupyan (University of Wisconsin-Madison), Rasha Abdel Rahman (Humboldt-Universität zu Berlin), Lera Boroditsky (University of California San Diego), Andy Clark (University of Sussex). Preprint submitted to Trends in Cognitive Sciences, August 22, 2020.

Abstract: Does language change what we perceive? Does speaking different languages cause us to perceive things differently? We review the behavioral and electrophysiological evidence for the influence of language on perception, with an emphasis on the visual modality. Effects of language on perception can be observed both in higher-level processes such as recognition, and in lower-level processes such as discrimination and detection. A consistent finding is that language causes us to perceive in a more categorical way. Rather than being fringe or exotic, as they are sometimes portrayed, we discuss how effects of language on perception naturally arise from the interactive and predictive nature of perception. Keywords: language; perception; vision; categorization; top-down effects; prediction.

“Even comparatively simple acts of perception are very much more at the mercy of the social patterns called words than we might suppose.” [1]. “No matter how influential language might be, it would seem preposterous to a physiologist that it could reach down into the retina and rewire the ganglion cells” [2].

Language as a form of experience that affects perception. What factors influence how we perceive the world? For example, what makes it possible to recognize the object in Fig. 1a? Or to locate the ‘target’ in Fig. 1b? Where is the head of the bird in Fig. ...

How Infant Speech Perception Contributes to Language Acquisition

Language and Linguistics Compass 2/6 (2008): 1149–1170, 10.1111/j.1749-818x.2008.00089.x. How Infant Speech Perception Contributes to Language Acquisition. Judit Gervain* and Janet F. Werker, University of British Columbia.

Abstract: Perceiving the acoustic signal as a sequence of meaningful linguistic representations is a challenging task, which infants seem to accomplish effortlessly, despite the fact that they do not have a fully developed knowledge of language. The present article takes an integrative approach to infant speech perception, emphasizing how young learners’ perception of speech helps them acquire abstract structural properties of language. We introduce what is known about infants’ perception of language at birth. Then, we will discuss how perception develops during the first 2 years of life and describe some general perceptual mechanisms whose importance for speech perception and language acquisition has recently been established. To conclude, we discuss the implications of these empirical findings for language acquisition.

1. Introduction. As part of our everyday life, we routinely interact with children and adults, women and men, as well as speakers using a dialect different from our own. We might talk to them face-to-face or on the phone, in a quiet room or on a busy street. Although the speech signal we receive in these situations can be physically very different (e.g., men have a lower-pitched voice than women or children), we usually have little difficulty understanding what our interlocutors say. Yet, this is no easy task, because the mapping from the acoustic signal to the sounds or words of a language is not straightforward.

Speech Perception

UC Berkeley Phonology Lab Annual Report (2010). Chapter 5 (of Acoustic and Auditory Phonetics, 3rd Edition - in press): Speech perception.

When you listen to someone speaking you generally focus on understanding their meaning. One famous (in linguistics) way of saying this is that "we speak in order to be heard, in order to be understood" (Jakobson, Fant & Halle, 1952). Our drive, as listeners, to understand the talker leads us to focus on getting the words being said, and not so much on exactly how they are pronounced. But sometimes a pronunciation will jump out at you - somebody says a familiar word in an unfamiliar way and you just have to ask - "is that how you say that?" When we listen to the phonetics of speech - to how the words sound and not just what they mean - we as listeners are engaged in speech perception. In speech perception, listeners focus attention on the sounds of speech and notice phonetic details about pronunciation that are often not noticed at all in normal speech communication. For example, listeners will often not hear, or not seem to hear, a speech error or deliberate mispronunciation in ordinary conversation, but will notice those same errors when instructed to listen for mispronunciations (see Cole, 1973).

Sidebar: Testing mispronunciation detection. As you go about your daily routine, try mispronouncing a word every now and then to see if the people you are talking to will notice. For instance, if the conversation is about a biology class you could pronounce it "biolochi". After saying it this way a time or two you could tell your friend about your little experiment and ask if they noticed any mispronounced words.

Vowel Perception and Production in Adolescents with Reading Disabilities

Vowel Perception and Production in Adolescents with Reading Disabilities. Carol Bertucci (Lexington Public Schools, Lexington, Massachusetts); Pamela Hook and Charles Haynes (MGH Institute of Health Professions, Boston, Massachusetts); Paul Macaruso (Community College of Rhode Island and Haskins Laboratories, Providence, Rhode Island and New Haven, Connecticut); Corine Bickley (Massachusetts Institute of Technology, Cambridge, Massachusetts). Annals of Dyslexia, Vol. 53, 2003. Copyright © 2003 by The International Dyslexia Association®. ISSN 0736-9387.

Perception and production of the vowels /ɪ/, /ɛ/, /æ/ in the words pit, pet and pat were investigated in two groups of adolescents who differed significantly on measures of reading and phonological awareness. Perception performance was assessed using slope measurements between vowel categories. Duration measurements and a rating system based on first and second formant (F1 and F2) values were used to analyze production performance. As a group, the students with reading disabilities not only perceived but also produced less well-defined vowel categories than the control group of age-matched good readers. Perception and production performance, however, were not correlated. Results suggest that the speech processing difficulties of the students with reading disabilities include weak phonological coding for vowel sounds with similar phonetic characteristics. The implications of these findings for intervention are addressed.

Learning to read an alphabetic writing system such as English involves establishing sound-symbol correspondences. Although it is not clear exactly how this process takes place, it presumably involves several factors: for example, the ability to perceive and discriminate speech sounds, the capacity to form and store categories of speech sounds, and the ability to link these categories with specific orthographic symbols.
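The excerpt above does not say how the slope between vowel categories was computed. As a rough illustration only, one common approach is to fit a logistic identification function to the proportion of responses at each step of a vowel continuum and read off its slope: a steeper slope corresponds to a more sharply defined category boundary. The 7-step continuum, the response proportions, and the function names below are all hypothetical.

```python
import numpy as np

def logistic(x, k, x0):
    """Identification function: proportion of 'category B' responses at step x."""
    return 1 / (1 + np.exp(-k * (x - x0)))

def fit_identification(steps, prop_b):
    """Estimate slope k and boundary x0 with a straight-line fit on the logit scale."""
    p = np.clip(prop_b, 0.01, 0.99)      # keep the logit finite when p is 0 or 1
    logit = np.log(p / (1 - p))
    k, intercept = np.polyfit(steps, logit, 1)
    return k, -intercept / k             # slope, category boundary

# Hypothetical 7-step continuum (e.g. from "pit" to "pet"); not data from the study
steps = np.arange(1, 8)
sharp = logistic(steps, 1.5, 4.0)        # well-defined categories
shallow = logistic(steps, 0.6, 4.0)      # less well-defined categories

k_sharp, x0_sharp = fit_identification(steps, sharp)
k_shallow, x0_shallow = fit_identification(steps, shallow)
print(f"sharp:   slope={k_sharp:.2f}, boundary={x0_sharp:.2f}")
print(f"shallow: slope={k_shallow:.2f}, boundary={x0_shallow:.2f}")
```

Under this sketch, a listener group with less well-defined vowel categories would show the smaller slope, while the boundary location could stay the same.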

Anatomical Evidence of an Indirect Pathway for Word Repetition

Published Ahead of Print on January 29, 2020 as 10.1212/WNL.0000000000008746. ARTICLE, OPEN ACCESS. Anatomical evidence of an indirect pathway for word repetition. Stephanie J. Forkel, PhD, Emily Rogalski, PhD, Niki Drossinos Sancho, MSc, Lucio D’Anna, MD, PhD, Pedro Luque Laguna, PhD, Jaiashre Sridhar, MSc, Flavio Dell’Acqua, PhD, Sandra Weintraub, PhD, Cynthia Thompson, PhD, M.-Marsel Mesulam, MD, and Marco Catani, MD, PhD. Correspondence: Dr. Catani. Neurology® 2020;94:e1-e13. doi:10.1212/WNL.0000000000008746

Abstract

Objective: To combine MRI-based cortical morphometry and diffusion white matter tractography to describe the anatomical correlates of repetition deficits in patients with primary progressive aphasia (PPA).

Methods: The traditional anatomical model of language identifies a network for word repetition that includes Wernicke and Broca regions directly connected via the arcuate fasciculus. Recent tractography findings of an indirect pathway between Wernicke and Broca regions suggest a critical role of the inferior parietal lobe for repetition. To test whether repetition deficits are associated with damage to the direct or indirect pathway between both regions, tractography analysis was performed in 30 patients with PPA (64.27 ± 8.51 years) and 22 healthy controls. Cortical volume measurements were also extracted from 8 perisylvian language areas connected by the direct and indirect pathways.

Results: Compared to healthy controls, patients with PPA presented with reduced performance in repetition tasks and increased damage to most of the perisylvian cortical regions and their connections through the indirect pathway. Repetition deficits were prominent in patients with cortical atrophy of the temporo-parietal region with volumetric reductions of the indirect pathway.

Localising Foreign Accents in Speech Perception, Storage and Production

Localising foreign accents in speech perception, storage and production. Yuki Asano, Department of Linguistics, Faculty of Humanities, University of Konstanz, Germany. This dissertation is submitted for the degree of Doctor of Philosophy, 2016. Konstanzer Online-Publikations-System (KOPS) URL: http://nbn-resolving.de/urn:nbn:de:bsz:352-0-386434

ACKNOWLEDGEMENTS

For their insightful comments, suggestions, questions, ideas, for this thesis: Bettina Braun, Ryoko Hayashi, Aditi Lahiri, René Kager.

For making my research project financially possible: Bettina Braun, The German Academic Exchange Service (DAAD), The German National Academic Foundation, University of Konstanz.

For their general interest, comments, and inspiring ideas: Bettina Braun, Miriam Butt, Nicole Dehé, Bijarke Frellesvig, Rolf Grawert, Janet Grijzenhout, Michele Gubian, Carlos Guessenhoven, Ryoko Hayashi, Barış Kabak, René Kager, Aditi Lahiri, Frans Plank, Dominik Sasha, Giuseppina Turco.

For correcting my English: Filippo Cervelli.

For their technical support: Michele Gubian, Joachim Kleinmann, Dominik Sasha, research assistants.

For their attendance of my experiments: participants.

For trusting in my research potential: Bettina Braun, Masahiko & Kyoko Asano.

For their mental support: Masahiko & Kyoko Asano, Bettina Braun, Emilia & Filippo Cervelli, Hannelore & Rolf Grawert.

Thank you very much!

SUMMARY (Zusammenfassung)

This dissertation investigates possible sources of a foreign accent in prosody, in particular in F0 and segmental length. On the basis of the ... presented in Chapters 2 to 4 ...

The Lexical Restructuring Hypothesis: Two Claims of PA Evaluated

The Lexical Restructuring Hypothesis: Two Claims of PA Evaluated. Michelle E. Erskine, Tristan Mahr, Benjamin Munson (University of Minnesota), and Jan R. Edwards. University of Wisconsin-Madison.

Introduction
• Phonological awareness (PA) is an important skill for learning to read (McBride-Chang, 1990; Cunningham & Stanovich, 1997).
• A variety of factors including phonological working memory (PWM), speech perception, and vocabulary skills have been shown to be related to phonological awareness.
• However, the relationships among these factors are not well understood.
• The lexical restructuring hypothesis proposes that the association among PWM, speech perception and PA are secondary to vocabulary development in children.
• Thus, PA primarily emerges as a result of the gradual reorganization of the lexicon and, to a lesser degree, the ...

Methods & Analysis: Nonword Repetition
Stimuli
• 22 pairs of nonsense words adapted from Edwards et al., 2004
• Pairs included a 2-phoneme sequence that contrasted in phonotactic probability
• Presented stimuli matched child’s native dialect
Procedure
• Nonwords were paired with a picture of an unfamiliar object in a picture-prompted auditory-word-repetition task

Results: Mediation Model 1 and Mediation Model 2 (diagram labels: Vocabulary, Phonological Working Memory, Phonological Awareness)
Blending Results:
• Consistent with complete mediation.
• A boot-strapping method to evaluate mediation yields a significant indirect effect of speech perception on PA, p < .001, and the direct effect of speech perception on PA is no longer significant.
• 94% of the effect of speech perception on PA is mediated by expressive vocabulary size.
• The direct effect of speech perception on PA is no longer significant when the model accounts for effects of vocabulary size.
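The poster reports a bootstrapped indirect effect and a proportion mediated, but the excerpt does not give the model details. The sketch below shows one standard way such numbers can be obtained, assuming a simple three-variable linear mediation model (speech perception → vocabulary → PA) fitted by ordinary least squares. The data are simulated and the variable and function names are illustrative; none of it comes from the poster.

```python
import numpy as np

def ols(y, *predictors):
    """Least-squares coefficients for y ~ 1 + predictors (intercept first)."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def mediation(x, m, y):
    """Return (indirect, direct, total) effects for the path x -> m -> y."""
    a = ols(m, x)[1]                 # effect of x on the mediator
    coef = ols(y, x, m)
    direct, b = coef[1], coef[2]     # x -> y and m -> y, each adjusted for the other
    total = ols(y, x)[1]             # effect of x on y without the mediator
    return a * b, direct, total

def bootstrap_indirect(x, m, y, n_boot=2000, seed=0):
    """Percentile 95% interval for the indirect effect, resampling participants."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)   # sample participants with replacement
        draws[i] = mediation(x[idx], m[idx], y[idx])[0]
    return np.percentile(draws, [2.5, 97.5])

# Simulated scores only (not the poster's data): full mediation by construction
rng = np.random.default_rng(1)
n = 200
perception = rng.normal(size=n)
vocabulary = 0.8 * perception + rng.normal(scale=0.6, size=n)
awareness = 0.7 * vocabulary + rng.normal(scale=0.6, size=n)

indirect, direct, total = mediation(perception, vocabulary, awareness)
low, high = bootstrap_indirect(perception, vocabulary, awareness)
print(f"indirect={indirect:.3f} direct={direct:.3f} total={total:.3f}")
print(f"proportion mediated={indirect / total:.2f}")
print(f"95% bootstrap interval for indirect effect: [{low:.3f}, {high:.3f}]")
```

In this setup the proportion mediated is the indirect effect divided by the total effect, and an interval for the indirect effect that excludes zero is read as evidence of mediation. A figure such as the reported 94% would correspond to `indirect / total` under these assumptions.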