Getting Started with CUDA Greg Ruetsch, Brent Oster

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

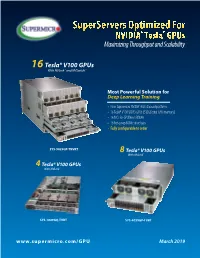

Supermicro GPU Solutions Optimized for NVIDIA NVLink

SuperServers Optimized For NVIDIA® Tesla® GPUs Maximizing Throughput and Scalability 16 Tesla® V100 GPUs With NVLink™ and NVSwitch™ Most Powerful Solution for Deep Learning Training • New Supermicro NVIDIA® HGX-2 based platform • 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory) • 16 NICs for GPUDirect RDMA • 16 hot-swap NVMe drive bays • Fully configurable to order SYS-9029GP-TNVRT 8 Tesla® V100 GPUs With NVLink™ 4 Tesla® V100 GPUs With NVLink™ SYS-1029GQ-TVRT SYS-4029GP-TVRT www.supermicro.com/GPU March 2019 Maximum Acceleration for AI/DL Training Workloads PERFORMANCE: Highest Parallel peak performance with NVIDIA Tesla V100 GPUs THROUGHPUT: Best in class GPU-to-GPU bandwidth with a maximum speed of 300GB/s SCALABILITY: Designed for direct interconnections between multiple GPU nodes FLEXIBILITY: PCI-E 3.0 x16 for low latency I/O expansion capacity & GPU Direct RDMA support DESIGN: Optimized GPU cooling for highest sustained parallel computing performance EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control Model SYS-1029GQ-TVRT SYS-4029GP-TVRT • Dual Intel® Xeon® Scalable processors with 3 UPI up to • Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s CPU Support 10.4GT/s • Supports up to 205W TDP CPU • Supports up to 205W TDP CPU • 8 NVIDIA® Tesla® V100 GPUs • 4 NVIDIA Tesla V100 GPUs • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s GPU Support • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s • Optimized for GPUDirect RDMA • Optimized for GPUDirect RDMA • Independent CPU and GPU thermal zones

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169–177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002

Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems

1. Introduction Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3–14].

Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11–14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems.

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who supported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continuous support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful to Michaela Schmitz. She managed everything well to make everyone's life easier. I owe thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills.

In re Graphics Processing Units Antitrust Litigation: Third Consolidated and Amended Class Action Complaint

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP WILLIAM A. ISAACSON (pro hac vice) 5301 Wisconsin Ave. NW, Suite 800 Washington, D.C. 20015 Telephone: (202) 237-2727 Facsimile: (202) 237-6131 Email: [email protected]

BOIES, SCHILLER & FLEXNER LLP JOHN F. COVE, JR. (CA Bar No. 212213) DAVID W. SHAPIRO (CA Bar No. 219265) KEVIN J. BARRY (CA Bar No. 229748) 1999 Harrison St., Suite 900 Oakland, CA 94612 Telephone: (510) 874-1000 Facsimile: (510) 874-1460 Email: [email protected] [email protected] [email protected]

BOIES, SCHILLER & FLEXNER LLP PHILIP J. IOVIENO (pro hac vice) ANNE M. NARDACCI (pro hac vice) 10 North Pearl Street 4th Floor Albany, NY 12207 Telephone: (518) 434-0600 Facsimile: (518) 434-0665 Email: [email protected] [email protected]

Attorneys for Plaintiff Jordan Walker Interim Class Counsel for Direct Purchaser Plaintiffs

UNITED STATES DISTRICT COURT NORTHERN DISTRICT OF CALIFORNIA

IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION Case No.: M:07-CV-01826-WHA MDL No. 1826 This Document Relates to: ALL DIRECT PURCHASER ACTIONS THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1 JURY TRIAL DEMANDED

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows:

NATURE OF THE CASE 1.

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science Vol. 3, No. 2, 2018, pp. 29-38 http://www.aiscience.org/journal/ijeecs ARM Processor Architecture: An Emerging Architecture in Smart Phones Naseer Ahmad, Muhammad Waqas Boota * Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan Abstract ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We will also see the important characteristics of the Cortex series. We discuss the ARM processor and systems on a chip (SoCs), which include the Qualcomm Snapdragon, nVidia Tegra, and Apple systems on chips. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we will discuss the future of the ARM processor in smartphone devices. Keywords RISC, ISA, ARM Core, System on a Chip (SoC) Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018 @ 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

It's Meant to Be Played

Issue 10 $3.99 (where sold) THE WAY It’s meant to be played Ultimate PC Gaming with GeForce All the best holiday games with the power of NVIDIA Far Cry’s creators outclass its already jaw-dropping technology Battlefield 2142 with an epic new sci-fi battle World of Warcraft: The Burning Crusade Company of Heroes Warhammer: Mark of Chaos

Welcome... Welcome to the 10th issue of The Way It’s Meant To Be Played, the magazine dedicated to the very best in PC gaming. In this issue, we showcase 30 games, all participants in NVIDIA’s The Way It’s Meant To Be Played program. In this program, NVIDIA’s developer technology engineers work with development teams to get the very best graphics and effects into their new titles. The games are then rigorously tested by three different labs at NVIDIA for compatibility, stability, and performance to ensure that any game …

THE NEWS Notebooks are set to transform PC gaming The latest must-have gaming system is… a notebook PC. Until recently considered mainly a means for working on the move or for portable presentations, laptops complete with dedicated graphic processing units (GPUs) such as the NVIDIA® GeForce® Go 7 series are making a real impact in the gaming world. The advantages are obvious – gamers need no longer be tied to their desktop set-up. The new NVIDIA® GeForce® Go 7900 notebook GPUs are designed for extreme HD gaming, and gaming hardware specialists such as Alienware and Asus have seen the potential of the portable platform.

Introduction to Multi-Threading and Vectorization Matti Kortelainen LArSoft Workshop 2019 25 June 2019

Introduction to multi-threading and vectorization
Matti Kortelainen, LArSoft Workshop 2019, 25 June 2019

Outline
Broad introductory overview:
• Why multithread?
• What is a thread?
• Some threading models
– std::thread
– OpenMP (fork-join)
– Intel Threading Building Blocks (TBB) (tasks)
• Race condition, critical region, mutual exclusion, deadlock
• Vectorization (SIMD)

Motivations for multithreading
(Figure; image courtesy of K. Rupp)
• One process on a node: speedups from parallelizing parts of the programs
– Any problem can get speedup if the threads can cooperate on
• same core (sharing L1 cache)
• L2 cache (may be shared among small number of cores)
• Fully loaded node: save memory and other resources
– Threads can share objects -> N threads can use significantly less memory than N processes
• If smallest chunk of data is so big that only one fits in memory at a time, is there any other option?

What is a (software) thread? (in POSIX/Linux)
• “Smallest sequence of programmed instructions that can be managed independently by a scheduler” [Wikipedia]
• A thread has its own
– Program counter
– Registers
– Stack
– Thread-local memory (better to avoid in general)
• Threads of a process share everything else, e.g.
– Program code, constants
– Heap memory
– Network connections
– File handles

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since its arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not be compensated by its cost [2].

Data Sheet FUJITSU Tablet STYLISTIC M702

Data Sheet FUJITSU Tablet STYLISTIC M702

Designed for the toughest businesses
The Fujitsu STYLISTIC M702 is the ideal choice if you are looking for a robust yet lightweight high-performance tablet PC to support you in the field and to increase business continuity. Being water- and dustproof, it protects components most frequently damaged, making it perfect for semi-ruggedized applications. The 25.7 cm (10.1-inch) STYLISTIC M702 provides protection against environmental conditions, ensuring uncompromising productivity whether exposed to rain, humidity or dust. Moreover, it can easily be integrated into your company’s Virtual Desktop Infrastructure (VDI), thus ensuring protected anytime access to your company data while its business software lets you securely manage your contacts and emails. Embedded 4G/LTE delivers latest high-speed connectivity for end-to-end performance in the most challenging situations and the Android operating system’s rich multimedia features also make it perfect for private use.

Semi-Ruggedized
Ensure absolute business continuity and protect your tablet from environmental conditions. Work from anywhere while exposing the tablet to rain, humidity or dust without causing any component damage. Waterproof (tested for IPX5/7/8 specifications), dustproof (tested for IP5X specification), durable with toughened glass

Business Match
Ultimate productivity from anywhere. Securely access your business applications, data and company intranet with the business software selection and protect

Technical Brief

Technical Brief SLI-Ready Memory with Enhanced Performance Profiles One-Click Hassle-Free Memory Performance Boost May 2006 TB-0529-001_v01 One-Click Hassle-Free Memory Performance Boost with SLI-Ready Memory Gamers and PC enthusiasts are endlessly searching for opportunities to improve the performance of their PCs. Optimizing system performance is a function of the major components used—graphics processing unit (GPU) add-in cards, CPU, chipset, and memory. It is also a function of tuning and overclocking the various PC components. Overclocking, however, has disadvantages such as system instability and inconsistent performance measurements from one system to another. SLI-Ready memory with Enhanced Performance Profiles (EPP) is a new approach that simplifies overclocking and ensures memory and platform compatibility. The NVIDIA nForce 590 SLI core logic is the first NVIDIA® platform that supports the new EPP functionality. Memory DIMMs which receive the SLI-Ready certification are required to support EPP technology to ensure the memories can be automatically detected and their full potential realized with the NVIDIA nForce® 590 SLI® chipset. The SLI-Ready certification process ensures the memory modules have passed a comprehensive set of tests and meet the minimum requirements for delivering our customers the outstanding experience they expect from SLI systems. SLI-Ready Memory with EPP at a Glance System memory modules (DIMMs) are built using an electrically-erasable programmable read-only memory (EEPROM) that can hold up to 256 Bytes of data. The EEPROM is used to store Serial Presence Detect (SPD) information defined by JEDEC which include manufacturer part number, manufacturer name, some timing parameters, serial number, etc. -

Unit: 4 Processes and Threads in Distributed Systems

Unit: 4 Processes and Threads in Distributed Systems

Thread
A program has one or more loci of execution. Each execution is called a thread of execution. In traditional operating systems, each process has an address space and a single thread of execution. A thread is the smallest unit of processing that can be scheduled by an operating system. A thread is a single sequential stream within a process. Because threads have some of the properties of processes, they are sometimes called lightweight processes. In a process, threads allow multiple streams of execution.

Thread Structure
A process is used to group resources together, and threads are the entities scheduled for execution on the CPU. The thread has a program counter that keeps track of which instruction to execute next. It has registers, which hold its current working variables. It has a stack, which contains the execution history, with one frame for each procedure called but not yet returned from. Although a thread must execute in some process, the thread and its process are different concepts and can be treated separately. What threads add to the process model is to allow multiple executions to take place in the same process environment, to a large degree independent of one another. Having multiple threads running in parallel in one process is similar to having multiple processes running in parallel in one computer. In the case of multiple threads in one process, the threads share an address space, open files, and other resources. In the case of multiple processes in one computer, the processes share physical memory, disks, printers and other resources.

Figure: (a) Three processes each with one thread. (b) One process with three threads.

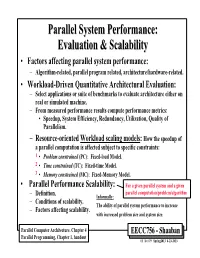

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability
• Factors affecting parallel system performance:
– Algorithm-related, parallel program related, architecture/hardware-related.
• Workload-Driven Quantitative Architectural Evaluation:
– Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine.
– From measured performance results compute performance metrics:
• Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism.
– Resource-oriented workload scaling models: how the speedup of a parallel computation is affected subject to specific constraints:
1. Problem constrained (PC): Fixed-load Model.
2. Time constrained (TC): Fixed-time Model.
3. Memory constrained (MC): Fixed-Memory Model.
• Parallel Performance Scalability: for a given parallel system and a given parallel computation/problem/algorithm
– Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size.
– Conditions of scalability.
– Factors affecting scalability.
Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout. EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013

Parallel Program Performance
• Parallel processing goal is to maximize speedup (fixed problem size speedup):

$$\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max\left(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work}\right)}$$

where the maximum is taken over all processors, and the synchronization wait time, communication cost and extra work are the parallelizing overheads.
• By:
1. Balancing computations/overheads (workload) on processors
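
The speedup bound quoted in this excerpt can be tried on made-up numbers. The following is a minimal Python sketch, not taken from the lecture notes: the function names, the tuple layout `(work, synch_wait, comm_cost, extra_work)` and the sample figures are all hypothetical, and efficiency is computed with the usual textbook definition (speedup divided by processor count), which the excerpt names but does not define.

```python
def speedup(time_1, time_p):
    """Fixed problem size speedup: Time(1) / Time(p)."""
    return time_1 / time_p


def efficiency(time_1, time_p, p):
    """Assumed standard definition: speedup divided by the processor count."""
    return speedup(time_1, time_p) / p


def speedup_bound(sequential_work, per_processor_costs):
    """Sequential work divided by the cost of the slowest processor.

    per_processor_costs holds one hypothetical tuple per processor:
    (work, synch_wait, comm_cost, extra_work), all in the same time unit.
    """
    slowest = max(sum(costs) for costs in per_processor_costs)
    return sequential_work / slowest


if __name__ == "__main__":
    # Made-up example: 100 units of sequential work split over 4 processors.
    balanced = [(25, 2, 3, 0)] * 4
    imbalanced = [(40, 0, 3, 0), (20, 20, 3, 0), (20, 20, 3, 0), (20, 20, 3, 0)]
    print("balanced bound:  ", round(speedup_bound(100, balanced), 3))
    print("imbalanced bound:", round(speedup_bound(100, imbalanced), 3))
    print("efficiency:      ", round(efficiency(100, 30, 4), 3))
```

With these numbers the imbalanced split yields a lower bound than the balanced one, because the slowest processor sets the denominator; that is why the excerpt lists balancing the workload first.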