Linked Archival Metadata: a Guidebook Eric Lease Morgan And


Recommended publications

-

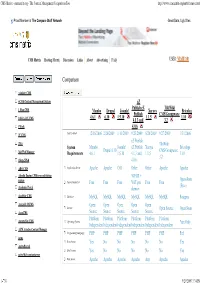

CMS Matrix - Cmsmatrix.Org - the Content Management Comparison Tool

CMS Matrix - cmsmatrix.org - The Content Management Comparison Tool http://www.cmsmatrix.org/matrix/cms-matrix Proud Member of The Compare Stuff Network Great Data, Ugly Sites CMS Matrix Hosting Matrix Discussion Links About Advertising FAQ USER: VISITOR Compare Search Return to Matrix Comparison <sitekit> CMS +CMS Content Management System eZ Publish eZ TikiWiki 1 Man CMS Mambo Drupal Joomla! Xaraya Bricolage Publish CMS/Groupware 4.6.1 6.10 1.5.10 1.1.5 1.10 1024 AJAX CMS 4.1.3 and 3.2 1Work 4.0.6 2F CMS Last Updated 12/16/2006 2/26/2009 1/11/2009 9/23/2009 8/20/2009 9/27/2009 1/31/2006 eZ Publish 2flex TikiWiki System Mambo Joomla! eZ Publish Xaraya Bricolage Drupal 6.10 CMS/Groupware 360 Web Manager Requirements 4.6.1 1.5.10 4.1.3 and 1.1.5 1.10 3.2 4Steps2Web 4.0.6 ABO.CMS Application Server Apache Apache CGI Other Other Apache Apache Absolut Engine CMS/news publishing 30EUR + system Open-Source Approximate Cost Free Free Free VAT per Free Free (Free) Academic Portal domain AccelSite CMS Database MySQL MySQL MySQL MySQL MySQL MySQL Postgres Accessify WCMS Open Open Open Open Open License Open Source Open Source AccuCMS Source Source Source Source Source Platform Platform Platform Platform Platform Platform Accura Site CMS Operating System *nix Only Independent Independent Independent Independent Independent Independent ACM Ariadne Content Manager Programming Language PHP PHP PHP PHP PHP PHP Perl acms Root Access Yes No No No No No Yes ActivePortail Shell Access Yes No No No No No Yes activeWeb contentserver Web Server Apache Apache -

A Framework for Ontology-Based Library Data Generation, Access and Exploitation

Universidad Politécnica de Madrid, Departamento de Inteligencia Artificial. Doctorado en Inteligencia Artificial. A framework for ontology-based library data generation, access and exploitation. Doctoral Dissertation of: Daniel Vila-Suero. Advisors: Prof. Asunción Gómez-Pérez, Dr. Jorge Gracia. To Adelina, Gustavo, Pablo and Amélie. Madrid, July 2016.

Abstract: Historically, libraries have been responsible for storing, preserving, cataloguing and making available to the public large collections of information resources. In order to classify and organize these collections, the library community has developed several standards for the production, storage and communication of data describing different aspects of library knowledge assets. However, as we will argue in this thesis, most of the current practices and standards available are limited in their ability to integrate library data within the largest information network ever created: the World Wide Web (WWW). This thesis aims at providing theoretical foundations and technical solutions to tackle some of the challenges in bridging the gap between these two areas: library science and technologies, and the Web of Data. The investigation of these aspects has been tackled with a combination of theoretical, technological and empirical approaches. Moreover, the research presented in this thesis has been largely applied and deployed to sustain a large online data service of the National Library of Spain: datos.bne.es. Specifically, this thesis proposes and evaluates several constructs, languages, models and methods with the objective of transforming and publishing library catalogue data using semantic technologies and ontologies. In this thesis, we introduce marimba-framework, an ontology-based library data framework, that encompasses these constructs, languages, models and methods.

Flemish Public Libraries – Digital Library Systems Architecture Study 10-Dec-13

Flemish Public Libraries – Digital Library Systems Architecture Study. Last update: 27 Nov 2013. Researchers: Jean-François Declercq, Rosemie Callewaert, François Vermaut. Ordered by: Bibnet vzw, Vereniging van de Vlaamse Provincies, Vlaamse Gemeenschapscommissie van het Brussels Hoofdstedelijk Gewest.

Table of contents: 1 Introduction; 1.1 Definition of a Digital Library; 1.2 Study Objectives; 1.3 Study Approach; 1.4 Study timeline; 1.5 Study Steering Committee; 2 ICT Systems Architecture Concepts; 2.1 Enterprise Architecture Model

Download Slide (PDF Document)

When Django is too bloated: Specialized Web-Applications with Werkzeug. EuroPython 2017 – Rimini, Italy. Niklas Meinzer, @NiklasMM. Gotthard Base Tunnel, Photographer: Patrick Neumann.

Python is amazing for web developers! ● Bottle ● BlueBream ● CherryPy ● CubicWeb ● Grok ● Nagare ● Pyjs ● Pylons ● TACTIC ● Tornado ● TurboGears ● web2py ● Webware ● Zope 2

Why would I want to use less? ● Learn how stuff works ● Avoid over-engineering – Wastes time and resources – Makes updates harder – It’s a security risk ● You want to do something very specific

● Plan, manage and document chemotherapy treatments ● Built with modern web technology ● Used by hospitals in three European countries. (Diagram labels: Patient Data, Lab Data, HL7, REST, Pharmacy System, Database, Printers.)

Werkzeug = German for “tool” ● Developed by pocoo team @ pocoo.org – Flask, Sphinx, Jinja2 ● A “WSGI utility” ● Very lightweight ● No ORM, No templating engine, etc ● The basis of Flask and others

Werkzeug Features Overview ● WSGI – WSGI 1.0 compatible, WSGI Helpers ● Wrapping of requests and responses ● HTTP Utilities – Header processing, form data parsing, cookies ● Unicode support ● URL routing system ● Testing tools – Testclient, Environment builder ● Interactive Debugger in the Browser

A simple Application. URL Routing.

Middlewares ● Separate parts of the Application as wsgi apps ● Combine as needed. (Diagram labels: Request, Static files, DB conn, User auth, Dispatcher, Part of Application with DB access, Part of Application without DB access, Response.)

HTTP Utilities ● Work with HTTP dates ● Read and dump cookies ● Parse form data

Using the test client. Using the test client - pytest fixtures. Interactive debugger in the Browser.

Endless possibilities ● Connect to a database with SQLAlchemy ● Use Jinja2 to render documents ● Use Celery to schedule asynchronous tasks ● Talk to 3rd party APIs with requests ● Make syscalls ● Remote control a robot to perform tasks at home

Thank you! @NiklasMM. Photographer: Patrick Neumann.

FI-WARE Product Vision Front Page

FI-WARE Product Vision front page. Ref. Ares(2011)1227415 - 17/11/2011. Large-scale Integrated Project (IP). D2.2: FI-WARE High-level Description. Project acronym: FI-WARE. Project full title: Future Internet Core Platform. Contract No.: 285248. Strategic Objective: FI.ICT-2011.1.7 Technology foundation: Future Internet Core Platform. Project Document Number: ICT-2011-FI-285248-WP2-D2.2b. Project Document Date: 15 Nov. 2011. Deliverable Type and Security: Public. Author: FI-WARE Consortium. Contributors: FI-WARE Consortium.

Abstract: This deliverable provides a high-level description of FI-WARE which can help to understand its functional scope and approach towards materialization until a first release of the FI-WARE Architecture is officially released.

Keyword list: FI-WARE, PPP, Future Internet Core Platform/Technology Foundation, Cloud, Service Delivery, Future Networks, Internet of Things, Internet of Services, Open APIs.

Contents: FI-WARE Product Vision front page; FI-WARE Product Vision; Overall FI-WARE Vision; FI-WARE Cloud Hosting; FI-WARE Data/Context Management; FI-WARE Internet of Things (IoT) Services Enablement; FI-WARE Applications/Services Ecosystem and Delivery Framework; FI-WARE Security; FI-WARE Interface to Networks and Devices (I2ND); Materializing Cloud Hosting in FI-WARE; Crowbar; XCAT; OpenStack Nova; System Pools; ISAAC; RESERVOIR; Trusted Compute Pools; Open Cloud Computing Interface (OCCI); OpenStack Glance; OpenStack Quantum; Open

Application from the Bibliothèque nationale de France (BnF) for Gallica (gallica.bnf.fr) and Data (data.bnf.fr)

Stanford Prize for Innovation in Research Libraries (SPIRL). Application from the Bibliothèque nationale de France (BnF) for Gallica (gallica.bnf.fr) and Data (data.bnf.fr).

TABLE OF CONTENTS: I. Nominators’ statement; II. Narrative description; 1. THE GALLICA DIGITAL LIBRARY: AN ONGOING INNOVATION; 1.1 A BRIEF HISTORY OF GALLICA; Gallica today and tomorrow; 1.2 GALLICA TRULY CARES ABOUT ITS END USERS; Smart search; Smart browsing; Gallica made mobile; 1.3 A LIBRARY DESIGNED FOR USE AND RE-USE; Terms of use; Off line

Report of the Committee on Data Management

REPORT OF THE COMMITTEE ON DATA MANAGEMENT. GOVERNMENT OF INDIA, NATIONAL STATISTICAL COMMISSION, MINISTRY OF STATISTICS AND PROGRAMME IMPLEMENTATION, NEW DELHI.

CONTENTS: Constitution, Terms of Reference and Members' List; Acknowledgements; List of Abbreviations; Introduction, Executive Summary, and Main observations.

Chapter 1, Indian Statistical system, Challenges in Official Statistics & data management and remedial measures: 1. The importance of official statistics; 2. Current scenario of National Statistical System in India; 3. Need to know and right to share; 4. Issues of decentralized system; 5. Vertical and horizontal issues; 6. Priority; 7. Inter operability; 8. Need for assessability and communicability; 9. Need for scanning of published data before conversion into defined data format; 10. Non-official data providers; 11. Consistency in definition and standards; 12. Draw upon insights into problems; 13. Technological and technical issues; 14. Training intervention/ capacity building; 15. Technology versus Applications; 16. Legal framework for providing data; 17. Setting up of Data Management Division.

Chapter 2, Conceptual framework on Data management: 1. Data Management; 2. Data governance; 3. Data Architecture, Analysis and Design; 4. Database Management; 5. Data Security Management; 6. Data Quality Management; 7. Reference and Master Data Management; 8. Data Warehousing and Business Intelligence Management; 9. Document, Record and Content Management; 10. Meta Data Management; 11. Contact Data Management; 12. Data Movement; 13. Statistical Data Metadata Exchange (SDMX); 14. Secured Data Centers.

Chapter 3, Recommendations: 1.

Brainomics: Harnessing the Cubicweb Semantic

Brainomics: Harnessing the CubicWeb semantic framework to manage large neuromaging genetics shared resources. David Goyard, Antoine Grigis, Dimitri Papadopoulos-Orfanos, Michel Vittot, Vincent Frouin, Adrien Di Mascio.

To cite this version: David Goyard, Antoine Grigis, Dimitri Papadopoulos-Orfanos, Michel Vittot, Vincent Frouin, et al. Brainomics: Harnessing the CubicWeb semantic framework to manage large neuromaging genetics shared resources. Journées RITS 2015, Mar 2015, Dourdan, France. p34-35, Section imagerie génétique. inserm-01145600. HAL Id: inserm-01145600, https://www.hal.inserm.fr/inserm-01145600. Submitted on 24 Apr 2015.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License.

Actes des Journées Recherche en Imagerie et Technologies pour la Santé - RITS 2015. D. Goyard (1), A. Grigis (1), D. Papadopoulos Orfanos (1), V. Michel (2), V. Frouin (1, ∗), A. Di Mascio (2). (1) CEA DSV NeuroSpin, UNATI, 91 Gif sur Yvette, France. (2) Logilab, Paris, France. ∗ [email protected].

Abstract - In neurosciences or psychiatry, large multicentric population studies are being acquired and the corresponding data are made available to the acquisition partners or the scientific community.

… one workpackage devoted to this issue. The package was twofolds: (i) to develop specific Python modules using the CubicWeb framework (Logilab, Paris) and (ii) to demon-

Guidelines for Publishing Structured Metadata on the Web

Final Report Research Data Alliance Research Metadata Schemas Working Group Guidelines for publishing structured metadata on the web 15 June 2021, V3.0 Mingfang Wu, Nick Juty, RDA Research Metadata Schemas WG, Julia Collins, Ruth Duerr, Chantel Ridsdale, Adam Shepherd, Chantelle Verhey, Leyla Jael Castro Creative Commons Attribution Only 4.0 license (CC-BY) RDA Research Metadata Schemas Working Group Table of Contents Executive Summary ................................................................................................................... 1 Terminology ............................................................................................................................... 2 1. Introduction ......................................................................................................................... 4 2. Process to publish structured metadata .............................................................................. 6 3. Data model ......................................................................................................................... 7 4. Recommendations .............................................................................................................. 9 Recommendation 1: Clarify the purpose(s) of your markup (or why you want to markup your data) ......................................................................................................................................10 Recommendation 2: Identify what resources are to be marked up with structured data .........11 Recommendation -

Metadata in the Context of the European Library Project

DC-2002: METADATA FOR E-COMMUNITIES: SUPPORTING DIVERSITY AND CONVERGENCE 2002. Proceedings of the International Conference on Dublin Core and Metadata for e-Communities, 2002. October 13-17, 2002 - Florence, Italy. Organized by: Associazione Italiana Biblioteche (AIB), Biblioteca Nazionale Centrale di Firenze (BNCF), Dublin Core Metadata Initiative (DCMI), European University Institute (IUE), Istituto e Museo di Storia della Scienza (IMSS), Regione Toscana, Università degli Studi di Firenze.

DC-2002 : metadata for e-communities : supporting diversity and convergence 2002 : proceedings of the International Conference on Dublin Core and Metadata for E-communities, 2002, October 13-17, 2002, Florence, Italy / organized by Associazione italiana biblioteche (AIB) … [et al.]. – Firenze : Firenze University Press, 2002. http://epress.unifi.it/ ISBN 88-8453-043-1. 025.344 (ed. 20). Dublin Core – Congresses. Cataloging of computer network resources - Congresses. Print on demand is available. © 2002 Firenze University Press, Borgo Albizi, 28, 50122 Firenze, Italy. Printed in Italy.

DC-2002: Metadata for e-Communities: Supporting Diversity and Convergence. DC-2002 marks the tenth in the ongoing series of International Dublin Core Workshops, and the second that includes a full program of tutorials and peer-reviewed conference papers. Interest in Dublin Core metadata has grown from a small collection of pioneering projects to adoption by governments and international organizations worldwide. The scope of interest and investment in metadata is broader still, however, and finding coherence in this rapidly growing area is challenging. The theme of DC-2002, supporting diversity and convergence, acknowledges these changes.

Efficient Querying of Distributed Sources in a Mobile Environment Through Source Indexing and Caching

Faculty of Science, Department of Computer Science. Efficient querying of distributed sources in a mobile environment through source indexing and caching. Graduation thesis submitted in partial fulfillment of the requirements for the degree of Master in Applied Informatics. Elien Paret. Promoter: Prof. Dr. Olga De Troyer. Advisors: Dr. Sven Casteleyn & William Van Woensel. Academic year 2009-2010.

Acknowledgements: In this small chapter I would like to thank everyone that helped me achieve this thesis. First of all I would like to thank my promoter, Prof. Olga De Troyer, for providing me the opportunity to realize this thesis and for her personal assistance during this entire academic year. Secondly, I would like to express my deepest gratitude to my two excellent advisors, Dr. Sven Casteleyn and PhD William Van Woensel. I am very thankful for their personal guidance, moral support and all the knowledge they shared with me. I also want to thank my dear boyfriend Pieter Callewaert for his moral support, kindness, patience, encouragement and for being there for me in all circumstances and at all times. Finally I would like to thank my family for their love and support during my entire life and for supporting me in getting my master's degree and achieving this thesis. Thank you all.

Abstract: Mobile devices have become a part of everyday life; they are used anywhere and at any time, for communication, looking up information, consulting an agenda, making notes, playing games etc. At the same time, the hardware of these devices has evolved significantly: e.g., faster processors, larger memory and improved connectivity … The hardware evolution, along with the recent advancements of identification techniques, has led to new opportunities for developers of mobile applications: mobile applications can be aware of their environment and the objects in it.

Brainomics: Harnessing the Cubicweb Semantic

Brainomics: Harnessing the CubicWeb semantic framework to manage large neuromaging genetics shared resources. David Goyard, Antoine Grigis, Dimitri Papadopoulos Orfanos, Vincent Michel, Vincent Frouin, Adrien Di Mascio.

To cite this version: David Goyard, Antoine Grigis, Dimitri Papadopoulos Orfanos, Vincent Michel, Vincent Frouin, et al. Brainomics: Harnessing the CubicWeb semantic framework to manage large neuromaging genetics shared resources. Journées RITS 2015, Mar 2015, Dourdan, France. Actes des Journées RITS 2015, p34-35, Section imagerie génétique, 2015. <inserm-01145600>. HAL Id: inserm-01145600, http://www.hal.inserm.fr/inserm-01145600. Submitted on 24 Apr 2015.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License.

Actes des Journées Recherche en Imagerie et Technologies pour la Santé - RITS 2015. D. Goyard (1), A. Grigis (1), D. Papadopoulos Orfanos (1), V. Michel (2), V. Frouin (1, ∗), A. Di Mascio (2). (1) CEA DSV NeuroSpin, UNATI, 91 Gif sur Yvette, France.