Isa2 Action 2017.01 Standard-Based Archival

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mapping Spatiotemporal Data to RDF: a SPARQL Endpoint for Brussels

International Journal of Geo-Information Article Mapping Spatiotemporal Data to RDF: A SPARQL Endpoint for Brussels Alejandro Vaisman 1, * and Kevin Chentout 2 1 Instituto Tecnológico de Buenos Aires, Buenos Aires 1424, Argentina 2 Sopra Banking Software, Avenue de Tevuren 226, B-1150 Brussels, Belgium * Correspondence: [email protected]; Tel.: +54-11-3457-4864 Received: 20 June 2019; Accepted: 7 August 2019; Published: 10 August 2019 Abstract: This paper describes how a platform for publishing and querying linked open data for the Brussels Capital region in Belgium is built. Data are provided as relational tables or XML documents and are mapped into the RDF data model using R2RML, a standard language that allows defining customized mappings from relational databases to RDF datasets. In this work, data are spatiotemporal in nature; therefore, R2RML must be adapted to allow producing spatiotemporal Linked Open Data.Data generated in this way are used to populate a SPARQL endpoint, where queries are submitted and the result can be displayed on a map. This endpoint is implemented using Strabon, a spatiotemporal RDF triple store built by extending the RDF store Sesame. The first part of the paper describes how R2RML is adapted to allow producing spatial RDF data and to support XML data sources. These techniques are then used to map data about cultural events and public transport in Brussels into RDF. Spatial data are stored in the form of stRDF triples, the format required by Strabon. In addition, the endpoint is enriched with external data obtained from the Linked Open Data Cloud, from sites like DBpedia, Geonames, and LinkedGeoData, to provide context for analysis. -

ISO/TC46 (Information and Documentation) Liaison to IFLA

ISO/TC46 (Information and Documentation) liaison to IFLA Annual Report 2015 TC46 on Information and documentation has been leading efforts related to information management since 1947. Standards1 developed under ISO/TC46 facilitate access to knowledge and information and standardize automated tools, computer systems, and services relating to its major stakeholders of: libraries, publishing, documentation and information centres, archives, records management, museums, indexing and abstracting services, and information technology suppliers to these communities. TC46 has a unique role among ISO information-related committees in that it focuses on the whole lifecycle of information from its creation and identification, through delivery, management, measurement, and archiving, to final disposition. *** The following report summarizes activities of TC46, SC4, SC8 SC92 and their resolutions of the annual meetings3, in light of the key-concepts of interest to the IFLA community4. 1. SC4 Technical interoperability 1.1 Activities Standardization of protocols, schemas, etc. and related models and metadata for processes used by information organizations and content providers, including libraries, archives, museums, publishers, and other content producers. 1.2 Active Working Group WG 11 – RFID in libraries WG 12 – WARC WG 13 – Cultural heritage information interchange WG 14 – Interlibrary Loan Transactions 1.3 Joint working groups 1 For the complete list of published standards, cfr. Appendix A. 2 ISO TC46 Subcommittees: TC46/SC4 Technical interoperability; TC46/SC8 Quality - Statistics and performance evaluation; TC46/SC9 Identification and description; TC46/SC 10 Requirements for document storage and conditions for preservation - Cfr Appendix B. 3 The 42nd ISO TC46 plenary, subcommittee and working groups meetings, Beijing, June 1-5 2015. -

Model Bilateral and Multilateral Agreements and Conventions Concerning the Transfer of Archives

UNESCO ARCHIVES PARIS, 4 May 1981 Original: French UNITED NATIONS EDUCATIONAL, SCIENTIFIC AND CULTURAL ORZANIZATION GENERAL INFORMATION PROGRAMME MODEL BILATERAL AND MULTILATERAL AGREEMENTS AND CONVENTIONS CONCERNING THE TRANSFER OF ARCHIVES Charles Kecskemdti and Evert Van Laar t PGI-81/WS/3 SUMMARY Paae Introduction . 5 Guidelines and archival principles (Circular letter CL/2671 from the Director-General of UneSCO) . 6 1. Preparation and conclusion of different types of agreements . and conventions . 8 L 1.1 Types of agreements and conventions . 8 1.2 Choice of the agreement to be concluded ........... 9 1.3 Bodies responsible for preparing a draft agreement or convention ........................ 14 1.4 Procedure for preparing type E *knd F conventions ....... 14 1.5 Conclusion and ratification procedure ............ 15 1.6 Additional measures ..................... 16 1.7 Arbitration procedure .................... 16 2. Bilateral agreements and conventions ................ 16 2.1 Identification of archive groups and documents to be covered by the agreement or convention ............ 16 2.2 Nature of the documents to be transferred under a convention: originals or copies ............... 17 2.3 Access facilities after transfer ............... 18 2.4 Financing of the production of copies ............ 18 2.5 Right of reproduction of microfilms transferred ....... 18 2.6 Financing of the transfer .................. 19 2.7 Conditions under which transferred documents should bestored .......................... 19 2.8 Additional provisions .................... ,2U 3. Bilateral or multilateral agreements and conventions on the establishment of joint heritages . 20 3.1 The concept of a joint heritage . 20 3.2 Procedures and responsibilities . 22 3.3 Organization and financing of programmes . 25 3.4 Status of copies transferred, and access regulations . -

Open Web Ontobud: an Open Source RDF4J Frontend

Open Web Ontobud: An Open Source RDF4J Frontend Francisco José Moreira Oliveira University of Minho, Braga, Portugal [email protected] José Carlos Ramalho Department of Informatics, University of Minho, Braga, Portugal [email protected] Abstract Nowadays, we deal with increasing volumes of data. A few years ago, data was isolated, which did not allow communication or sharing between datasets. We live in a world where everything is connected, and our data mimics this. Data model focus changed from a square structure like the relational model to a model centered on the relations. Knowledge graphs are the new paradigm to represent and manage this new kind of information structure. Along with this new paradigm, a new kind of database emerged to support the new needs, graph databases! Although there is an increasing interest in this field, only a few native solutions are available. Most of these are commercial, and the ones that are open source have poor interfaces, and for that, they are a little distant from end-users. In this article, we introduce Ontobud, and discuss its design and development. A Web application that intends to improve the interface for one of the most interesting frameworks in this area: RDF4J. RDF4J is a Java framework to deal with RDF triples storage and management. Open Web Ontobud is an open source RDF4J web frontend, created to reduce the gap between end users and the RDF4J backend. We have created a web interface that enables users with a basic knowledge of OWL and SPARQL to explore ontologies and extract information from them. -

A Performance Study of RDF Stores for Linked Sensor Data

Semantic Web 1 (0) 1–5 1 IOS Press 1 1 2 2 3 3 4 A Performance Study of RDF Stores for 4 5 5 6 Linked Sensor Data 6 7 7 8 Hoan Nguyen Mau Quoc a,*, Martin Serrano b, Han Nguyen Mau c, John G. Breslin d , Danh Le Phuoc e 8 9 a Insight Centre for Data Analytics, National University of Ireland Galway, Ireland 9 10 E-mail: [email protected] 10 11 b Insight Centre for Data Analytics, National University of Ireland Galway, Ireland 11 12 E-mail: [email protected] 12 13 c Information Technology Department, Hue University, Viet Nam 13 14 E-mail: [email protected] 14 15 d Confirm Centre for Smart Manufacturing and Insight Centre for Data Analytics, National University of Ireland 15 16 Galway, Ireland 16 17 E-mail: [email protected] 17 18 e Open Distributed Systems, Technical University of Berlin, Germany 18 19 E-mail: [email protected] 19 20 20 21 21 Editors: First Editor, University or Company name, Country; Second Editor, University or Company name, Country 22 Solicited reviews: First Solicited Reviewer, University or Company name, Country; Second Solicited Reviewer, University or Company name, 22 23 Country 23 24 Open reviews: First Open Reviewer, University or Company name, Country; Second Open Reviewer, University or Company name, Country 24 25 25 26 26 27 27 28 28 29 Abstract. The ever-increasing amount of Internet of Things (IoT) data emanating from sensor and mobile devices is creating 29 30 new capabilities and unprecedented economic opportunity for individuals, organisations and states. -

Storage, Indexing, Query Processing, And

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 23 May 2020 doi:10.20944/preprints202005.0360.v1 STORAGE,INDEXING,QUERY PROCESSING, AND BENCHMARKING IN CENTRALIZED AND DISTRIBUTED RDF ENGINES:ASURVEY Waqas Ali Department of Computer Science and Engineering, School of Electronic, Information and Electrical Engineering (SEIEE), Shanghai Jiao Tong University, Shanghai, China [email protected] Muhammad Saleem Agile Knowledge and Semantic Web (AKWS), University of Leipzig, Leipzig, Germany [email protected] Bin Yao Department of Computer Science and Engineering, School of Electronic, Information and Electrical Engineering (SEIEE), Shanghai Jiao Tong University, Shanghai, China [email protected] Axel-Cyrille Ngonga Ngomo University of Paderborn, Paderborn, Germany [email protected] ABSTRACT The recent advancements of the Semantic Web and Linked Data have changed the working of the traditional web. There is a huge adoption of the Resource Description Framework (RDF) format for saving of web-based data. This massive adoption has paved the way for the development of various centralized and distributed RDF processing engines. These engines employ different mechanisms to implement key components of the query processing engines such as data storage, indexing, language support, and query execution. All these components govern how queries are executed and can have a substantial effect on the query runtime. For example, the storage of RDF data in various ways significantly affects the data storage space required and the query runtime performance. The type of indexing approach used in RDF engines is key for fast data lookup. The type of the underlying querying language (e.g., SPARQL or SQL) used for query execution is a key optimization component of the RDF storage solutions. -

Website Analysis of Croatian Archives - Possibilities and Limits in Archive Use

DAAAM INTERNATIONAL SCIENTIFIC BOOK 2012 pp. 651-668 CHAPTER 54 WEBSITE ANALYSIS OF CROATIAN ARCHIVES - POSSIBILITIES AND LIMITS IN ARCHIVE USE PAVELIN, G. Abstract: The aim of this paper was to present the current status and characteristics of the Croatian archive websites. The paper describes the connection, similarities and differences between archives and the Internet as a media, whereby their similarity in terms of organization and presentation of information was indicated. Internet as a medium has its own laws and therefore preferences of users must be taken into account when creating websites. This is especially important for archives as institutions, because they present a national heritage through the Internet. The second part is a practical research of the characteristics of Croatian archive websites. Throughout three phases of website analysis it can be seen that Croatian archive websites are satisfactory designed, but have many shortcomings. The SWOT analysis shows how to improve a site, which also includes advantages and disadvantages, as well as opportunities and threats of Croatian archive websites. Key words: archive, Internet, website, analysis, Croatia Authors´ data: Mr. sc. Pavelin, G[oran]; University of Zadar, Department of Tourism and communication studies, Franje Tudmana 24i, Zadar, Croatia, [email protected] This Publication has to be referred as: Pavelin, G[oran] (2012). Website Analysis of Croatian Archives - Possibilities and Limits in Archive Use, Chapter 54 in DAAAM International Scientific Book 2012, pp. 651-668, B. Katalinic (Ed.), Published by DAAAM International, ISBN 978-3-901509-86-5, ISSN 1726-9687, Vienna, Austria DOI: 10.2507/daaam.scibook.2012.54 651 Pavelin, G.: Website Analysis of Croatian Archives - Possibilities and Limits in Archive Use 1. -

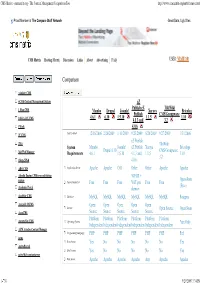

CMS Matrix - Cmsmatrix.Org - the Content Management Comparison Tool

CMS Matrix - cmsmatrix.org - The Content Management Comparison Tool http://www.cmsmatrix.org/matrix/cms-matrix Proud Member of The Compare Stuff Network Great Data, Ugly Sites CMS Matrix Hosting Matrix Discussion Links About Advertising FAQ USER: VISITOR Compare Search Return to Matrix Comparison <sitekit> CMS +CMS Content Management System eZ Publish eZ TikiWiki 1 Man CMS Mambo Drupal Joomla! Xaraya Bricolage Publish CMS/Groupware 4.6.1 6.10 1.5.10 1.1.5 1.10 1024 AJAX CMS 4.1.3 and 3.2 1Work 4.0.6 2F CMS Last Updated 12/16/2006 2/26/2009 1/11/2009 9/23/2009 8/20/2009 9/27/2009 1/31/2006 eZ Publish 2flex TikiWiki System Mambo Joomla! eZ Publish Xaraya Bricolage Drupal 6.10 CMS/Groupware 360 Web Manager Requirements 4.6.1 1.5.10 4.1.3 and 1.1.5 1.10 3.2 4Steps2Web 4.0.6 ABO.CMS Application Server Apache Apache CGI Other Other Apache Apache Absolut Engine CMS/news publishing 30EUR + system Open-Source Approximate Cost Free Free Free VAT per Free Free (Free) Academic Portal domain AccelSite CMS Database MySQL MySQL MySQL MySQL MySQL MySQL Postgres Accessify WCMS Open Open Open Open Open License Open Source Open Source AccuCMS Source Source Source Source Source Platform Platform Platform Platform Platform Platform Accura Site CMS Operating System *nix Only Independent Independent Independent Independent Independent Independent ACM Ariadne Content Manager Programming Language PHP PHP PHP PHP PHP PHP Perl acms Root Access Yes No No No No No Yes ActivePortail Shell Access Yes No No No No No Yes activeWeb contentserver Web Server Apache Apache -

A Framework for Ontology-Based Library Data Generation, Access and Exploitation

Universidad Politécnica de Madrid Departamento de Inteligencia Artificial DOCTORADO EN INTELIGENCIA ARTIFICIAL A framework for ontology-based library data generation, access and exploitation Doctoral Dissertation of: Daniel Vila-Suero Advisors: Prof. Asunción Gómez-Pérez Dr. Jorge Gracia 2 i To Adelina, Gustavo, Pablo and Amélie Madrid, July 2016 ii Abstract Historically, libraries have been responsible for storing, preserving, cata- loguing and making available to the public large collections of information re- sources. In order to classify and organize these collections, the library commu- nity has developed several standards for the production, storage and communica- tion of data describing different aspects of library knowledge assets. However, as we will argue in this thesis, most of the current practices and standards available are limited in their ability to integrate library data within the largest information network ever created: the World Wide Web (WWW). This thesis aims at providing theoretical foundations and technical solutions to tackle some of the challenges in bridging the gap between these two areas: library science and technologies, and the Web of Data. The investigation of these aspects has been tackled with a combination of theoretical, technological and empirical approaches. Moreover, the research presented in this thesis has been largely applied and deployed to sustain a large online data service of the National Library of Spain: datos.bne.es. Specifically, this thesis proposes and eval- uates several constructs, languages, models and methods with the objective of transforming and publishing library catalogue data using semantic technologies and ontologies. In this thesis, we introduce marimba-framework, an ontology- based library data framework, that encompasses these constructs, languages, mod- els and methods. -

Flemish Public Libraries – Digital Library Systems Architecture Study 10-Dec-13

Flemish Public Libraries – Digital Library Systems Architecture Study 10-Dec-13 Flemish Public Libraries - Digital Library Systems Architecture Study Last update: 27 NOV 2013 Researchers: Jean-François Declercq Rosemie Callewaert François Vermaut Ordered by: Bibnet vzw Vereniging van de Vlaamse Provincies Vlaamse Gemeenschapscommissie van het Brussels Hoofdstedelijk Gewest 2013-11-27-DigitalLibrarySystemArchitecture p. 1 / 163 Flemish Public Libraries – Digital Library Systems Architecture Study 10-Dec-13 Table of contents 1 Introduction ............................................................................................................................... 6 1.1 Definition of a Digital Library ............................................................................................. 6 1.2 Study Objectives ................................................................................................................ 7 1.3 Study Approach ................................................................................................................. 7 1.4 Study timeline .................................................................................................................... 8 1.5 Study Steering Committee ................................................................................................. 9 2 ICT Systems Architecture Concepts ......................................................................................... 10 2.1 Enterprise Architecture Model ....................................................................................... -

FI-WARE Product Vision Front Page Ref

FI-WARE Product Vision front page Ref. Ares(2011)1227415 - 17/11/20111 FI-WARE Product Vision front page Large-scale Integrated Project (IP) D2.2: FI-WARE High-level Description. Project acronym: FI-WARE Project full title: Future Internet Core Platform Contract No.: 285248 Strategic Objective: FI.ICT-2011.1.7 Technology foundation: Future Internet Core Platform Project Document Number: ICT-2011-FI-285248-WP2-D2.2b Project Document Date: 15 Nov. 2011 Deliverable Type and Security: Public Author: FI-WARE Consortium Contributors: FI-WARE Consortium. Abstract: This deliverable provides a high-level description of FI-WARE which can help to understand its functional scope and approach towards materialization until a first release of the FI-WARE Architecture is officially released. Keyword list: FI-WARE, PPP, Future Internet Core Platform/Technology Foundation, Cloud, Service Delivery, Future Networks, Internet of Things, Internet of Services, Open APIs Contents Articles FI-WARE Product Vision front page 1 FI-WARE Product Vision 2 Overall FI-WARE Vision 3 FI-WARE Cloud Hosting 13 FI-WARE Data/Context Management 43 FI-WARE Internet of Things (IoT) Services Enablement 94 FI-WARE Applications/Services Ecosystem and Delivery Framework 117 FI-WARE Security 161 FI-WARE Interface to Networks and Devices (I2ND) 186 Materializing Cloud Hosting in FI-WARE 217 Crowbar 224 XCAT 225 OpenStack Nova 226 System Pools 227 ISAAC 228 RESERVOIR 229 Trusted Compute Pools 230 Open Cloud Computing Interface (OCCI) 231 OpenStack Glance 232 OpenStack Quantum 233 Open -

Lisa Cant Final Draft of Senior Thesis Professor Senocak 04/12/2012

Lisa Cant Final Draft of Senior Thesis Professor Senocak C4997 04/12/2012 How the Preservation of Archives During WWII Led to a Radical Reformation of Strategic Intelligence Efforts Captured documents invariably furnish important and reliable information concerning the enemy which makes it possible to draw conclusions as to his organization, strength, and intentions and which may facilitate our war effort materially. -Captured German Order of the Day, as quoted in MIRS History.1 In 1943, in the midst of World War Two, the Allies established what was perhaps the most unusual and unexpected army unit of the war: the Monuments, Fine Arts and Archives army unit (MFA&A), created not so much to further the war effort but specifically to address the fate of culturally significant objects. The unit notably placed archivists and art specialists within advancing American and British army units.2 Drawn from existing army divisions these volunteers had as their mission the safeguarding of works of art, monumental buildings, and— more significantly for this study—archives, for the preserved archives ultimately produced intelligence that was valuable both to the ongoing war effort and for the post-war administration of Allied occupied Germany. In order to achieve their mission effectively, the embedded archivists had to be on the front lines, as this was where the most damage could be expected to happen. This was also incidentally where the freshest information could be found. This paper will focus on the archives, what they produced, and what happened to them once they were captured. Although the MFA&A division was initially created to find looted objects and protect culturally relevant material including archives, the Military Intelligence Research Section (MIRS), a joint British and American program, recognized the possible intelligence benefits that 1 AGAR-S doc.