Eyetoy - EDA385 Project Report

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


EyeToy® USB Camera

EyeToy® USB Camera. Available: Now. Genre: Entertainment. Accessories: Reference no.: SCUS-97600. Audience: Everyone. EyeToy® USB Camera (for PlayStation®2). UPC: 7-11719-76000-9. EyeToy® USB Camera: be the star of the game. What is EyeToy? EyeToy is a technology exclusive to PlayStation®2 that offers a simple, intuitive and fun way to control games, interact with friends, and more. The EyeToy technology in the EyeToy USB Camera (for PlayStation®2) provides motion tracking, light sensors and a built-in microphone for recording and detecting audio. It can be used as a camera to take photos, record video and show the players on the TV**. Connect the EyeToy USB Camera (for PlayStation®2) to the PlayStation®2 system through one of the USB ports and insert an EyeToy-compatible game to start having fun. EyeToy® includes: • The exclusive EyeToy® USB Camera, which features motion tracking, light-detection technology and a built-in microphone. Key features: • The revolutionary EyeToy® USB Camera turns you into the star of the game • Motion-tracking technology: body movement is translated immediately into on-screen interaction • Broad appeal: gameplay is so intuitive and simple that EyeToy® appeals to all audiences • Intuitive gameplay: no controller is needed; everyone can have fun with EyeToy®. No previous video game experience is required • Video messages: record up to 60 seconds of personalized video messages on your memory card (8MB) (for PlayStation®2)*. How does it work? The EyeToy® USB Camera simply connects to one of the two USB ports on the front of the PlayStation®2 console.

Du Mutisme Au Dialogue

École Nationale Supérieure Louis Lumière, Sound class of 2015. Du mutisme au dialogue: Les interactions vocales dans le jeu vidéo (From Muteness to Dialogue: Vocal Interactions in Video Games). Practical part: v0x. Final-year thesis. Author: Charles MEYER. Internal supervisor: Thierry CODUYS. External supervisor: Isabelle BALLET. Reviewer: Claude GAZEAU. Academic year 2014-2015. Thesis defended on June 15, 2015. Acknowledgments: I warmly thank my two thesis supervisors for their commitment, their trust and their high standards. I especially thank Nicolas GIDON, without whom the practical part of this thesis would have been more time-consuming and complex to carry out. I thank and salute Nicolas FOURNIER and Baptiste PALACIN, whose work and kindness were a source of inspiration and determination. I also thank my mother, my aunt, Jordy, Julien and Julien (with apologies to Julien), Timothée and my friends for their unfailing support. Thanks to Ms. VALOUR, Mr. COLLET and Mr. FARBRÈGES, as well as to their students. Finally, thanks to From Software and NetherRealm Studios for their games, which were a welcome outlet. Abstract: This master's thesis studies vocal interactions in video games. It is not limited to a historical study of how voice has evolved within video games; it also proposes a theoretical formalization built around three essential concepts: Mechanics, Narration and Immersion. From these three concepts follow three types of voice: system voices, narrative voices (linear and non-linear) and ambient voices. Extending this study, and drawing on the work carried out in the experimental and practical parts of this thesis, which led to the creation of v0x, a video game based on spectral analysis of the player's voice, we propose an extension of this theory of video game vocality that brings the player's own voice into this framework.

Terms & Conditions for Warranty Service for Playstation Selected

Terms & Conditions for Warranty Service for PlayStation selected Peripherals* This Warranty is valid for PlayStation selected Peripherals* (“the Product”) as specified here excluding PlayStation software. 1. Sony Computer Entertainment Hong Kong Limited ("SCEH") shall subject to the Terms and Conditions for Warranty Service, cause Telecom Service One Limited ("TSO") to provide warranty to the Product for a period of one (1) year from the original purchase date of the Product. TSO shall at its discretion, either repair or replace the Product with the equivalent Product free of charge, where the Product is faulty due to defective materials or workmanship. Any defective or additional parts of the Product which are replaced /removed by TSO in the course of repairing, if any, shall be the property of TSO. Even if TSO repairs or replaces the Product, its original one (1) year warranty term is not extended. 2. This Warranty is only valid: a) in Hong Kong (all peripherals) and Macau ( peripherals of PlayStation®Portable only ) and cannot be exchanged for a Manufacturer's Limited Warranty (Tourist Warranty); b) provided that the original purchase receipt or invoice indicating the date of purchase and retailer's name (and has not been altered or defaced since the date of original purchase) and the complete package of the Product bearing the complete warranty label, are presented together with the faulty Product; and c) when the factory-applied model number has not been altered, defaced or removed from the Product. 3. This Warranty does not cover -

SLEH-00448 Troubleshooting

PlayStation®Eye Camera (Cámara PlayStation®Eye / Câmera PlayStation®Eye). Instruction Manual (Manual de instrucciones / Manual de Instruções). SLEH-00448. PlayStation®Move components: PlayStation®Eye camera, PlayStation®3 console, PlayStation®Move motion controller. Tips: • Make sure the background behind you is as still as possible, since movement in the background can interfere with play. • Make sure you have enough room to play. Watch out for shelves, doors, walls, animals and, above all, other people. For more information about the voice/video chat feature of the PlayStation®Eye camera, refer to the PS3™ console user's guide. Troubleshooting (Spanish section): Refer to this section if you have any problem using this product. If the problem persists, visit our page www.latam.playstation.com or call the customer service line for your country, which is listed in the warranty policy that accompanies this product. The PlayStation®Eye camera does not recognize your movements. • Make sure there are no flickering lights and that you are evenly lit from the front. • There may be a problem with the lighting in your room. • If possible, avoid energy-saving bulbs and fluorescent tubes, and turn on any other additional light. The on-screen buttons activate by themselves. • There may be movement in the background behind you. Make sure the background behind you is as still as possible. • If there is a window in the background, consider closing the blinds and using artificial light. Your on-screen image appears too bright or too dark. • The lighting in your room is not ideal.

Initial Usability Assessment of Off-The-Shelf Video Game Consoles for Clinical Game-Based Motor Rehabilitation

Initial usability assessment of off-the-shelf video game consoles for clinical game-based motor rehabilitation. B. Lange, S. Flynn and A. Rizzo, Institute for Creative Technologies, University of Southern California, Marina Del Rey, CA 90292, USA. Aims/background: Off-the-shelf games for consoles such as the Nintendo Wii, Nintendo WiiFit and Sony PlayStation 2 EyeToy have been developed and tested for the purpose of entertainment. Many clinics are adopting the use of these off-the-shelf devices for exercise, social interaction and rehabilitation because they are affordable, accessible and can be used within the clinic and home. Our group carried out initial usability evaluations for these off-the-shelf games and a prototype game (using an off-the-shelf device) specifically developed for people with disabilities. Methods: A series of studies have been undertaken through formative and summative evaluation and focus group research with a sample of people recovering from spinal cord injury, traumatic brain injury and stroke. Findings from two studies are presented. Following a demonstration and trial of the devices, observational and questionnaire data were collected to determine participants’ perception of each system’s usability, appeal and enjoyment. Results: The first study involved evaluation and focus group discussions of seven participants (two females, five males) with SCI (n=4) and CVA (n=3). Findings indicated that interaction with the EyeToy interface appeared to be more intuitive than the use of the Wii-mote interaction device, although some participants had difficulty navigating the menu of the PlayStation EyeToy. The second study involved evaluation of six participants (SCI = 4 males, TBI = 1 male, CVA = 1 female), aged between 25 and 58 years.

An Assessment of Mobile Fitness Games

An Assessment of Mobile Fitness Games. An Interactive Qualifying Project by Alex Carli-Dorsey, James Jackman and Nicholas Massa. Date: 01/30/2015. Report submitted to: Bengisu Tulu and Emmanuel Agu, Worcester Polytechnic Institute. This report represents work of WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. For more information about the projects program at WPI, see http://www.wpi.edu/Academics/Projects. Abstract: The purpose of this project was to find, document, and review mobile fitness games from the Android and iTunes marketplace, with a focus on those that encourage physical exercise. Using a variety of marketplaces and web searches, we, among a group of recruited and willing participants under study, played and reviewed Android and iPhone games that featured movement as an integral part of the game. We reported how effective the game is for fitness, and how engaging the content is for players. We provided reviews that can be used by: 1) Individuals seeking an enjoyable method of achieving a certain level of fitness, and 2) Game developers hoping to produce a compelling mobile fitness game of their own. Table of Contents: Abstract; 1. Introduction ...

Viewing Friendly

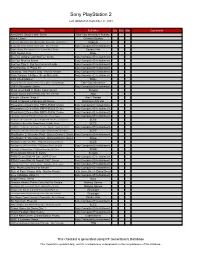

Sony PlayStation 2. Last updated on September 27, 2021.

| Title | Publisher | Qty | Box | Man | Comments |
|---|---|---|---|---|---|
| Army Men: Sarge's War: Demo | Take-Two Interactive Softwa... | | | | |
| Black: Demo | Criterion Studios | | | | |
| Capcom vs. SNK 2: Mark of the Millenium 2001: Promo Only - Not for Resale | Capcom | | | | |
| Destruction Derby Arenas: Demo Only - Not for Resale | Sony Computer Entertainment... | | | | |
| Dragon Quest: The Journey of the Cursed King: Demo | Square Enix | | | | |
| DVD Region Free | Blaze | | | | |
| EyeToy: Antigrav and SpyToy: Demo | Sony Computer Entertainment... | | | | |
| EyeToy: Monkey Mania | Sony Computer Entertainment... | | | | |
| EyeToy: Play 3: EyeToy Camera Bundle | Sony Computer Entertainment... | | | | |
| Final Fantasy X: Press Kit | Sony Computer Entertainment... | | | | |
| Getaway, The: Promo Only - Not for Resale | Sony Computer Entertainment... | | | | |
| Gran Turismo 3 A-Spec: Press Materials | Sony Computer Entertainment... | | | | |
| GTA Cheat Master | Blaze | | | | |
| Haunted Mansion, Disney's The: Promo Only - Not for Resale | Take-Two Interactive | | | | |
| JAK II: Renegade: Demo | Sony Computer Entertainment... | | | | |
| Metal Gear Solid 3: Snake Eater: Demo | Konami | | | | |
| Musashi: Samurai Legend: Promo Only - Not for Resale | Atari | | | | |
| Naruto: Ultimate Ninja 3 | Atari / Bandai | | | | |
| Need for Speed: Underground: Demo | Electronic Arts Ltd. | | | | |
| Playstation 2 Demo Disc PBPX-95204: Demo | Sony Computer Entertainment... | | | | |
| Playstation 2 Demo Disc PBPX-95205: Demo | Sony Computer Entertainment... | | | | |
| Playstation 2 Demo Disc PBPX-95506: Demo | Sony Computer Entertainment... | | | | |
| PlayStation 2 Demo Disc PBPX-95514: Demo Only - Not for Resale | Sony Computer Entertainment... | | | | |
| PlayStation 2 Demo Disc: Bonus Demo 10 (Old) + Network Access Disc: Demo | SCEE | | | | |
| PlayStation 2 Demo Disc: Bonus Demo 12 (Old) : Demo | SCEE | | | | |
| PlayStation 2 Demo Disc: Bonus Demo 7 (15/16) + Network Access Disc: Demo | Sony Computer Entertainment... | | | | |
| PlayStation 2 Demo Disc: Bonus Demo 8 (Old) + Network Access Disc: Demo | SCEE | | | | |
| PlayStation 2: Welcome Pack - Bonus Demo 4: Demo | Sony Computer Entertainment.. | | | | |

Alternative User Interfaces in Videogaming

Alternative User Interfaces in Videogaming. Sabine Berger∗, Vienna University of Technology. Figure 1: Alternative Gaming Interfaces in use. Abstract: This state of the art report gives an overview of the most common alternative user interfaces for videogaming currently available on the market, including the Wii-Remote, the Nintendo DualScreen handheld, camera, microphone and musical instrument controllers, as well as dance mats and buzzer controllers. A short description with some technical data is given for each of them. Further, important aspects of videogaming are analysed on the basis of the previously discussed alternative gaming interfaces. Lastly, a short preview of possible or already announced future alternative gaming interfaces is given. [...] currently common ways of interacting with video gaming systems. The most common, widely used gaming interface is the game pad. The game pad, or simply controller, is a handheld device that is usually connected to a gaming console using a cable or a wireless connection such as Bluetooth (for example, used with the Xbox 360 controller). Its purpose is to accept input from the user and pass it to the console in order to control the events in games and/or the system settings of the device. For this there are several standard elements of a controller layout, called digital pads, analog sticks, trigger buttons, shoulder buttons and face/action buttons (see Figures figgamepad and 3), all of which are able to sense either digital or analog inputs from the user. Each analog stick may be moved in any direction around a horizontal 360° circle.

PLAYSTATION®2 SPECIAL COLOR MODEL “PEARL WHITE” to BECOME AVAILABLE for the SUMMER SEASON Limited Time Offer for the Summer Season in Japan

PLAYSTATION®2 SPECIAL COLOR MODEL “PEARL WHITE” TO BECOME AVAILABLE FOR THE SUMMER SEASON. Limited Time Offer for the Summer Season in Japan. Tokyo, July 1, 2004 – Sony Computer Entertainment Japan (SCEJ), a division of Sony Computer Entertainment Inc. responsible for business operations in Japan, announced today that PlayStation®2 “Pearl White” will become available in Japan on July 15th, 2004, as a special color model version limited for the summer season 2004. With a host of attractive software titles released by first and third party developers and publishers, cumulative production shipments of PlayStation 2 hardware reached about 17 million units in Japan, gaining strong support from a broad range of users regardless of gender and age. In the past, SCEJ released special model versions of PlayStation 2 for a limited time and volume to expand the enjoyment of PlayStation 2, and this offering has enjoyed wide popularity among users. In particular, “SAKURA”, a limited color model that was released in spring 2003, has received continuous requests after reaching its target shipment and was made available again as a limited model for spring season 2004. In Japan, white has been a very popular color, in strong demand among users. The new color version adopts a stylish “Pearl White”, with a pearly lustrous finish. It is an original color for the Japanese market that will be released as a limited time offer for the summer season. Separately sold Analog Controller DUALSHOCK®2, and Vertical Stand (for PlayStation 2) of the same color will also become available with this offering. Various easy-to-play software titles from EyeToy™ as well as PlayStation 2 the Best series are planned to be released for the summer season.

Game Developer | December 2010

CONTENTS.1110, Volume 17, Number 11.
POSTMORTEM: (p. 26) Ubisoft Montreal's Splinter Cell Conviction. Moving Splinter Cell's trademark stealth-based game play in an action oriented direction without undermining the series was a tricky problem for Ubisoft Montreal. The original game design for Conviction was radically different from the franchise's traditional mechanics and necessitated a mid-development reboot. Fortunately, clear direction and a well-tuned production pipeline kept the team from being knocked off balance by the shift. By Patrick Redding, Alex Parizeau, and Maxime Beland.
FEATURES: (p. 9) Fragged. Big explosions with lots of fragmentation and debris are a video game mainstay. But making them look convincingly unique is a tedious process for artists. Here, the authors describe a procedural technique for automatically generating fragmented meshes that can save both time and sanity. By Robert Perry and Peter Wilkins. (p. 15) Full Reactive Eyes Entertainment. Are the now-ubiquitous Quick Time Events in games a lazy way to keep players mashing buttons? Or, are they broadening the range of expression for game designers? Using examples from the past and present, this article looks to the future of QTEs.
DEPARTMENTS: (p. 2) Game Plan, by Brandon Sheffield [editorial]: Move Back to Kinect. (p. 4) Heads Up Display [news]: IGDA Leadership Forum, the Automatypewriter, and Bunten papers archived. (p. 6) 2010 Front Line Award Finalists [news]: The Award Finalists for Art, Audio, Game Engine, Middleware, Networking, and Production/Programming tools. (p. 33) Tool Box, by Tom Carroll [review]: Autodesk 3ds Max 2011. (p. 36) Pixel Pusher, by Steve Theodore [art]: The Balkans. (p. 38) The Inner Product, by Giacomino Veltri [programming]: Game Configuration at Crystal Lake. (p. 41) Aural Fixation, by Jesse Harlin [sound]: The Weight of Silence. (p. 42) Design of the Times, by Soren Johnson [design]: Stop Making Sense. (p. 44) Good Job! [career].

Design Materials O1.Pdf

Design Materials – Outcome 1. Computer Games: Design, Outcome 1, F915 10/11/12. Contents: The History of Gaming Consoles; Consoles Today; Portable Media Players; Control and Input Devices (Joysticks; Mouse; Keyboard; Steering Wheel; Gamepads; Paddle; Trackball ...)