Game Sound : an Introduction to the History, Theory, and Practice of Video Game Music and Sound Design / Karen Collins

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Name Region System Mapper Savestate Powerpak

| Name | Region | System | Mapper | Savestate | Powerpak | Savestate |
|---|---|---|---|---|---|---|
| '89 Dennou Kyuusei Uranai | Japan | Famicom | 1 | YES | Supported | YES |
| 10-Yard Fight | Japan | Famicom | 0 | YES | Supported | YES |
| 10-Yard Fight | USA | NES-NTSC | 0 | YES | Supported | YES |
| 1942 | Japan | Famicom | 0 | YES | Supported | YES |
| 1942 | USA | NES-NTSC | 0 | YES | Supported | YES |
| 1943: The Battle of Midway | USA | NES-NTSC | 2 | YES | Supported | YES |
| 1943: The Battle of Valhalla | Japan | Famicom | 2 | YES | Supported | YES |
| 2010 Street Fighter | Japan | Famicom | 4 | YES | Supported | YES |
| 3-D Battles of Worldrunner, The | USA | NES-NTSC | 2 | YES | Supported | YES |
| 4-nin Uchi Mahjong | Japan | Famicom | 0 | YES | Supported | YES |
| 6 in 1 | USA | NES-NTSC | 41 | NO | Supported | NO |
| 720° | USA | NES-NTSC | 1 | YES | Supported | YES |
| 8 Eyes | Japan | Famicom | 4 | YES | Supported | YES |
| 8 Eyes | USA | NES-NTSC | 4 | YES | Supported | YES |
| A la poursuite de l'Octobre Rouge | France | NES-PAL-B | 4 | YES | Supported | YES |
| ASO: Armored Scrum Object | Japan | Famicom | 3 | YES | Supported | YES |
| Aa Yakyuu Jinsei Icchokusen | Japan | Famicom | 4 | YES | Supported | YES |
| Abadox: The Deadly Inner War | USA | NES-NTSC | 1 | YES | Supported | YES |
| Action 52 | USA | NES-NTSC | 228 | NO | Supported | NO |
| Action in New York | UK | NES-PAL-A | 1 | YES | Supported | YES |
| Adan y Eva | Spain | NES-PAL | 3 | YES | Supported | YES |
| Addams Family, The | USA | NES-NTSC | 1 | YES | Supported | YES |
| Addams Family, The | France | NES-PAL-B | 1 | YES | Supported | YES |
| Addams Family, The | Scandinavia | NES-PAL-B | 1 | YES | Supported | YES |
| Addams Family, The | Spain | NES-PAL-B | 1 | YES | Supported | YES |
| Addams Family, The: Pugsley's Scavenger Hunt | USA | NES-NTSC | 1 | YES | Supported | YES |
| Addams Family, The: Pugsley's Scavenger Hunt | UK | NES-PAL-A | 1 | YES | Supported | YES |
| Addams Family, The: Pugsley's Scavenger Hunt | | | | | | |

-

THIEF PS4 Manual

See important health and safety warnings in the system Settings menu. GETTING STARTED PlayStation®4 system Starting a game: Before use, carefully read the instructions supplied with the PS4™ computer entertainment system. The documentation contains information on setting up and using your system as well as important safety information. Touch the (power) button of the PS4™ system to turn the system on. The power indicator blinks in blue, and then lights up in white. Insert the Thief disc with the label facing up into the disc slot. The game appears in the content area of the home screen. Select the software title in the PS4™ system’s home screen, and then press the S button. Refer to this manual for information on using the software. Quitting a game: Press and hold the p button, and then select [Close Application] on the screen that is displayed. Returning to the home screen from a game: To return to the home screen without quitting a game, press the p button. To resume playing the game, select it from the content area. Removing a disc: Touch the (eject) button after quitting the game. Trophies: Earn, compare and share trophies that you earn by making specific in-game accomplishments. Trophies access requires a Sony Entertainment Network account.

Default controls: Xxxxxx xxxxxx: left stick; Xxxxxx xxxxxx: R button; Xxxxxx xxxxxx: E button; Xxxxxx xxxxxx: W button; Xxxxxx xxxxxx: K button; Xxxxxx xxxxxx: right stick; Xxxxxx xxxxxx: N button; Xxxxxx xxxxxx (Xxxxxx xxxxxxx): H button; Xxxxxx xxxxxx (Xxxxxx xxxxxxx): J button; Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Q button; Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Click touchpad -

List of Nintendo NES Games Chase Bubble Bobble Part 2

List of Nintendo NES games Chase Bubble Bobble Part 2 Cabal International Cricket Color a Dinosaur Wayne's World Bandai Golf : Challenge Pebble Beach Nintendo World Championships 1990 Lode Runner Tecmo Cup : Football Game Teenage Mutant Ninja Turtles : Tournament Fighters Tecmo Bowl The Adventures of Rocky and Bullwinkle and Friends Metal Storm Cowboy Kid Archon - The Light And The Dark The Legend of Kage Championship Pool Remote Control Freedom Force Predator Town & Country Surf Designs : Thrilla's Surfari Kings of the Beach : Professional Beach Volleyball Ghoul School KickMaster Bad Dudes Dragon Ball : Le Secret du Dragon Cyber Stadium Series : Base Wars Urban Champion Dragon Warrior IV Bomberman King's Quest V The Three Stooges Bases Loaded 2: Second Season Overlord Rad Racer II The Bugs Bunny Birthday Blowout Joe & Mac Pro Sport Hockey Kid Niki : Radical Ninja Adventure Island II Soccer NFL Track & Field Star Voyager Teenage Mutant Ninja Turtles II : The Arcade Game Stack-Up Mappy-Land Gauntlet Silver Surfer Cybernoid - The Fighting Machine Wacky Races Circus Caper Code Name : Viper F-117A : Stealth Fighter Flintstones - The Surprise At Dinosaur Peak, The Back To The Future Dick Tracy Magic Johnson's Fast Break Tombs & Treasure Dynablaster Ultima : Quest of the Avatar Renegade Super Cars Videomation Super Spike V'Ball + Nintendo World Cup Dungeon Magic : Sword of the Elements Ultima : Exodus Baseball Stars II The Great Waldo Search Rollerball Dash Galaxy In The Alien Asylum Power Punch II Family Feud Magician Destination Earthstar Captain America and the Avengers Cyberball Karnov Amagon Widget Shooting Range Roger Clemens' MVP Baseball Bill Elliott's NASCAR Challenge Garry Kitchen's BattleTank Al Unser Jr. -

EyeToy® USB Camera

EyeToy® USB Camera Available: Now Genre: Entertainment Accessories: Reference no.: SCUS-97600 Audience: Everyone EyeToy® USB Camera (for PlayStation®2) UPC: 7-11719-76000-9 EyeToy® USB Camera Be the star of the game What is EyeToy? EyeToy is a technology exclusive to PlayStation®2 that offers a simple, intuitive, and fun way to control games, interact with friends, and more. The EyeToy technology in the EyeToy USB Camera (for PlayStation®2) has motion tracking, light sensors, and a built-in microphone for recording and detecting audio. It can be used as a camera to take photos, record video, and show players on the TV**. Connect the EyeToy USB Camera (for PlayStation®2) to the PlayStation®2 system through one of the USB ports and insert an EyeToy-compatible game to start having fun. EyeToy® includes: • The exclusive EyeToy® USB Camera, which features motion tracking, light-detection technology, and a built-in microphone How does it work? The EyeToy® USB Camera simply connects to one of the two USB ports on the front of the PlayStation®2 console. Key features • The revolutionary EyeToy® USB Camera turns you into the star of the game • Motion-tracking technology: Body movement is immediately translated into on-screen interaction • Broad appeal: Gameplay is so intuitive and simple that EyeToy® appeals to all audiences • Intuitive gameplay: No controller is needed; everyone can have fun with EyeToy®. No prior video game experience is required • Video messages: Record up to 60 seconds of personalized video messages on your memory card (8MB) (for PlayStation®2)* -

General MIDI 2 February 6, 2007

General MIDI 2 February 6, 2007 Version 1.2a Including PAN Formula, MIDI Tuning Changes and Mod Depth Range Recommendation Published By: The MIDI Manufacturers Association Los Angeles, CA PREFACE Abstract: General MIDI 2 is a group of extensions made to General MIDI (Level 1) allowing for expanded standardized control of MIDI devices. This increased functionality includes extended sound sets and additional performance and control parameters. New MIDI Messages: Numerous new MIDI messages were defined specifically to support the desired performance features of General MIDI 2. The message syntax and details are published in the Complete MIDI 1.0 Detailed Specification version 1999 (and later): MIDI Tuning Bank/Dump Extensions (C/A-020) Scale/Octave Tuning (C/A-021) Controller Destination Setting (C/A-022) Key-Based Instrument Control SysEx Messages (C/A-023) Global Parameter Control SysEx Message (C/A-024) Master Fine/Coarse Tuning SysEx Messages (C/A-025) Modulation Depth Range RPN (C/A-026) General MIDI 2 Message: Universal Non-Realtime System Exclusive sub-ID #2 under General MIDI sub-ID #1 is reserved for General MIDI 2 system messages (see page 21 herein). Changes from version 1.0 to version 1.1: o Section 3.3.5: changed PAN formula per RP-036 o Section 4.7: Added new recommendations per RP-037 Changes from version 1.1 to version 1.2: o Section 3.4.4: added recommendation for Mod Depth Range Response per RP-045 o V 1.2a is reformatted for PDF distribution General MIDI 2 Specification (Recommended Practice) RP-024 (incorporating changes per RP-036, RP-037, and RP-045) Copyright ©1999, 2003, and 2007 MIDI Manufacturers Association Incorporated ALL RIGHTS RESERVED. -
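The note about a Universal Non-Realtime System Exclusive message under the General MIDI sub-ID can be illustrated with a small sketch. The byte values below are not given in the excerpt; they are taken here from the published MIDI 1.0 and General MIDI 2 specifications as commonly documented (sub-ID #1 `0x09` for General MIDI, sub-ID #2 `0x03` for GM2 System On, device ID `0x7F` for broadcast). The function name `gm2_system_on` is hypothetical, and the result should be checked against the specification itself before use.

```python
# Sketch only: byte values are assumptions drawn from the published MIDI
# specifications, not from the excerpt above.
SYSEX_START = 0xF0             # start of a System Exclusive message
SYSEX_END = 0xF7               # end of exclusive (EOX)
UNIVERSAL_NON_REALTIME = 0x7E  # Universal Non-Realtime header byte
SUB_ID1_GENERAL_MIDI = 0x09    # sub-ID #1: General MIDI
SUB_ID2_GM2_SYSTEM_ON = 0x03   # sub-ID #2: General MIDI 2 System On
DEVICE_ID_ALL = 0x7F           # broadcast to every device on the port


def gm2_system_on(device_id: int = DEVICE_ID_ALL) -> bytes:
    """Build a six-byte GM2 System On message (hypothetical helper)."""
    if not 0 <= device_id <= 0x7F:
        raise ValueError("device ID must be a 7-bit value (0-127)")
    return bytes([
        SYSEX_START,
        UNIVERSAL_NON_REALTIME,
        device_id,
        SUB_ID1_GENERAL_MIDI,
        SUB_ID2_GM2_SYSTEM_ON,
        SYSEX_END,
    ])


if __name__ == "__main__":
    # Print the message as space-separated hex bytes.
    print(gm2_system_on().hex(" "))
```

The helper only assembles bytes; sending them to a device would need a MIDI I/O library, which is outside this sketch.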

The Cross Over Talk

Programming Composers and Composing Programmers
Victoria Dorn – Sony Interactive Entertainment

About Me
• Berklee College of Music (2013) – Sound Design/Composition
• Oregon State University (2018) – Computer Science
• Audio Engineering Intern -> Audio Engineer -> Software Engineer
• Associate Software Engineer in Research and Development at PlayStation
• 3D Audio for PS4 (PlayStation VR, Platinum Wireless Headset)
• Testing, general research, recording, and developer support

Agenda
• Programming tips/tricks for the audio person
• Audio and sound tips/tricks for the programming person
• Creating a dialog and establishing vocabulary
• Raise the level of common understanding between sound people and programmers
• Q&A

Media Files Used in This Presentation
• Can be found here
• https://drive.google.com/drive/folders/1FdHR4e3R4p59t7ZxAU7pyMkCdaxPqbKl?usp=sharing

Programming Tips for the ?!?!?! Audio Folks
"Binary Code" by Cncplayer is licensed under CC BY-SA 3.0

Music/Audio Programming
DAWs = Programming Language(s)
Musical Motives = Programming Logic
Instruments = APIs or Libraries

Where to Start??
• Learning the Language
• Pseudocode
• Scripting

Learning the Language
• Programming Fundamentals
• Variables (a value with a name): soundVolume = 10
• Loops (works just like looping a sound actually): for (loopCount = 0; while loopCount < 10; increase loopCount by 1){ play audio file one time }
• If/else logic (if this is happening do this, else do something different): if (the sky is blue){ play bird sounds } else{ play rain sounds -
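The three fundamentals in the slide pseudocode (a variable, a loop, and if/else logic) can be written as runnable Python. This is a minimal sketch, not code from the presentation: `play` and `run_demo` are hypothetical names, and `play` only records what would be played rather than calling a real audio API.

```python
def play(sound_name, log):
    """Hypothetical stand-in for an audio call: records the sound name."""
    log.append(sound_name)


def run_demo(sky_is_blue):
    log = []

    # Variable: a value with a name.
    sound_volume = 10

    # Loop: play the same audio file ten times, like looping a sound.
    for loop_count in range(10):
        play("audio file", log)

    # If/else: choose one of two sounds depending on a condition.
    if sky_is_blue:
        play("bird sounds", log)
    else:
        play("rain sounds", log)

    return sound_volume, log


if __name__ == "__main__":
    volume, played = run_demo(sky_is_blue=True)
    print(volume, len(played), played[-1])
```

Keeping the audio call behind a small function such as `play` means the same logic could later be pointed at a real playback library without changing the loop or the condition.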

Sega Megadrive European PAL Checklist

The Sega Megadrive European PAL Checklist □ 688 Attack Sub □ Crack Down □ Gunship □ Aaahh!!! Real Monsters □ Crue Ball □ Gunstar Heroes □ Addams Family Values □ Cutthroat Island □ Gynoug □ Addams Family, The □ Cyberball □ Hard Drivin' □ Adventures of Batman & Robin, The □ Cyborg Justice □ Hardball (Box) □ Adventures of Mighty Max, The □ Daffy Duck in Hollywood □ Hardball III □ Aero the Acro-Bat □ Dark Castle □ Hardball '94 □ Aero the Acro-Bat 2 □ David Robinson's Supreme Court □ Haunting, The □ After Burner 2 □ Davis Cup World Tour □ Havoc □ Aladdin □ Death and Return of Superman, The □ Hellfire □ Alex Kidd in the Enchanted Castle □ Decap Attack □ Herzog Zwei □ Alien 3 □ Demolition Man □ Home Alone □ Alien Soldier □ Desert Demolition starring Road Runner □ Hook □ Alien Storm □ Desert Strike: Return to the Gulf □ Hurricanes □ Alisia Dragoon □ Dick Tracy □ Hyperdunk □ Altered Beast □ Dino Dini's Soccer □ Immortal, The □ Andre Agassi Tennis □ Disney Collection, The □ Incredible Crash Dummies, The □ Animaniacs □ DJ Boy □ Incredible Hulk, The □ Another World □ Donald in Maui Mallard □ Indiana Jones and the Last Crusade □ Aquatic Games starring James Pond □ Double Clutch □ International Rugby □ Arcade Classics □ Double Dragon (Box) □ International Sensible Soccer □ Arch Rivals □ Double Dragon 3: The Arcade Game □ International Superstar Soccer Deluxe □ Ariel: The Little Mermaid □ Double Hits: Micro Machines & Psycho Pinball □ International Tour Tennis □ Arnold Palmer Tournament Golf □ Dr. Robotnik's Mean Bean Machine □ Izzy's Quest for the -

ELECTRONIC ARTS INC. (Exact Name of Registrant As Specified in Its Charter)

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K [X] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15 (d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended March 31, 2003 OR [ ] TRANSITION REPORT PURSUANT TO SECTION 13 OR 15 (d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to Commission File No. 0-17948 ELECTRONIC ARTS INC. (Exact name of Registrant as specified in its charter) Delaware (State or other jurisdiction of incorporation or organization) 94-2838567 (I.R.S. Employer Identification No.) 209 Redwood Shores Parkway Redwood City, California 94065 (Address of principal executive offices) (Zip Code) Registrant's telephone number, including area code: (650) 628-1500 Securities registered pursuant to Section 12(b) of the Act: None Securities registered pursuant to Section 12(g) of the Act: Class A Common Stock, $.01 par value (Title of class) Indicate by check mark whether the Registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the Registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. YES [X] NO [ ] Indicate by check mark if disclosure of delinquent filers pursuant to Item 405 of Regulation S-K is not contained herein, and will not be contained, to the best of registrant's knowledge, in definitive proxy or information statements incorporated by reference in Part III of this Form 10-K or any amendment to this Form 10-K. -

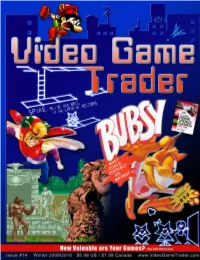

Video Game Trader Magazine & Price Guide

Winter 2009/2010 Issue #14 4 Trading Thoughts 20 Hidden Gems Blue's Journey (Neo Geo) Video Game Flashback Dragon's Lair (NES) Hidden Gems 8 NES Archives p. 20 19 Page Turners Wrecking Crew Vintage Games 9 Retro Reviews 40 Made in Japan Coin-Op.TV Volume 2 (DVD) Twinkle Star Sprites Alf (Sega Master System) VectrexMad! AutoFire Dongle (Vectrex) 41 Video Game Programming ROM Hacking Part 2 11 Homebrew Reviews Ultimate Frogger Championship (NES) 42 Six Feet Under Phantasm (Atari 2600) Accessories Mad Bodies (Atari Jaguar) 44 Just 4 Qix Qix 46 Press Start Comic Michael Thomasson’s Just 4 Qix 5 Bubsy: What Could Possibly Go Wrong? p. 44 6 Spike: Alive and Well in the land of Vectors 14 Special Book Preview: Classic Home Video Games (1985-1988) 43 Token Appreciation Altered Beast 22 Prices for popular consoles from the Atari 2600 to Sony PlayStation. Now includes 3DO & Complete Game Lists! Six Feet Under p. 42

Advertise with Video Game Trader! Multiple run discounts of up to 25% apply when you run your ad for consecutive months. Email for full details or visit our advertising page on videogametrader.com. Video Game Trader can help create your advertisement. Email us with your requirements for a price quote. NEW!! Low, Full Color, Advertising Rates! Ad Deadlines are 12 Noon Eastern: December 1, 2009 (for Issue #15 Spring 2010); February 1, 2009 (for Issue #16 Summer 2010).

THIS ISSUE'S CONTRIBUTORS: Dustin Gulley, Brett Weiss, Jim Combs, Pat “Coldguy”, Kevin H, Gerard Buchko, Agents J & K, Dick Ward, Michael Thomasson, John Hancock, P. Ian Nicholson, Peter G -

Disruptive Innovation and Internationalization Strategies: the Case of the Videogame Industry by Shoma Patnaik

HEC MONTRÉAL Disruptive Innovation and Internationalization Strategies: The Case of the Videogame Industry by Shoma Patnaik Management Sciences (International Business option) Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Management (M. Sc.) December 2017 © Shoma Patnaik, 2017 Abstract This thesis analyzes two trends that are highly relevant in today's business world: disruptive innovation and internationalization. Disruptive innovation in particular has become a buzzword. However, it has not been studied enough in academic research, especially in the context of international business. Moreover, the theory of disruptive innovation is frequently misunderstood and misapplied. This thesis therefore aims to fill these gaps, not only by examining in detail the theory of disruptive innovation, its theoretical antecedents, and its links with internationalization, but also, by situating the study in the video game industry, by uncovering new industry trends and practices through an examination of the rise of mobile and online games. The thesis begins by mapping the links between disruptive innovation and internationalization, on the basis that the search for new markets is a critical element of disruptive innovation theory. Formulating propositions drawn from the academic literature, I postulate that "disruptive" firms will internationalize faster than traditional firms. In addition, they will find it easier to overcome the obstacle of distance between markets and will enter unknown and untapped domains. -

From Muteness to Dialogue

École Nationale Supérieure Louis Lumière Sound Class of 2015 From Muteness to Dialogue: Vocal Interactions in Video Games Practical component: v0x Final-year thesis Author: Charles MEYER Internal supervisor: Thierry CODUYS External supervisor: Isabelle BALLET Reviewer: Claude GAZEAU Academic year 2014-2015 Thesis defended on June 15, 2015 Acknowledgments: I would like to warmly thank my two thesis supervisors for their involvement, their trust, and their high standards. I am especially grateful to Nicolas GIDON, without whom carrying out the practical component of this thesis would have been more time-consuming and complex. I thank and salute Nicolas FOURNIER and Baptiste PALACIN, whose work and kindness were a source of inspiration and determination. I also thank my mother, my aunt, Jordy, Julien and Julien (with apologies to Julien), Timothée, and my friends for their unwavering support. Thanks to Ms. VALOUR, Mr. COLLET, Mr. FARBRÈGES, and their students. Finally, thanks to From Software and NetherRealm Studios for their games, which were a welcome outlet. Abstract This master's thesis studies vocal interactions in video games. It is not limited, however, to a historical study of how vocality has evolved within video games; it proposes a theoretical formalization built around three essential concepts: Mechanics, Narration, and Immersion. From these three concepts follow three types of voice: system voices, narrative voices (linear and non-linear), and ambient voices. Extending this study, and drawing on the work carried out in the experimental and practical components of this thesis, which led to the creation of v0x, a video game based on spectral analysis of the player's voice, we propose an extension of this theory of video game vocality to bring the player's voice into this framework. -

They Played the Merger Game: a Retrospective Analysis in the UK Videogames Market

No 113 They Played the Merger Game: A Retrospective Analysis in the UK Videogames Market Luca Aguzzoni, Elena Argentesi, Paolo Buccirossi, Lorenzo Ciari, Tomaso Duso, Massimo Tognoni, Cristiana Vitale October 2013 IMPRINT DICE DISCUSSION PAPER Published by düsseldorf university press (dup) on behalf of Heinrich‐Heine‐Universität Düsseldorf, Faculty of Economics, Düsseldorf Institute for Competition Economics (DICE), Universitätsstraße 1, 40225 Düsseldorf, Germany www.dice.hhu.de Editor: Prof. Dr. Hans‐Theo Normann Düsseldorf Institute for Competition Economics (DICE) Phone: +49(0) 211‐81‐15125, e‐mail: [email protected] DICE DISCUSSION PAPER All rights reserved. Düsseldorf, Germany, 2013 ISSN 2190‐9938 (online) – ISBN 978‐3‐86304‐112‐0 The working papers published in the Series constitute work in progress circulated to stimulate discussion and critical comments. Views expressed represent exclusively the authors’ own opinions and do not necessarily reflect those of the editor. They Played the Merger Game: A Retrospective Analysis in the UK Videogames Market Luca Aguzzoni Lear Elena Argentesi University of Bologna Paolo Buccirossi Lear Lorenzo Ciari European Bank for Reconstruction and Development Tomaso Duso Deutsches Institut für Wirtschaftsforschung (DIW Berlin) and Düsseldorf Institute for Competition Economics (DICE) Massimo Tognoni UK Competition Commission Cristiana Vitale OECD October 2013 Corresponding author: Elena Argentesi, Department of Economics, University of Bologna, Piazza Scaravilli 2, 40126 Bologna, Italy, Tel: + 39 051 2098661, Fax: +39 051 2098040, E-Mail: [email protected]. This paper is partially based on a research project we undertook for the UK Competition Commission (CC). We thank the CC’s staff for their support during the course of this study.