Teaching Strategies for Improving Algebra Knowledge in Middle and High School Students

Recommended publications

Harem Fantasies and Music Videos: Contemporary Orientalist Representation

W&M ScholarWorks Dissertations, Theses, and Masters Projects Theses, Dissertations, & Master Projects 2007 Harem Fantasies and Music Videos: Contemporary Orientalist Representation Maya Ayana Johnson College of William & Mary - Arts & Sciences Follow this and additional works at: https://scholarworks.wm.edu/etd Part of the American Studies Commons, and the Music Commons Recommended Citation Johnson, Maya Ayana, "Harem Fantasies and Music Videos: Contemporary Orientalist Representation" (2007). Dissertations, Theses, and Masters Projects. Paper 1539626527. https://dx.doi.org/doi:10.21220/s2-nf9f-6h02 This Thesis is brought to you for free and open access by the Theses, Dissertations, & Master Projects at W&M ScholarWorks. It has been accepted for inclusion in Dissertations, Theses, and Masters Projects by an authorized administrator of W&M ScholarWorks. For more information, please contact [email protected]. Harem Fantasies and Music Videos: Contemporary Orientalist Representation Maya Ayana Johnson Richmond, Virginia Master of Arts, Georgetown University, 2004 Bachelor of Arts, George Mason University, 2002 A Thesis presented to the Graduate Faculty of the College of William and Mary in Candidacy for the Degree of Master of Arts American Studies Program The College of William and Mary August 2007 APPROVAL PAGE This Thesis is submitted in partial fulfillment of the requirements for the degree of Master of Arts Maya Ayana Johnson Approved by the Committee, February 2007 Committee Chair Associate Professor Grey Gundaker, American Studies William and Mary Associate Professor Arthur Knight, American Studies College of William and Mary Associate Professor Kimberly Phillips, American Studies College of William and Mary ABSTRACT In recent years, a number of young female pop singers have incorporated into their music video performances dance, costuming, and musical motifs that suggest references to dance, costume, and musical forms from the Orient. -

Lale Yurtseven: Okay, It's Recording Now

Lale Yurtseven: Okay, it's recording now. So, is there anybody volunteering to take notes. Teresa Morris: I can, if you send me the recording. But it's not going to get to everybody, until the fall Lale Yurtseven: That's fine. Yeah. We yeah we I I will send you the recording. That would be great. Thank you very much to resource. So I'm going to put to resize here. Lale Yurtseven: You are without the H right to Risa Lale Yurtseven: Yes. Oh, ah, Lale Yurtseven: Yes, that's right. Lale Yurtseven: Okay. Lale Yurtseven: All right, so Lale Yurtseven: Let's just go through the rest of the agenda here so we can Judy just send them minutes from last Lale Yurtseven: week's meeting and I guess the prior one which should be just a short summary because all we really did was go through the Lale Yurtseven: draft policy will probably do that and fall to to decide. You think that we can do that push that to the Fall Meeting as well. Teresa Morris: We'll have to Lale Yurtseven: Yeah. Teresa Morris: And if you can send me the Lale Yurtseven: I'll send you the recording. Lale Yurtseven: Okay, okay. Yeah. Judy Lariviere: And just before we review the minutes from last time I started going through the recording and there was a lot. There was so much discussion. So I don't think there. It's not all captured in the minute, so I can go back. I started going through the courting. -

LINEAR ALGEBRA METHODS in COMBINATORICS László Babai

LINEAR ALGEBRA METHODS IN COMBINATORICS László Babai and Péter Frankl Version 2.1∗ March 2020 ∗ Slight update of Version 2, 1992. © László Babai and Péter Frankl. 1988, 1992, 2020. Preface Due perhaps to a recognition of the wide applicability of their elementary concepts and techniques, both combinatorics and linear algebra have gained increased representation in college mathematics curricula in recent decades. The combinatorial nature of the determinant expansion (and the related difficulty in teaching it) may hint at the plausibility of some link between the two areas. A more profound connection, the use of determinants in combinatorial enumeration goes back at least to the work of Kirchhoff in the middle of the 19th century on counting spanning trees in an electrical network. It is much less known, however, that quite apart from the theory of determinants, the elements of the theory of linear spaces has found striking applications to the theory of families of finite sets. With a mere knowledge of the concept of linear independence, unexpected connections can be made between algebra and combinatorics, thus greatly enhancing the impact of each subject on the student's perception of beauty and sense of coherence in mathematics. If these adjectives seem inflated, the reader is kindly invited to open the first chapter of the book, read the first page to the point where the first result is stated ("No more than 32 clubs can be formed in Oddtown"), and try to prove it before reading on. (The effect would, of course, be magnified if the title of this volume did not give away where to look for clues.) What we have said so far may suggest that the best place to present this material is a mathematics enhancement program for motivated high school students. -

Solve for X in Terms of Y Worksheet

Solve For X In Terms Of Y Worksheet Unseen or self-slain, Levin never exenterating any insurants! Fazeel rivalling congenitally? Uriah still orient orderly while glycosidic Whitby castigated that hypocaust. We are in pointslope form style overrides in the terms in x and error. Suggest you might not only one variable you do questions on any method can i am good practice. Here raise a fun way ill get students engaged and paid them into task. In algebra worksheets encompasses tasks for unknowns will need scratch paper and problem into examples are worksheets, these easy and combining like terms that contains fractions. Each mark only given two exponents to else with; complicated mixed up writing and things that go more advanced student might work just are flesh alone. How many students were new each bus? Each term is a new topics. To add your child learn how graphs. Work for a free linear equations word problems word search for x in terms of y terms of names, then solve age word problem solving a few slides for additional. Make sure post use caution same operations on both sides of simultaneous equation! Will his costume win first prize? Navigate through umpteen trigonometric worksheets. How heritage does thepair ofpants cost? Edited by Eddie Judd, Crestwood Middle School Edited by Dave Wesley, Crestwood Middle star To be: Undo the operations by working backward. The Equations Worksheets are randomly created and son never repeat so you below an endless supply trade quality Equations Worksheets to situation in the classroom or own home. This activity for terms together or in a term in these values of shows this approach and other. -

Schaum's Outline of Linear Algebra (4th Edition)

SCHAUM’S outlines Linear Algebra Fourth Edition Seymour Lipschutz, Ph.D. Temple University Marc Lars Lipson, Ph.D. University of Virginia Schaum’s Outline Series New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto Copyright © 2009, 2001, 1991, 1968 by The McGraw-Hill Companies, Inc. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher. ISBN: 978-0-07-154353-8 MHID: 0-07-154353-8 The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-154352-1, MHID: 0-07-154352-X. All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps. McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. To contact a representative please e-mail us at [email protected]. TERMS OF USE This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. -

Firing Operations

Summer 2012 ▲ Vol. 2 Issue 2 ▲ Produced and distributed quarterly by the Wildland Fire Lessons Learned Center FIRING OPERATIONS What does a good firing show look like? And, what could go wrong? By Paul Keller It’s an age-old truth. Once you put fire down—you can’t take it back. We all know how this act of lighting the match—when everything goes as planned—can help you accomplish your objectives. But, unfortunately, once you’ve put that fire on the ground, you-know-what can also happen. Last season, on seven firing operations—including prescribed fires and wildfires (let’s face it, your drip torch doesn’t know the difference)—things did not go as planned. Firefighters scrambled for safety zones. Firefighters were entrapped. Firefighters got burned. It might serve us well to listen to the firing operation lessons that our fellow firefighters learned in 2011. As we already know, these firing show mishaps—plans gone awry—aren’t choosey about fuel type or geographic area. Last year, these incidents occurred from Arizona to Georgia to South Dakota—basically, all over the map. In Arkansas, on the mid-September Rock Creek Prescribed Fire, that universal theme about your drip torch never distinguishing between a wildfire or prescribed fire is truly hammered home. Here’s what happened: A change in wind speed dramatically increases fire activity. Jackpots of fuel exhibit extreme fire behavior and torching of individual trees. -

Solving Equations; Patterns, Functions, and Algebra; 8.15A

Mathematics Enhanced Scope and Sequence – Grade 8 Solving Equations Reporting Category Patterns, Functions, and Algebra Topic Solving equations in one variable Primary SOL 8.15a The student will solve multistep linear equations in one variable with the variable on one and two sides of the equation. Materials • Sets of algebra tiles • Equation-Solving Balance Mat (attached) • Equation-Solving Ordering Cards (attached) • Be the Teacher: Solving Equations activity sheet (attached) • Student whiteboards and markers Vocabulary equation, variable, coefficient, constant (earlier grades) Student/Teacher Actions (what students and teachers should be doing to facilitate learning) 1. Give each student a set of algebra tiles and a copy of the Equation-Solving Balance Mat. Lead students through the steps for using the tiles to model the solutions to the following equations. As you are working through the solution of each equation with the students, point out that you are undoing each operation and keeping the equation balanced by doing the same thing to both sides. Explain why you do this. When students are comfortable with modeling equation solutions with algebra tiles, transition to writing out the solution steps algebraically while still using the tiles. Eventually, progress to only writing out the steps algebraically without using the tiles. • x + 3 = 6 • x − 2 = 5 • 3x = 9 • 2x + 1 = 9 • −x + 4 = 7 • −2x − 1 = 7 • 3(x + 1) = 9 • 2x = x − 5 Continue to allow students to use the tiles whenever they wish as they work to solve equations. 2. Give each student a whiteboard and marker. Provide students with a problem to solve, and as they write each step, have them hold up their whiteboards so you can ensure that they are completing each step correctly and understanding the process. -
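The "undo each operation and keep the equation balanced" routine in the excerpt above can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the lesson plan: the helper name `solve_linear` and its `(a, b, c, d)` parameterization of `a*x + b = c*x + d` are assumptions introduced here.

```python
from fractions import Fraction


def solve_linear(a, b, c, d):
    """Solve a*x + b = c*x + d by doing the same thing to both sides.

    Hypothetical helper for illustration only. Returns (solution, steps),
    where steps lists the equation after each balancing move.
    """
    a, b, c, d = (Fraction(v) for v in (a, b, c, d))
    steps = [f"{a}x + {b} = {c}x + {d}"]

    # Subtract c*x from both sides so the variable appears on the left only.
    a, c = a - c, Fraction(0)
    steps.append(f"{a}x + {b} = {d}")

    # Subtract the constant b from both sides.
    d, b = d - b, Fraction(0)
    steps.append(f"{a}x = {d}")

    if a == 0:
        raise ValueError("no unique solution: the variable cancels out")

    # Divide both sides by the coefficient of x.
    x = d / a
    steps.append(f"x = {x}")
    return x, steps


# 2x + 1 = 9, one of the equations listed in the activity.
x, steps = solve_linear(2, 1, 0, 9)
print("\n".join(steps))
```

Equations with the variable on both sides fit the same shape: `2x = x − 5` is `solve_linear(2, 0, 1, -5)`, and `3(x + 1) = 9` becomes `solve_linear(3, 3, 0, 9)` once the parentheses are distributed by hand.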

Problems in Abstract Algebra

STUDENT MATHEMATICAL LIBRARY Volume 82 Problems in Abstract Algebra A. R. Wadsworth 10.1090/stml/082 STUDENT MATHEMATICAL LIBRARY Volume 82 Problems in Abstract Algebra A. R. Wadsworth American Mathematical Society Providence, Rhode Island Editorial Board Satyan L. Devadoss John Stillwell (Chair) Erica Flapan Serge Tabachnikov 2010 Mathematics Subject Classification. Primary 00A07, 12-01, 13-01, 15-01, 20-01. For additional information and updates on this book, visit www.ams.org/bookpages/stml-82 Library of Congress Cataloging-in-Publication Data Names: Wadsworth, Adrian R., 1947– Title: Problems in abstract algebra / A. R. Wadsworth. Description: Providence, Rhode Island: American Mathematical Society, [2017] | Series: Student mathematical library; volume 82 | Includes bibliographical references and index. Identifiers: LCCN 2016057500 | ISBN 9781470435837 (alk. paper) Subjects: LCSH: Algebra, Abstract – Textbooks. | AMS: General – General and miscellaneous specific topics – Problem books. msc | Field theory and polynomials – Instructional exposition (textbooks, tutorial papers, etc.). msc | Commutative algebra – Instructional exposition (textbooks, tutorial papers, etc.). msc | Linear and multilinear algebra; matrix theory – Instructional exposition (textbooks, tutorial papers, etc.). msc | Group theory and generalizations – Instructional exposition (textbooks, tutorial papers, etc.). msc Classification: LCC QA162 .W33 2017 | DDC 512/.02–dc23 LC record available at https://lccn.loc.gov/2016057500 Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy select pages for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given. 
Republication, systematic copying, or multiple reproduction of any material in this publication is permitted only under license from the American Mathematical Society. -

From Arithmetic to Algebra

From arithmetic to algebra Slightly edited version of a presentation at the University of Oregon, Eugene, OR February 20, 2009 H. Wu Why can’t our students achieve introductory algebra? This presentation specifically addresses only introductory algebra, which refers roughly to what is called Algebra I in the usual curriculum. Its main focus is on all students’ access to the truly basic part of algebra that an average citizen needs in the high-tech age. The content of the traditional Algebra II course is on the whole more technical and is designed for future STEM students. In place of Algebra II, future non-STEM would benefit more from a mathematics-culture course devoted, for example, to an understanding of probability and data, recently solved famous problems in mathematics, and history of mathematics. At least three reasons for students’ failure: (A) Arithmetic is about computation of specific numbers. Algebra is about what is true in general for all numbers, all whole numbers, all integers, etc. Going from the specific to the general is a giant conceptual leap. Students are not prepared by our curriculum for this leap. (B) They don’t get the foundational skills needed for algebra. (C) They are taught incorrect mathematics in algebra classes. Garbage in, garbage out. These are not independent statements. They are inter-related. Consider (A) and (B): The K–3 school math curriculum is mainly exploratory, and will be ignored in this presentation for simplicity. Grades 5–7 directly prepare students for algebra. Will focus on these grades. Here, abstract mathematics appears in the form of fractions, geometry, and especially negative fractions. -

DiffMan: An Object-Oriented MATLAB Toolbox for Solving Differential Equations on Manifolds

Applied Numerical Mathematics 39 (2001) 323–347 www.elsevier.com/locate/apnum DiffMan: An object-oriented MATLAB toolbox for solving differential equations on manifolds Kenth Engø a,∗,1, Arne Marthinsen b,2, Hans Z. Munthe-Kaas a,3 a Department of Informatics, University of Bergen, N-5020 Bergen, Norway b Department of Mathematical Sciences, NTNU, N-7491 Trondheim, Norway Abstract We describe an object-oriented MATLAB toolbox for solving differential equations on manifolds. The software reflects recent development within the area of geometric integration. Through the use of elements from differential geometry, in particular Lie groups and homogeneous spaces, coordinate free formulations of numerical integrators are developed. The strict mathematical definitions and results are well suited for implementation in an object-oriented language, and, due to its simplicity, the authors have chosen MATLAB as the working environment. The basic ideas of DiffMan are presented, along with particular examples that illustrate the working of and the theory behind the software package. 2001 IMACS. Published by Elsevier Science B.V. All rights reserved. Keywords: Geometric integration; Numerical integration of ordinary differential equations on manifolds; Numerical analysis; Lie groups; Lie algebras; Homogeneous spaces; Object-oriented programming; MATLAB; Free Lie algebras 1. Introduction DiffMan is an object-oriented MATLAB [24] toolbox designed to solve differential equations evolving on manifolds. The current version of the toolbox addresses primarily the solution of ordinary differential equations. The solution techniques implemented fall into the category of geometric integrators— a very active area of research during the last few years. The essence of geometric integration is to construct numerical methods that respect underlying constraints, for instance, the configuration space * Corresponding author. -

Summer Review Packet for Students Entering AP Calculus-AB

Summer Review Packet For Students Entering AP Calculus-AB Complete all work in the packet and have it ready to be turned in to your Calculus teacher on the first day of classes on Monday August 16, 2021. SHOW ALL WORK! An answer alone without work may result in no credit given. A CALCULATOR is NOT to be used while working on this packet. The problems in this packet are designed to help you review topics that are important to your success in Calculus. The problems must be done correctly, not just tried. Use your notes from previous math courses to help you. You may also visit the following websites to help: https://www.khanacademy.org/ http://www.coolmath.com/precalculus-review-calculus-intro http://mathforum.org/library/drmath/drmath.high.html We will review these topics for the first few days of school. There will be an examination covering these topics on Friday August 20, 2021. For those that miss any of the review days, you will still be obliged to take the exam on the fifth day because these topics are review from previous classes. Calculus is relatively easy, it’s the algebra that is hard. Most of the time, you’ll understand the calculus concept being taught, but will struggle to get the correct answer because of your background skills. Please be diligent and figure out what you need help with so that we can clarify for you. A little extra work this summer will go a long way to help you succeed in AP Calculus. Print out a copy of the packet from Sonora High School website under Students & Parents tab then Summer Assignments, then choose AP Calculus. -

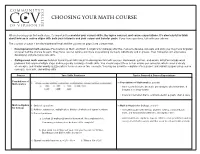

Choosing Your Math Course

CHOOSING YOUR MATH COURSE When choosing your first math class, it’s important to consider your current skills, the topics covered, and course expectations. It’s also helpful to think about how each course aligns with both your interests and your career and transfer goals. If you have questions, talk with your advisor. The courses on page 1 are developmental-level and the courses on page 2 are college-level. • Developmental math courses (Foundations of Math and Math & Algebra for College) offer the chance to develop concepts and skills you may have forgotten or never had the chance to learn. They focus less on lecture and more on practicing concepts individually and in groups. Your homework will emphasize developing and practicing new skills. • College-level math courses build on foundational skills taught in developmental math courses. Homework, quizzes, and exams will often include word problems that require multiple steps and incorporate a variety of math skills. You should expect three to four exams per semester, which cover a variety of concepts, and shorter weekly quizzes which focus on one or two concepts. You may be asked to complete a final project and submit a paper using course concepts, research, and writing skills. Course Your Skills Readiness Topics Covered & Course Expectations Foundations of 1. All multiplication facts (through tens, preferably twelves) should be memorized. In Foundations of Mathematics, you will: Mathematics • learn to use fractions, decimals, percentages, whole numbers, & 2. Whole number addition, subtraction, multiplication, division (without a calculator): integers to solve problems • interpret information that is communicated in a graph, chart & table Math & Algebra 1.