Interactional Morality

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

Recorded Jazz in the 20Th Century

Recorded Jazz in the 20th Century: A (Haphazard and Woefully Incomplete) Consumer Guide by Tom Hull Copyright © 2016 Tom Hull - 2 Table of Contents Introduction................................................................................................................................................1 Individuals..................................................................................................................................................2 Groups....................................................................................................................................................121 Introduction - 1 Introduction write something here Work and Release Notes write some more here Acknowledgments Some of this is already written above: Robert Christgau, Chuck Eddy, Rob Harvilla, Michael Tatum. Add a blanket thanks to all of the many publicists and musicians who sent me CDs. End with Laura Tillem, of course. Individuals - 2 Individuals Ahmed Abdul-Malik Ahmed Abdul-Malik: Jazz Sahara (1958, OJC) Originally Sam Gill, an American but with roots in Sudan, he played bass with Monk but mostly plays oud on this date. Middle-eastern rhythm and tone, topped with the irrepressible Johnny Griffin on tenor sax. An interesting piece of hybrid music. [+] John Abercrombie John Abercrombie: Animato (1989, ECM -90) Mild mannered guitar record, with Vince Mendoza writing most of the pieces and playing synthesizer, while Jon Christensen adds some percussion. [+] John Abercrombie/Jarek Smietana: Speak Easy (1999, PAO) Smietana -

Jazz Collection: Reprise; Arthur Blythe

Jazz Collection: Reprise; Arthur Blythe Samstag, 06. Juni, 17.06 – 18.30 Uhr (Erstausstrahlung vom 30.6.2015) Der Saxophonist Arthur Blythe Der Altsaxophonist Arthur Blythe ging Ende der 1970er-Jahre wie ein Stern auf und versank zwanzig Jahre später wieder in der Versenkung. Was machte aus ihm einen Star und weshalb konnte er sich nicht halten? Fragen, die in der Jazz Collection zu klären sind. Arthur Blythe zeichnet sein sein scharfer und durchdringender Sound aus und ein riesiges Vibrato, wie es die grossen Swingsaxophonisten hatten. Nach Anfängen in der lokalen Band in Kalifornien und eigenen Aufnahmen auf obskuren Labels kam Blythe zu Columbia und konnte dort eine Reihe von hervorragend produzierten und eigenwilligen Alben veröffentlichen. Eine Zeit lang noch tourte er danach mit Allstar- Formationen, doch nach der Jahrtausendwende verebbte seine Karriere. Das ist bedauerlich, Arthur Blythe lohnt eine vertiefte Auseinandersetzung. Die Saxophonistin Nicole Johaenntgen hat es gemacht. Redaktion: Beat Blaser Moderation: Andreas Müller-Crepon Horace Tapscott: The Giant is Awakened (1969) Label: Flying Dutchman Youtube Track A2: For Fats Arthur Blythe: Bush Baby (1977) Label: Adelphi, 5008 Track A2: For Fats Arthur Blythe: Lenox Avenue Breakdown (1979) Label: CBS Track A2: Lenox Avenue Breakdown Arthur Blythe: Light Blue (1983) Label: CBS Track A1: We See The Leaders: Unforeseen Blessings (1988) Label: Black Saint, 120 129-2 Track 02: Hip Dripper Arthur Blythe: Hipmotism (1991) Label: Enja, 120 129-2 Track 06: Miss Eugie Arthur Blythe: -

Hypertext Located Within ENTRIES Multiple Discourses, Is an Intertextual Script, a Rendition, of My Internship (1998-1999) and Research Experiences (1998-2001)

SITEMAP This is the Beta-version for XML with similar formating. Non-functional in PDF The dissertation web, a hypertext located within ENTRIES multiple discourses, is an intertextual script, a rendition, of my internship (1998-1999) and research experiences (1998-2001). EXITS ENTRIES EXITS • SITEMAP • THEORETICAL NARRATIVES • RESEARCH NARRATIVES • INTERNSHIP NARRATIVES • Transforming Performances: An Intern-Researcher's Hypertextual Journey in a Postmodern Community © Saliha Bava 2001-Doctoral Dissertation About Saliha Bava Marriage & Family Therapy Program at Virginia Tech Blacksburg, VA Email Site Uploaded On: January 09, 2002 IHMC Concept Map Software Go To Graphic Map Index Go To Graphic Map Legend SITEMAP This is the Beta-version for XML with similar formating. Non-functional in PDF The dissertation web, a hypertext located within GRAPHIC MAP INDEX multiple discourses, is an intertextual script, a rendition, of my internship (1998-1999) and research Locating the various textual spaces within the dissertation web is experiences (1998-2001). assisted by the maps that appear at the top of certain sections. Various sections may share the same map. If you are viewing the dissertation web in PDF format the interactive maps are non- functional. They can be accessed in html or xml formats only. NAME OF GRAPHIC MAP: MAP DESCRIPTION (Click Map for Larger View) OF MAP Dissertation Web: Spaces Providing an Overview of the Dissertation Web Hypertext Map: Spaces Locating Hypertext Interlinks Internship Map: Spaces Locating Internship Narratives -

Souvenir of Benny

July 2011 | No. 111 Your FREE Guide to the NYC Jazz Scene nycjazzrecord.com Benny Carter Souvenir of Benny Freddy Cole • Zeena Parkins • psi Records • Event Calendar Is it getting hot around here? The usual East Coast jump from chilly post-winter to scorching full summer (otherwise known as spring - guess it really can hang New York@Night you up the most) has occurred, buoyed by last month’s slew of festivals. A nice 4 perk to summer in the city is how jazz gets to come out of the basements and dark rooms and get some sun, not to mention larger-than-usual crowds. There are Interview: Freddy Cole shows in various parks and the city’s open spaces this month; check our Event 6 by Andrew Vélez Calendar for places to tan while soaking up some jazz. But jazz is still an indoor, night-time music. Our Coverage this month will Artist Feature: Zeena Parkins take you all around the city. The late Benny Carter (On The Cover) was a legendary 7 by Kurt Gottschalk musician and composer and will be fêted at the annual Jazz in July celebration at 92nd Street Y. We have canvassed a number of his colleagues for remembrances of On The Cover: Benny Carter this giant. Vocalist/pianist Freddy Cole (Interview) is long out of the shadow of 9 by Alex Henderson his brother, releasing albums regularly and appearing to great acclaim all over the world, including Jazz Standard this month. Zeena Parkins (Artist Profile) is part of Encore: Lest We Forget: a ‘long’ line of harp improvisers and works in all number of creative environments; 10 Juini Booth John Jenkins this month she is at Blue Note and The Stone with different groups. -

MARS LANDING Broadcasting from Space

EXCLUSIVES beyerdynamic MCD100 RSP Circle Surround Sonodore RCM -402 Grace Design 201 Yamaha 02R v2 GPS SoundPals Vac Raac TEQ -1 SA &V Octavia Joemeek SC3 Symetrix 628 Orban 9200 Jünger e07 MARS LANDING broadcasting from space TAPELESS LOCATION RECORDERS PAFI INTERCHANGE ARRIVES The POSTING CONTACT NARADA EQ: THE GUIDE MICHAEL WALDEN Interview 0 9 770144 59 ii i www.americanradiohistory.com Coi 131 While other companies wrestle with the digital learning curve, AMS Neve provides sixteen years of experience as LOGIC 2 Digital Mixing Console the leading designer of digital mixing and editing solutions. With over 300 digital consoles LOGIC DFC in daily service around the Digital Film : onsole world, no other company provides a greater range of advanced digital audio post -production systems. ., . 1 b we ` .. +I,1,Ì',i .1.I 01.1 AUDIOFILE Hard Disk Editor HEAD OFFICE AMS Neve plc Billington Road Burnley Lancs BB11 SUB England AMS Tel: +44 (0) 1282 457011 Fax: +44 (0) 1282 417282 LONDON - Tel: 0171 916 282E Fax: 0171 916 2827 GERMANY - Tel: 61 31 9 42 520 Fax: 61 31 9 42 5210 ' NEW YORK - Tel: (212) 965 1400 Fax: (212) 965 3739 HOLLYWOOD Tel: (818) 753 8789 Fax: (818) 623 4839 TORONTO Tel: (416) 365 3363 Fax: (416) 365 1044 NEVE e -mail: enquiry®ams- neve.com - http: / /www.ams- neve.com www.americanradiohistory.com 6 Editorial 85 Event horizons: Studio Sound on Broadcast: the Web and pro-audio options Mars landing 8 Putting the Red Planet on the map -and the television Soundings Intcrnational nc\\ :md contracts 95 10 Facility: World Events Vonk Sound ABOVE: Jodle Foster is making Contact. -

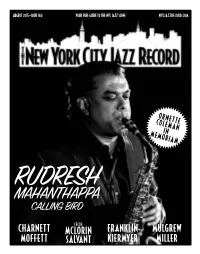

Rudresh Calling Bird

AUGUST 2015—ISSUE 160 YOUR FREE GUIDE TO THE NYC JAZZ SCENE NYCJAZZRECORD.COM ORNETTE COLEMAN MEMORIAMIN MAHANTHAPPARUDRESH CALLING BIRD CÉCILE CHARNETT MCLORIN FRANKLIN MULGREW MOFFETT SALVANT KIERMYER MILLER Managing Editor: Laurence Donohue-Greene Editorial Director & Production Manager: Andrey Henkin To Contact: The New York City Jazz Record 116 Pinehurst Avenue, Ste. J41 AUGUST 2015—ISSUE 160 New York, NY 10033 United States New York@Night 4 Laurence Donohue-Greene: [email protected] Interview : Charnett Moffett by alex henderson Andrey Henkin: 6 [email protected] General Inquiries: Artist Feature : Cecile McLorin Salvant 7 by russ musto [email protected] Advertising: On The Cover : Rudresh Mahanthappa 8 by terrell holmes [email protected] Editorial: [email protected] Encore : Franklin Kiermyer 10 by james pietaro Calendar: [email protected] Lest We Forget : Mulgrew Miller 10 by ken dryden VOXNews: [email protected] Letters to the Editor: LAbel Spotlight : Xanadu 11 by donald elfman [email protected] VOXNEWS 11 by katie bull US Subscription rates: 12 issues, $35 International Subscription rates: 12 issues, $45 For subscription assistance, send check, cash or money order to the address above In Memoriam 12 by andrey henkin or email [email protected] Festival Report Staff Writers 13 David R. Adler, Clifford Allen, Fred Bouchard, Stuart Broomer, In Memoriam : Ornette Coleman 14 Katie Bull, Thomas Conrad, Ken Dryden, Donald Elfman, Brad Farberman, Sean Fitzell, -

Index Bluesnews Nr. 1 Bis 105

Index bluesnews Nr. 1 bis 105 Name/Titel Thema/Titel Bemerkungen Heft Seite Rubrik „Unknowable Journey“ von Ginger Blues in der PdSK‐Bestenliste 4/2020 104 8 Intro 1. AndersWelt Guitar Night Stadthalle, Landstuhl, 05.11.2011 68 81 Live On Stage 1. Blues Now! Festival Basel (Schweiz), Volkshaus 79 84 Live On Stage 1. Bluesfest Detmold 1996 Stadthalle, Detmold, 12.10.1996 7 19 Live On Stage 1. Bluesfestival Dresden 2004 Tante Ju, Dresden, 23.‐24.04.2004 38 33 Live On Stage 1. Bowers & Wilkins R&B Festival Garry Weber Event Center, Halle, 01.11.2003 36 32 Live On Stage 1. Bowers & Wilkins Rhythm & Blues Festival Vorschau 33 13 Intro 1. European Blues Challenge Kulturbrauerei, Berlin, 18.‐19.03.2011 66 80‐81 Live On Stage 1. European Blues Challenge in Berlin 16 Formationen aus 16 europäischen Ländern werden erwartet 64 16 Intro 1. Freiburg Blues Festival 80 85 Live On Stage 1. Hildesheimer Bluesfestival Hildesheim, 21.‐22. 11.1998 16 23 Live On Stage 1. International Blues Event Reinstorf, 1996 7 19 Live On Stage 1. Kammgarn Bluesfestival Kulturzentrum Kammgarn, Kaiserlautern, 2001 27 32 Live On Stage 1. Lübecker Bluesfestival 1996 Lübeck, 07.09.1996 7 23 Live On Stage 1. Muddy Lives Blues Festival Festivalpremiere im brandenburgischen Lieberose 81 12 Intro 1. Ruhrpott Blues Festival Kulturzentrum Werkstatt, Witten, 10.10.1998 16 26 Live On Stage 1. Trossinger Blues‐Fabrik Open‐Air, Trossingen, 06.‐08.09.2002 32 32 Live On Stage 1. Vienna Blues Spring Wien, 02.04.‐03.05.2005 42 39 Live On Stage 10 J Hiss Live In Der Scala 46 71 Rezensionen 10 Jahre Blues & Boogie Night 91 16 Intro 10 Jahre bluesnews Ein "Prosit" auf 10 Jahre bluesnews 43 6 Intro 10 Jahre Jazzthing Rundes Jubiläum für die Kollegen von Jazzthing 36 9 Intro 10 Jahre Schmölzer Blues Tage Schmölzer Blues Tage 2002 unter dem Motto "Boppin' The Blues" 31 10 Intro 10. -

2020 a Year of Loss Here Are a Few People That Died This Year

THE INDEPENDENT JOURNAL OF CREATIVE IMPROVISED MUSIC 2020 A YEAR OF LOSS HERE ARE A FEW PEOPLE THAT DIED THIS YEAR ANDREW KOWALCZYK, producer, died on April 6, 2020. He was 63. ANDY GONZÁLEZ died on April 2020. He was 69. ANNIE ROSS, jazz singer and actor, died on July 21, 2020. She was 89. BILL WITHERS, singer - songwriter, died on March 30, 2020. He was 81. BUCKY PIZZARELLI, guitarist, died on April 1, 2020. He was 94. CAREI THOMAS, jazz pianist and composer- died on. She was 81. DANNY LAEKE, studio engineer died on April 27, 2020. He was 69. DONN TRENNER, died on May 16, 2020. He was 93. EDDY DAVIS banjo virtuoso died on April 7, 2020. He was 79. EDDIE GALE [tpt] died on July 10, 2020. He was 78. ELLIS MARSALIS died on April 1, 2020. He was 85. FREDDY COLE singer, pianist, died on June 27, 2020. He was 88. FREDERICK C TILLIS [ts/ss/composer] died on May 3, 2020. He was 90. GARY PEACOCK [bass] died on Sept. 4. 2020. He was 85. HAL SINGER [ts] died on August 18, 2020. He was 100. HAL WILLNER, legendary producer died on April 7, 2020. He was 64. HELEN JONES WOODS [tbn] died on July 25, 2020. She was 96. HENRY GRIMES, legendary bassist, died on April 17, 2020. He was 84. IRA SULLIVAN [tpt, fl] died Sept. 21,2020; He was 89. JEANIE LAMBE, legendary Glasgow jazz singer died on May 29, 2020. She was 79. JOHN MAXWELL BUCHER trumpet and cornet, died on April 5, 2020.