Timed Automata for Security of Real-Time Systems

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

So 2 2009__Ruffini Taglioni.Pdf

The strength of ideas, by Enrico Ruffini. The number 1,000 is a truly fine number, especially when it stands for 1,000 motorcycles, each different from the other. This incredible result is reached by adding up all the models of [...] faces that demanding course of study without fear, but soon interrupts it because of the war. His military service will be hard and troubled. Declared unfit, he escapes every obligation to the army of the Italian Social Republic and finds both his way home and the time to study and work. In Imola, for the 1944-45 school year, the Scuola Secondaria di Avviamento Professionale appoints him to teach Technology and Technical Drawing. Taglioni keeps this substitute post the following year as well, but leaves it in 1947, when he graduates in engineering with a thesis on a supercharged 250 cc four-cylinder V engine. Benelli seems to [...] with pushrods; the other, called Tornado, has a single-overhead-camshaft head driven by a cascade of gears and, in a specially designed chassis, can reach 140 km/h. A twin-cam version is also reported. From Ceccato to Mondial: historians do not always agree on the development of this little racer. It is almost certain, however, that it was presented to F.B Mondial, drawing little interest from Count Boselli together with a favourable recommendation to Ceccato. In this way the firm from Alte [...] Photo captions: the young teacher Taglioni during his military service, on the pillion seat of a Benelli 500 (Nello Baruzzi; Fabio Taglioni, personal archive). Centre, Professor Taglioni with students and colleagues of the Scuola Tecnica Industriale Alberghetti in Imola, 1950 (Archive of the Istituto "F. Alberghetti", Imola).

Back Matter (PDF)

THE JOURNAL OF IMMUNOLOGY. Manuscripts should be typewritten and carefully revised before submission and should be sent to Dr. Arthur F. Coca, Pearl River, New York. Authors will be charged for space in excess of 20 printed pages; also for all illustrations and for tabular matter in excess of 10 per cent of the text space. THE JOURNAL OF IMMUNOLOGY is issued monthly. Two volumes a year are issued. Twenty-five reprints, without covers, of articles will be furnished to contributors free of cost when ordered in advance. A table showing cost of reprints, with an order slip, is sent with proof. Orders for purchased reprints must be limited to 1,000 copies. Published monthly, beginning January, 1929. (From 1916-1925, and in 1928 Volumes I-X, inclusive, and Vol. XV published bi-monthly. Two volumes per year were published during 1926-1927.) The subscription price is $5.00 per volume. For countries outside the Postal Union, add 50 cents a volume. Two volumes ordered at the same time, $9.00; overseas, $10.00. The subscriptions of members of the American Association of Immunologists are included in the membership dues and are handled by the Association's Treasurer, Dr. Arthur F. Coca, Pearl River, New York. All other subscriptions should be sent to the publishers. Claims for copies lost in the mails must be received within 30 days, domestic (90 days, foreign) of the date of issue. Changes of address must be received at least two weeks in advance of issue. Correspondence concerning business matters should be addressed to The Williams & Wilkins Company, Publishers of Scientific Journals and Books, Mount Royal and Guilford Avenues, Baltimore, U.

MRD 2017 22 FINALE Reportage Ulster GP.Indd

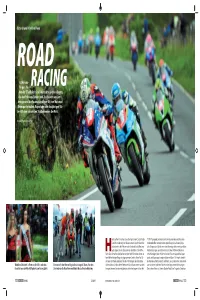

Ulster Grand Prix, Northern Ireland. Road racing is pure adrenaline. Besides the TT on the Isle of Man there are other events that push the pulse of riders and spectators to the limit and keep the fascination of this kind of motorcycle racing alive. A report from this year's Ulster GP, the fastest road race in the world. Text and photos by Ralf Falbe. "Joey Dunlop used to train out here," says the taxi driver, pointing at the lonely country roads passing by. We are driving to Dundrod near Belfast, where the Ulster Grand Prix is again being held in late summer this year. The fastest road race in the world runs over a seven-mile ring of closed country roads. For spectators it is a tremendous spectacle, for the paddock the ultimate adrenaline kick. Even the most experienced road racers tremble at the speeds ridden here, which are higher than at the TT. Blasting past house walls and trees at top speed with only centimetres to spare demands a special mental and physical disposition. On the very first race day we witness top sporting performances and the worst of dramas: while British veteran Peter Hickman wins in the Superbike, Superstock and Supersport classes and sets the fastest lap average at over 134 mph, his compatriot Jamie Hodson collides with another rider and succumbs to his injuries. The other rider is Jamie's brother Rob. A tragedy. [...] Photo captions: Female beauty, in the form of grid girls or the candidates for Miss UGP, is part of the racing spirit. The team's performance is essential to success in the race; before the start the machines still have to pass technical inspection. FINALE 22/2017, www.motorradonline.de. Road racing in Ireland: motorcycle road races have a long tradition in Northern Ireland.

Riders Briefs August 2020

The official magazine of Auckland Motorcycle Club, Inc. AUGUST 2020. In this issue: Dick Smart, Part 3; AMCC AGM & Prize-Giving (Part 1); Mount Wellington Update; AMCC Club Series News; Battle Of The Clubs News; and lots more. 1110 Great South Road, PO Box 22362, Otahuhu, Auckland. Ph: 276 0880. EXECUTIVE COMMITTEE 2020 - 2021 (name, email, phone). Patron: Jim Campbell. President: Greg Percival, [email protected], 021 160 3960. Vice President: Adam Mitchell, [email protected], 021 128 4108. Secretary: TBA, [email protected], TBA. Treasurer: Paul Garrett, [email protected], 021 821 138. Membership: MXTiming and John Catton, [email protected]. Club Captain: Adam Mitchell, [email protected], 021 128 4108. Road Race Committee: John Catton, [email protected]; Adam Mitchell, 021 128 4108; Mark Wigley, 027 250 3237; Paul Garrett, 021 821 138; Tim Sibley; Jim Manoah, 021 536 792; Neal Martin, 021 823 508. Road Race Secretary: MX Timing, Nicole Bol, [email protected], 027 201 1177. General Committee: Glenn Mettam, [email protected], 021 902 849; Trevor Heaphy, 022 647 7899; Philip Kavermann, 021 264 8021; Alistair Wilton, 027 457 4254; Juniper White, 021 040 3819. Miniature Road Race: David Diprose, [email protected], 021 275 0003. Chief Flag Marshal: Juniper White, [email protected], 021 040 3819. NZIGP Rep: Trevor Heaphy, [email protected], 022 647 7899. Magazine Editor & Media: Philip Kavermann, [email protected], 021 264 8021. MNZ Rep: Glenn Mettam, [email protected], 021 902 849. Website: Johannes Rol, [email protected], 021 544 514. Cover image: 2020 AMCC AGM & Prize-Giving. PRESIDENT'S REPORT, AUGUST 2020. Hi Club Members & Supporters, We had a good turnout on July 4th for the AGM & Prize-Giving, where we saw a few changes in the elected positions.

SJ 144 Grudzien 2017

No. 12/2017 (144). Price: PLN 11.90 (incl. 5% VAT). www.szkola-jazdy.pl. Page 4, False start: even a dozen or so days after the launch of the Central Vehicle Register module in CEPiK 2.0, the system was not working as it should. This is confirmed by, among others, officials working in vehicle registration departments. Nor were all vehicle inspection stations connected to the system. Page 8, One-off theory?: drivers who hold a licence in, say, category B and are applying for entitlement to drive trucks or buses would only have to take the practical exam. MP Paweł Szramka has come forward with this initiative. "And what about specialist knowledge?" ask experts from the training industry. Starter: Let's learn from mistakes, by Krzysztof Giżycki. The launch of the Central Vehicle Register module within CEPiK 2.0 can hardly be called a success for the Ministry of Digital Affairs. The reason is simple: it does not work flawlessly, at least not as of this issue of "Szkoła Jazdy" going to press. Some vehicle inspection stations carrying out periodic inspections had problems entering information into CEPiK [...] module, namely the Central Driver Register, since it bears directly on how driver training centres operate. It is in CEPiK 2.0, after all, that driving schools will have to, for example, update the learner driver's profile. Let us hope the Ministry of Digital Affairs can draw conclusions from the system's November false start and that on 4 June everything will work as it should. And while we are making wishes (Christmas is approaching, after all), it would be good to think that the Ministry of Infrastructure and Construction can also learn from its mistakes. Perhaps it is finally time to present an official, thoroughly thought-out proposal for changes to the training and examination system [...] Changing of the guard: since 20 November, the Provincial Road Traffic Centre in Piotrków Trybunalski has been conducting category B practical exams using new cars, namely Citroën C3s.

Special Edition Motonews Express GUZZI

The Ontario Guzzi Riders, N°8, Special Edition 2018 (2017 News Express N°8). Special Edition MOTONews Express GUZZI. The Midual Type 1: French Luxury in Motorcycle. Not too many products can excite my senses the way the Midual Type 1 did. Once in a while a product rises to a level of perfection never achieved before. Today I am bringing you to the world of the Midual Type 1. This French motorcycle is not for the regular Joe. This bike is a jewel and, like all masterpieces, the price tag is there too. Think of it as the Bugatti of motorcycles. After Dior, Cartier, Chanel, Dom Pérignon, Bugatti and Grey Poupon, France is also sharing another unique creation, one that will put you above all if you can afford it… In the following pages, you will discover the creation, the vision of a man, the knowhow of the workers, the excellence of the craftsmanship and the history behind the name. Charles Jacob (an executive from Midual) has been kind enough to share his thoughts with us on the Type 1. His words will unveil a passion for the story of the bike and the people behind it. From the conception to the production, with him you will discover that excellence and savoir faire still exist in today's world. ONTARIO GUZZI RIDERS: www.ontarioguzziriders.com, https://groups.yahoo.com/neo/groups/ontarioguzzi/info. President: Phil Tunbridge, 705-722-3312, [email protected]. Editor: Pat Castel, 613-878-9600, [email protected]. Newsletter & advertising office: 2743 Massicotte Lane, Ottawa, On K1T 3G9, Canada, [email protected]. COVER PAGE

Ducati Museum

Ducati Museum. Past and future, challenges and successes, vision and determination: the Ducati Museum is a journey through the legendary 90-year history of the Company, renowned across the world for its style, performance and the search for perfection. Each product is a work of art. A story told using language composed of shapes and colours, emphasised by delicate, ethereal staging. An intense adventure, in which every bike exhibited becomes a true work of art to be experienced. The new Ducati Museum is characterised by its very modern concept, in which the colour white dominates so as to ensure that the product takes centre stage, with no distractions. Each bike exhibited is a work of art, a story told using a language composed of shapes and colours and emphasised by dedicated installations. In addition, the new Ducati Museum exudes the Ducati brand identity of Style, Sophistication and Performance. With its new look, the Ducati Museum is a journey through legendary Ducati history, to the heart of a company in which every product is conceived, designed and created to provide unique emotions. The new Ducati Museum has an entire area dedicated to some of the most iconic road bikes that have contributed to making the company’s history so great. And it is here that the story of the so-called “production bike” is recounted in its entirety, or rather, the conception and development of the project in very particular technological, economic and social contexts. This leads to the Museum’s new narrative, developed according to three paths: the history of Ducati production bikes and the social and cultural context in which they are conceived; the racing history with exhibits including race bikes and winners’ trophies, and finally the ‘Ducati heroes’, or rather the Ducati riders themselves.

Honda 400 Four: Once You Passed Your Motorcycle Test in the Mid-1970s You Probably Bought One of These

RIDE2LIVE || MARQUE HISTORY. Honda 400 Four. Once you passed your motorcycle test in the mid-1970s you probably bought one of these. If not, this is what all the fuss was about. WORDS: GREG PULLEN. PHOTOS: JOE DICK. MOTORCYCLE: GLYNIS ROBERTS. CLASSIC BIKE GUIDE, APRIL 2013. “This was genesis, the point where the Japanese proved they could build a European-standard sportbike.” To a certain age group, perhaps most tellingly the sports moped generation, Honda’s 400 Four was the finest sporting motorcycle you could buy. Well, unless you had won the pools. Otherwise you might look on the CB400F as a cramped little buzz-bomb, which is what most Americans thought. For while the British lapped up imaginary race tracks on the compact Four, US buyers ignored the little Honda, even when it was updated with incongruously high bars and more forward-set footrests. Testing the Japanese 400s, one American magazine [...] The new breed of riders wanted an agile, sporting motorcycle that would look as at-home in the pits of Imola or Daytona as outside the chip shop. In 1974 this meant a four-cylinder, four-stroke with six gears, a four-into-one exhaust, humped seat and low handlebars. At Earl’s Court that year Honda showed a wowed crowd just that. The scales might have said the 400F was heavy, but sitting on one proved that scales aren’t as clever as people think, and compared to the lardy CB750F1 and the almost invisible CB550F (even when painted orange), Honda somehow hit the nail right on the head with the 400.

2017 Manual of Motorcycle Sport

MOTORCYCLING AUSTRALIA 2017 Manual of Motorcycle Sport. Published annually since 1928 by Motorcycling Australia, ABN 83 057 830 083, PO Box 134, South Melbourne 3205, Victoria, Australia. Tel: 03 9684 0500. Fax: 03 9684 0555. Email: [email protected]. Website: www.ma.org.au. Motorcycling Australia is the Australian affiliate of the Fédération Internationale de Motocyclisme. This publication is available electronically from: www.fim.ch www.ma.org.au. ISSN 1833-2609. 2017. All material in this book is the copyright of Motorcycling Australia Ltd (MA) and may not be reproduced without prior written permission from the CEO. INTRODUCTION TO THE 2017 EDITION. Welcome to Motorcycling Australia’s 2017 Manual of Motorcycle Sport (MoMS), a publication designed to assist you in your riding or officiating throughout the upcoming calendar year. The Manual of Motorcycle Sport: The MoMS is the motorcycle racing ‘Bible’ and the development and provision of the rules and information within this resource is one of the key functions of Motorcycling Australia (MA). While the information is correct at the time of printing, things can and do change throughout the year. For this reason we urge you to keep an eye on the MA website, where bulletins and updated versions are posted as necessary (www.ma.org.au). The PDF version of the manual is optimised for use on all your devices for access to the MoMS anywhere, anytime. You have the option to utilise the manual offline, with content available chapter by chapter to download, save and print as required.

TT World Series Feasibility Study

The Government of the Isle of Man, Department for Economic Development. TT World Series Feasibility Study, May 2012. DOCUMENT CONTROL. Amendment History: Version No. 1; Date 01-05-12; File Reference IoMTTWorldSeries-Draft; Author SCM; Remarks/Changes: Draft Report. Sign-off List: Angus Buchanan, Director, 01-05-12; Remarks: Signature. Distribution List: Colin Kniverton, Isle of Man Department for Economic Development, 01-05-12; Paul Phillips, Isle of Man Department for Economic Development, 01-05-12. CONTENTS: Executive Summary. 1 Introduction & Background: 1.1 Our Brief; 1.2 Background to the Isle of Man TT Race; 1.3 Project structure. 2 The Isle of Man TT and the Proposed World Series: 2.1 Introduction; 2.2 The Essence of the TT and the opportunity; 2.3 Distance of courses; 2.4 Number