Microsoft Academic: A Multidisciplinary Comparison of Citation Counts with Scopus and Mendeley for 29 Journals

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Final Version

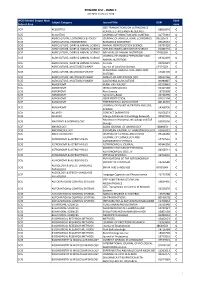

REWARD LIST ‐ RANK C, JCR 2018 ‐ CiteScore 2018

| WOS Edition / Scopus Main Subject Area code | Subject Category | Journal Title | ISSN | Rank |
| --- | --- | --- | --- | --- |
| SCIE | ACOUSTICS | IEEE TRANSACTIONS ON ULTRASONICS FERROELECTRICS AND FREQUENCY | 08853010 | C |
| SCIE | ACOUSTICS | JOURNAL OF VIBRATION AND CONTROL | 10775463 | C |
| SCIE | AGRICULTURAL ECONOMICS & POLICY | JOURNAL OF AGRICULTURAL ECONOMICS | 0021857X | C |
| SCIE | AGRICULTURAL ENGINEERING | BIOMASS & BIOENERGY | 09619534 | C |
| SCIE | AGRICULTURE, DAIRY & ANIMAL SCIENCE | ANIMAL REPRODUCTION SCIENCE | 03784320 | C |
| SCIE | AGRICULTURE, DAIRY & ANIMAL SCIENCE | APPLIED ANIMAL BEHAVIOUR SCIENCE | 01681591 | C |
| SCIE | AGRICULTURE, DAIRY & ANIMAL SCIENCE | ARCHIVES OF ANIMAL NUTRITION | 1745039X | C |
| SCIE | AGRICULTURE, DAIRY & ANIMAL SCIENCE | JOURNAL OF ANIMAL PHYSIOLOGY AND ANIMAL NUTRITION | 09312439 | C |
| SCIE | AGRICULTURE, DAIRY & ANIMAL SCIENCE | Animals | 20762615 | C |
| SCIE | AGRICULTURE, MULTIDISCIPLINARY | Journal of Land Use Science | 1747423X | C |
| SCIE | AGRICULTURE, MULTIDISCIPLINARY | RENEWABLE AGRICULTURE AND FOOD SYSTEMS | 17421705 | C |
| SCIE | AGRICULTURE, MULTIDISCIPLINARY | ANNALS OF APPLIED BIOLOGY | 00034746 | C |
| SCIE | AGRICULTURE, MULTIDISCIPLINARY | CALIFORNIA AGRICULTURE | 00080845 | C |
| SCIE | AGRONOMY | PLANT PATHOLOGY | 00320862 | C |
| SCIE | AGRONOMY | IRRIGATION SCIENCE | 03427188 | C |
| SCIE | AGRONOMY | Rice Science | 16726308 | C |
| SCIE | AGRONOMY | Agronomy‐Basel | 20734395 | C |
| SCIE | AGRONOMY | CROP PROTECTION | 02612194 | C |
| SCIE | AGRONOMY | EXPERIMENTAL AGRICULTURE | 00144797 | C |
| SCIE | AGRONOMY | JOURNAL OF PLANT NUTRITION AND SOIL SCIENCE | 14368730 | C |
| SCIE | ALLERGY | CONTACT DERMATITIS | 01051873 | C |
| SCIE | ALLERGY | Allergy Asthma & Immunology Research | 20927355 | C |

Advances -

Tipping Points: Cancelling Journals When arXiv Access Is Good Enough

Tipping points: cancelling journals when arXiv access is good enough. Tony Aponte, Sciences Collection Coordinator, UCLA Library. ASEE ELD Lightning Talk, June 17, 2019.

Preprint explosion! Brian Resnick and Julia Belluz. (2019). The war to free science. Vox. https://www.vox.com/the-highlight/2019/6/3/18271538/open-access-elsevier-california-sci-hub-academic-paywalls

Preprint explosion! arXiv. (2019). arXiv submission rate statistics. https://arxiv.org/help/stats/2018_by_area/index

2018 Case Study: two physics journals and arXiv

● UCLA: heavy users of arXiv. Not so heavy users of version of record
● Decent UC authorship
● No UC editorial board members

| Journal | 2017 Usage | Annual cost | Cost per use | 2017 Impact Factor |
| --- | --- | --- | --- | --- |
| Journal A | 103 | $8,315 | ~$80 | 1.291 |
| Journal B | 72 | $6,344 | ~$88 | 0.769 |

Just how many of these articles are OA? OAISSN.py: enter a journal ISSN and a year, and this Python program will tell you how many DOIs from that year have an open access version. Ryan Regier. (2018). OAISSN.py. https://github.com/ryregier/OAcounts

| Journal | % OA articles from 2017 | % OA articles from 2018 |
| --- | --- | --- |
| Journal A | 68% | 64% |
| Journal B | 11% | 8% |

arXiv e-prints are becoming closer to publisher versions of record, according to a UCLA similarity study of arXiv articles vs versions of record. Martin Klein, Peter Broadwell, Sharon E. Farb, Todd Grappone. 2018. Comparing Published Scientific Journal Articles to Their Pre-Print Versions -- Extended Version. -

Microsoft Academic Search and Google Scholar Citations

Microsoft Academic Search and Google Scholar Citations: A comparative analysis of author profiles

José Luis Ortega, VICYT-CSIC, Serrano, 113 28006 Madrid, Spain, [email protected]
Isidro F. Aguillo, Cybermetrics Lab, CCHS-CSIC, Albasanz, 26-28 28037 Madrid, Spain, [email protected]

Abstract: This paper aims to make a comparative analysis between the personal profiling capabilities of the two most important free citation-based academic search engines, namely Microsoft Academic Search (MAS) and Google Scholar Citations (GSC). Author profiles can be very useful for evaluation purposes once the advantages and the shortcomings of these services are described and taken into consideration. A total of 771 personal profiles appearing in both the MAS and the GSC databases are analysed. Results show that the GSC profiles include more documents and citations than those in MAS, but with a strong bias towards the Information and Computing sciences, while the MAS profiles are disciplinarily better balanced. MAS shows technical problems such as a higher number of duplicated profiles and a lower updating rate than GSC. It is concluded that both services could be used for evaluation purposes only if they are applied along with other citation indexes as a way to supplement that information.

Keywords: Microsoft Academic Search; Google Scholar Citations; Web bibliometrics; Academic profiles; Research evaluation; Search engines

Introduction: In November 2009, Microsoft Research Asia started a new web search service specialized in scientific information. Even though Google (Google Scholar) had already introduced an academic search engine in 2004, the proposal of Microsoft Academic Search (MAS) went beyond a mere document retrieval service that counts citations. -

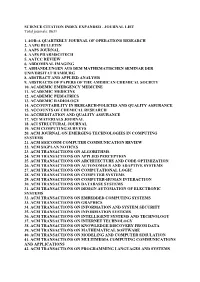

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total Journals: 8631

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST
Total journals: 8631

1. 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH
2. AAPG BULLETIN
3. AAPS JOURNAL
4. AAPS PHARMSCITECH
5. AATCC REVIEW
6. ABDOMINAL IMAGING
7. ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG
8. ABSTRACT AND APPLIED ANALYSIS
9. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY
10. ACADEMIC EMERGENCY MEDICINE
11. ACADEMIC MEDICINE
12. ACADEMIC PEDIATRICS
13. ACADEMIC RADIOLOGY
14. ACCOUNTABILITY IN RESEARCH-POLICIES AND QUALITY ASSURANCE
15. ACCOUNTS OF CHEMICAL RESEARCH
16. ACCREDITATION AND QUALITY ASSURANCE
17. ACI MATERIALS JOURNAL
18. ACI STRUCTURAL JOURNAL
19. ACM COMPUTING SURVEYS
20. ACM JOURNAL ON EMERGING TECHNOLOGIES IN COMPUTING SYSTEMS
21. ACM SIGCOMM COMPUTER COMMUNICATION REVIEW
22. ACM SIGPLAN NOTICES
23. ACM TRANSACTIONS ON ALGORITHMS
24. ACM TRANSACTIONS ON APPLIED PERCEPTION
25. ACM TRANSACTIONS ON ARCHITECTURE AND CODE OPTIMIZATION
26. ACM TRANSACTIONS ON AUTONOMOUS AND ADAPTIVE SYSTEMS
27. ACM TRANSACTIONS ON COMPUTATIONAL LOGIC
28. ACM TRANSACTIONS ON COMPUTER SYSTEMS
29. ACM TRANSACTIONS ON COMPUTER-HUMAN INTERACTION
30. ACM TRANSACTIONS ON DATABASE SYSTEMS
31. ACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMS
32. ACM TRANSACTIONS ON EMBEDDED COMPUTING SYSTEMS
33. ACM TRANSACTIONS ON GRAPHICS
34. ACM TRANSACTIONS ON INFORMATION AND SYSTEM SECURITY
35. ACM TRANSACTIONS ON INFORMATION SYSTEMS
36. ACM TRANSACTIONS ON INTELLIGENT SYSTEMS AND TECHNOLOGY
37. ACM TRANSACTIONS ON INTERNET TECHNOLOGY
38. ACM TRANSACTIONS ON KNOWLEDGE DISCOVERY FROM DATA
39. ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE
40. ACM TRANSACTIONS ON MODELING AND COMPUTER SIMULATION
41. ACM TRANSACTIONS ON MULTIMEDIA COMPUTING COMMUNICATIONS AND APPLICATIONS
42. ACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMS
43. ACM TRANSACTIONS ON RECONFIGURABLE TECHNOLOGY AND SYSTEMS
44. -

Jennifer I. Luebke Curriculum Vitae Jennifer I. Luebke, Ph.D. Associate

Curriculum Vitae

Jennifer I. Luebke, Ph.D.
Associate Professor of Anatomy & Neurobiology
Associate Professor of Psychiatry
Director, Laboratory of Cellular Neurobiology
Boston University School of Medicine
85 East Newton Street, M949, Boston, Massachusetts 02118
Voice: 617-638-4930
Email: [email protected]

Education and Employment History:

1980-1984: B.S. (Biology), Randolph-Macon College, Ashland, Virginia
1984-1986: Laboratory Technician (Laboratory of Cell Biology and Genetics, NIDDK), National Institutes of Health, Bethesda, Maryland
1986-1990: Ph.D. Student (Anatomy & Neurobiology), Boston University School of Medicine, Boston, Massachusetts (Linda L. Wright, mentor)
1990-1992: Postdoctoral Fellow, Department of Psychiatry, Harvard Medical School, Boston, Massachusetts (Robert W. McCarley and Robert W. Greene, mentors)
1992-1995: Postdoctoral Fellow, Department of Physiology, Tufts University Medical School, Boston, Massachusetts (Kathleen Dunlap, mentor)
1995-2003: Assistant Professor, Department of Anatomy & Neurobiology and Department of Psychiatry, Boston University School of Medicine, Boston, Massachusetts
2004-Present: Associate Professor, Department of Anatomy & Neurobiology and Department of Psychiatry, Boston University School of Medicine, Boston, Massachusetts
2010-Present: Adjunct Associate Professor, Department of Neuroscience, Mount Sinai School of Medicine, New York, New York

Research Summary: Research is directed toward understanding alterations in the structure and function of individual cortical pyramidal cells in a rhesus monkey model of normal aging and in transgenic mouse models of neurodegenerative disease. Using whole-cell patch-clamp methods and ultra-high resolution confocal microscopy, Dr. Luebke has demonstrated marked alterations in action potential firing patterns (and underlying ionic currents), glutamatergic and GABAergic synaptic response properties and detailed dendritic and spine architecture in cortical pyramidal cells both in normal aging and in mouse models of neurodegenerative disease. -

TETRAHEDRON the International Journal for the Rapid Publication of Full Original Research Papers and Critical Reviews in Organic Chemistry

TETRAHEDRON
The International Journal for the Rapid Publication of Full Original Research Papers and Critical Reviews in Organic Chemistry

AUTHOR INFORMATION PACK
AUTHOR INFORMATION PACK 3 Oct 2021 www.elsevier.com/locate/tet

TABLE OF CONTENTS

• Description
• Audience
• Impact Factor
• Abstracting and Indexing
• Editorial Board
• Guide for Authors

ISSN: 0040-4020

DESCRIPTION

Tetrahedron publishes full accounts of research having outstanding significance in the broad field of organic chemistry and its related disciplines, such as organic materials and bio-organic chemistry. Regular papers in Tetrahedron are expected to represent detailed accounts of an original study having substantially greater scope and details than that found in a communication, as published in Tetrahedron Letters. Tetrahedron also publishes thematic collections of papers as special issues and 'Reports', commissioned in-depth reviews providing a comprehensive overview of a research area.

Benefits to authors: We also provide many author benefits, such as free PDFs, a liberal copyright policy, special discounts on Elsevier publications and much more. Please see our Guide for Authors for information on article submission. If you require any further information or help, please visit our Support Center.

AUDIENCE

Organic Chemists, Bio-organic Chemists.

IMPACT FACTOR

2020: 2.457 © Clarivate Analytics Journal Citation Reports 2021

ABSTRACTING AND INDEXING

PubMed/Medline; CAB International; Chemical Abstracts; Current Contents - Life Sciences and Clinical Medicine; Current Contents; Current Contents - Physical, Chemical & Earth Sciences; Derwent Drug File; EI Compendex Plus; Embase; Pascal Francis; Research Alert; Science Citation Index; Web of Science; AGRICOLA; BIOSIS Citation Index; Scopus; Reaxys

EDITORIAL BOARD -

Predicting Cognitive Decline: Genetic, Environmental and Lifestyle Risk Factors

Predicting Cognitive Decline: Genetic, Environmental and Lifestyle Risk Factors

Shea J. Andrews
April 2017

A thesis by compilation submitted for the degree of Doctor of Philosophy of The Australian National University

© Copyright by Shea John Frederick Andrews 2017. All Rights Reserved.

Declaration

This work was conducted from February 2013 to August 2016 at the Genome Diversity and Health Group, John Curtin School of Medical Research, The Australian National University, Canberra, ACT. This thesis by compilation consists of five original publications describing the analyses I have performed during my candidature investigating the role of genetic, environmental and lifestyle risk factors in normal cognitive aging. All five publications have been published in Q1 ranked journals according to the SCImago Journal & Country Rankings in the fields of neurology, genetics, psychiatry and mental health, and geriatrics and gerontology. My specific contribution to each manuscript is detailed in the subsequent pages in the form of a statement signed by the senior author of each publication. This document has not been submitted for qualifications at any other academic institution.

Shea Andrews
Canberra, Australia
April 2017

Published Papers

Andrews, SJ, Das, D., Anstey, KJ., Easteal, S. (2015). Interactive effect of APOE genotype and blood pressure on cognitive decline: The PATH through life project. Journal of Alzheimer's Disease. 44(4): 1087-98. DOI: 10.3233/JAD-140630

For this publication, I designed and performed all statistical analyses and wrote the manuscript. A slightly modified version of this paper is presented in Chapter 3.

____________________
Simon Easteal, Senior Author, April 2017

Andrews SJ, Das D, Cherbuin N, Anstey KJ, Easteal S. -

Scholarly Metrics Recommendations for Research Libraries: Deciphering the Trees in the Forest

EUROPE’S RESEARCH LIBRARY NETWORK
Scholarly Metrics Recommendations for Research Libraries: Deciphering the Trees in the Forest

Table of Contents

INTRODUCTION
ABOUT LIBER
1. DISCOVERY & DISCOVERABILITY
1A. Provide Contextual Information To Allow the Discovery of Related Work & Users
1B. Exploit Rich Network Structures & Implement Bibliometric Methods To Enable Discovery
1C. Encourage Sharing Of Library Collections Under Open Licenses
2. SHOWCASING ACHIEVEMENTS
2A. Incentivize Researchers To Share Scholarly Works, Promote Achievements Online & Engage With Audiences
2B. Encourage Researchers To Showcase Scientific Contributions & Monitor Impact
3. SERVICE DEVELOPMENT
3A. Join Forces With Stakeholders To Provide Services Reusing Existing Resources, Tools, Methods & Data
3B. Value Various Levels of Engagement; Favour Standardized, Well-Established Practices & Easy-To-Use Tools
3C. -
3D. Learn About Platforms Before Implementing Them In Services & Homogenize Different Sources
3E. Work With Researchers To Build Awareness Of Benefits But Educate About Weaknesses of Scholarly Metrics
3F. Expand Your Perspective When Developing Services; Avoid Single-Purpose Approaches
3G. Teach Colleagues New Skills & Library Goals & Services
4. RESEARCH ASSESSMENT
4A. Establish Appropriate Goals for Assessment Exercises Before Selecting Databases & Metrics; Be Transparent About Use & Interpretation
4B. Use Different Data Sources to Include Various Scholarly Works & Disciplinary Communication & Publication Cultures
4C. Rely on Objective, Independent & Commonly-Accepted Data Sources To Provide Sound & Transparent Scholarly Metrics
4D. Avoid Using Composite Indicators & Conflating Different Aspects Of Scholarly Works & Impact; Don’t Lose The Multifaceted Nature of Metrics & Distort Interpretation
CONCLUSION & CALL FOR ACTION
REFERENCES

Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: a Multidisciplinary Comparison of Coverage Via Citations

✅ The content of this manuscript has been peer-reviewed and accepted for publication at Scientometrics (ISSN 1588-2861)

Cite as: Martín-Martín, A., Thelwall, M., Orduna-Malea, E., & Delgado López-Cózar, E. (2021). Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: A multidisciplinary comparison of coverage via citations. Scientometrics, 126(1), 871-906. https://doi.org/10.1007/s11192-020-03690-4

Google Scholar, Microsoft Academic, Scopus, Dimensions, Web of Science, and OpenCitations’ COCI: a multidisciplinary comparison of coverage via citations

Alberto Martín-Martín, Mike Thelwall, Enrique Orduna-Malea, Emilio Delgado López-Cózar

Abstract (also in Spanish, Chinese)

Introduction: New sources of citation data have recently become available, such as Microsoft Academic, Dimensions, and the OpenCitations Index of CrossRef open DOI-to-DOI citations (COCI). Although these have been compared to the Web of Science (WoS), Scopus, or Google Scholar, there is no systematic evidence of their differences across subject categories.

Methods: In response, this paper investigates 3,073,351 citations found by these six data sources to 2,515 English-language highly-cited documents published in 2006 from 252 subject categories, expanding and updating the largest previous study.

Results: Google Scholar found 88% of all citations, many of which were not found by the other sources, and nearly all citations found by the remaining sources (89%-94%). A similar pattern held within most subject categories. Microsoft Academic is the second largest overall (60% of all citations), including 82% of Scopus citations and 86% of Web of Science citations. In most categories, Microsoft Academic found more citations than Scopus and WoS (182 and 223 subject categories, respectively), but had coverage gaps in some areas, such as Physics and some Humanities categories. -

Brain Ageing in the New Millennium

Brain ageing in the new millennium

Julian N. Trollor, Michael J. Valenzuela

Objective: This paper examines the current literature pertaining to brain ageing. The objective of this review is to provide an overview of the effects of ageing on brain structure and function and to examine possible mediators of these changes.

Methods: A MEDLINE search was conducted for each area of interest. A selective review was undertaken of relevant articles.

Results: Although fundamental changes in fluid intellectual abilities occur with age, global cognitive decline is not a hallmark of the ageing process. Decline in fluid intellectual ability is paralleled by regionally specific age related changes apparent from both structural and functional neuroimaging studies. The histopathological mediators of these changes do not appear to be reduction in neuronal number, which, with the exception of selected hippocampal regions, remain relatively stable across age. At the molecular level, several mechanisms of age related change have been postulated. Such theoretical models await refinement and may eventually provide a basis for therapy designed to reduce effects of the ageing process. The role of possible protective factors such as ‘brain reserve’, neuroprotective agents and hormonal factors in modifying individual vulnerability to the ageing process has been the focus of a limited number of studies.

Conclusion: Our understanding of the functional and structural changes associated with both healthy and pathological ageing is rapidly gaining in sophistication and complexity. An awareness of the fundamental biological substrates underpinning the ageing process will allow improved insights into vulnerability to neuropsychiatric disease associated with advancing age.

Key words: brain ageing, cognition, dementia, neuroimaging. -

CiteSeerX: 20 Years of Service to Scholarly Big Data

CiteSeerX: 20 Years of Service to Scholarly Big Data

Jian Wu, Old Dominion University, Norfolk, VA, [email protected]
Kunho Kim, Pennsylvania State University, University Park, PA, [email protected]
C. Lee Giles, Pennsylvania State University, University Park, PA, [email protected]

ABSTRACT

We overview CiteSeerX, the pioneer digital library search engine, that has been serving academic communities for more than 20 years (first released in 1998), from three perspectives. The system perspective summarizes its architecture evolution in three phases over the past 20 years. The data perspective describes how CiteSeerX has created searchable scholarly big datasets and made them freely available for multiple purposes. In order to be scalable and effective, AI technologies are employed in all essential modules. To effectively train these models, a sufficient amount of data has been labeled, which can then be reused for training future models. Finally, we discuss the future of CiteSeerX.

... access to a growing number of researchers. Mass digitization partially solved the problem by storing document collections in digital repositories. The advent of modern information retrieval methods significantly expedited the process of relevant search. However, documents are still saved individually by many users. In 1997, three computer scientists at the NEC Research Institute (now NEC Labs), New Jersey, United States – Steven Lawrence, Kurt Bollacker, and C. Lee Giles, conceived an idea to create a network of computer science research papers through citations, which was to be implemented by a search engine, the prototype CiteSeer. Their intuitive idea, automated citation indexing [8], changed the way researchers -

The Effects of Normal Aging on Myelin and Nerve Fibers: a Review∗

Journal of Neurocytology 31, 581–593 (2002)

The effects of normal aging on myelin and nerve fibers: A review∗

ALAN PETERS
Department of Anatomy and Neurobiology, Boston University School of Medicine, 715, Albany Street, Boston, MA 02118, USA
[email protected]

Received 7 January 2003; revised 4 March 2003; accepted 5 March 2003

Abstract

It was believed that the cause of the cognitive decline exhibited by human and non-human primates during normal aging was a loss of cortical neurons. It is now known that significant numbers of cortical neurons are not lost and other bases for the cognitive decline have been sought. One contributing factor may be changes in nerve fibers. With age some myelin sheaths exhibit degenerative changes, such as the formation of splits containing electron dense cytoplasm, and the formation of myelin balloons. It is suggested that such degenerative changes lead to cognitive decline because they cause changes in conduction velocity, resulting in a disruption of the normal timing in neuronal circuits. Yet as degeneration occurs, other changes, such as the formation of redundant myelin and increasing thickness of sheaths, suggest some myelin formation is continuing during aging. Another indication of this is that oligodendrocytes increase in number with age. In addition to the myelin changes, stereological studies have shown a loss of nerve fibers from the white matter of the cerebral hemispheres of humans, while other studies have shown a loss of nerve fibers from the optic nerves and anterior commissure in monkeys. It is likely that such nerve fiber loss also contributes to cognitive decline, because of the consequent decrease in connections between neurons.