Bibliometrics to Altmetrics: a Changing Trend

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

“Altmetrics” Using Google Scholar, Twitter, Mendeley, Facebook

Pre-Print Version Altmetrics of “altmetrics” using Google Scholar, Twitter, Mendeley, Facebook, Google-plus, CiteULike, Blogs and Wiki Saeed-Ul Hassan, Uzair Ahmed Gillani [email protected] Information Technology University, 346-B Ferozepur Road, Lahore (Pakistan) Abstract: We measure the impact of the “altmetrics” field by deploying altmetrics indicators using data from Google Scholar, Twitter, Mendeley, Facebook, Google-plus, CiteULike, Blogs and Wiki during 2010-2014. To capture the social impact of scientific publications, we propose an index called the alt-index, analogous to the h-index. Across the deployed indices, our results show high correlation among the indicators that capture social impact. While we observe a medium Pearson’s correlation (ρ = .247) between the alt-index and the h-index, a relatively high correlation is observed between social citations and scholarly citations (ρ = .646). Interestingly, we find a high turnover of social citations in the field compared with traditional scholarly citations, i.e. social citations are 42.2% more numerous than traditional citations. Social media such as Twitter and Mendeley appear to be the most effective channels of social impact, followed by Facebook and Google-plus. Overall, altmetrics appears to be working well in the field of “altmetrics”. Keywords: Altmetrics, Social Media, Usage Indicators, Alt-index Introduction In the scholarly world, altmetrics are gaining popularity as a supplement and/or alternative to traditional citation-based evaluation metrics such as the impact factor, h-index, etc. (Priem et al., 2010). The concept of altmetrics was initially proposed in 2010 as a generalization of article-level metrics and has its roots in the #altmetrics hashtag (McIntyre et al., 2011).
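The excerpt describes the alt-index only as analogous to the h-index and does not give its formula. As a minimal sketch, assuming the alt-index applies the usual h-index rule to per-paper social mention counts instead of scholarly citation counts, the calculation might look like the following. The function name and both sample lists are hypothetical and are not taken from the paper.

```python
from typing import Iterable


def h_index(counts: Iterable[int]) -> int:
    """Largest h such that at least h items each have a count of h or more."""
    ranked = sorted(counts, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` counts
        else:
            break
    return h


# Hypothetical per-paper counts for one author (illustrative only).
scholarly_citations = [10, 8, 5, 4, 3]
social_mentions = [25, 8, 5, 3, 3, 1]

# Assumption: the alt-index reuses the h-index rule on social mention counts.
print("h-index:", h_index(scholarly_citations))
print("alt-index (assumed definition):", h_index(social_mentions))
```

Under this assumption the two indices can differ for the same author, which is consistent with the abstract reporting only a modest correlation between them.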

How to Search for Academic Journal Articles Online

How to search for Academic Journal Articles Online While you CAN get to the online resources from the library page, I find that getting onto MyUT and clicking the LIBRARY TAB is much easier and much more familiar. I will start from there: Within the Library tab, there is a box called “Electronic Resources” and within that box is a hyperlink that will take you to “Research Databases by Name.” Click that link as shown below: The page it takes you to looks like the picture below. Click “Listed by Name.” This will take you to a list starting with A, and the top selection is the one you want; it is called “Academic Search Complete.” Click it as pictured below: THIS SECTION IS ONLY IF YOU ARE ON AN OFF-CAMPUS COMPUTER: You will be required to log in if you are off campus. The first page looks like this: Use the pull-down menu to find “University of Toledo.” The Branch should default to “Main Campus,” which is what you want. Then click “Submit.” Next it will ask for your first and last name and your Rocket ID. If you want to use your social security number, that is also acceptable (but a little scary). If you use your Rocket ID, be sure to include the R at the beginning of the number. Then click Submit again and you are IN. The opening page has the search box right in the middle. When searching, start narrow and then get broader if you do not find enough results. For example, when researching Ceremony by Leslie Silko, you may want your first search to be “Silko, Ceremony.” If you don’t find enough articles, you may then want to just search “Silko.” Finally, you may have to search for “Native American Literature.” And so on and so forth.

Sci-Hub Provides Access to Nearly All Scholarly Literature

Sci-Hub provides access to nearly all scholarly literature A DOI-citable version of this manuscript is available at https://doi.org/10.7287/peerj.preprints.3100. This manuscript was automatically generated from greenelab/scihub-manuscript@51678a7 on October 12, 2017. Submit feedback on the manuscript at git.io/v7feh or on the analyses at git.io/v7fvJ. Authors • Daniel S. Himmelstein 0000-0002-3012-7446 · dhimmel · dhimmel Department of Systems Pharmacology and Translational Therapeutics, University of Pennsylvania · Funded by GBMF4552 • Ariel Rodriguez Romero 0000-0003-2290-4927 · arielsvn · arielswn Bidwise, Inc • Stephen Reid McLaughlin 0000-0002-9888-3168 · stevemclaugh · SteveMcLaugh School of Information, University of Texas at Austin • Bastian Greshake Tzovaras 0000-0002-9925-9623 · gedankenstuecke · gedankenstuecke Department of Applied Bioinformatics, Institute of Cell Biology and Neuroscience, Goethe University Frankfurt • Casey S. Greene 0000-0001-8713-9213 · cgreene · GreeneScientist Department of Systems Pharmacology and Translational Therapeutics, University of Pennsylvania · Funded by GBMF4552 PeerJ Preprints | https://doi.org/10.7287/peerj.preprints.3100v2 | CC BY 4.0 Open Access | rec: 12 Oct 2017, publ: 12 Oct 2017 Abstract The website Sci-Hub provides access to scholarly literature via full text PDF downloads. The site enables users to access articles that would otherwise be paywalled. Since its creation in 2011, Sci-Hub has grown rapidly in popularity. However, until now, the extent of Sci-Hub’s coverage was unclear. As of March 2017, we find that Sci-Hub’s database contains 68.9% of all 81.6 million scholarly articles, which rises to 85.2% for those published in toll access journals.

Open Access Availability of Scientific Publications

Analytical Support for Bibliometrics Indicators Open access availability of scientific publications* Final Report January 2018 By: Science-Metrix Inc. 1335 Mont-Royal E. ▪ Montréal ▪ Québec ▪ Canada ▪ H2J 1Y6 1.514.495.6505 ▪ 1.800.994.4761 [email protected] ▪ www.science-metrix.com *This work was funded by the National Science Foundation’s (NSF) National Center for Science and Engineering Statistics (NCSES). Any opinions, findings, conclusions or recommendations expressed in this report do not necessarily reflect the views of NCSES or the NSF. The analysis for this research was conducted by SRI International on behalf of NSF’s NCSES under contract number NSFDACS1063289. Contents: Tables, Figures, Abstract

An Initiative to Track Sentiments in Altmetrics

Halevi, G., & Schimming, L. (2018). An Initiative to Track Sentiments in Altmetrics. Journal of Altmetrics, 1(1): 2. DOI: https://doi.org/10.29024/joa.1 PERSPECTIVE An Initiative to Track Sentiments in Altmetrics Gali Halevi and Laura Schimming Icahn School of Medicine at Mount Sinai, US Corresponding author: Gali Halevi ([email protected]) A recent survey from Pew Research Center (NW, Washington & Inquiries 2018) found that over 44 million people receive science-related information from social media channels to which they subscribe. These include a variety of topics such as new discoveries in health sciences as well as “news you can use” information with practical tips (p. 3). Social and news media attention to scientific publications has been tracked for almost a decade by several platforms which aggregate all public mentions of and interactions with scientific publications. Since the number of comments, shares, and discussions of scientific publications can reach an audience of thousands, understanding the overall “sentiment” towards the published research is a mammoth task and typically involves merely reading as many posts, shares, and comments as possible. This paper describes an initiative to track and provide sentiment analysis to large social and news media mentions and label them as “positive”, “negative” or “neutral”. Such labels will enable an overall understanding of the content that lies within these social and news media mentions of scientific publications. Keywords: sentiment analysis; opinion mining; scholarly output Background Traditionally, the impact of research has been measured through citations (Cronin 1984; Garfield 1964; Halevi & Moed 2015; Moed 2006, 2015; Seglen 1997). The number of citations a paper receives over time has been directly correlated to the perceived impact of the article.

Citation Indexing Services: ISI, Wos and Other Facilities

Citation Indexing Services: ISI, WoS and Other Facilities. Volker RW Schaa, GSI Helmholtzzentrum für Schwerionenforschung GmbH, Darmstadt, Germany. JACoW Team Meeting 2012, IFIC@Valencia, Spain, November, 2012.

1 Citation Indexing: What are we talking about? Citation Indexing: How does it work? 2 Impact Factor and “h-index”: Popularity; Usage; JACoW in “ISI Web of Knowledge”? 3 Where do we stand? References, references, references, ...

WoS (Web of Science): “Web of Science” is an online academic citation index provided by Thomson Reuters.

ISI Web of Knowledge: Thomson Scientific & Healthcare bought “ISI” in 1992 and since then it is known as “Thomson ISI”. “Web of Science” is now called “ISI Web of Knowledge”.

ISI (Institute for Scientific Information): ISI was founded in 1960. It publishes the annual “Journal Citation Reports” which lists impact factors for each of the journals that it tracks.

Piracy of Scientific Papers in Latin America: an Analysis of Sci-Hub Usage Data

Developing Latin America Piracy of scientific papers in Latin America: An analysis of Sci-Hub usage data Juan D. Machin-Mastromatteo Alejandro Uribe-Tirado Maria E. Romero-Ortiz This article was originally published as: Machin-Mastromatteo, J.D., Uribe-Tirado, A., and Romero-Ortiz, M. E. (2016). Piracy of scientific papers in Latin America: An analysis of Sci-Hub usage data. Information Development, 32(5), 1806–1814. http://dx.doi.org/10.1177/0266666916671080 Abstract Sci-Hub hosts pirated copies of 51 million scientific papers from commercial publishers. This article presents the site’s characteristics, it criticizes that it might be perceived as a de-facto component of the Open Access movement, it replicates an analysis published in Science using its available usage data, but limiting it to Latin America, and presents implications caused by this site for information professionals, universities and libraries. Keywords: Sci-Hub, piracy, open access, scientific articles, academic databases, serials crisis Scientific articles are vital for students, professors and researchers in universities, research centers and other knowledge institutions worldwide. When academic publishing started, academies, institutions and professional associations gathered articles, assessed their quality, collected them in journals, printed and distributed its copies; with the added difficulty of not having digital technologies. Producing journals became unsustainable for some professional societies, so commercial scientific publishers started appearing and assumed printing, sales and distribution on their behalf, while academics retained the intellectual tasks. Elsevier, among the first publishers, emerged to cover operations costs and profit from sales, now it is part of an industry that grew from the process of scientific communication; a 10 billion US dollar business (Murphy, 2016).

Exploratory Analysis of Publons Metrics and Their Relationship with Bibliometric and Altmetric Impact

Exploratory analysis of Publons metrics and their relationship with bibliometric and altmetric impact José Luis Ortega Institute for Advanced Social Studies (IESA-CSIC), Córdoba, Spain, [email protected] Abstract Purpose: This study aims to analyse the metrics provided by Publons about the scoring of publications and their relationship with impact measurements (bibliometric and altmetric indicators). Design/methodology/approach: In January 2018, 45,819 research articles were extracted from Publons, including all their metrics (scores, number of pre and post reviews, reviewers, etc.). Using the DOI identifier, other metrics from altmetric providers were gathered to compare the scores of those publications in Publons with their bibliometric and altmetric impact in PlumX, Altmetric.com and Crossref Event Data (CED). Findings: The results show that (1) there are important biases in the coverage of Publons according to disciplines and publishers; (2) metrics from Publons present several problems as research evaluation indicators; and (3) correlations between bibliometric and altmetric counts and the Publons metrics are very weak (r<.2) and not significant. Originality/value: This is the first study about the Publons metrics at article level and their relationship with other quantitative measures such as bibliometric and altmetric indicators. Keywords: Publons, Altmetrics, Bibliometrics, Peer-review 1. Introduction Traditionally, peer-review has been the most appropriate way to validate scientific advances. Since the very beginning of the scientific revolution, scientific theories and discoveries have been discussed and agreed upon by the research community as a way to confirm and accept new knowledge. This validation process has survived to the present day as a suitable tool for accepting the most relevant manuscripts to academic journals, allocating research funds or selecting and promoting scientific staff.

A Comprehensive Analysis of the Journal Evaluation System in China

A comprehensive analysis of the journal evaluation system in China Ying HUANG1,2, Ruinan LI1, Lin ZHANG1,2,*, Gunnar SIVERTSEN3 1School of Information Management, Wuhan University, China 2Centre for R&D Monitoring (ECOOM) and Department of MSI, KU Leuven, Belgium 3Nordic Institute for Studies in Innovation, Research and Education, Tøyen, Oslo, Norway Abstract Journal evaluation systems are important in science because they focus on the quality of how new results are critically reviewed and published. They are also important because the prestige and scientific impact of journals is given attention in research assessment and in career and funding systems. Journal evaluation has become increasingly important in China with the expansion of the country’s research and innovation system and its rise as a major contributor to global science. In this paper, we first describe the history and background for journal evaluation in China. Then, we systematically introduce and compare the most influential journal lists and indexing services in China. These are: the Chinese Science Citation Database (CSCD); the journal partition table (JPT); the AMI Comprehensive Evaluation Report (AMI); the Chinese STM Citation Report (CJCR); the “A Guide to the Core Journals of China” (GCJC); the Chinese Social Sciences Citation Index (CSSCI); and the World Academic Journal Clout Index (WAJCI). Some other lists published by government agencies, professional associations, and universities are briefly introduced as well. Thereby, the tradition and landscape of the journal evaluation system in China is comprehensively covered. Methods and practices are closely described and sometimes compared to how other countries assess and rank journals. Keywords: scientific journal; journal evaluation; peer review; bibliometrics

Inter-Rater Reliability and Convergent Validity of F1000prime Peer Review

Accepted for publication in the Journal of the Association for Information Science and Technology Inter-rater reliability and convergent validity of F1000Prime peer review Lutz Bornmann Division for Science and Innovation Studies Administrative Headquarters of the Max Planck Society Hofgartenstr. 8, 80539 Munich, Germany. Email: [email protected] Abstract Peer review is the backbone of modern science. F1000Prime is a post-publication peer review system of the biomedical literature (papers from medical and biological journals). This study is concerned with the inter-rater reliability and convergent validity of the peer recommendations formulated in the F1000Prime peer review system. The study is based on around 100,000 papers with recommendations from Faculty members. Even if intersubjectivity plays a fundamental role in science, the analyses of the reliability of the F1000Prime peer review system show a rather low level of agreement between Faculty members. This result is in agreement with most other studies which have been published on the journal peer review system. Logistic regression models are used to investigate the convergent validity of the F1000Prime peer review system. As the results show, the proportion of highly cited papers among those selected by the Faculty members is significantly higher than expected. In addition, better recommendation scores are also connected with better performance of the papers. Keywords F1000; F1000Prime; Peer review; Highly cited papers; Adjusted predictions; Adjusted predictions at representative values 1 Introduction The first known cases of peer-review in science were undertaken in 1665 for the journal Philosophical Transactions of the Royal Society (Bornmann, 2011). Today, peer review is the backbone of science (Benda & Engels, 2011); without a functioning and generally accepted evaluation instrument, the significance of research could hardly be evaluated.

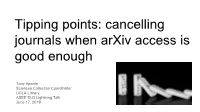

Tipping Points: Cancelling Journals When Arxiv Access Is Good Enough

Tipping points: cancelling journals when arXiv access is good enough. Tony Aponte, Sciences Collection Coordinator, UCLA Library. ASEE ELD Lightning Talk, June 17, 2019.

Preprint explosion! Brian Resnick and Julia Belluz. (2019). The war to free science. Vox. https://www.vox.com/the-highlight/2019/6/3/18271538/open-access-elsevier-california-sci-hub-academic-paywalls

arXiv. (2019). arXiv submission rate statistics. https://arxiv.org/help/stats/2018_by_area/index

2018 Case Study: two physics journals and arXiv

● UCLA: heavy users of arXiv. Not so heavy users of version of record
● Decent UC authorship
● No UC editorial board members

| | 2017 Usage | Annual cost | Cost per use | 2017 Impact Factor |
|---|---|---|---|---|
| Journal A | 103 | $8,315 | ~$80 | 1.291 |
| Journal B | 72 | $6,344 | ~$88 | 0.769 |

Just how many of these articles are OA? OAISSN.py: enter a journal ISSN and a year, and this Python program will tell you how many DOIs from that year have an open access version. Ryan Regier. (2018). OAISSN.py https://github.com/ryregier/OAcounts.

| | % OA articles from 2017 | % OA articles from 2018 |
|---|---|---|
| Journal A | 68% | 64% |
| Journal B | 11% | 8% |

arXiv e-prints becoming closer to publisher versions of record according to UCLA similarity study of arXiv articles vs versions of record. Martin Klein, Peter Broadwell, Sharon E. Farb, Todd Grappone. 2018. Comparing Published Scientific Journal Articles to Their Pre-Print Versions -- Extended Version.
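The cost-per-use figures in the case study follow from dividing each journal's annual cost by its 2017 usage. A minimal Python sketch of that arithmetic is given here, using the usage and cost values quoted in the slides; the `Journal` class and its field names are illustrative assumptions and are not part of the talk or of OAISSN.py.

```python
from dataclasses import dataclass


@dataclass
class Journal:
    """Hypothetical record for one subscription (names are illustrative)."""
    name: str
    uses: int            # 2017 usage count quoted in the slides
    annual_cost: float   # annual subscription cost in US dollars

    def cost_per_use(self) -> float:
        # Cost per use = annual cost divided by number of uses.
        return self.annual_cost / self.uses


journals = [
    Journal("Journal A", uses=103, annual_cost=8315.0),
    Journal("Journal B", uses=72, annual_cost=6344.0),
]

for journal in journals:
    print(f"{journal.name}: ${journal.cost_per_use():.2f} per use")
```

This works out to about $80.73 per use for Journal A and $88.11 for Journal B, in line with the approximate ~$80 and ~$88 reported in the slides.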

Microsoft Academic Search and Google Scholar Citations

Microsoft Academic Search and Google Scholar Citations: A comparative analysis of author profiles José Luis Ortega VICYT-CSIC, Serrano, 113 28006 Madrid, Spain, [email protected] Isidro F. Aguillo Cybermetrics Lab, CCHS-CSIC, Albasanz, 26-28 28037 Madrid, Spain, [email protected] Abstract This paper aims to make a comparative analysis between the personal profiling capabilities of the two most important free citation-based academic search engines, namely Microsoft Academic Search (MAS) and Google Scholar Citations (GSC). Author profiles can be very useful for evaluation purposes once the advantages and the shortcomings of these services are described and taken into consideration. A total of 771 personal profiles appearing in both the MAS and the GSC databases are analysed. Results show that the GSC profiles include more documents and citations than those in MAS, but with a strong bias towards the Information and Computing sciences, while the MAS profiles are disciplinarily better balanced. MAS shows technical problems such as a higher number of duplicated profiles and a lower updating rate than GSC. It is concluded that both services could be used for evaluation purposes only if they are applied along with other citation indexes as a way to supplement that information. Keywords: Microsoft Academic Search; Google Scholar Citations; Web bibliometrics; Academic profiles; Research evaluation; Search engines Introduction In November 2009, Microsoft Research Asia started a new web search service specialized in scientific information. Even though Google had already introduced an academic search engine (Google Scholar) in 2004, the proposal of Microsoft Academic Search (MAS) went beyond a mere document retrieval service that counts citations.