Appendix B Development Tools

Recommended publications

Redhawk Linux User's Guide

Linux® User’s Guide
0898004-520
May 2007

Copyright 2007 by Concurrent Computer Corporation. All rights reserved. This publication or any part thereof is intended for use with Concurrent products by Concurrent personnel, customers, and end-users. It may not be reproduced in any form without the written permission of the publisher.

The information contained in this document is believed to be correct at the time of publication. It is subject to change without notice. Concurrent makes no warranties, expressed or implied, concerning the information contained in this document.

To report an error or comment on a specific portion of the manual, photocopy the page in question and mark the correction or comment on the copy. Mail the copy (and any additional comments) to Concurrent Computer Corporation, 2881 Gateway Drive, Pompano Beach, Florida, 33069. Mark the envelope “Attention: Publications Department.” This publication may not be reproduced for any other reason in any form without written permission of the publisher.

Concurrent Computer Corporation and its logo are registered trademarks of Concurrent Computer Corporation. All other Concurrent product names are trademarks of Concurrent while all other product names are trademarks or registered trademarks of their respective owners. Linux® is used pursuant to a sublicense from the Linux Mark Institute.

Printed in U.S.A.

Revision History:

| Date | Level | Effective With |
|---|---|---|
| August 2002 | 000 | RedHawk Linux Release 1.1 |
| September 2002 | 100 | RedHawk Linux Release 1.1 |
| December 2002 | 200 | RedHawk Linux Release 1.2 |
| April 2003 | 300 | RedHawk Linux Release 1.3, 1.4 |
| December 2003 | 400 | RedHawk Linux Release 2.0 |
| March 2004 | 410 | RedHawk Linux Release 2.1 |
| July 2004 | 420 | RedHawk Linux Release 2.2 |
| May 2005 | 430 | RedHawk Linux Release 2.3 |
| March 2006 | 500 | RedHawk Linux Release 4.1 |
| May 2006 | 510 | RedHawk Linux Release 4.1 |
| May 2007 | 520 | RedHawk Linux Release 4.2 |

Preface

Scope of Manual

This manual consists of three parts. -

Debugging Kernel Problems

Debugging Kernel Problems
by Greg Lehey ([email protected])
Edition for AsiaBSDCon 2004, Taipei, 13 March 2004
Copyright © 1995-2004 Greg Lehey

Preface

Debugging kernel problems is a black art. Not many people do it, and documentation is rare, inaccurate and incomplete. This document is no exception: faced with the choice of accuracy and completeness, I chose to attempt the latter. As usual, time was the limiting factor, and this draft is still in beta status. This is a typical situation for the whole topic of kernel debugging: building debug tools and documentation is expensive, and the people who write them are also the people who use them, so there's a tendency to build as much of the tool as necessary to do the job at hand. If the tool is well-written, it will be reusable by the next person who looks at a particular area; if not, it might fall into disuse. Consider this book a starting point for your own development of debugging tools, and remember: more than anywhere else, this is an area with ``some assembly required''.

1 Introduction

Operating systems fail. All operating systems contain bugs, and they will sometimes cause the system to behave incorrectly. The BSD kernels are no exception. Compared to most other operating systems, both free and commercial, the BSD kernels offer a large number of debugging tools. This tutorial examines the options available both to the experienced end user and also to the developer.

In this tutorial, we’ll look at the following topics:

• How and why kernels fail. -

Ethereal Developer's Guide Draft 0.0.2 (15684) for Ethereal 0.10.11

Ethereal Developer's Guide: Draft 0.0.2 (15684) for Ethereal 0.10.11
by Ulf Lamping
Copyright © 2004-2005 Ulf Lamping

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU General Public License, Version 2 or any later version published by the Free Software Foundation. All logos and trademarks in this document are property of their respective owner.

Table of Contents

Preface
  1. Foreword
  2. Who should read this document?
  3. Acknowledgements
  4. About this document
  5. Where to get the latest copy of this document?
  6. Providing feedback about this document
I. Ethereal Build Environment
  1. Introduction -

A.5.1. Linux Programming and the GNU Toolchain

Making the Transition to Linux: A Guide to the Linux Command Line Interface for Students
Joshua Glatt
Copyright © 2008 Joshua Glatt

Revision History

| Revision | Date | Author | Changes |
|---|---|---|---|
| 1.31 | 14 Sept 2008 | jg | Various small but useful changes, preparing to revise section on vi |
| 1.30 | 10 Sept 2008 | jg | Revised further reading and suggestions, other revisions |
| 1.20 | 27 Aug 2008 | jg | Revised first chapter, other revisions |
| 1.10 | 20 Aug 2008 | jg | First major revision |
| 1.00 | 11 Aug 2008 | jg | First official release (w00t) |
| 0.95 | 06 Aug 2008 | jg | Second beta release |
| 0.90 | 01 Aug 2008 | jg | First beta release |

License

This document is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License [http://creativecommons.org/licenses/by-nc-sa/3.0/us/].

Legal Notice

This document is distributed in the hope that it will be useful, but it is provided “as is” without express or implied warranty of any kind; without even the implied warranties of merchantability or fitness for a particular purpose. Although the author makes every effort to make this document as complete and as accurate as possible, the author assumes no responsibility for errors or omissions, nor does the author assume any liability whatsoever for incidental or consequential damages in connection with or arising out of the use of the information contained in this document. The author provides links to external websites for informational purposes only and is not responsible for the content of those websites. -

Linux Kernel and Driver Development Training Slides

Linux Kernel and Driver Development Training
© Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license.
Latest update: October 9, 2021.
Document updates and sources: https://bootlin.com/doc/training/linux-kernel
Corrections, suggestions, contributions and translations are welcome! Send them to [email protected]
Bootlin: embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com

Rights to copy

© Copyright 2004-2021, Bootlin
License: Creative Commons Attribution - Share Alike 3.0
https://creativecommons.org/licenses/by-sa/3.0/legalcode

You are free:
• to copy, distribute, display, and perform the work
• to make derivative works
• to make commercial use of the work

Under the following conditions:
• Attribution. You must give the original author credit.
• Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one.
• For any reuse or distribution, you must make clear to others the license terms of this work.
• Any of these conditions can be waived if you get permission from the copyright holder.

Your fair use and other rights are in no way affected by the above.
Document sources: https://github.com/bootlin/training-materials/

Hyperlinks in the document

There are many hyperlinks in the document:
• Regular hyperlinks: https://kernel.org/
• Kernel documentation links: dev-tools/kasan
• Links to kernel source files and directories: drivers/input/ include/linux/fb.h
• Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations

Company at a glance

• Engineering company created in 2004, named “Free Electrons” until Feb. -

Hands on #1 Overview

Hands On #1

Overview (see Wednesday’s hands on)

Part 1: Starting and familiarizing
• Where is your installation?
• Getting the example programs
• Running novice examples: N01, N03, N02 …

Part 2: Looking into Geant4, trying it out with exercises
• Examine cross sections
• Simulate depth dose curve
• Compute and plot Bragg curve

Addenda: other examples, histogramming

Your Geant4 installation

VMware Player users under Windows or Mac OS:
• all files downloaded from http://geant4.in2p3.fr/cenbg/vmware.html
• in principle, no installation needed
• all your peripherals should be operational (WiFi, disks, …)

Installation from beginning:
• CERN link: http://geant4.web.cern.ch/geant4/support/download.shtml
• SLAC link: http://geant4.slac.stanford.edu/installation/
• User forum: http://geant4-hn.slac.stanford.edu:5090/HyperNews/public/get/installconfig.html
• Installation guide: http://geant4.web.cern.ch/geant4/UserDocumentation/UsersGuides/InstallationGuide/html/index.html

This Hands On will help you check that your installation of Geant4 is correct. If not, we can try to help during this Hands On…

Access your Geant4 installation for VMware users

• Start the VMware player software
• Start your VMware machine
• Log onto the VMware machine (username: local1, password: local1)
• Open a terminal (right click on desktop with mouse)
• You are now working under Scientific Linux 4.2 with gcc 3.4.4
• By default on your Windows PC, the directory /mnt/hgfs/echanges is a link to C:\

Tips for VMware users (1/2)

• Geant4 8.3 installation path: /usr/local/geant4
• you need root privileges -

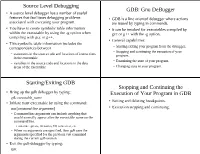

Source Level Debugging GDB: Gnu Debugger Starting/Exiting GDB Stopping and Continuing the Execution of Your Program In

Source Level Debugging

● A source level debugger has a number of useful features that facilitate debugging problems associated with executing your program.
● You have to create symbolic table information within the executable by using the -g option when compiling with gcc or g++.
● This symbolic table information includes the correspondences between
– statements in the source code and locations of instructions in the executable
– variables in the source code and locations in the data areas of the executable

GDB: Gnu DeBugger

● GDB is a line oriented debugger where actions are issued by typing in commands.
● It can be invoked for executables compiled by gcc or g++ with the -g option.
● General capabilities:
– Starting/exiting your program from the debugger.
– Stopping and continuing the execution of your program.
– Examining the state of your program.
– Changing state in your program.

Starting/Exiting GDB

● Bring up the gdb debugger by typing: gdb executable_name
● Initiate your executable by using the command: run [command-line arguments]
– Command line arguments can include anything that would normally appear after the executable name on the command line (run-time options, filenames, I/O redirection, etc.).
– When no arguments are specified, then gdb uses the arguments specified for the previous run command during the current gdb session.
● Exit the gdb debugger by typing: quit

Stopping and Continuing the Execution of Your Program in GDB

● Setting and deleting breakpoints.
● Execution stepping and continuing.

Setting and Deleting Breakpoints

● Can set a breakpoint to stop:
– at a particular source line number or function
– when a specified condition occurs
● General form.

Examples of Setting and Deleting Breakpoints

(gdb) break # sets breakpoint at current line number
(gdb) break 74 # sets breakpoint at line 74 in the current file
-
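The GDB workflow summarized in the slides above (compile with -g, set a breakpoint, run with arguments, quit) is easiest to try on a very small function. The following is a minimal sketch, not part of the original slides: the helper name `sum_args` and the file name `sum.c` used below are hypothetical, and a `main` that forwards `argc` and `argv` to the helper is assumed to exist alongside it.

```c
#include <assert.h>
#include <stdlib.h>

/*
 * Hypothetical helper (not from the slides): interprets argv[1..argc-1]
 * as base-10 integers and returns their sum.
 */
long sum_args(int argc, char **argv)
{
    long total = 0;

    assert(argv != NULL);
    for (int i = 1; i < argc; i++) {
        /* A breakpoint on this line lets you inspect total per argument. */
        total += strtol(argv[i], NULL, 10);
    }
    return total;
}
```

Assuming the code is built with `gcc -g -o sum sum.c`, a session could start with `gdb ./sum`, set a breakpoint with `break sum_args`, start the program with `run 1 2 3`, inspect state with `print total`, resume with `continue`, and leave with `quit`. Without the -g option the breakpoint can still be set on the function name, but source lines and local variables would not be available.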

Devt: Let the Device Talk

Iowa State University Capstones, Theses and Dissertations
Creative Components
Summer 2020

DevT: Let the Device Talk
Chander Bhushan Gupta

Follow this and additional works at: https://lib.dr.iastate.edu/creativecomponents
Part of the Data Storage Systems Commons

Recommended Citation
Gupta, Chander Bhushan, "DevT: Let the Device Talk" (2020). Creative Components. 585. https://lib.dr.iastate.edu/creativecomponents/585

This Creative Component is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Creative Components by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected].

DevT: Let the Device Talk
by Chander Bhushan Gupta

A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE
Major: Computer Engineering
Program of Study Committee: Mai Zheng, Major Professor

The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this creative component. The Graduate College will ensure this creative component is globally accessible and will not permit alterations after a degree is conferred.

Iowa State University, Ames, Iowa, 2020
Copyright © Chander Bhushan Gupta, 2020. All rights reserved.

TABLE OF CONTENTS

LIST OF TABLES
LIST OF FIGURES
ACKNOWLEDGMENTS
ABSTRACT
CHAPTER 1. INTRODUCTION
  1.1 Motivation
  1.2 Related Work
  1.3 Outline
CHAPTER 2. REVIEW OF LITERATURE
  2.1 Why FEMU? -

Thread Scheduling in Multi-Core Operating Systems Redha Gouicem

Thread Scheduling in Multi-core Operating Systems
Redha Gouicem

To cite this version: Redha Gouicem. Thread Scheduling in Multi-core Operating Systems. Computer Science [cs]. Sorbonne Université, 2020. English. tel-02977242

HAL Id: tel-02977242
https://hal.archives-ouvertes.fr/tel-02977242
Submitted on 24 Oct 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Ph.D thesis in Computer Science
Thread Scheduling in Multi-core Operating Systems: How to Understand, Improve and Fix your Scheduler
Redha GOUICEM
Sorbonne Université, Laboratoire d’Informatique de Paris 6, Inria Whisper Team

PH.D. DEFENSE: 23 October 2020, Paris, France

JURY MEMBERS:
• Mr. Pascal Felber, Full Professor, Université de Neuchâtel (Reviewer)
• Mr. Vivien Quéma, Full Professor, Grenoble INP (ENSIMAG) (Reviewer)
• Mr. Rachid Guerraoui, Full Professor, École Polytechnique Fédérale de Lausanne (Examiner)
• Ms. Karine Heydemann, Associate Professor, Sorbonne Université (Examiner)
• Mr. Etienne Rivière, Full Professor, University of Louvain (Examiner)
• Mr. Gilles Muller, Senior Research Scientist, Inria (Advisor)
• Mr. Julien Sopena, Associate Professor, Sorbonne Université (Advisor)

ABSTRACT

In this thesis, we address the problem of schedulers for multi-core architectures from several perspectives: design (simplicity and correctness), performance improvement and the development of application-specific schedulers. -

Linux Update

Linux Kernel Debugging Tools
Jason Wessel - Product Architect for WR Linux Core Runtime - Kernel.org KGDB Maintainer
June 23rd, 2010
© 2010 Wind River

Agenda

• Talk about a number of common kernel debugging tools
• Describe high level tactics for using kernel debugging tools
• Demonstrate using several tools

The harsh reality is you could spend a whole day or more talking about each tool.
*** Later find slides/code at: http://kgdb.wiki.kernel.org ***

Exciting news

• For 2.6.35-rc1
– kdb (the kernel debug shell) merged to mainline!
– Ability to debug before console_init()
– You can use the EHCI debug port with kgdb
• There are thoughts about next few years of kgdb/kdb
– Implement complete atomic kernel mode setting
– Continue to improve the non ehci debug usb console
– Improve keyboard panic handler
– Further integration with kprobes and hw assisted debugging
– netconsole / kgdboe v2
– Use dedicated HW queues
• The only bad news is it takes a long time to get there.

Is there anything better than kgdb?

• Good
– kgdb / kdb
• Better
– QEMU/KVM backend debugger
– Virtual box backend debugger
– vmware backend debugger
– kdump/kexec
• Best
– ICE (usb or ethernet)
– Simics (because it has backward stepping)
• In a class by itself
– printk() / trace_printk()

The challenge is knowing what to use when... Working tools rock!

A bit about printk() and timing

• printk is probably the #1 most reliable debug
• Any seasoned kernel developer has surely experienced:
– Add a printk and the bug goes away!
– Timing in -

The Data Display Debugger ddd [--gdb] [--dbx] [--xdb] [--jdb]

NAME

ddd, xddd - the data display debugger

SYNOPSIS

ddd [--gdb] [--dbx] [--xdb] [--jdb] [--pydb] [--perl] [--debugger name] [--[r]host [username@]hostname] [--help] [--trace] [--version] [--configuration] [options...] [program [core | process-id]]

but usually just

ddd program

DESCRIPTION

The purpose of a debugger such as DDD is to allow you to see what is going on “inside” another program while it executes, or what another program was doing at the moment it crashed.

DDD can do four main kinds of things (plus other things in support of these) to help you catch bugs in the act:

• Start your program, specifying anything that might affect its behavior.
• Make your program stop on specified conditions.
• Examine what has happened, when your program has stopped.
• Change things in your program, so you can experiment with correcting the effects of one bug and go on to learn about another.

“Classical” UNIX debuggers such as the GNU debugger (GDB) provide a command-line interface and a multitude of commands for these and other debugging purposes. DDD is a comfortable graphical user interface around an inferior GDB, DBX, XDB, JDB, Python debugger, or Perl debugger.

INVOKING DDD

You can run DDD with no arguments or options. However, the most usual way to start DDD is with one argument or two, specifying an executable program as the argument:

ddd program

You can also start with both an executable program and a core file specified:

ddd program core

You can, instead, specify a process ID as a second argument, if you want to debug a running process:

ddd program 1234

would attach DDD to process 1234 (unless you also have a file named ‘1234’; DDD does check for a core file first). -

Debugging and Tuning Linux for EDA

Debugging and Tuning Linux for EDA
Fabio Somenzi, [email protected]
University of Colorado at Boulder

Outline

• Compiling: gcc, icc/ecc
• Debugging: valgrind, purify, ddd
• Profiling: gcov, gprof, quantify, vtl, valgrind

Compiling

• Compiler options related to static checks, debugging, optimization
• Profiling-driven optimization

Compiling with GCC

gcc -Wall -O3 -g
• reports most uses of potentially uninitialized variables
• -O3 (or -O6) necessary to trigger dataflow analysis
• can be fooled by: if (cond) x = VALUE; ... if (cond) y = x;
• Uninitialized variables not considered for register allocation may escape
• Achieving -Wall-clean code is not too painful and highly desirable
• Compiling C code with g++ is more painful, but has its rewards

Compiling with GCC

gcc -mcpu=pentium4 -malign-double
• -mcpu=pentium4 optimizes for the Pentium 4, but produces code that runs on any x86
• -march=pentium4 uses Pentium 4-specific instructions
• -malign-double forces alignment of double’s to double-word boundary; use either for all files or for none

gcc -mfpmath=sse
• Controls the use of SSE instructions for floating point

For complete listing, check gcc’s info page under Invoking gcc → Submodel Options

Compiling with ICC

ICC is the Intel compiler for IA-32 systems. http://www.intel.com/software/products/

icc -O3 -g -ansi -w2 -Wall
• -O3: Aggressive optimization
• -g: Retain debugging info
• -ansi: Strict ANSI conformance
• -w2: Display remarks, warnings, and errors
• -Wall: Enable all warnings
• Remarks tend to be a bit overwhelming
• Fine grain control over diagnostic: see man page

Compiling with ICC

icc -tpp7
• Optimize instruction scheduling
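The pattern that the GCC slide says can fool the uninitialized-variable check is easier to see as a complete function. The sketch below is an illustration only: the function name `guarded_copy` and the value chosen for `VALUE` are assumptions, since the slides give just the two `if (cond)` statements. At run time `x` is never read without having been written, yet proving that requires the compiler to correlate the two tests of `cond`, so depending on the gcc version and optimization level the diagnostic for this code may be wrong or missing.

```c
#include <assert.h>

#define VALUE 42  /* assumed constant; the slides only name it VALUE */

/*
 * x is assigned only when cond is true and read only when cond is true,
 * so no uninitialized read happens at run time. A dataflow analysis that
 * does not correlate the two conditions cannot tell.
 */
int guarded_copy(int cond)
{
    int x;      /* deliberately left without an initializer */
    int y = 0;

    if (cond)
        x = VALUE;

    /* ... unrelated code that does not modify cond ... */

    if (cond)
        y = x;  /* reached only when x was assigned above */

    assert(y == (cond ? VALUE : 0));  /* run-time sanity check */
    return y;
}
```

Compiling this with `gcc -Wall -O3 -g -c` and again with `-O0` is a quick way to see whether a given compiler version reports `x` here. Initializing `x` at its declaration silences any warning, at the cost of hiding a genuine bug if the second condition ever diverges from the first.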