Exploring Misogyny Across the Manosphere in Reddit

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Intersectionality: The Fourth Wave Feminist Twitter Community

#Intersectionality: The Fourth Wave Feminist Twitter Community

Tegan Zimmerman (PhD, Comparative Literature, University of Alberta) is an Assistant Professor of English/Creative Writing and Women’s Studies at Stephens College in Columbia, Missouri. A recent Visiting Fellow in the Centre for Contemporary Women’s Writing and the Institute of Modern Languages Research at the University of London, Zimmerman specializes in contemporary women’s historical fiction and contemporary gender theory. Her book Matria Redux: Caribbean Women’s Historical Fiction, forthcoming from Northwestern University Press, examines the concepts of maternal history and maternal genealogy.

Abstract: This article analyzes the term “intersectionality” as defined by Kimberlé Williams Crenshaw in relation to the digital turn: it argues that intersectionality is the dominant framework being employed by fourth wave feminists and that is most apparent on social media, especially on Twitter.

Intersectionality, is the marrow within the bones of feminism. Without it, feminism will fracture even further – Roxane Gay (2013)

This article analyzes the term “intersectionality” as defined by Kimberlé Williams Crenshaw (1989, 1991) in relation to the digital turn and, in doing so, considers how this concept is being employed by fourth wave feminists on Twitter. Presently, little scholarship has been devoted to fourth wave feminism and its engagement with intersectionality; however, some notable critics include Kira Cochrane, Michelle Goldberg, Mikki Kendall, Ealasaid Munro, Lola Okolosie, and Roopika Risam.1 Intersectionality, with its consideration of class, race, age, ability, sexuality, and gender as intersecting loci of discriminations or privileges, is now the overriding principle among today’s feminists, manifest by theorizing tweets and hashtags on Twitter. Because fourth wave feminism, more so than previous feminist movements, focuses on and takes up online technolo-

The “Great Meme War:” the Alt-Right and Its Multifarious Enemies

Angles: New Perspectives on the Anglophone World, 10 | 2020, Creating the Enemy. The “Great Meme War:” the Alt-Right and its Multifarious Enemies. Maxime Dafaure. Electronic version URL: http://journals.openedition.org/angles/369 ISSN: 2274-2042. Publisher: Société des Anglicistes de l'Enseignement Supérieur. Electronic reference: Maxime Dafaure, « The “Great Meme War:” the Alt-Right and its Multifarious Enemies », Angles [Online], 10 | 2020, Online since 01 April 2020, connection on 28 July 2020. URL: http://journals.openedition.org/angles/369. This text was automatically generated on 28 July 2020. Angles. New Perspectives on the Anglophone World is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Memes and the metapolitics of the alt-right

1 The alt-right has been a major actor of the online culture wars of the past few years. Since it came to prominence during the 2014 Gamergate controversy,1 this loosely-defined, puzzling movement has achieved mainstream recognition and has been the subject of discussion by journalists and scholars alike. Although the movement is notoriously difficult to define, a few overarching themes can be delineated: unequivocal rejections of immigration and multiculturalism among most, if not all, alt-right subgroups; an intense criticism of feminism, in particular within the manosphere community, which itself is divided into several clans with different goals and subcultures (men’s rights activists, Men Going Their Own Way, pick-up artists, incels).2 Demographically speaking, an overwhelming majority of alt-righters are white heterosexual males, one of the major social categories who feel dispossessed and resentful, as pointed out as early as in the mid-20th century by Daniel Bell, and more recently by Michael Kimmel (Angry White Men 2013) and Dick Howard (Les Ombres de l’Amérique 2017).

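Connecting Structures: Resistance, Heroic Masculinity and Anti-Feminism as Bridging Narratives within Group Radicalization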

DOI: 10.4119/ijcv-3805. IJCV: Vol. 14(2)/2020. Connecting Structures: Resistance, Heroic Masculinity and Anti-Feminism as Bridging Narratives within Group Radicalization. David Meiering, Aziz Dziri, Naika Foroutan (all Berlin Institute for Integration and Migration Research (BIM) at the Humboldt University Berlin).

The IJCV provides a forum for scientific exchange and public dissemination of up-to-date scientific knowledge on conflict and violence. The IJCV is independent, peer reviewed, open access, and included in the Social Sciences Citation Index (SSCI) as well as other relevant databases (e.g., SCOPUS, EBSCO, ProQuest, DNB). The topics on which we concentrate, conflict and violence, have always been central to various disciplines. Consequently, the journal encompasses contributions from a wide range of disciplines, including criminology, economics, education, ethnology, history, political science, psychology, social anthropology, sociology, the study of religions, and urban studies. All articles are gathered in yearly volumes, identified by a DOI with article-wise pagination. For more information please visit www.ijcv.org

Suggested Citation:
APA: Meiering, D., Dziri, A., & Foroutan, N. (2020). Connecting structures: Resistance, heroic masculinity and anti-feminism as bridging narratives within group radicalization. International Journal of Conflict and Violence, 14(2), 1-19. doi: 10.4119/ijcv-3805
Harvard: Meiering, David, Dziri, Aziz, Foroutan, Naika. 2020. Connecting Structures: Resistance, Heroic Masculinity and Anti-Feminism as Bridging Narratives within Group Radicalization. International Journal of Conflict and Violence 14(2): 1-19. doi: 10.4119/ijcv-3805

This work is licensed under the Creative Commons Attribution-NoDerivatives License.

The Incel Lexicon: Deciphering the Emergent Cryptolect of a Global Misogynistic Community

The incel lexicon: Deciphering the emergent cryptolect of a global misogynistic community. Kelly Gothard,1 David Rushing Dewhurst,2 Joshua R. Minot,1 Jane Lydia Adams,1 Christopher M. Danforth,1, 3, 4 and Peter Sheridan Dodds1, 3, 4. 1 Computational Story Lab, Vermont Complex Systems Center, MassMutual Center of Excellence for Complex Systems and Data Science, Vermont Advanced Computing Core, The University of Vermont, Burlington, VT 05401. 2 Charles River Analytics, 625 Mount Auburn Street, Cambridge, MA 02138. 3 Department of Mathematics & Statistics, University of Vermont, Burlington, VT 05401. 4 Department of Computer Science, University of Vermont, Burlington, VT 05401. (Dated: May 26, 2021)

Evolving out of a gender-neutral framing of an involuntary celibate identity, the concept of ‘incels’ has come to refer to an online community of men who bear antipathy towards themselves, women, and society-at-large for their perceived inability to find and maintain sexual relationships. By exploring incel language use on Reddit, a global online message board, we contextualize the incel community's online expressions of misogyny and real-world acts of violence perpetrated against women. After assembling around three million comments from incel-themed Reddit channels, we analyze the temporal dynamics of a data-driven rank ordering of the glossary of phrases belonging to an emergent incel lexicon. Our study reveals the generation and normalization of an extensive coded misogynist vocabulary in service of the group's identity.

I. INTRODUCTION

Incels are self-identified members of a global, online subculture whose members subscribe to [...] with the incel community, such as the Tallahassee yoga studio shooting, during which Scott Beierle shot and killed two women shortly after referencing Elliot Rodger in videos online [5].

On Men's Pro- and Anti-Feminist Engagement (Om mäns pro- och antifeministiska engagemang)

On Men's Pro- and Anti-Feminist Engagement (Om mäns pro- och antifeministiska engagemang). Jonas Mollwing. Department of Education; Umeå Centre for Gender Studies, Graduate School for Gender Studies. Umeå 2021.

This work is protected by Swedish copyright law (Act 1960:729). Dissertation for the degree of Doctor of Philosophy. ISBN: 978-91-7855-477-5 (print). ISBN: 978-91-7855-478-2 (pdf). ISSN: 0281-6768. Academic dissertations at the Department of Education, Umeå University; no. 127. Cover: Gabriella Dekombis, Umeå University. Electronic version available at: http://umu.diva-portal.org/ Printed by: Cityprint i Norr AB, Umeå, Sweden 2021.

To Njord, Egil, Freja and Sol

Table of Contents: Introduction (Opening remarks; Profeminism and antifeminism; Aim and research questions; Outline of the thesis); Scientific approach (Philosophy-of-science assumptions; The dimensions of knowledge; The depth of reality)

On Misogyny, the Classics, and the Political Right: for an Ethical Scholarship of Ancient History

ISSN 2421-0730. No. 1, June 2019. FRANCESCO ROTIROTI,* On Misogyny, the Classics, and the Political Right: For an Ethical Scholarship of Ancient History. D. ZUCKERBERG, Not All Dead White Men: Classics and Misogyny in the Digital Age, Harvard University Press, Cambridge, Mass., and London 2018, pp. 270.

I. Not All Dead White Men is the first book by Donna Zuckerberg, an American classicist educated at Princeton University, best known as the founder and chief editor of Eidolon, an online journal whose declared mission is to “mak[e] the classics political and personal, feminist and fun.”1 Some of the most attractive and remarkable of Eidolon’s articles are precisely those which adopt a progressive, inclusive, and feminist perspective on the place of ancient history and the classics in present-day politics. The book under review has a similar inspiration, concerned as it is with the appropriation of the classics by what is often described as the “manosphere,” a loose network of online communities connected by their awareness of the purported misandry of present-day societies and fostering

* Postdoctoral Researcher in Legal History at “Magna Graecia” University of Catanzaro.
1 Eidolon’s Mission Statement, in Eidolon, Aug. 21, 2017, https://eidolon.pub/eidolons-mission-statement-d026012023d5. On the political element, see esp. D. ZUCKERBERG, Welcome to the New Eidolon! in Eidolon, Aug.

Defections by GOP Kill Health Bill For

TODAY’S ISSUE: DAILY BRIEFING, A2 • TRIBUTES, A5 • WORLD & BUSINESS, B5 • CLASSIFIEDS, B6 • PUZZLES & TV, C3

CLASS B BASEBALL CHAMPION: Baird bests Astro in Game 5 (SPORTS | B1). SCRAPPERS ROLL: 7-run 7th bedevils Batavia (SPORTS | B1). COMING TO COVELLI: Stevie Nicks show set for Sept. 15 (VALLEY LIFE | C1). 50% OFF vouchers. DETAILS, A2.

FOR DAILY & BREAKING NEWS. LOCALLY OWNED SINCE 1869. TUESDAY, JULY 18, 2017. 75¢

Lordstown police: Pot smuggling via Fusion has occurred before
Marijuana bales were found on popular Ford car at rail yard in 2015

VINDICATOR EXCLUSIVE. By ED RUNYAN

LORDSTOWN. Last week was not the first time that marijuana made its way from Mexico to the United States inside the spare-tire compartments of Ford Fusions via the CSX rail yard in Lordstown. An Aug. 7, 2015, incident at the same rail yard, which is off state [...]ment on Monday provided the details after Jeff Orr, former Trumbull Ashtabula Group Law Enforcement Task Force commander, revealed the case to The Vindicator. In that case, bales of marijuana were found attached to the un[...]

Detective Chris Bordonaro of the Lordstown police said the rail yard notified Lordstown officers after finding 50 to 100 pounds of marijuana attached to the Fusion. It appeared tape was used to secure the marijuana, and remnants of the tape were found on 11 other vehicles, suggesting the drugs had been off-loaded from [...]

Law enforcement seized marijuana in the trunks of Ford Fusions at dealerships in Portage, Stark and Columbiana counties, as well as one in Pennsylvania. The rail car carrying the Fusions had come from Hermosillo, Mexico, and had sat idle in Chicago 18 hours before coming to Lordstown.

To What Extent Incels' Misogynistic and Violent Attitudes Towards

To what extent Incels’ misogynistic and violent attitudes towards women are driven by their unsatisfied mating needs and entitlement to sex? Bachelor Thesis in Psychology. Faculty of Behavioural, Management and Social Sciences, Department of Psychology, University of Twente. Author: Sara Konutgan (s1839454). Supervisor: Dr. Pelin Gül & Noortje Kloos, MSc.

Abstract: The purpose of this research is to investigate which psychological factors drive Incels to have violent and misogynistic attitudes towards women. This paper focuses on the investigation of two psychological factors: entitlement and frustrated mating needs. A cross-sectional survey design was used to gather data through an online survey. The sample involved 139 participants of which 28 participants define themselves as Incels. The results of the multiple regression analysis indicated that participants who have higher feelings of entitlement to sex and higher struggles with romantic and sexual relationships show a higher level of misogyny and violent attitudes towards women. This study contributes to the understanding of the psychological factors that drive misogynistic ideologies that exist among Incels as well as in society.

Keywords: Incels, Entitlement, Misogyny, Sexual Frustration, Romantic Frustration, Frustrated Mating Needs

1 Introduction

The term “Incel” is the short form for being an involuntary celibate which can be defined as being unable to find a partner for sexual or romantic relationships even though it is desired (Young, 2019). Some Incels are sharing their attitudes and opinions in online forums. Although not all members of Incel communities have misogynistic or violent attitudes towards women, some members are sharing misogyny (Ging, 2017).

Exhibit SS-LLL to the Declaration of Anna M

Case 3:17-cv-01017-BEN-JLB Document 6-7 Filed 05/26/17 PageID.644 Page 1 of 105

C.D. Michel – SBN 144258
Sean A. Brady – SBN 262007
Anna M. Barvir – SBN 268728
Matthew D. Cubeiro – SBN 291519
MICHEL & ASSOCIATES, P.C.
180 E. Ocean Boulevard, Suite 200
Long Beach, CA 90802
Telephone: (562) 216-4444
Facsimile: (562) 216-4445
Attorneys for Plaintiffs

UNITED STATES DISTRICT COURT
SOUTHERN DISTRICT OF CALIFORNIA

VIRGINIA DUNCAN, RICHARD LEWIS, PATRICK LOVETTE, DAVID MARGUGLIO, CHRISTOPHER WADDELL, CALIFORNIA RIFLE & PISTOL ASSOCIATION, INCORPORATED, a California corporation, Plaintiffs, v. XAVIER BECERRA, in his official capacity as Attorney General of the State of California; and DOES 1-10, Defendants.

Case No: 17-cv-1017-BEN-JLB

EXHIBITS SS-LLL TO THE DECLARATION OF ANNA M. BARVIR IN SUPPORT OF PLAINTIFFS’ MOTION FOR PRELIMINARY INJUNCTION

EXHIBITS: TABLE OF CONTENTS

Exhibit Letter; Description; Page Numbers
E; Gun Digest 2013 (Jerry Lee ed., 67th ed. 2012); 000028-118
F; Website pages for Glock “Safe Action” Gen4 Pistols, Glock; M&P®9 M2.0™, Smith & Wesson; CZ 75 B, CZ-USA; Ruger® SR9®, Ruger; P320 Nitron Full-Size, Sig Sauer; 000119-157
G; Pages 73-97 from The Complete Book of Autopistols: 2013 Buyer’s Guide (2013); 000158-183
H; David B.

Alt-Stoicism? Actually, No…

4 (6) 2018. DOI: 10.26319/6921. Matthew Sharpe, Faculty of Arts and Education, Deakin University. Into the Heart of Darkness Or: Alt-Stoicism? Actually, No…

In several conversations over the last months, people have independently raised a troubling sign of the times. Since the mid-2010s, it seems, “Alt-Right” bloggers have begun to populate Facebook and other online venues of the “Modern Stoicism” movement, claiming that the ancient philosophy vindicates their misogynistic and nativist views, complete with sometimes-erroneous “quotations” from Marcus Aurelius. Donna Zuckerberg, in several articles1 and in her recent book, Not All Dead White Men: Classics and Misogyny in the Digital Age2, has done a courageous service to us all by examining and documenting this phenomenon. As she writes:

The Red Pill emphasizes Stoicism practicality in nearly every article about the philosophy… Illimitable Men, a blog that uses a more literary and philosophical approach than most manosphere sites, lists Marcus Aurelius’s Meditations second in the top ten “books for men,” describing it as “helpful as a spiritual guide to dealing with, and perceiving life.” Meditations also appears on a list of “Comprehensive Red Pill Books” on the Red Pill subreddit, where it is described as “a very simple pathway to practical philosophy.” In a review of Epictetus’s Enchiridion on Return of Kings, Valizadeh praises Stoicism and claims that “Stoicism will give you more practical tools on

1) Donna Zuckerberg, “How to Be a Good Classicist Under a Bad Emperor,” Eidolon, November 22, 2016, https://eidolon.pub/how-to-be-a-good-classicist-under-a-bad-emperor-6b848df6e54a; Donna Zuckerberg, “So I Wrote a Thing,” Eidolon, October 8, 2018, https://eidolon.pub/so-i-wrote-a-thing-6726d9449a2b.

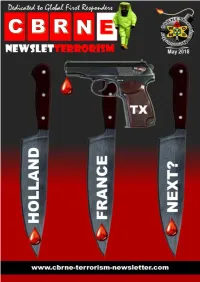

MAY 2018 Part C.Pdf

Page | 1 CBRNE-TERRORISM NEWSLETTER – May 2018 www.cbrne-terrorism-newsletter.com

Weaponizing Artificial Intelligence: The Scary Prospect Of AI-Enabled Terrorism
By Bernard Marr
Source: https://www.forbes.com/sites/bernardmarr/2018/04/23/weaponizing-artificial-intelligence-the-scary-prospect-of-ai-enabled-terrorism/#7c68133877b6

There has been much speculation about the power and dangers of artificial intelligence (AI), but it’s been primarily focused on what AI will do to our jobs in the very near future. Now, there’s discussion among tech leaders, governments and journalists about how artificial intelligence is making lethal autonomous weapons systems possible and what could transpire if this technology falls into the hands of a rogue state or terrorist organization. Debates on the moral and legal implications of autonomous weapons have begun and there are no easy answers.

Autonomous weapons already developed

The United Nations recently discussed the use of autonomous weapons and the possibility to institute an international ban on “killer robots.” This debate comes on the heels of more than 100 leaders from the artificial intelligence community, including Tesla’s Elon Musk and Alphabet’s Mustafa Suleyman, warning that these weapons could lead to a “third revolution in warfare.” Although artificial intelligence has enabled improvements and efficiencies in many sectors of our economy from entertainment to transportation to healthcare, when it comes to weaponized machines being able to function without intervention from humans, a lot of questions are raised. There are already a number of weapons systems with varying levels of human involvement that are actively being tested today.