Agent-Based Programming Interfaces for Children

Tina Quach

Total pages: 16

File Type:pdf, Size:1020Kb


Recommended publications

-

The Sun of Words

The Sun of Words Excerpts from Aber ich lebe nur von den Zwischenräumen, an interview between Herbert Gamper and Peter Handke Wednesday April 9th to Saturday April 12th, 1986 On the morning of April 9th, 1986, it was an unusually warm day with the föhn wind blowing, and I met Peter Handke in front of the house where he was living on the Mönchsberg. He first lead me up to the tower, from where one can see down to the southern parts of Salzburg, over the plains and towards the mountains (the Untersberg and the Staufen). I asked him about the Morzger forest, whose southern extremities were visible, and about the nearby area where Loser, the protagonist of Across, lived. He asked whether these settings interested me, and this was what determined the first question I asked after we had gone down to sit at the small table by the well, in the tree-shadows, and I had taken the final, inevitable step, so that the game could begin, and switched on the tape recorder. We regretted that the singing of the chaffinches and the titmice would not be transcribed to paper; again and again it seemed to me ridiculous to pose a question in the middle of this concert. I told of a visit with Thomas Bernhard, many years ago, when, without my asking, he showed me the offices of the lawyer Moro (from the story Ungenach) in Gmunden, as well as the fallen trees infested with bark beetles at the edge of his land that had been reimagined as the General’s forest from the play Die Jagdgesellschaft. -

Workspace Wellness Workspace Essentials Work Better. Feel Better

2017 Workspace Wellness Workspace Essentials Work Better. Feel Better. 100 Innovation in Motion™ YEARS Since 1917 Table of Contents Workspace Wellness Well-Being Solutions Guide 4 - 5 Sit-Stands 6 - 9 Monitor & Laptop Arms 10 - 12 Monitor & Laptop Supports 13 - 14 Keyboard Managers 15 - 21 Palm & Wrist Supports 22 - 25 Back Supports 26 - 27 Foot Supports 28 - 29 Document Holders 30 - 31 Machine Stands 32 Workspace Essentials Essentials Introduction 33 Privacy & Screen Protectors 34 - 35 Tablet Solutions 36 - 37 Home Office Solutions 38 - 41 Keyboards & Mice 42 - 44 Index Product Listings 45 - 49 Helping you work better by making your work easier. 2 Create a comfortable Improve well-being at and stylish home office. every workstation. Workspace Wellness Work better. Feel better. Workspace and wellness, connected. Fellowes Workspace Wellness products operate on a simple but powerful notion: We believe office equipment should do more than improve your performance. It should also improve your health. We take a holistic approach offering advanced ergonomics to mitigate the negative effects of everyday tasks along with groundbreaking solutions to promote the positive effects of body movement. It’s innovation with a new goal: moving from just preventing health issues to actually enhancing health; going beyond just working better to helping you feel better, too. 4 Head & Eye Discomfort Back Discomfort Cause: Improper Monitor or Laptop Position, Cause: Slouching, Knees Above Hips Incorrect Document Placement Solution: Back Supports, Foot Supports -

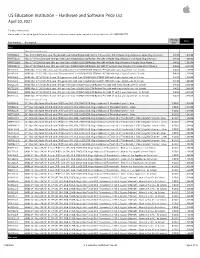

Apple US Education Price List

US Education Institution – Hardware and Software Price List April 30, 2021 For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775. Pricing Price Part Number Description Date iMac iMac with Intel processor MHK03LL/A iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 8/4/20 1,049.00 MXWT2LL/A iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,699.00 MXWU2LL/A iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 1,899.00 MXWV2LL/A iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 2,099.00 BR332LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools 8/4/20 1,168.00 BR342LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools 8/4/20 1,218.00 BR2P2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 3-year AppleCare+ for Schools 8/4/20 1,818.00 BR2S2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 4-year AppleCare+ for Schools 8/4/20 1,868.00 BR2Q2LL/A BNDL iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB -

Holiday Catalog

Brilliant for what’s next. With the power to achieve anything. AirPods Pro AppleCare+ Protection Plan†* $29 Key Features • Active Noise Cancellation for immersive sound • Transparency mode for hearing and connecting with the world around you • Three sizes of soft, tapered silicone tips for a customizable fit • Sweat and water resistant1 • Adaptive EQ automatically tunes music to the shape of your ear • Easy setup for all your Apple devices2 • Quick access to Siri by saying “Hey Siri”3 • The Wireless Charging Case delivers more than 24 hours of battery life4 AirPods Pro. Magic amplified. Noise nullified. Active Noise Cancellation for immersive sound. Transparency mode for hearing what’s happening around you. Sweat and water resistant.1 And a more customizable fit for all-day comfort. AirPods® AirPods AirPods Pro with Charging Case with Wireless Charging Case with Wireless Charging Case $159 $199 $249 1 AirPods Pro are sweat and water resistant for non-water sports and exercise and are IPX4 rated. Sweat and water resistance are not permanent conditions. The charging case is not sweat or water resistant. 2 Requires an iCloud account and macOS 10.14.4, iOS 12.2, iPadOS, watchOS 5.2, or tvOS 13.2 or later. 3Siri may not be available in all languages or in all areas, and features may vary by area. 4 Battery life varies by use and configuration. See apple.com/batteries for details. Our business is part of a select group of independent Apple® Resellers and Service Providers who have a strong commitment to Apple’s Mac® and iOS platforms and have met or exceeded Apple’s highest training and sales certifications. -

Sidecar Sidecar Lets You Expand Your Mac Workspace—And Your Creativity—With Ipad and Apple Pencil

Sidecar Sidecar lets you expand your Mac workspace—and your creativity—with iPad and Apple Pencil. October 2019 Contents Overview ...............................................................................................................3 Easy setup ............................................................................................................4 iPad as second display ......................................................................................... 5 iPad as tablet input device ....................................................................................6 Additional features ...............................................................................................8 Sidecar | October 2019 2 Overview Key Features Adding a second display has been a popular way for Mac users to extend their desktop and spread out their work. With Sidecar, Extended desktop Mac users can now do the same thing with their iPad. iPad makes Expand your Mac workspace using your iPad as a second display. Place one app a gorgeous second display that is perfect in the office or on on each screen, or put your main canvas the go. Plus Sidecar enables using Apple Pencil for tablet input on one display and your tools and on Mac apps for the very first time. Convenient sidebar and palettes on the other. Touch Bar controls let users create without taking their hands off iPad. And they can interact using familiar Multi-Touch gestures Apple Pencil to pinch, swipe, and zoom; as well as new iPadOS text editing Use Apple Pencil for tablet input with your favorite creative professional gestures like copy, cut, paste, and more. Sidecar works equally Mac apps. well over a wired or wireless connection, so users can create while sitting at their desk or relaxing on the sofa. Sidebar The handy sidebar puts essential modifier keys like Command, Control, Shift, and Option right at your fingertips. 
Touch Bar Touch Bar provides app-specific controls at the bottom of the iPad screen, even if your Mac does not have Touch Bar. -

Discografía De BLUE NOTE Records Colección Particular De Juan Claudio Cifuentes

CifuJazz Discografía de BLUE NOTE Records Colección particular de Juan Claudio Cifuentes Introducción Sin duda uno de los sellos verdaderamente históricos del jazz, Blue Note nació en 1939 de la mano de Alfred Lion y Max Margulis. El primero era un alemán que se había aficionado al jazz en su país y que, una vez establecido en Nueva York en el 37, no tardaría mucho en empezar a grabar a músicos de boogie woogie como Meade Lux Lewis y Albert Ammons. Su socio, Margulis, era un escritor de ideología comunista. Los primeros testimonios del sello van en la dirección del jazz tradicional, por entonces a las puertas de un inesperado revival en plena era del swing. Una sentida versión de Sidney Bechet del clásico Summertime fue el primer gran éxito de la nueva compañía. Blue Note solía organizar sus sesiones de grabación de madrugada, una vez terminados los bolos nocturnos de los músicos, y pronto se hizo popular por su respeto y buen trato a los artistas, que a menudo podían involucrarse en tareas de producción. Otro emigrante aleman, el fotógrafo Francis Wolff, llegaría para unirse al proyecto de su amigo Lion, creando un tandem particulamente memorable. Sus imágenes, unidas al personal diseño del artista gráfico Reid Miles, constituyeron la base de las extraordinarias portadas de Blue Note, verdadera seña de identidad estética de la compañía en las décadas siguientes mil veces imitada. Después de la Guerra, Blue Note iniciaría un giro en su producción musical hacia los nuevos sonidos del bebop. En el 47 uno de los jóvenes representantes del nuevo estilo, el pianista Thelonious Monk, grabó sus primeras sesiones Blue Note, que fue también la primera compañía del batería Art Blakey. -

NEW HAMPSHIRE Preliminary Discussions in Preparation for the Arrival of the Par Ticipants on Saturday

V Rolling Ridge Delegates To Convene Saturday By Charlotte Anderson Ten discussion leaders and the steering committee of the third annual Rolling Ridge Conference on Campus Affairs of UNH, are leaving tomorrow for North Andover, Mass., at 4:00 p.m. to hold NEW HAMPSHIRE preliminary discussions in preparation for the arrival of the par ticipants on Saturday. VOL. No. 42 Issue 6 Z413 Durham, N. H., October 23, 1952 PRICE 7 CENTS The theme of the conference will be “ Purpose and Participation on a Uni versity Campus,” with two subdivisions being “ Purpose and Participation in Academic Life,” and “Purpose and Par Mayor Stars Issues Statement I. C. Stars Wins By ticipation in Campus Life.” Questions Good Evening, Mr. and Mrs. America. Let’s go to . My five- under these two divisions will center star platform for the vear 1952 shall be: Close Margin; 3000 about leadership, student government, Star No. 1. student conduct, women’s regulations, discrimination extra-curricular social ac Be it heretofore, henceforth, and forwith known that as the new tivities, attendance rules, advisor system, Mayor of Durham, my reign over this far city shall be conceived Alumni Return Home cheating, faculty-student relations, fra in celluloid and thoroughly washed in chlorophyll. One of the most successful and well ternity and University relationship, van Star No. 2. attended Homecoming Weekends in dalism, and required courses. Be it also known that my first great production of the coming University history was highlighted by All conference delegates will gather in the close election of Mr. I. C. Stars, T-Hall parking lot at 1 :15 on Saturday, year will be centered in the College Woods and will star Boris of Phi Mu Delta, by a nine-vote mar and will register for the conference in Karloff and Margaret O’Brien in “Strange Love.” Co-featured with gin oyer his rival, Mr, Jones, of Sigma the T-Hall lobby. -

726708699321-Itunes-Booklet.Pdf

robert gibson flux and fire Twelve Poems (2004) 18 Night Music (2002) 3:53 James Stern, violin Eric Kutz, violoncello Audrey Andrist, piano 1 Aura 1:27 19 Flux and Fire (2006) 11:13 2 Wind Chime 1:10 Aeolus Quartet: 3 Cloudburst 0:57 Nicholas Tavani, Rachel Shapiro, violin 4 Reflection 2:21 Gregory Luce, viola 5 2:3 0:40 Alan Richardson, violoncello 6 Waves 1:04 7 Hommage 1:14 20 Night Music (2002) 3:52 8 Entropy 1:05 Katherine Murdock, viola 9 Barcarolle 1:24 10 Shoal 0:48 21 Offrande (1996) 9:11 11 Quatrain 1:23 Aeolus Quartet 12 Octave 1:41 22 Night Music (2002) 3:48 Soundings (2001) James Stern, violin Robert Oppelt, Richard Barber, Jeffrey Weisner, Ali Kian Yazdanfar, double bass Total: 60:54 13 Tenuous 2:23 14 Diaphanous 3:01 15 Nebulous 2:45 16 Luminous 3:03 17 Capricious 2:33 innova 993 © Robert Gibson. All Rights Reserved, 2018. innova® Recordings is the label of the American Composers Forum. www.innova.mu www.robertgibsonmusic.com Schoodic Peninsula Acadia National Park Offrande sketches (1996) Luxury, impulse! I draft a phrase and believe it protects me from this icy world, that goes through my body like a shoal of sardines. —Alain Bosquet “Regrets” (excerpt) from No Matter No Fact translation by Edouard Roditi My music has often been guided by imagery and emotion from poems that I have come to know and love. The boundaries between the arts are fluid, and the metaphor that for me most aptly illuminates this relationship—and the process of composition—is the act of translation. -

![Greek Color Theory and the Four Elements [Full Text, Not Including Figures] J.L](https://docslib.b-cdn.net/cover/6957/greek-color-theory-and-the-four-elements-full-text-not-including-figures-j-l-1306957.webp)

Greek Color Theory and the Four Elements [Full Text, Not Including Figures] J.L

University of Massachusetts Amherst ScholarWorks@UMass Amherst Greek Color Theory and the Four Elements Art July 2000 Greek Color Theory and the Four Elements [full text, not including figures] J.L. Benson University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/art_jbgc Benson, J.L., "Greek Color Theory and the Four Elements [full text, not including figures]" (2000). Greek Color Theory and the Four Elements. 1. Retrieved from https://scholarworks.umass.edu/art_jbgc/1 This Article is brought to you for free and open access by the Art at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Greek Color Theory and the Four Elements by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. Cover design by Jeff Belizaire ABOUT THIS BOOK Why does earlier Greek painting (Archaic/Classical) seem so clear and—deceptively— simple while the latest painting (Hellenistic/Graeco-Roman) is so much more complex but also familiar to us? Is there a single, coherent explanation that will cover this remarkable range? What can we recover from ancient documents and practices that can objectively be called “Greek color theory”? Present day historians of ancient art consistently conceive of color in terms of triads: red, yellow, blue or, less often, red, green, blue. This habitude derives ultimately from the color wheel invented by J.W. Goethe some two centuries ago. So familiar and useful is his system that it is only natural to judge the color orientation of the Greeks on its basis. To do so, however, assumes, consciously or not, that the color understanding of our age is the definitive paradigm for that subject. -

Sedletter: Volume 13 Number 2

S outhwest E ducational D evelopment SEDL L aboratory ETTERN ews VOLUME XIII N UMBER 2 OCTOBER 2001 Teachers— They matter I N S I D E most Are Our Teachers Good Enough?, PAGE 3 Are Alternative Certification Programs a Solution to the Teacher Shortage?, PAGE 8 Dumas Invests in Future with Permanent Sub Program, PAGE 13 Tough Enough to Teach, PAGE 17 Mentoring Program to Put Arkansas Teachers on Path to Success, PAGE 19 E-Mentoring for Math and Science Teachers Made Easy, PAGE 21 A Click Away: Online Resources for Recruitment, Retention, and Quality Issues, PAGE 22 A Changing For the past several years we’ve read predictions that the teacher workforce will change. During a recent “meet the teachers” night at my son’s high school, I realized this may be coming true. Only one of my son’s four teachers fit my conception of a traditional teacher, or at least my conception of a teacher who had followed a traditional career path. Teacher One teacher, in his early 50s, had spent 25 years running a successful software programming company. The second teacher had retired two years ago, but came out of retirement to teach three classes because the high school desperately needed a calculus teacher. The third had traveled Workforce the world and attended law school before deciding what he really wanted to do was teach. These teachers represent what may be a trend in the teaching profession, especially as schools and districts struggle to meet the estimated need of more than 2 million teachers over the next decade. -

Apple US Education Institution Price List

US Education Institution – Hardware and Software Price List March 18, 2020 For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775. Pricing Price Part Number Description Date iMac MMQA2LL/A iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/1TB hard drive/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 6/5/17 1,049.00 MRT32LL/A iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/1TB hard drive/Radeon Pro 555X w/Apple Magic Keyboard and Apple Magic Mouse 2 3/19/19 1,249.00 MRT42LL/A iMac 21.5" 4K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 560X w/Apple Magic Keyboard and Apple Magic Mouse 2 3/19/19 1,399.00 MRQY2LL/A iMac 27" 5K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 570X w/Apple Magic Keyboard and Apple Magic Mouse 2 3/19/19 1,699.00 MRR02LL/A iMac 27" 5K/3.1GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 575X w/Apple Magic Keyboard & Apple Magic Mouse 2 3/19/19 1,899.00 MRR12LL/A iMac 27" 5K/3.7GHz 6-core 8th-gen Intel Core i5/8GB/2TB Fusion drive/Radeon Pro 580X w/Apple Magic Keyboard & Apple Magic Mouse 2 3/19/19 2,099.00 BPPZ2LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/1TB hard drive/Intel IPG 640 with 3-year AppleCare+ for Schools 2/7/20 1,168.00 BPPY2LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/1TB hard drive/Intel IPG 640 with 4-year AppleCare+ for Schools 2/7/20 1,218.00 BPQ92LL/A BNDL iMac 21.5" -

MEXICO CITY BLUES Other Works by Jack Kerouac Published by Grove Press Dr

MEXICO CITY BLUES Other Works by Jack Kerouac Published by Grove Press Dr. Sax Lonesome Traveler Satori in Paris and Pic (one volume) The Subterraneans MEXICO CITY BLUES Jack Kerouac Copyright © 1959 by Jack Kerouac All rights reserved. No part of this book may be reproduced in any form or by any electronic or mechanical means, or the facilitation thereof, including information storage and retrieval systems, without permission in writing from the publisher, except by a reviewer, who may quote brief passages in a review. Any members of educational institutions wishing to photocopy part or all of the work for classroom use, or publishers who would like to obtain permission to include the work in an anthology, should send their inquiries to Grove/Atlantic, Inc., 841 Broadway, New York, NY 10003. Published simultaneously in Canada Printed in the United States of America Library of Congress Cataloging-in-Publication Data Kerouac, Jack, 1922-1969. Mexico City blues / Jack Kerouac. p. cm. eBook ISBN-13: 978-0-8021-9568-5 I. Title. PS3521.E735M4 1990 813’.54—dc20 90-2748 Grove Press an imprint of Grove/Atlantic, Inc. 841 Broadway New York, NY 10003 Distributed by Publishers Group West www.groveatlantic.com MEXICO CITY BLUES MEXICO CITY BLUES NOTE I want to be considered a jazz poet blowing a long blues in an afternoon jam session on Sunday. I take 242 choruses; my ideas vary and sometimes roll from chorus to chorus or from halfway through a chorus to halfway into the next. 1st Chorus Butte Magic of Ignorance Butte Magic Is the same as no-Butte All one light Old Rough Roads One High Iron Mainway Denver is the same “The guy I was with his uncle was the governor of Wyoming” “Course he paid me back” Ten Days Two Weeks Stock and Joint “Was an old crook anyway” The same voice on the same ship The Supreme Vehicle S.