Monte Carlo Sampling Methods for Approximating Interactive POMDPs

Recommended publications

Monte Carlo Method - Wikipedia

Wikipedia, 2. 11. 2019.

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes:[1] optimization, numerical integration, and generating draws from a probability distribution.

In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of risk in business and, in mathematics, evaluation of multidimensional definite integrals with complicated boundary conditions. In application to systems engineering problems (space, oil exploration, aircraft design, etc.), Monte Carlo–based predictions of failure, cost overruns and schedule overruns are routinely better than human intuition or alternative "soft" methods.[2]

In principle, Monte Carlo methods can be used to solve any problem having a probabilistic interpretation. By the law of large numbers, integrals described by the expected value of some random variable can be approximated by taking the empirical mean (a.k.a. the sample mean) of independent samples of the variable. When the probability distribution of the variable is parametrized, mathematicians often use a Markov chain Monte Carlo (MCMC) sampler.[3][4][5][6] The central idea is to design a judicious Markov chain model with a prescribed stationary probability distribution.
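The MCMC idea mentioned above, a Markov chain built to have a prescribed stationary distribution, can be illustrated with a minimal random-walk Metropolis sketch. The target (a standard normal), the uniform proposal, the step size, and the function name `metropolis_normal` are all assumptions chosen for illustration; they do not come from the excerpt.

```python
import math
import random


def metropolis_normal(n_samples, step=1.0, seed=0):
    """Random-walk Metropolis chain whose stationary distribution is N(0, 1).

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)

    def log_target(x):
        # Log density of N(0, 1) up to an additive constant.
        return -0.5 * x * x

    x = 0.0
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.uniform(-step, step)  # symmetric proposal
        log_ratio = log_target(proposal) - log_target(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
            x = proposal
        samples.append(x)  # a rejected move repeats the current state
    return samples


chain = metropolis_normal(50_000)
chain_mean = sum(chain) / len(chain)
chain_second_moment = sum(s * s for s in chain) / len(chain)
print(chain_mean, chain_second_moment)  # expected to be near 0 and 1
```

Successive states of the chain are correlated, so the sample mean converges more slowly than it would for independent draws; that is the usual price paid for not having to sample the target directly.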
-

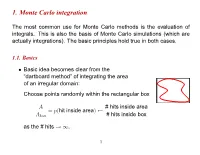

1. Monte Carlo Integration

The most common use for Monte Carlo methods is the evaluation of integrals. This is also the basis of Monte Carlo simulations (which are actually integrations). The basic principles hold true in both cases.

1.1. Basics

• The basic idea becomes clear from the "dartboard method" of integrating the area of an irregular domain: choose points randomly within the rectangular box. Then

$\frac{A}{A_{\text{box}}} = p(\text{hit inside area}) \leftarrow \frac{\#\ \text{hits inside area}}{\#\ \text{hits inside box}}$ as the # hits $\to \infty$.

• Buffon's (1707–1788) needle experiment to determine π:
  – Throw needles, length $l$, on a grid of lines distance $d$ apart.
  – Probability that a needle falls on a line: $P = \frac{2l}{\pi d}$
  – Aside: Lazzarini 1901: Buffon's experiment with 34080 throws: $\pi \approx \frac{355}{113} = 3.14159292$. Way too good a result! (See "π through the ages", http://www-groups.dcs.st-and.ac.uk/~history/HistTopics/Pi_through_the_ages.html)

1.2. Standard Monte Carlo integration

• Let us consider an integral in $d$ dimensions:

$I = \int_V d^d x \, f(x)$

Let $V$ be a $d$-dim hypercube, with $0 \le x_\mu \le 1$ (for simplicity).

• Monte Carlo integration:
  - Generate $N$ random vectors $x_i$ from a flat distribution ($0 \le (x_i)_\mu \le 1$).
  - As $N \to \infty$, $\frac{V}{N} \sum_{i=1}^{N} f(x_i) \to I$.
  - Error: $\propto 1/\sqrt{N}$, independent of $d$! (Central limit)

• "Normal" numerical integration methods (Numerical Recipes):
  - Divide each axis in $n$ evenly spaced intervals
  - Total # of points $N \equiv n^d$
  - Error: $\propto 1/n$ (Midpoint rule), $\propto 1/n^2$ (Trapezoidal rule), $\propto 1/n^4$ (Simpson)

• If $d$ is small, Monte Carlo integration has much larger errors than standard methods.
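Buffon's needle experiment described above can be simulated directly. The sketch below assumes a needle no longer than the line spacing, so that $P = 2l/(\pi d)$ applies; the function name `buffon_pi` and the parameter values are hypothetical. Note that the simulation itself uses `math.pi` to draw the needle angle, so it illustrates the probability formula and is not an independent way to compute π.

```python
import math
import random


def buffon_pi(n_throws, needle_len=1.0, line_gap=2.0, seed=0):
    """Estimate pi by inverting P(cross) = 2*l / (pi*d), valid for l <= d."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_throws):
        # Distance from the needle centre to the nearest line, in [0, d/2].
        centre = rng.uniform(0.0, line_gap / 2)
        # Acute angle between the needle and the lines, in [0, pi/2].
        angle = rng.uniform(0.0, math.pi / 2)
        # The needle crosses a line if its half-projection reaches the line.
        if centre <= (needle_len / 2) * math.sin(angle):
            hits += 1
    p_hat = hits / n_throws
    return 2 * needle_len / (p_hat * line_gap)


pi_estimate = buffon_pi(200_000)
print(pi_estimate)
```

With a few hundred thousand throws the estimate is typically good to about two decimal places, which gives a sense of why a six-decimal agreement from a few thousand physical throws looks suspicious.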

Lab 4: Monte Carlo Integration and Variance Reduction

Lecturer: Zhao Jianhua, Department of Statistics, Yunnan University of Finance and Economics

Task

The objective in this lab is to learn the methods for Monte Carlo Integration and Variance Reduction, including Monte Carlo Integration, Antithetic Variables, Control Variates, Importance Sampling, Stratified Sampling, and Stratified Importance Sampling.

1 Monte Carlo Integration

1.1 Simple Monte Carlo estimator

1.1.1 Example 5.1 (Simple Monte Carlo integration)

Compute a Monte Carlo (MC) estimate of

$\theta = \int_0^1 e^{-x} \, dx$

and compare the estimate with the exact value.

    m <- 10000
    x <- runif(m)                  # m uniform draws on (0, 1)
    theta.hat <- mean(exp(-x))     # sample mean of the integrand
    print(theta.hat)
    ## [1] 0.6324415
    print(1 - exp(-1))
    ## [1] 0.6321206

In the run shown, the estimate is $\hat{\theta} \doteq 0.6324$ and $\theta = 1 - e^{-1} \doteq 0.6321$.

1.1.2 Example 5.2 (Simple Monte Carlo integration, cont.)

Compute a MC estimate of $\theta = \int_2^4 e^{-x} \, dx$ and compare the estimate with the exact value of the integral.

    m <- 10000
    x <- runif(m, min = 2, max = 4)   # m uniform draws on (2, 4)
    theta.hat <- mean(exp(-x)) * 2    # multiply by the interval length 4 - 2
    print(theta.hat)
    ## [1] 0.1168929
    print(exp(-2) - exp(-4))
    ## [1] 0.1170196

In the run shown, the estimate is $\hat{\theta} \doteq 0.1169$ and $\theta = e^{-2} - e^{-4} \doteq 0.1170$.

1.1.3 Example 5.3 (Monte Carlo integration, unbounded interval)

Use the MC approach to estimate the standard normal cdf

$\Phi(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}} e^{-t^2/2} \, dt.$

Since the integration covers an unbounded interval, we break this problem into two cases: $x \ge 0$ and $x < 0$, and use the symmetry of the normal density to handle the second case.

Bayesian Computation: MCMC and All That

SCMA V Short-Course, Pennsylvania State University
Alan Heavens, Tom Loredo, and David A van Dyk
11 and 12 June 2011

11 June Morning Session 10.00 - 13.00
10.00 - 11.15 The Basics: Bayes Theorem, Priors, and Posteriors (Heavens)
11.15 - 11.30 Coffee Break
11.30 - 13.00 Low Dimensional Computing Part I (Loredo)

11 June Afternoon Session 14.15 - 17.30
14.15 - 15.15 Low Dimensional Computing Part II (Loredo)
15.15 - 16.00 MCMC Part I (van Dyk)
16.00 - 16.15 Coffee Break
16.15 - 17.00 MCMC Part II (van Dyk)
17.00 - 17.30 R Tutorial (van Dyk)

12 June Morning Session 10.00 - 13.15
10.00 - 10.45 Data Augmentation and PyBLoCXS Demo (van Dyk)
10.45 - 11.45 MCMC Lab (van Dyk)
11.45 - 12.00 Coffee Break
12.00 - 13.15 Output Analysis* (Heavens and Loredo)

12 June Afternoon Session 14.30 - 17.30
14.30 - 15.25 MCMC and Output Analysis Lab (Heavens)
15.25 - 16.05 Hamiltonian Monte Carlo (Heavens)
16.05 - 16.20 Coffee Break
16.20 - 17.00 Overview of Other Advanced Methods (Loredo)
17.00 - 17.30 Final Q&A (Heavens, Loredo, and van Dyk)

* Heavens (45 min) will cover basic convergence issues and methods, such as G&R's multiple chains. Loredo (30 min) will discuss Resampling

The Bayesics
Alan Heavens, University of Edinburgh, UK
Lectures given at SCMA V, Penn State, June 2011

Outline
• Types of problem
• Bayes' theorem
• Parameter Estimation
  – Marginalisation
  – Errors
• Error prediction and experimental design: Fisher Matrices
• Model Selection

LCDM fits the WMAP data well.

Monte Carlo and Markov Chain Monte Carlo Methods

History: Monte Carlo (MC) and Markov Chain Monte Carlo (MCMC) have been around for a long time. Some (very) early uses of MC ideas:

• Comte de Buffon (1777) dropped a needle of length $L$ onto a grid of parallel lines spaced $d > L$ apart to estimate P[needle intersects a line].
• Laplace (1786) used Buffon's needle to evaluate π.
• Gosset (Student, 1908) used random sampling to determine the distribution of the sample correlation coefficient.
• von Neumann, Fermi, Ulam, Metropolis (1940s) used games of chance (hence MC) to study models of atomic collisions at Los Alamos during WW II.

We are concerned with the use of MC and, in particular, MCMC methods to solve estimation and detection problems. As discussed in the introduction to MC methods (handout # 4), many estimation and detection problems require evaluation of integrals.

EE 527, Detection and Estimation Theory, # 4b

Monte Carlo Integration

MC integration is essentially numerical integration and thus may be thought of as estimation: we will discuss MC estimators that estimate integrals. Although MC integration is most useful when dealing with high-dimensional problems, the basic ideas are easiest to grasp by looking at 1-D problems first. Suppose we wish to evaluate

$G = \int_\Omega g(x) \, p(x) \, dx$

where $p(\cdot)$ is a density, i.e. $p(x) \ge 0$ for $x \in \Omega$ and $\int_\Omega p(x) \, dx = 1$. Any integral can be written in this form if we can transform its limits of integration to $\Omega = (0, 1)$ and then choose $p(\cdot)$ to be uniform(0, 1).
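The integral $G = \int_\Omega g(x)\,p(x)\,dx$ can be estimated by averaging $g$ over draws from $p$. The following is a minimal sketch for the case $\Omega = (0, 1)$ with $p$ uniform; the helper name `mc_expectation` and the test integrand $g(x) = x^2$ (exact value $1/3$) are assumptions made for illustration.

```python
import random


def mc_expectation(g, sampler, n, seed=0):
    """Estimate G = E_p[g(X)] from n draws of `sampler`, with a standard error."""
    rng = random.Random(seed)
    values = [g(sampler(rng)) for _ in range(n)]
    mean = sum(values) / n
    # Unbiased sample variance of g(X); its square root over sqrt(n)
    # approximates the standard error of the mean.
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, (var / n) ** 0.5


# Omega = (0, 1), p = uniform(0, 1), g(x) = x^2, so the exact value is 1/3.
g_estimate, g_std_err = mc_expectation(
    lambda x: x * x, lambda rng: rng.random(), 100_000
)
print(g_estimate, g_std_err)
```

Passing the sampler as a function keeps the estimator independent of the choice of $p$: any density that can be sampled from can be plugged in without changing the averaging code.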

Approximate and Integrate: Variance Reduction in Monte Carlo Integration Via Function Approximation

Yuji Nakatsukasa, June 15, 2018

Abstract

Classical algorithms in numerical analysis for numerical integration (quadrature/cubature) follow the principle of approximate and integrate: the integrand is approximated by a simple function (e.g. a polynomial), which is then integrated exactly. In high-dimensional integration, such methods quickly become infeasible due to the curse of dimensionality. A common alternative is the Monte Carlo method (MC), which simply takes the average of random samples, improving the estimate as more and more samples are taken. The main issue with MC is its slow (though dimension-independent) convergence, and various techniques have been proposed to reduce the variance. In this work we suggest a numerical analyst's interpretation of MC: it approximates the integrand with a constant function, and integrates that constant exactly. This observation leads naturally to MC-like methods where the approximant is a non-constant function, for example low-degree polynomials, sparse grids or low-rank functions. We show that these methods have the same $O(1/\sqrt{N})$ asymptotic convergence as in MC, but with reduced variance, equal to the quality of the underlying function approximation. We also discuss methods that improve the approximation quality as more samples are taken, and thus can converge faster than $O(1/\sqrt{N})$. The main message is that techniques in high-dimensional approximation theory can be combined with Monte Carlo integration to accelerate convergence.

arXiv:1806.05492v1 [math.NA] 14 Jun 2018

1 Introduction

This paper deals with the numerical evaluation (approximation) of the definite integral

$I := \int_\Omega f(x) \, dx \qquad (1.1)$

for $f : \Omega \to \mathbb{R}$.
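The "approximate and integrate" view in the abstract can be illustrated in one dimension. The sketch below fits a linear function to random samples by least squares and integrates the fit exactly, alongside the plain sample mean. It is written in the spirit of the abstract only and is not taken from the paper; the basis $\{1, x - 1/2\}$ on $[0, 1]$, the integrand $e^x$, and the function name `mc_least_squares` are assumptions.

```python
import math
import random


def mc_least_squares(f, n, seed=0):
    """Return (plain MC estimate, least-squares estimate) of the integral of f on [0, 1].

    The fit is f(x) ~ c0 + c1 * (x - 1/2). Because x - 1/2 integrates to
    zero over [0, 1], the integral of the fitted line is exactly c0.
    """
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    fs = [f(x) for x in xs]
    phis = [x - 0.5 for x in xs]

    # Sums that make up the 2x2 normal equations of the least-squares fit.
    s1 = sum(phis)
    s2 = sum(p * p for p in phis)
    t0 = sum(fs)
    t1 = sum(fv * p for fv, p in zip(fs, phis))

    det = n * s2 - s1 * s1
    c0 = (t0 * s2 - s1 * t1) / det  # Cramer's rule for the constant term
    plain = t0 / n                  # constant approximant = ordinary MC
    return plain, c0


exact_integral = math.e - 1.0
plain_estimate, ls_estimate = mc_least_squares(math.exp, 2_000)
print(abs(plain_estimate - exact_integral), abs(ls_estimate - exact_integral))
```

The plain estimate corresponds to fitting a constant only. Adding the linear term leaves a smaller residual for the sampling noise to act on, which is the variance reduction the abstract refers to.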

5. Monte Carlo Integration

One of the main applications of MC is integrating functions. At the simplest, this takes the form of integrating an ordinary 1- or multidimensional analytical function. But very often nowadays the function itself is a set of values returned by a simulation (e.g. MC or MD), and the actual function form need not be known at all. Most of the same principles of MC integration hold regardless of whether we are integrating an analytical function or a simulation.

Basics of Monte Carlo simulations, Kai Nordlund 2006

5.1. MC integration

[Gould and Tobochnik ch. 11, Numerical Recipes 7.6]

To get the idea behind MC integration, it is instructive to recall how ordinary numerical integration works. If we consider a 1-D case, the problem can be stated in the form that we want to find the area $A$ below an arbitrary curve in some interval $[a, b]$. In the simplest possible approach, this is achieved by a direct summation over $N$ points occurring at a regular interval $\Delta x$ in $x$:

$A = \sum_{i=1}^{N} f(x_i) \, \Delta x \qquad (1)$

where

$x_i = a + (i - 0.5) \, \Delta x \quad \text{and} \quad \Delta x = \frac{b - a}{N} \qquad (2)$

i.e.

$A = \frac{b - a}{N} \sum_{i=1}^{N} f(x_i)$

This takes the value of $f$ from the midpoint of each interval.

• Of course this can be made more accurate by using e.g. the trapezoidal or Simpson's method.
  – But for the present purpose of linking this to MC integration, we need not concern ourselves with that.
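Equations (1) and (2) translate almost line for line into code. This is a minimal sketch; the function name `midpoint_rule` and the test integrand $\sin x$ on $[0, \pi]$ (exact area 2) are assumptions chosen for illustration.

```python
import math


def midpoint_rule(f, a, b, n):
    """Sum f at the midpoints x_i = a + (i - 0.5) * dx, for i = 1..n, times dx."""
    dx = (b - a) / n
    return dx * sum(f(a + (i - 0.5) * dx) for i in range(1, n + 1))


area = midpoint_rule(math.sin, 0.0, math.pi, 100)
print(area)  # close to the exact area 2
```

Replacing the regularly spaced midpoints with uniformly random points in $[a, b]$, while keeping the same $(b - a)/N$ prefactor, turns this sum into the basic Monte Carlo estimator.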

Mean Field Simulation for Monte Carlo Integration MONOGRAPHS on STATISTICS and APPLIED PROBABILITY

MONOGRAPHS ON STATISTICS AND APPLIED PROBABILITY

General Editors: F. Bunea, V. Isham, N. Keiding, T. Louis, R. L. Smith, and H. Tong

1. Stochastic Population Models in Ecology and Epidemiology M.S. Bartlett (1960)
2. Queues D.R. Cox and W.L. Smith (1961)
3. Monte Carlo Methods J.M. Hammersley and D.C. Handscomb (1964)
4. The Statistical Analysis of Series of Events D.R. Cox and P.A.W. Lewis (1966)
5. Population Genetics W.J. Ewens (1969)
6. Probability, Statistics and Time M.S. Bartlett (1975)
7. Statistical Inference S.D. Silvey (1975)
8. The Analysis of Contingency Tables B.S. Everitt (1977)
9. Multivariate Analysis in Behavioural Research A.E. Maxwell (1977)
10. Stochastic Abundance Models S. Engen (1978)
11. Some Basic Theory for Statistical Inference E.J.G. Pitman (1979)
12. Point Processes D.R. Cox and V. Isham (1980)
13. Identification of Outliers D.M. Hawkins (1980)
14. Optimal Design S.D. Silvey (1980)
15. Finite Mixture Distributions B.S. Everitt and D.J. Hand (1981)
16. Classification A.D. Gordon (1981)
17. Distribution-Free Statistical Methods, 2nd edition J.S. Maritz (1995)
18. Residuals and Influence in Regression R.D. Cook and S. Weisberg (1982)
19. Applications of Queueing Theory, 2nd edition G.F. Newell (1982)
20. Risk Theory, 3rd edition R.E. Beard, T. Pentikäinen and E. Pesonen (1984)
21. Analysis of Survival Data D.R. Cox and D. Oakes (1984)
22. An Introduction to Latent Variable Models B.S. Everitt (1984)
23. Bandit Problems D.A. Berry and B.

Sampling and Monte Carlo Integration

Michael Gutmann
Probabilistic Modelling and Reasoning (INFR11134)
School of Informatics, University of Edinburgh
Spring semester 2018

Recap

Learning and inference often involve intractable integrals, e.g.

• Marginalisation

$p(x) = \int_y p(x, y) \, dy$

• Expectations

$E[g(x) \mid y_o] = \int g(x) \, p(x \mid y_o) \, dx$

for some function $g$.

• For unobserved variables, likelihood and gradient of the log lik

$L(\theta) = p(D; \theta) = \int_u p(u, D; \theta) \, du, \qquad \nabla_\theta \ell(\theta) = E_{p(u \mid D; \theta)} \left[ \nabla_\theta \log p(u, D; \theta) \right]$

Notation: $E_{p(x)}$ is sometimes used to indicate that the expectation is taken with respect to $p(x)$.

• For unnormalised models with intractable partition functions

$L(\theta) = \frac{\tilde{p}(D; \theta)}{\int_x \tilde{p}(x; \theta) \, dx}, \qquad \nabla_\theta \ell(\theta) \propto m(D; \theta) - E_{p(x; \theta)} \left[ m(x; \theta) \right]$

• Combined case of unnormalised models with intractable partition functions and unobserved variables.
• Evaluation of intractable integrals can sometimes be avoided by using other learning criteria (e.g. score matching).
• Here: methods to approximate integrals like those above using sampling.

Program

1. Monte Carlo integration
   Approximating expectations by averages
   Importance sampling
2. Sampling

Averages with iid samples

• Tutorial 7: For Gaussians, the sample average is an estimate (MLE) of the mean (expectation) $E[x]$:

$\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i \approx E[x]$

• Gaussianity not needed: assume $x_i$ are iid observations of $x \sim p(x)$.

Monte Carlo Integration

A Monte Carlo Integration

The techniques developed in this dissertation are all Monte Carlo methods. Monte Carlo methods are numerical techniques which rely on random sampling to approximate their results. Monte Carlo integration applies this process to the numerical estimation of integrals. In this appendix we review the fundamental concepts of Monte Carlo integration upon which our methods are based. From this discussion we will see why Monte Carlo methods are a particularly attractive choice for the multidimensional integration problems common in computer graphics. Good references for Monte Carlo integration in the context of computer graphics include Pharr and Humphreys [2004], Dutré et al. [2006], and Veach [1997].

The term "Monte Carlo methods" originated at the Los Alamos National Laboratory in the late 1940s during the development of the atomic bomb [Metropolis and Ulam, 1949]. Not surprisingly, the development of these methods also corresponds with the invention of the first electronic computers, which greatly accelerated the computation of repetitive numerical tasks. Metropolis [1987] provides a detailed account of the origins of the Monte Carlo method.

Las Vegas algorithms are another class of method which rely on randomization to compute their results. However, in contrast to Las Vegas algorithms, which always produce the exact or correct solution, the accuracy of Monte Carlo methods can only be analyzed from a statistical viewpoint. Because of this, we first review some basic principles from probability theory before formally describing Monte Carlo integration.

A.1 Probability Background

In order to define Monte Carlo integration, we start by reviewing some basic ideas from probability.

Monte Carlo Integration...In a Nutshell

Draft V1.3 © MIT 2014. From Math, Numerics, & Programming for Mechanical Engineers ... in a Nutshell by AT Patera and M Yano. All rights reserved.

1 Preamble

In engineering analysis, we must often evaluate integrals defined over a complex domain or in a high-dimensional space. For instance, we might wish to calculate the volume of a complex three-dimensional part for an aircraft. We might even wish to evaluate a performance metric for an aircraft expressed as an integral over a very high-dimensional design space. Unfortunately, the deterministic integration techniques considered in the nutshell Integration are unsuited for these tasks: either the domain of integration is too complex to discretize, or the function to integrate is too irregular, or the convergence is too slow due to the high dimension of the space (the curse of dimensionality). In this nutshell, we consider an alternative integration technique based on random variates and statistical estimation: Monte Carlo integration.

We introduce in this nutshell the Monte Carlo integration framework. As a first application we consider the calculation of the area of a complex shape in two dimensions: we provide a statistical estimator for the area, as well as associated a priori and a posteriori error bounds in the form of confidence intervals; we also consider extension to higher space dimensions. Finally, we consider two different Monte Carlo approaches to integration: the "hit or miss" approach, and the sample mean method; for simplicity, we consider univariate functions.

Prerequisites: probability theory; random variables; statistical estimation.

2 Motivation: Example

Let us say that we are given a (pseudo-)random variate generator for the standard uniform distribution.
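The area estimator with an a posteriori confidence interval mentioned in the preamble can be sketched as follows. The shape (a quarter disk inside the unit square, exact area $\pi/4$), the normal-approximation interval with $z = 1.96$, and the function name `hit_or_miss_area` are assumptions for illustration, not the nutshell's own formulation.

```python
import math
import random


def hit_or_miss_area(inside, n, box_area=1.0, z=1.96, seed=0):
    """Hit-or-miss area estimate over the unit square, with an approximate 95% interval.

    `inside(x, y)` returns True when the point lies in the shape.
    The interval uses the normal approximation to the binomial proportion.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if inside(rng.random(), rng.random()))
    p_hat = hits / n
    half_width = z * math.sqrt(p_hat * (1.0 - p_hat) / n)
    area = box_area * p_hat
    return area, (area - box_area * half_width, area + box_area * half_width)


# Quarter disk of radius 1 inside the unit square; exact area is pi / 4.
quarter_area, (ci_low, ci_high) = hit_or_miss_area(
    lambda x, y: x * x + y * y <= 1.0, 100_000
)
print(quarter_area, ci_low, ci_high)
```

The interval width shrinks like $1/\sqrt{n}$, so halving it takes roughly four times as many points.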

Monte Carlo Integration

Chapter 5

5.1 Introduction

The method of simulating stochastic variables in order to approximate entities such as

$I(f) = \int f(x) \, dx$

is called Monte Carlo integration or the Monte Carlo method. This is desirable in applied mathematics, where complicated integrals frequently arise and closed-form solutions are a rarity. In order to deal with the problem, numerical methods and approximations are employed. By simulation, and on the foundations of the law of large numbers, it is possible to find good estimates for $I(f)$. Note here that "good" means "close to the exact value" in some specific sense.

There are other methods to approximate an integral such as $I(f)$. One common way is the Riemann sum, where the integral is replaced by a sum over $n$ small intervals in one dimension. In order to calculate a $d$-dimensional integral, it is natural to try to extend the one-dimensional approach. When doing so, the number of times the function $f$ has to be calculated increases to $N = (n + 1)^d \approx n^d$, and the approximation error will be proportional to $n^{-2} \approx N^{-2/d}$. One key advantage of the Monte Carlo method for calculating integrals numerically is that it has an error that is proportional to $n^{-1/2}$ (with $n$ now the number of random samples), regardless of the dimension of the integral.

5.2 Monte Carlo Integration

Consider the $d$-dimensional integral

$I = \int f(x) \, dx = \int_{x_1=0}^{x_1=1} \cdots \int_{x_d=0}^{x_d=1} f(x_1, \ldots, x_d) \, dx_1 \ldots dx_d$

of a function $f$ over the unit hypercube $[0, 1]^d = [0, 1] \times \ldots \times [0, 1]$ in $\mathbb{R}^d$.
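A minimal sketch of the Monte Carlo estimator for an integral over the unit hypercube follows. The integrand $f(x) = \sum_k x_k^2$ (exact integral $d/3$), the dimension $d = 10$, and the function name `mc_hypercube` are assumptions chosen so the result can be checked against a known value.

```python
import random


def mc_hypercube(f, d, n, seed=0):
    """Average f over n points drawn uniformly from the unit hypercube [0, 1]^d."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        point = [rng.random() for _ in range(d)]
        total += f(point)
    # The hypercube has volume 1, so the sample mean is the integral estimate.
    return total / n


dim = 10
hypercube_estimate = mc_hypercube(lambda x: sum(v * v for v in x), dim, 100_000)
print(hypercube_estimate, dim / 3)
```

A grid with only 4 points per axis would already need $4^{10} \approx 10^6$ function evaluations in this dimension, whereas the sampling cost here is set by the desired accuracy and not by $d$.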