Monte Carlo and Markov Chain Monte Carlo Methods

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Markov Chain Monte Carlo 1 Importance Sampling

CS731 Spring 2011 Advanced Artificial Intelligence Markov Chain Monte Carlo Lecturer: Xiaojin Zhu [email protected] A fundamental problem in machine learning is to generate samples from a distribution: x ∼ p(x). (1) This problem has many important applications. For example, one can approximate the expectation of a function φ(x) Z µ ≡ Ep[φ(x)] = φ(x)p(x)dx (2) by the sample average over x1 ... xn ∼ p(x): n 1 X µˆ ≡ φ(x ) (3) n i i=1 This is known as the Monte Carlo method. A concrete application is in Bayesian predictive modeling where R p(x | θ)p(θ | Data)dθ. Clearly,µ ˆ is an unbiased estimator of µ. Furthermore, the variance ofµ ˆ decreases as V(φ)/n (4) R 2 where V(φ) = (φ(x) − µ) p(x)dx. Thus Monte Carlo methods depend on the sample size n, not on the dimensionality of x. 1 Often, p is only known up to a constant. That is, we assume p(x) = Z p˜(x) and we are able to evaluate p˜(x) at any point x. However, we cannot compute the normalization constant Z. For instance, in the 1 Bayesian modeling example, the posterior distribution is p(θ | Data) = Z p(θ)p(Data | θ). This makes sampling harder. All methods below work onp ˜. 1 Importance Sampling Unlike other methods discussed later, importance sampling is not a method to sample from p. Rather, it is a method to compute (3). Assume we know the unnormalizedp ˜. It turns out that we (or Matlab) only know how to directly sample from very few distributions such as uniform, Gaussian, etc.; p may not be one of them in general. -

Monte Carlo Method - Wikipedia

2. 11. 2019 Monte Carlo method - Wikipedia Monte Carlo method Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes:[1] optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of risk in business and, in mathematics, evaluation of multidimensional definite integrals with complicated boundary conditions. In application to systems engineering problems (space, oil exploration, aircraft design, etc.), Monte Carlo–based predictions of failure, cost overruns and schedule overruns are routinely better than human intuition or alternative "soft" methods.[2] In principle, Monte Carlo methods can be used to solve any problem having a probabilistic interpretation. By the law of large numbers, integrals described by the expected value of some random variable can be approximated by taking the empirical mean (a.k.a. the sample mean) of independent samples of the variable. When the probability distribution of the variable is parametrized, mathematicians often use a Markov chain Monte Carlo (MCMC) sampler.[3][4][5][6] The central idea is to design a judicious Markov chain model with a prescribed stationary probability distribution. -

1. Monte Carlo Integration

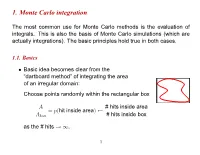

1. Monte Carlo integration The most common use for Monte Carlo methods is the evaluation of integrals. This is also the basis of Monte Carlo simulations (which are actually integrations). The basic principles hold true in both cases. 1.1. Basics Basic idea becomes clear from the • “dartboard method” of integrating the area of an irregular domain: Choose points randomly within the rectangular box A # hits inside area = p(hit inside area) Abox # hits inside box as the # hits . ! 1 1 Buffon’s (1707–1788) needle experiment to determine π: • – Throw needles, length l, on a grid of lines l distance d apart. – Probability that a needle falls on a line: d 2l P = πd – Aside: Lazzarini 1901: Buffon’s experiment with 34080 throws: 355 π = 3:14159292 ≈ 113 Way too good result! (See “π through the ages”, http://www-groups.dcs.st-and.ac.uk/ history/HistTopics/Pi through the ages.html) ∼ 2 1.2. Standard Monte Carlo integration Let us consider an integral in d dimensions: • I = ddxf(x) ZV Let V be a d-dim hypercube, with 0 x 1 (for simplicity). ≤ µ ≤ Monte Carlo integration: • - Generate N random vectors x from flat distribution (0 (x ) 1). i ≤ i µ ≤ - As N , ! 1 V N f(xi) I : N iX=1 ! - Error: 1=pN independent of d! (Central limit) / “Normal” numerical integration methods (Numerical Recipes): • - Divide each axis in n evenly spaced intervals 3 - Total # of points N nd ≡ - Error: 1=n (Midpoint rule) / 1=n2 (Trapezoidal rule) / 1=n4 (Simpson) / If d is small, Monte Carlo integration has much larger errors than • standard methods. -

Stat 451 Lecture Notes 0512 Simulating Random Variables

Stat 451 Lecture Notes 0512 Simulating Random Variables Ryan Martin UIC www.math.uic.edu/~rgmartin 1Based on Chapter 6 in Givens & Hoeting, Chapter 22 in Lange, and Chapter 2 in Robert & Casella 2Updated: March 7, 2016 1 / 47 Outline 1 Introduction 2 Direct sampling techniques 3 Fundamental theorem of simulation 4 Indirect sampling techniques 5 Sampling importance resampling 6 Summary 2 / 47 Motivation Simulation is a very powerful tool for statisticians. It allows us to investigate the performance of statistical methods before delving deep into difficult theoretical work. At a more practical level, integrals themselves are important for statisticians: p-values are nothing but integrals; Bayesians need to evaluate integrals to produce posterior probabilities, point estimates, and model selection criteria. Therefore, there is a need to understand simulation techniques and how they can be used for integral approximations. 3 / 47 Basic Monte Carlo Suppose we have a function '(x) and we'd like to compute Ef'(X )g = R '(x)f (x) dx, where f (x) is a density. There is no guarantee that the techniques we learn in calculus are sufficient to evaluate this integral analytically. Thankfully, the law of large numbers (LLN) is here to help: If X1; X2;::: are iid samples from f (x), then 1 Pn R n i=1 '(Xi ) ! '(x)f (x) dx with prob 1. Suggests that a generic approximation of the integral be obtained by sampling lots of Xi 's from f (x) and replacing integration with averaging. This is the heart of the Monte Carlo method. 4 / 47 What follows? Here we focus mostly on simulation techniques. -

Slice Sampling for General Completely Random Measures

Slice Sampling for General Completely Random Measures Peiyuan Zhu Alexandre Bouchard-Cotˆ e´ Trevor Campbell Department of Statistics University of British Columbia Vancouver, BC V6T 1Z4 Abstract such model can select each category zero or once, the latent categories are called features [1], whereas if each Completely random measures provide a princi- category can be selected with multiplicities, the latent pled approach to creating flexible unsupervised categories are called traits [2]. models, where the number of latent features is Consider for example the problem of modelling movie infinite and the number of features that influ- ratings for a set of users. As a first rough approximation, ence the data grows with the size of the data an analyst may entertain a clustering over the movies set. Due to the infinity the latent features, poste- and hope to automatically infer movie genres. Cluster- rior inference requires either marginalization— ing in this context is limited; users may like or dislike resulting in dependence structures that prevent movies based on many overlapping factors such as genre, efficient computation via parallelization and actor and score preferences. Feature models, in contrast, conjugacy—or finite truncation, which arbi- support inference of these overlapping movie attributes. trarily limits the flexibility of the model. In this paper we present a novel Markov chain As the amount of data increases, one may hope to cap- Monte Carlo algorithm for posterior inference ture increasingly sophisticated patterns. We therefore that adaptively sets the truncation level using want the model to increase its complexity accordingly. auxiliary slice variables, enabling efficient, par- In our movie example, this means uncovering more and allelized computation without sacrificing flexi- more diverse user preference patterns and movie attributes bility. -

Lab 4: Monte Carlo Integration and Variance Reduction

Lab 4: Monte Carlo Integration and Variance Reduction Lecturer: Zhao Jianhua Department of Statistics Yunnan University of Finance and Economics Task The objective in this lab is to learn the methods for Monte Carlo Integration and Variance Re- duction, including Monte Carlo Integration, Antithetic Variables, Control Variates, Importance Sampling, Stratified Sampling, Stratified Importance Sampling. 1 Monte Carlo Integration 1.1 Simple Monte Carlo estimator 1.1.1 Example 5.1 (Simple Monte Carlo integration) Compute a Monte Carlo(MC) estimate of Z 1 θ = e−xdx 0 and compare the estimate with the exact value. m <- 10000 x <- runif(m) theta.hat <- mean(exp(-x)) print(theta.hat) ## [1] 0.6324415 print(1 - exp(-1)) ## [1] 0.6321206 : − : The estimate is θ^ = 0:6355 and θ = 1 − e 1 = 0:6321. 1.1.2 Example 5.2 (Simple Monte Carlo integration, cont.) R 4 −x Compute a MC estimate of θ = 2 e dx: and compare the estimate with the exact value of the integral. m <- 10000 x <- runif(m, min = 2, max = 4) theta.hat <- mean(exp(-x)) * 2 print(theta.hat) 1 ## [1] 0.1168929 print(exp(-2) - exp(-4)) ## [1] 0.1170196 : − : The estimate is θ^ = 0:1172 and θ = 1 − e 1 = 0:1170. 1.1.3 Example 5.3 (Monte Carlo integration, unbounded interval) Use the MC approach to estimate the standard normal cdf Z 1 1 2 Φ(x) = p e−t /2dt: −∞ 2π Since the integration cover an unbounded interval, we break this problem into two cases: x ≥ 0 and x < 0, and use the symmetry of the normal density to handle the second case. -

A Minimax Near-Optimal Algorithm for Adaptive Rejection Sampling

A minimax near-optimal algorithm for adaptive rejection sampling Juliette Achdou [email protected] Numberly (1000mercis Group) Paris, France Joseph C. Lam [email protected] Otto-von-Guericke University Magdeburg, Germany Alexandra Carpentier [email protected] Otto-von-Guericke University Magdeburg, Germany Gilles Blanchard [email protected] Potsdam University Potsdam, Germany Abstract Rejection Sampling is a fundamental Monte-Carlo method. It is used to sample from distributions admitting a probability density function which can be evaluated exactly at any given point, albeit at a high computational cost. However, without proper tuning, this technique implies a high rejection rate. Several methods have been explored to cope with this problem, based on the principle of adaptively estimating the density by a simpler function, using the information of the previous samples. Most of them either rely on strong assumptions on the form of the density, or do not offer any theoretical performance guarantee. We give the first theoretical lower bound for the problem of adaptive rejection sampling and introduce a new algorithm which guarantees a near-optimal rejection rate in a minimax sense. Keywords: Adaptive rejection sampling, Minimax rates, Monte-Carlo, Active learning. 1. Introduction The breadth of applications requiring independent sampling from a probability distribution is sizable. Numerous classical statistical results, and in particular those involved in ma- arXiv:1810.09390v1 [stat.ML] 22 Oct 2018 chine learning, rely on the independence assumption. For some densities, direct sampling may not be tractable, and the evaluation of the density at a given point may be costly. Rejection sampling (RS) is a well-known Monte-Carlo method for sampling from a density d f on R when direct sampling is not tractable (see Von Neumann, 1951, Devroye, 1986). -

Bayesian Computation: MCMC and All That

Bayesian Computation: MCMC and All That SCMA V Short-Course, Pennsylvania State University Alan Heavens, Tom Loredo, and David A van Dyk 11 and 12 June 2011 11 June Morning Session 10.00 - 13.00 10.00 - 11.15 The Basics: Heavens Bayes Theorem, Priors, and Posteriors. 11.15 - 11.30 Coffee Break 11.30 - 13.00 Low Dimensional Computing Part I Loredo 11 June Afternoon Session 14.15 - 17.30 14.15 - 15.15 Low Dimensional Computing Part II Loredo 15.15 - 16.00 MCMC Part I van Dyk 16.00 - 16.15 Coffee Break 16.15 - 17.00 MCMC Part II van Dyk 17.00 - 17.30 R Tutorial van Dyk 12 June Morning Session 10.00 - 13.15 10.00 - 10.45 Data Augmentation and PyBLoCXS Demo van Dyk 10.45 - 11.45 MCMC Lab van Dyk 11.45 - 12.00 Coffee Break 12.00 - 13.15 Output Analysls* Heavens and Loredo 12 June Afternoon Session 14.30 - 17.30 14.30 - 15.25 MCMC and Output Analysis Lab Heavens 15.25 - 16.05 Hamiltonian Monte Carlo Heavens 16.05 - 16.20 Coffee Break 16.20 - 17.00 Overview of Other Advanced Methods Loredo 17.00 - 17.30 Final Q&A Heavens, Loredo, and van Dyk * Heavens (45 min) will cover basic convergence issues and methods, such as G&R's multiple chains. Loredo (30 min) will discuss Resampling The Bayesics Alan Heavens University of Edinburgh, UK Lectures given at SCMA V, Penn State June 2011 I V N E R U S E I T H Y T O H F G E R D I N B U Outline • Types of problem • Bayes’ theorem • Parameter EsJmaon – Marginalisaon – Errors • Error predicJon and experimental design: Fisher Matrices • Model SelecJon LCDM fits the WMAP data well. -

On the Generalized Ratio of Uniforms As a Combination of Transformed Rejection and Extended Inverse of Density Sampling

1 On the Generalized Ratio of Uniforms as a Combination of Transformed Rejection and Extended Inverse of Density Sampling Luca Martinoy, David Luengoz, Joaqu´ın M´ıguezy yDepartment of Signal Theory and Communications, Universidad Carlos III de Madrid. Avenida de la Universidad 30, 28911 Leganes,´ Madrid, Spain. zDepartment of Circuits and Systems Engineering, Universidad Politecnica´ de Madrid. Carretera de Valencia Km. 7, 28031 Madrid, Spain. E-mail: [email protected], [email protected], [email protected] Abstract In this work we investigate the relationship among three classical sampling techniques: the inverse of density (Khintchine’s theorem), the transformed rejection (TR) and the generalized ratio of uniforms (GRoU). Given a monotonic probability density function (PDF), we show that the transformed area obtained using the generalized ratio of uniforms method can be found equivalently by applying the transformed rejection sampling approach to the inverse function of the target density. Then we provide an extension of the classical inverse of density idea, showing that it is completely equivalent to the GRoU method for monotonic densities. Although we concentrate on monotonic probability density functions (PDFs), we also discuss how the results presented here can be extended to any non-monotonic PDF that can be decomposed into a collection of intervals where it is monotonically increasing or decreasing. In this general case, we show the connections with transformations of certain random variables and the generalized inverse PDF with the GRoU technique. Finally, we also introduce a GRoU technique to arXiv:1205.0482v7 [stat.CO] 16 Jul 2013 handle unbounded target densities. Index Terms Transformed rejection sampling; inverse of density method; Khintchine’s theorem; generalized ratio of uniforms technique; vertical density representation. -

Approximate and Integrate: Variance Reduction in Monte Carlo Integration Via Function Approximation

Approximate and integrate: Variance reduction in Monte Carlo integration via function approximation Yuji Nakatsukasa ∗ June 15, 2018 Abstract Classical algorithms in numerical analysis for numerical integration (quadrature/cubature) follow the principle of approximate and integrate: the integrand is approximated by a simple function (e.g. a polynomial), which is then integrated exactly. In high- dimensional integration, such methods quickly become infeasible due to the curse of dimensionality. A common alternative is the Monte Carlo method (MC), which simply takes the average of random samples, improving the estimate as more and more sam- ples are taken. The main issue with MC is its slow (though dimension-independent) convergence, and various techniques have been proposed to reduce the variance. In this work we suggest a numerical analyst's interpretation of MC: it approximates the inte- grand with a constant function, and integrates that constant exactly. This observation leads naturally to MC-like methods where the approximant is a non-constant function, for example low-degree polynomials,p sparse grids or low-rank functions. We show that these methods have the same O(1= N) asymptotic convergence as in MC, but with re- duced variance, equal to the quality of the underlying function approximation. We also discuss methods that improve the approximationp quality as more samples are taken, and thus can converge faster than O(1= N). The main message is that techniques in high-dimensional approximation theory can be combined with Monte Carlo integration to accelerate convergence. 1 Introduction arXiv:1806.05492v1 [math.NA] 14 Jun 2018 This paper deals with the numerical evaluation (approximation) of the definite integral Z I := f(x)dx; (1.1) Ω for f :Ω ! R. -

5. Monte Carlo Integration

5. Monte Carlo integration One of the main applications of MC is integrating functions. At the simplest, this takes the form of integrating an ordinary 1- or multidimensional analytical function. But very often nowadays the function itself is a set of values returned by a simulation (e.g. MC or MD), and the actual function form need not be known at all. Most of the same principles of MC integration hold regardless of whether we are integrating an analytical function or a simulation. Basics of Monte Carlo simulations, Kai Nordlund 2006 JJ J I II × 1 5.1. MC integration [Gould and Tobochnik ch. 11, Numerical Recipes 7.6] To get the idea behind MC integration, it is instructive to recall how ordinary numerical integration works. If we consider a 1-D case, the problem can be stated in the form that we want to find the area A below an arbitrary curve in some interval [a; b]. In the simplest possible approach, this is achieved by a direct summation over N points occurring Basics of Monte Carlo simulations, Kai Nordlund 2006 JJ J I II × 2 at a regular interval ∆x in x: N X A = f(xi)∆x (1) i=1 where b − a xi = a + (i − 0:5)∆x and ∆x = (2) N i.e. N b − a X A = f(xi) N i=1 This takes the value of f from the midpoint of each interval. • Of course this can be made more accurate by using e.g. the trapezoidal or Simpson's method. { But for the present purpose of linking this to MC integration, we need not concern ourselves with that. -

Mean Field Simulation for Monte Carlo Integration MONOGRAPHS on STATISTICS and APPLIED PROBABILITY

Mean Field Simulation for Monte Carlo Integration MONOGRAPHS ON STATISTICS AND APPLIED PROBABILITY General Editors F. Bunea, V. Isham, N. Keiding, T. Louis, R. L. Smith, and H. Tong 1. Stochastic Population Models in Ecology and Epidemiology M.S. Barlett (1960) 2. Queues D.R. Cox and W.L. Smith (1961) 3. Monte Carlo Methods J.M. Hammersley and D.C. Handscomb (1964) 4. The Statistical Analysis of Series of Events D.R. Cox and P.A.W. Lewis (1966) 5. Population Genetics W.J. Ewens (1969) 6. Probability, Statistics and Time M.S. Barlett (1975) 7. Statistical Inference S.D. Silvey (1975) 8. The Analysis of Contingency Tables B.S. Everitt (1977) 9. Multivariate Analysis in Behavioural Research A.E. Maxwell (1977) 10. Stochastic Abundance Models S. Engen (1978) 11. Some Basic Theory for Statistical Inference E.J.G. Pitman (1979) 12. Point Processes D.R. Cox and V. Isham (1980) 13. Identification of OutliersD.M. Hawkins (1980) 14. Optimal Design S.D. Silvey (1980) 15. Finite Mixture Distributions B.S. Everitt and D.J. Hand (1981) 16. ClassificationA.D. Gordon (1981) 17. Distribution-Free Statistical Methods, 2nd edition J.S. Maritz (1995) 18. Residuals and Influence in RegressionR.D. Cook and S. Weisberg (1982) 19. Applications of Queueing Theory, 2nd edition G.F. Newell (1982) 20. Risk Theory, 3rd edition R.E. Beard, T. Pentikäinen and E. Pesonen (1984) 21. Analysis of Survival Data D.R. Cox and D. Oakes (1984) 22. An Introduction to Latent Variable Models B.S. Everitt (1984) 23. Bandit Problems D.A. Berry and B.