A Thin MIPS Hypervisor for Embedded Systems

Recommended publications

High Performance Computing Systems: Status and Outlook

Acta Numerica (2012), pp. 001– © Cambridge University Press, 2012. doi:10.1017/S09624929. Printed in the United Kingdom. High Performance Computing Systems: Status and Outlook. J.J. Dongarra, University of Tennessee, Oak Ridge National Laboratory and University of Manchester; A.J. van der Steen, NCF/HPC Research, L.J. Costerstraat 5, 6827 AR Arnhem, The Netherlands.

Contents: 1 Introduction; 2 The main architectural classes; 3 Shared-memory SIMD machines; 4 Distributed-memory SIMD machines; 5 Shared-memory MIMD machines; 6 Distributed-memory MIMD machines; 7 ccNUMA machines; 8 Clusters; 9 Processors; 10 Computational accelerators; 11 Networks; 12 Recent Trends in High Performance Computing; 13 HPC Challenges; References.

1. Introduction. High-performance computer systems can be regarded as the most powerful and flexible research instruments today. They are employed to model phenomena in fields as varied as climatology, quantum chemistry, computational medicine, high-energy physics and many other areas. In this article we present some of the architectural properties and computer components that make up present HPC computers and also give an outlook on the systems to come. Even though the speed of computers has increased tremendously over the years (often doubling every 2 or 3 years), the need for ever faster computers is still there and will not disappear in the foreseeable future. Before going on to the descriptions of the machines themselves, it is useful to consider some mechanisms that are or have been used to increase performance.

Multiprocessing Contents

Contents
1 Multiprocessing: 1.1 Pre-history; 1.2 Key topics (1.2.1 Processor symmetry; 1.2.2 Instruction and data streams; 1.2.3 Processor coupling; 1.2.4 Multiprocessor Communication Architecture); 1.3 Flynn's taxonomy (1.3.1 SISD multiprocessing; 1.3.2 SIMD multiprocessing; 1.3.3 MISD multiprocessing; 1.3.4 MIMD multiprocessing); 1.4 See also; 1.5 References
2 Computer multitasking: 2.1 Multiprogramming; 2.2 Cooperative multitasking; 2.3 Preemptive multitasking; 2.4 Real time; 2.5 Multithreading; 2.6 Memory protection; 2.7 Memory swapping; 2.8 Programming; 2.9 See also; 2.10 References

TotalView Reference Guide

TotalView Reference Guide, version 8.8. Copyright © 2007–2010 by TotalView Technologies. All rights reserved. Copyright © 1998–2007 by Etnus LLC. All rights reserved. Copyright © 1996–1998 by Dolphin Interconnect Solutions, Inc. Copyright © 1993–1996 by BBN Systems and Technologies, a division of BBN Corporation. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise without the prior written permission of TotalView Technologies. Use, duplication, or disclosure by the Government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013. TotalView Technologies has prepared this manual for the exclusive use of its customers, personnel, and licensees. The information in this manual is subject to change without notice, and should not be construed as a commitment by TotalView Technologies. TotalView Technologies assumes no responsibility for any errors that appear in this document. TotalView and TotalView Technologies are registered trademarks of TotalView Technologies. TotalView uses a modified version of the Microline widget library. Under the terms of its license, you are entitled to use these modifications. The source code is available at: ftp://ftp.totalviewtech.com/support/toolworks/Microline_totalview.tar.Z. All other brand names are the trademarks of their respective holders. Book Overview: Part I, CLI Commands.

MIPS 16-Bit Instruction Set

Instruction set architecture: MIPS
Designer: MIPS Technologies, Imagination Technologies
Bits: 64-bit (32 → 64)
Introduced: 1985
Version: MIPS32/64 Release 6 (2014)
Design: RISC
Type: Register-Register
Encoding: Fixed
Branching: Compare and branch
Endianness: Bi
Page size: 4 KB
Extensions: MDMX, MIPS-3D
Open: Partly. The R12000 processor has been on the market for more than 20 years and therefore cannot be subject to patent claims; the R12000 and older processors are thus fully open.
Registers: 32 general-purpose, 32 floating-point

MIPS (Microprocessor without Interlocked Pipelined Stages) is a reduced instruction set computer (RISC) instruction set architecture (ISA) developed by MIPS Computer Systems, based in the United States. There are several versions of MIPS, including MIPS I, II, III, IV and V, as well as five releases of MIPS32/64 (for 32- and 64-bit implementations, respectively). The early MIPS architectures were 32-bit only; the 64-bit versions were developed later. As of April 2017, the current version of MIPS is MIPS32/64 Release 6. MIPS32/64 differ from MIPS I–V primarily in defining the privileged kernel-mode System Control Coprocessor in addition to the user-mode architecture. The MIPS architecture has several optional extensions: MIPS-3D, a simple set of floating-point SIMD instructions dedicated to common 3D tasks; MDMX (MaDMaX), a more extensive integer SIMD instruction set using the 64-bit floating-point registers; MIPS16e, which adds compression to the instruction stream so that programs take up less space; and MIPS MT, which adds multithreading capability. Computer architecture courses in universities and technical schools often study the MIPS architecture. The architecture greatly influenced later RISC architectures such as Alpha.

Computer Architectures an Overview

Computer Architectures: An Overview. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC.

Contents. Articles: Microarchitecture; x86; PowerPC; IBM POWER; MIPS architecture; SPARC; ARM architecture; DEC Alpha; AlphaStation; AlphaServer; Very long instruction word; Instruction-level parallelism; Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License.

Microarchitecture. In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented in a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture. The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, and address and data formats, among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be anything from single gates and registers to complete arithmetic logic units (ALUs) and even larger elements.

Overview of Recent Supercomputers

Overview of recent supercomputers. Aad J. van der Steen, HPC Research, NCF, L.J. Costerstraat 5, 6827 AR Arnhem, The Netherlands. www.hpcresearch.nl. HPC Research, December 2012.

Abstract. In this report we give an overview of high-performance computers which are currently available or will become available within a short time frame from vendors; no attempt is made to list all machines that are still in the development phase. The machines are described according to their macro-architectural class. The information about the machines is kept as compact as possible. No attempt is made to quote price information as this is often even more elusive than the performance of a system. In addition, some general information about high-performance computer architectures and the various processors and communication networks employed in these systems is given, so that the system descriptions in this report can be better appreciated. This document reflects the current technical state of the supercomputer arena as accurately as possible. However, the author does not take any responsibility for errors or mistakes in this document. We encourage anyone who has comments or remarks on the contents to inform us, so we can improve this report.

Contents: 1 Introduction and account; 2 Architecture of high-performance computers: 2.1 The main architectural classes; 2.2 Shared-memory SIMD machines; 2.3 Distributed-memory SIMD machines; 2.4 Shared-memory MIMD machines; 2.5 Distributed-memory MIMD machines; 2.6 ccNUMA machines; 2.7 Clusters; 2.8 Processors: 2.8.1 AMD Interlagos; 2.8.2 IBM POWER7; 2.8.3 IBM BlueGene processors

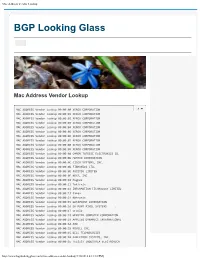

MAC Address Vendor Lookup

00:00:00 to 00:00:09: XEROX CORPORATION
00:00:0A: OMRON TATEISI ELECTRONICS CO.
00:00:0B: MATRIX CORPORATION
00:00:0C: CISCO SYSTEMS, INC.
00:00:0D: FIBRONICS LTD.
00:00:0E: FUJITSU LIMITED
00:00:0F: NEXT, INC.
00:00:10: Hughes
00:00:11: Tektrnix
00:00:12: INFORMATION TECHNOLOGY LIMITED
00:00:13: Camex
00:00:14: Netronix
00:00:15: DATAPOINT CORPORATION
00:00:16: DU PONT PIXEL SYSTEMS
00:00:17: Oracle
00:00:18: WEBSTER COMPUTER CORPORATION
00:00:19: APPLIED DYNAMICS INTERNATIONAL
00:00:1A: AMD
00:00:1B: NOVELL INC.

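Technically Superior But Unloved: A Multi-Faceted Perspective on Multi-core's Failure to Meet Expectations in Embedded Systems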

Technically Superior But Unloved: A Multi-Faceted Perspective on Multi-core's Failure to Meet Expectations in Embedded Systems, by Daniel Thomas Ledger. B.S., Electrical Engineering, Washington University in St. Louis, 1996; B.S., Computer Engineering, Washington University in St. Louis, 1997. Submitted to the System Design and Management Program in partial fulfillment of the requirements for the degree of Master of Science in Engineering and Management at the Massachusetts Institute of Technology, June 2011. © 2011 Daniel Thomas Ledger. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: Daniel Thomas Ledger, Fellow, System Design and Management Program, May 6th, 2011. Certified By: Senior Lecturer, Engineering Systems Division and the Sloan School of Management, Thesis Supervisor. Accepted By: Patrick Hale, Senior Lecturer, Engineering Systems Division; Director, System Design and Management Fellows Program.

Abstract. A growing number of embedded multi-core processors from several vendors now offer several technical advantages over single-core architectures. However, despite these advantages, adoption of multi-core processors in embedded systems has fallen short of expectations and has not increased significantly in the last 3-4 years.

Toward a Software Pipelining Framework for Many-Core Chips

TOWARD A SOFTWARE PIPELINING FRAMEWORK FOR MANY-CORE CHIPS by Juergen Ributzka. A thesis submitted to the Faculty of the University of Delaware in partial fulfillment of the requirements for the degree of Master of Science in Electrical and Computer Engineering, Spring 2009. © 2009 Juergen Ributzka. All Rights Reserved. Approved: Guang R. Gao, Ph.D., Professor in charge of thesis on behalf of the Advisory Committee. Approved: Gonzalo R. Arce, Ph.D., Chair of the Department of Electrical and Computer Engineering. Approved: Michael J. Chajes, Ph.D., Dean of the College of Engineering. Approved: Debra Hess Norris, M.S., Vice Provost for Graduate and Professional Education.

DEDICATION. In memory of my grandfather.

ACKNOWLEDGEMENTS. I would like to express my deepest gratitude to my advisor, Prof. Guang R. Gao, who guided and supported me in my research and my decisions. I was very fortunate to have full freedom in my research and I feel honored by the trust he put in me. Under his guidance I acquired a vast set of skills and knowledge. This knowledge was not limited to research only. I enjoyed the private conversations we had and the wisdom he shared with me. Without his help and preparation, this thesis would have been impossible. I am also very grateful to Dr. Fred Chow. He believed I could do this project and he offered me a unique opportunity, which made this thesis possible. Without his help and the support of his coworkers at PathScale, I would not have been able to finish this project successfully in such a short time.

Efficient Hardware for Low Latency Applications

EFFICIENT HARDWARE FOR LOW LATENCY APPLICATIONS. Inaugural dissertation for obtaining the academic degree of Doctor of Natural Sciences at the Universität Mannheim, submitted by Christian Harald Leber (Diplom-Informatiker, computer engineering) of Ludwigshafen. Mannheim, 2012. Dean: Professor Dr. Heinz Jürgen Müller, Universität Mannheim. Referee: Professor Dr. Ulrich Brüning, Universität Heidelberg. Co-referee: Professor Dr. Holger Fröning, Universität Heidelberg. Date of oral examination: 20 August 2012. For my parents.

Abstract. The design and development of application-specific hardware structures involves a high degree of complexity. Nowadays logic resources are often no longer the limit; instead, the development time and especially the time it takes for testing are significant factors that limit the possibility of implementing functionality in hardware. In the following, techniques are presented that help improve the ability to design complex hardware. The first part presents a generator (RFS) which allows defining control and status structures for hardware designs using an abstract high-level language. These structures are usually called register files. Special features are presented for the efficient implementation of counters and error reporting facilities. Native support for the partitioning of such register files is an important trait of RFS. A novel method to inform host systems very efficiently about changes in the register files is presented in the second part. It makes use of a microcode-programmable hardware unit. The microcode is auto-generated based on output from RFS. The general principle is to push changed information from the register file to the host systems instead of relying on the standard pull approach. In the third part, a highly efficient, fully pipelined address translation mechanism for remote memory access in HPC interconnection networks is presented.