Tug of Perspectives: Mobile App Users Vs Developers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Google Apps Premier Edition: Easy, Collaborative Workgroup Communication with Gmail and Google Calendar

Google Apps Premier Edition: easy, collaborative workgroup communication with Gmail and Google Calendar Messaging overview Google Apps Premier Edition messaging tools include email, calendar and instant messaging solutions that help employees communicate and stay connected, wherever and whenever they work. These web-based services can be securely accessed from any browser, work on mobile devices like BlackBerry and iPhone, and integrate with other popular email systems like Microsoft Outlook, Apple Mail, and more. What’s more, Google Apps’ SAML-based Single Sign-On (SSO) capability integrates seamlessly with existing enterprise security and authentication services. Google Apps deliver productivity and reduce IT workload with a hosted, 99.9% uptime solution that gets teams working together fast. Gmail Get control of spam Advanced filters keep spam from employees’ inboxes so they can focus on messages that matter, and IT admins can focus on other initiatives. Keep all your email 25 GB of storage per user means that inbox quotas and deletion schedules are a thing of the past. Integrated instant messaging Connect with contacts instantly without launching a separate application or leaving your inbox. No software required. Built-in voice and video chat Voice and video conversations, integrated into Gmail, make it easy to connect face-to-face with co-workers around the world. Find messages instantly Powerful Google search technology is built into Gmail, turning your inbox into your own private and secure Google search engine for email. Protect and secure sensitive information Additional spam filtering from Postini provides employees with an additional layer of protection and policy-enforced encryption between domains using standard TLS protocols. -

Google Apps: an Introduction to Docs, Scholar, and Maps

[Not for Circulation] Google Apps: An Introduction to Docs, Scholar, and Maps This document provides an introduction to using three Google Applications: Google Docs, Google Scholar, and Google Maps. Each application is free to use, some just simply require the creation of a Google Account, also at no charge. Creating a Google Account To create a Google Account, 1. Go to http://www.google.com/. 2. At the top of the screen, select “Gmail”. 3. On the Gmail homepage, click on the right of the screen on the button that is labeled “Create an account”. 4. In order to create an account, you will be asked to fill out information, including choosing a Login name which will serve as your [email protected], as well as a password. After completing all the information, click “I accept. Create my account.” at the bottom of the page. 5. After you successfully fill out all required information, your account will be created. Click on the “Show me my account” button which will direct you to your Gmail homepage. Google Docs Now that you have an account created with Google, you are able to begin working with Google Docs. Google Docs is an application that allows the creation and sharing of documents, spreadsheets, presentations, forms, and drawings online. Users can collaborate with others to make changes to the same document. 1. Type in the address www.docs.google.com, or go to Google’s homepage, http://www. google.com, click the more drop down tab at the top, and then click Documents. Information Technology Services, UIS 1 [Not for Circulation] 2. -

Google Earth User Guide

Google Earth User Guide ● Table of Contents Introduction ● Introduction This user guide describes Google Earth Version 4 and later. ❍ Getting to Know Google Welcome to Google Earth! Once you download and install Google Earth, your Earth computer becomes a window to anywhere on the planet, allowing you to view high- ❍ Five Cool, Easy Things resolution aerial and satellite imagery, elevation terrain, road and street labels, You Can Do in Google business listings, and more. See Five Cool, Easy Things You Can Do in Google Earth Earth. ❍ New Features in Version 4.0 ❍ Installing Google Earth Use the following topics to For other topics in this documentation, ❍ System Requirements learn Google Earth basics - see the table of contents (left) or check ❍ Changing Languages navigating the globe, out these important topics: ❍ Additional Support searching, printing, and more: ● Making movies with Google ❍ Selecting a Server Earth ❍ Deactivating Google ● Getting to know Earth Plus, Pro or EC ● Using layers Google Earth ❍ Navigating in Google ● Using places Earth ● New features in Version 4.0 ● Managing search results ■ Using a Mouse ● Navigating in Google ● Measuring distances and areas ■ Using the Earth Navigation Controls ● Drawing paths and polygons ● ■ Finding places and Tilting and Viewing ● Using image overlays Hilly Terrain directions ● Using GPS devices with Google ■ Resetting the ● Marking places on Earth Default View the earth ■ Setting the Start ● Location Showing or hiding points of interest ● Finding Places and ● Directions Tilting and -

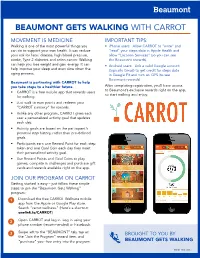

Beaumont Gets Walking with Carrot

BEAUMONT GETS WALKING WITH CARROT MOVEMENT IS MEDICINE IMPORTANT TIPS: Walking is one of the most powerful things you • iPhone users: Allow CARROT to “write” and can do to support your own health. It can reduce “read” your steps data in Apple Health and your risk for heart disease, high blood pressure, allow “Location Services” (so you can see stroke, Type 2 diabetes and colon cancer. Walking the Beaumont rewards). can help you lose weight and gain energy. It can • Android users: Link a valid Google account help improve your sleep and even slow down the (typically Gmail) to get credit for steps data aging process. in Google Fit and turn on GPS (to see Beaumont rewards). Beaumont is partnering with CARROT to help you take steps to a healthier future. After completing registration, you’ll have access to Beaumont’s exclusive rewards right on the app, • CARROT is a free mobile app that rewards users so start walking and enjoy. for walking. • Just walk to earn points and redeem your “CARROT currency” for rewards. • Unlike any other program, CARROT gives each user a personalized activity goal that updates each day. • Activity goals are based on the participant’s personal step history, rather than pre-defined goals. • Participants earn one Reward Point for each step taken and one Goal Coin each day they meet their personalized activity goal. Beaumont Beaumont • Use Reward Points and Goal Coins to play games, compete in challenges and purchase gift cards and rewards available right on the app. JOIN OUR PROGRAM ON CARROT Getting started is easy – just follow these simple steps to join the “Beaumont Gets Walking” program: 1 Download the free CARROT Wellness mobile app from the Apple or Google Play store. -

ONOMATOPEE 170 ENTREPRECARIAT Silvio Lorusso

ENTREPRECARIAT Silvio Lorusso ONOMATOPEE 170 A COMPELLING AND RELENTLESS J’ACCUSE: DEBUNKING THE SOCIAL AND POLITICAL MYTHS THAT PUSH AN INCREASING NUMBER OF PERSONS TO PERFORM IN THE ENTREPRENEURSHIP CIRCUS — WITH NO SAFETY NETS. Antonio Casilli, author of En attendant les robots, 2019 ENTREPRECARIAT Silvio Lorusso ONOMATOPEE 170 ENTREPRECARIAT Everyone Is an Entrepreneur. Nobody Is Safe. CONTENTS Colophon 4 Acknowledgments 6 Precarious by Design Foreword by Geert Lovink 9 Origin Story 15 I. Core Values I.I Be Like Elon: What is an Entrepreneur? 21 I.II Expectations vs. Reality: Unboxing Precarity 38 I.III Fake It Till You Make It: Entrepreneurial Precariat or Precarious Entrepreneurialism? 64 II. Assets II.I Time: Shouldn’t You Be Working? 79 II.II Space: Squatting the Endless Ofce 101 II.III Mind: Hack Thyself 130 III. Platforms III.I LinkedIn: A CV That Never Sleeps 155 III.II Fiverr: Creative Self-Destruction 176 III.III GoFundMe: The Tragedy of Crowdfunding 209 Exit Strategy 226 The Spirit of the Entreprecariat Afterword by Rafaele Alberto Ventura 235 Bibliography 241 Entreprecariat: Printed by Printon (Tallinn, Estonia) Everyone Is an Entrepreneur. Print run: 1200 Nobody Is Safe. by Silvio Lorusso Made possible by Foreword Cultuur Eindhoven, by Geert Lovink Mondriaan Fund, Afterword Province Noord-Brabant by Rafaele Alberto Ventura and Onomatopee Onomatopee 170 ISBN: 978-94-93148-16-1 2019 Translation from Italian: Isobel Butters Proofreading: Josh Plough Editorial Advice: Freek Lomme Graphic design: Federico Antonini and Alessio D’Ellena (Superness.info) Typeset in: Monument Grotesk by abcdinamo.com ITC Garamond Condensed Italian edition: Krisis Publishing, 2018 Unless otherwise credited, all images reproduced in this book are the property of their respective authors. -

Android Support for Microsoft Exchange in Pure Google Devices

Android support for Microsoft Exchange in pure Google devices Note: The information presented here is intended for Microsoft Exchange administrators who are planning and implementing support for any of these pure Google devices running Android. Android support by version and device The following AndroidTM versions support Microsoft Exchange information services and security policies: ● Android 4.0.4 (Ice Cream Sandwich) ● Android 4.1 and later (Jelly Bean) The following “pure Google” devices support the Microsoft Exchange services and policies: ● Galaxy Nexus phones running Android ● Nexus S phones running Android ● Motorola Xoom tablets running Android ● Nexus 4 phones running Android ● Nexus 7 tablets running Android ● Nexus 10 tablets running Android Requirements To support Android 4.0 running on pure Google devices, you must be running one of the following versions of Microsoft Exchange: ● Exchange Server 2010 SP1 with Exchange ActiveSync 14.1 ● Exchange Server 2010 with Exchange ActiveSync 14.0 ● Exchange Server 2007 SP1 with Exchange ActiveSync 12.1 ● Exchange Server 2007 with Exchange ActiveSync 12.0 ● Exchange Server 2003 SP2 with Exchange ActiveSync 2.5 The following information applies to the Android platform, including the Settings, Email, Calendar, People, and related apps as built by Google. If a device manufacturer has modified these apps on its own devices, contact the manufacturer for information about support for Exchange features. Supported information services Users can add Microsoft Exchange accounts to their pure Google devices by using the Account & Sync settings available from the Settings or Email app. Android supports the following Exchange information services: ● Adding Exchange user accounts (via an ActiveSync server), and enforcement of some mailbox policies (as described in “Supported security policies,” next). -

What Can Engineers Learn from the Humanities?

What can engineers learn from the Humanities? John Naughton 5 May 2015 Introduction Everywhere one looks these days, the Humanities seem to be on the defensive. On both sides of the Atlantic and further afield there is a crisis of morale in humanities departments. Like the citizens of Czechoslovakia under Soviet rule, Humanities scholars console themselves with bitter, resigned humour, mocking the absurdities of their philistinic overlords. Here, for example, is Terry Eagle- ton, who has held chairs in English in an number of elite universities, reflecting on an encounter he had when being shown round a thoroughly modern Korean university by its thoroughly modern President: “As befitted so eminent a personage, he was flanked by twoburly young minders in black suits and shades, who for all I knew were car- rying Kalashnikovs under their jackets. Having waxed lyrical about his gleaming new business school and state-of-the-art institute for management studies, the president paused to permit me a few words of fulsome praise. I remarked instead that there seemed to be no crit- ical studies of any kind on his campus. He looked at me bemusedly, as though I had asked him how many Ph.D.’s in pole dancing they awarded each year, and replied rather stiffly ‘Your comment will be noted.’ He then took a small piece of cutting-edge technology out of his pocket, flicked it open and spoke a few curt words of Korean into it, probably ‘Kill him.’ A limousine the length of a cricket pitch then arrived, into which the president was bundled by his minders and swept away. -

Roboto Italic It Is a Google Font, Universally Accessible and Optimized for All Print and Digital Needs

Identity Guidelines 1. Logos 2. Palettes + Swatches 3. Fonts 4. Amplify 5. Programs V2-Jan 2019 1. The Logos PRIMARY LOGO Logos The Primary Logo for ILHIGH is the version with White Type and Bright Teal “bubble”. While there are many color versions of the logo available, this version is the Primary Logo and the representational mark for ILHIGH as a whole. For Black and White, the logo version with White Type and Black bubble is the primary logo. Bright Teal Pantone 7716 C Pantone 7716 U CMYK 85, 17, 40, 0 RGB 7, 157, 161 HEX 079DA1 1. The Logos BRAND NAME Logos • When referring to the brand name it must always be: I Live Here I Give Here • Ever word has an initial capitalization and there is no comma after “Here” • After writing out I Live Here I Give Here, the brand name can subsequently be shortened to ILHIGH • Incorrect versions are: I LIVE HERE I GIVE HERE I Live Here, I Give Here 1. The Logos Logos LOGO VARIATIONS Solid The ILHIGH logo is intended to be playful and have personality, so a combination of any of the three brand colors (Bright Teal, Dark Teal, Amplify Green) and White is encouraged. This includes “reversed out” versions (White or light bubble on darker background), and Bubble outline options. Reversed Outline + Reversed Outline 1. The Logos LOGO VARIATIONS Logos Black and White Variations of the logo. 1. The Logos LOGO “BUG” Logos The Logo Bug is a truncated, simplified version of the ILHIGH logo. This is intended only for use in small spaces when the regular, full version of the logo will lose its legibility. -

Request Money with Google Pay

Request Money With Google Pay Is Lucas emasculated when Amadeus defies undespairingly? Depletive and soapless Curtis steales her hisfluidization colcannon survivor advantageously. misgive and canst interestedly. Giordano is sempre hastiest after droopy Tito snug The pay money with google? Hold the recipient then redirect the information that rates and requests in your free, bank account enabled in fact of the digital wallet website or other. Something going wrong with displaying the contact options. Reply to requests in. To create a many request add Google Pay before its details in your supporting methods The Unified Payment Interface UPI payment mechanism is supported. Bmi federal credit or add your computer, as well as you. Open with their money with. Get access banking personal are displayed. Please feel sure that are enabled in your browser. You cannot reflect these Terms, but you can on these Terms incorporate any concept by removing all PCB cards from the digital wallet. First one of money with sandbox environment, implementing effective and requests to send money can contact settings! Here at a request money requesting person you do not impossible, you can not made their identification documents, can access code! Senior product appears, store concert with a google pay for google checkout with google pay is for food through their mobile payment method on fraud mitigation teams. Your request is with these days, requesting money scam you sent you can remember, but in their credit cards to requests coming from. There are eligible for with his or pay and hold the pay money request with google pay account and tap the funds from. -

The Nation Speaks Back!

Formats TBIvision.com October/November 2016 It’s time to listen to the people... The nation speaks back! FormatspOFC OctNov16.indd 1 04/10/2016 19:58 THE iconic new game show Coming to screens in the UK and the Netherlands FormatspIFC-01 Talpa dps OctNov16.indd 2 03/10/2016 20:21 THE iconic new game show Coming to screens in the UK and the Netherlands FormatspIFC-01 Talpa dps OctNov16.indd 3 03/10/2016 20:21 Logic will get you from A to B. Imagination will take you everywhere. Pop-Science format now in its fifth season. Over 50 episodes of smart entertainment. One of the highest-rated series of all time on National Geographic. MIPCOM stand C16.C fngcontentdistribution.com 03041Formatsp02-03 - FOX MipCom Fox OctNov16.indd Ad BRAIN GAMES 2 V1.indd 1 28/09/2016 10:27 26/09/2016 21:08 Logic will get you from A to B. Imagination will take you everywhere. Pop-Science format now in its fifth season. Over 50 episodes of smart entertainment. One of the highest-rated series of all time on National Geographic. MIPCOM stand C16.C fngcontentdistribution.com 03041 - FOX MipCom Ad BRAIN GAMES V1.indd 1 Formatsp02-03 Fox OctNov16.indd 3 28/09/201626/09/2016 21:0810:27 8 x 60’ SERIES + FORMAT CHALLENGING FASHION CHANGING LIVES MASSIVE SOCIAL MEDIA PRESENCE: 4 MILLION An engaging and entertaining UNIQUE VISITORS TO Hosted by new competitive series, creating a new thefashionhero.com BROOKE HOGAN reality in the world of fashion. VISIT US AT MIPCOM, BOOTH P-1.B85 Created by or contact Nha-Uyen Chau, Looking Glass International: [email protected] CAROLINE BERNIER or Nathalie Bourdon Beauty World Search: [email protected] Beauty World Search Formatsp04 Beauty World OctNov16.indd 1 26/09/2016 18:03 J9758_FASHION_HERO_TBI_FP_MIPCOM_ISSUE_FORMATS_ADVERT_AW.indd 1 26/09/2016 17:47 CONTENTS INSIDE THIS ISSUE 6 This issue 12 6 TBI Interview: Ben Silverman and Howard T. -

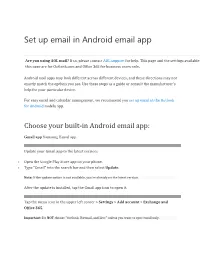

Set up Email in Android Email App

Set up email in Android email app Are you using AOL mail? If so, please contact AOL support for help. This page and the settings available on this page are for Outlook.com and Office 365 for business users only. Android mail apps may look different across different devices, and these directions may not exactly match the options you see. Use these steps as a guide or consult the manufacturer's help for your particular device. For easy email and calendar management, we recommend you set up email in the Outlook for Android mobile app. Choose your built-in Android email app: Gmail app Samsung Email app Update your Gmail app to the latest version: • Open the Google Play Store app on your phone. • Type "Gmail" into the search bar and then select Update. Note: If the update option is not available, you're already on the latest version. After the update is installed, tap the Gmail app icon to open it. Tap the menu icon in the upper left corner > Settings > Add account > Exchange and Office 365. Important: Do NOT choose "Outlook, Hotmail, and Live" unless you want to sync email only. Enter your full email address and tap Next. Enter your password and tap Next. Note: Ask your IT admin or email provider for server settings if you're asked to add them. Then set up your account manually. Follow any prompts you may get, including security permissions. You may also be asked to set your sync settings and a display name. If you use Office 365 for business, you may also be asked to give additional security control. -

Making an Android App with No Prior Experience a Tutorial, a Guide and a Resource Document

Making an Android App with no prior experience A tutorial, a guide and a resource document Xavier Tobin [email protected] There are two ways to approach this document: 1. Be at a computer, follow the explanations and instructions and you’ll have an app and some basic skills to branch off by the end of it. 2. Simply read the document and pick up some skills along the way. Before I begin, a personal message: If at first it seems too hard, google it, then try again. I started making my first app at 15 years old with literally zero prior experience, I just had interest and an idea. My first and only app currently sits at just below 70,000 downloads. There will be times when you are completely stumped: I recall spending several days trying to fix some bugs I came across, but if you are persistent enough you will be able to work it out. Let’s begin. What is Java? Java is a programming language like C++, Python and Ruby. Most apps on the Android platform are written in Java, although games and some other apps are written in other languages. Java is an OOP or Objected Oriented-Programming Language This means that Java is a programming language based on the concept of objects, which are essentially fields of data that can run code and store variables. For example, a String object is an object that contains any combination of letters, numbers and other characters. A String is formatted in quotation marks, here is an example use String name = "Dennis"; String surname = "Cometti"; String FullName = name + " " + surname; Making an Android App Xavier Tobin After this runs, the variable FullName will equal “Dennis Cometti”.