Designing the Best Human-Like Bot for Unreal Tournament 2004

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Playvs Announces $30.5M Series B Led by Elysian Park

PLAYVS ANNOUNCES $30.5M SERIES B LED BY ELYSIAN PARK VENTURES, INVESTMENT ARM OF THE LA DODGERS, ADDITIONAL GAME TITLES AND STATE EXPANSIONS FOR INAUGURAL SEASON FEBRUARY 2019 High school esports market leader introduces Rocket League and SMITE to game lineup adds associations within Alabama, Mississippi, and Texas to sanctioned states for Season One and closes a historic round of funding from Diddy, Adidas, Samsung and others EMBARGOED FOR NOVEMBER 20TH AT 10AM EST / 7AM PST LOS ANGELES, CA - November 20th - PlayVS – the startup building the infrastructure and official platform for high school esports - today announced its Series B funding of $30.5 million led by Elysian Park Ventures, the private investment arm of the Los Angeles Dodgers ownership group, with five existing investors doubling down, New Enterprise Associates, Science Inc., Crosscut Ventures, Coatue Management and WndrCo, and new groups Adidas (marking the company’s first esports investment), Samsung NEXT, Plexo Capital, along with angels Sean “Diddy” Combs, David Drummond (early employee at Google and now SVP Corp Dev at Alphabet), Rahul Mehta (Partner at DST Global), Rich Dennis (Founder of Shea Moisture), Michael Dubin (Founder and CEO of Dollar Shave Club), Nat Turner (Founder and CEO of Flatiron Health) and Johnny Hou (Founder and CEO of NZXT). This milestone round comes just five months after PlayVS’ historic $15M Series A funding. “We strive to be at the forefront of innovation in sports, and have been carefully -

Epic Games and EA Announce 'Bulletstorm Epic Edition' With

Epic Games and EA Announce ‘Bulletstorm Epic Edition' With Exclusive Early Access to Gears of War 3 Beta REDWOOD CITY, Calif.--(BUSINESS WIRE)-- People Can Fly, Epic Games, Electronic Arts Inc. (NASDAQ:ERTS) and Microsoft Game Studios today announced the "Epic Edition" of Bulletstorm™, the highly anticipated new action shooter from the makers of the award-winning Unreal Tournament and Gears of War series of games. In this unique promotion, Epic Games and EA are blowing out the launch of Bulletstorm with access to the public beta for Gears of War 3, the spectacular conclusion to one of the most memorable and celebrated sagas in video games. Players that purchase the Epic Edition are guaranteed early access to the Gears of War 3 beta*. Pre-order now to reserve a copy of the Epic Edition which will be available on Feb. 22, 2011 for MSRP $59.99, only for the Xbox 360® video game and entertainment system, while supplies last. "Epic is poised to break new ground in 2011 with Gears of War 3 and Bulletstorm," said Dr. Michael Capps, president of Epic Games. "With these two highly anticipated triple-A experiences comes a unique opportunity to do something to really excite players, and that's what we intend to accomplish with the support of Microsoft Game Studios and EA. This is for the shooter fans." In addition to access to the beta, the Epic Edition gives players bonus in-game Bulletstorm content when playing online, including 25,000 experience points, visual upgrades for their iconic leash, deadly Peace Maker Carbine, boots and armor. -

Principles of Game Design

Principles of Game Design
• To improve the chances for success of a game, there are several principles of good game design to be followed.
– Some of this is common sense.
– Some of this is uncommon sense … learn from other people’s experiences and mistakes!
• Remember:
– Players do not know what they want, but they know it when they see it!
Principles of Game Design
• To start with, we will take a look at some of the fundamental principles of game design.
• Later, we will come back and take a look at how we can properly design a game to make sure these principles are being properly met.
Player Empathy
• A good designer always has an idea of what is going on in a player’s head.
– Know what they expect and do not expect.
– Anticipate their reactions to different game situations.
• Anticipating what a player wants to do next in a video game situation is important.
– Let the player try it, and ensure the game responds intelligently. This makes for a better experience.
– If necessary, guide the player to a better course of action.
Player Empathy
Screen shot from Prince of Persia: The Sands of Time. This well designed game clearly demonstrates player empathy. The prince will miss this early jump over a pit, but will not be punished and instead can try again the correct way.
Player Empathy
Screen shot from Batman Vengeance. The people making Batman games do not empathize with fans of this classic comic. Otherwise, Batman games would not be so disappointing on average.

Introduction

Introduction ○ Make games. ○ Develop strong mutual relationships. ○ Go to conferences with reasons. ○ Why build 1.0, when building 1.x is easier? Why we use Unreal Engine? ○ Easier to stay focused. ○ Avoid the trap of development hell. ○ Building years of experience. ○ A lot of other developers use it and need our help! Build mutual relationships ○ Epic offered early access to Unreal Engine 2. ○ Epic gave me money. ○ Epic sent me all around the world. ○ Meeting Jay Wilbur. Go to conferences ○ What are your extrinsic reasons? ○ What are your intrinsic reasons? ○ PAX Prime 2013. Building 1.x ○ Get experience by working on your own. ○ Know your limitations. ○ What are your end goals? Conclusion ○ Know what you want and do it fast. ○ Build and maintain key relationships. ○ Attend conferences. ○ Build 1.x. Introduction Hello, my name is James Tan. I am the co-founder of a game development studio that is called Digital Confectioners. Before I became a game developer, I was a registered pharmacist with a passion for game development. Roughly five years ago, I embarked on a journey to follow that passion and to reach the dream of becoming a professional game developer. I made four key decisions early on that I still follow to this day. One, I wanted to make games. Two, I need to develop strong mutual relationships. Three, I need to have strong reasons to be at conferences and never for the sake of it. Four, I should always remember that building 1 point x is going to be faster and more cost effective than trying to build 1 point 0. -

Unreal Tournament for Immersive Interactive Theater

Jeffrey Jacobson and Zimmy Hwang UNREAL TOURNAMENT FOR IMMERSIVE INTERACTIVE THEATER CaveUT is a set of modifications to Unreal Tournament that allows it to display in panoramic (wide field of view) theaters. The result is a useful tool for educational applications and virtual reality (VR) research. Our modifications are open source and freely available to the public (see www2.sis.pitt.edu/~jacobson/ut/). CaveUT provides multiple views (left, right, up, and down) from one point in the virtual environment. It is also capable of off-axis projection, which supports correct display when used in the very small one-person theaters researchers refer to as caves. An important branch of engineering for VR applications centers on building an enclosure where imagery is projected onto the walls. An early example of this is a planetarium, which creates the illusion of a night sky, or an IMAX theater that displays a specially made movie on the inside of a half-sphere-shaped screen. Members of the audience experience some degree of immersion, the feeling of being there in the world depicted by the movie, because the screen covers such a wide angle of view. While film is capable of providing the most detailed imagery, producing the visual effects by computer has some distinct advantages. With the right software, scenes could be generated rapidly and with no special equipment. Most importantly, computer-generated imagery could respond to input from a performer or the audience itself. At this point it is more like an interactive game, a boon for entertainment and educational applications, as well as certain areas of research. An example of a fully digital display is the Earth Theater at the Carnegie Museum of Pittsburgh (see Figure 1).

ROUTE GENERATION ALGORITHM in UT2004 Gregg T

ROUTE GENERATION FOR A SYNTHETIC CHARACTER (BOT) USING A PARTIAL OR INCOMPLETE KNOWLEDGE ROUTE GENERATION ALGORITHM IN UT2004 Gregg T. Hanold, Technical Manager Oracle National Security Group gregg. [email protected] David T. Hanold, Animator DavidHanoldCG [email protected] Abstract. This paper presents a new Route Generation Algorithm that accurately and realistically represents human route planning and navigation for Military Operations in Urban Terrain (MOUT). The accuracy of this algorithm in representing human behavior is measured using the Unreal Tournament™ 2004 (UT2004) Game Engine to provide the simulation environment in which the differences between the routes taken by the human player and those of a Synthetic Agent (BOT) executing the A-star algorithm and the new Route Generation Algorithm can be compared. The new Route Generation Algorithm computes the BOT route based on partial or incomplete knowledge received from the UT2004 game engine during game play. To allow BOT navigation to occur continuously throughout the game play with incomplete knowledge of the terrain, a spatial network model of the UT2004 MOUT terrain is captured and stored in an Oracle 11g Spatial Data Object (SDO). The SDO allows a partial data query to be executed to generate continuous route updates based on the terrain knowledge, and stored dynamic BOT, Player and environmental parameters returned by the query. The partial data query permits the dynamic adjustment of the planned routes by the Route Generation Algorithm based on the current state of the environment during a simulation. The dynamic nature of this algorithm more accurately allows the BOT to mimic the routes taken by the human executing under the same conditions thereby improving the realism of the BOT in a MOUT simulation environment.

Paths to Being Epic: Finding Your Role in the Industry

Paths to being Epic: Finding your role in the Industry Andy Bayle - Apr 18, 2012 Who is this guy? • Production Team – Localization Coordinator – Production Assistant – Test Expert • Shipped Title Experience – UDK – Shadow Complex – Unreal Tournament 3 – Bulletstorm – Gears of War 3 • Raam’s Shadow • Fenix Rising • Forces of Nature I love Video Games! What next? Administration Business/Marketing • Operations • Sales • HR • Advertising • Public Relations Animation • Rigging Design • MoCap • Game Designer Audio Production • Music • Project planning/Scheduling • SFX Programming Talent • Platform/Engine • VO Designer • Level Designer – Visual Environments – England • High School hobby • Long Break in toying with this stuff – University • Studied Illustration • Concept Art Designer • Level Designer – Visual Environments – Unreal Tournament 3 Launches in 2007 • Posts every stage of his level development • Call from Mark Rein – Entered the Make Something Unreal Contest • Won! 3 Stages – These were collaborative efforts with a small team • No prior “real” experience – Internship • After 10 months, hired Artist • Modeling and Texture Art – India, High School • 3D not available in schools – Had a gap in interest • Worked saving money for College – College in California • Realized that school wasn't everything that was needed – Find additional information on his own • 3 yrs, No breaks - No summer holidays - No Christmases • Slept 2 hours per night - seriously – Because he loves Art Artist • Modeling and Texture Art – Polycount.com • Just putting up -

Table of Contents Installation

Table of Contents: Installation; Controls; Login; Create Profile; Single Player (Campaign, Instant Action); Multiplayer (Quick Match, Join Game, Host Game); Community & Settings (Community, Settings); Deathmatch HUD; Team Deathmatch HUD; Duel Deathmatch HUD; Capture the Flag HUD; Vehicle Capture the Flag HUD; Warfare; Warfare HUD; Unreal Characters; Weapons; Vehicles; Pickups; Powerups; Deployables; MODS/Unreal Editor 3; End User License Agreement; Credits; Notes; Warranty.
Installation
To install Unreal Tournament 3™ to your computer, follow these steps:
1. Insert your “Unreal Tournament 3™” DVD in your DVD-ROM drive.
2. The install program will automatically begin. If not, browse to your DVD-ROM drive and double-click on SetupUT3.exe.
3. Select the language you would like to install and select OK.
4. Read the End User License Agreement and if you agree, select YES.
5. Select Browse to change the directory to install the game to, otherwise select Next.
6. Unreal Tournament 3 will now begin installation.
7. Once the installation has completed, select Finish.
8. The first time you run Unreal Tournament 3 following installation, the game will prompt you to enter your Product Key. The Product Key can be found in the game’s packaging.
Controls (table columns: Action, Key Set 1, Key Set 2) Move Forward -

Exploiting Game Engines for Fun & Profit

Paris, May 2013 Exploiting Game Engines For Fun & Profit Luigi Auriemma & Donato Ferrante Who ? Donato Ferrante Luigi Auriemma @dntbug ReVuln Ltd. @luigi_auriemma Re-VVho ? - Vulnerability Research - Consulting - Penetration Testing REVULN.com 3 Agenda • Introduction • Game Engines • Attacking Game Engines – Fragmented Packets – Compression Theory about how to – Game Protocols find vulnerabilities in game engines – MODs – Master Servers • Real World Real world examples • Conclusion ReVuln Ltd. 4 Introduction • Thousands of potential attack vectors (games) • Millions of potential targets (players) Very attractive for attackers ReVuln Ltd. 5 But wait… Gamers ReVuln Ltd. 6 But wait… did you know… • Unreal Engine => Licensed to FBI and US Air Force – Epic Games Powers US Air Force Training With Unreal Engine 3 Web Player From Virtual Heroes. – In March 2012, the FBI licensed Epic's Unreal Development Kit to use in a simulator for training. ReVuln Ltd. 7 But wait… did you know… • Real Virtuality => It’s used in military training simulators – VBS1 – VBS2 ReVuln Ltd. 8 But wait… did you know… • Virtual3D => Mining, Excavation, Industrial, Engineering and other GIS & CAD-based Visualizations with Real-time GPS-based Animation and Physical Simulation on a Virtual Earth => SCADA ReVuln Ltd. 9 But wait… did you know… Different people but they have something in common.. They are potential attack vectors • When they go back home, they play games • When they play games, they become targets • And most importantly, their Companies become targets ReVuln Ltd. 10 Game Engines Game Engines [ What ] • A Game Engine is the Kernel for a Game GAME API Sounds Graphics A GAMENetwork ENGINE Maps Animations Content Development Audio Models ReVuln Ltd. -

Unreal Tournament Goty

TABLE OF CONTENTS: System Requirements; Installation; Game Objective; Quick Start.
END-USER LICENSE AGREEMENT PLEASE READ CAREFULLY. BY USING THIS SOFTWARE, YOU ARE AGREEING TO BE BOUND BY THE TERMS OF THIS END-USER LICENSE AGREEMENT ("LICENSE"). IF YOU DO NOT AGREE TO THESE TERMS, DO NOT USE THE SOFTWARE AND PROMPTLY RETURN THE DISC OR CARTRIDGE IN ITS ORIGINAL PACKAGING TO THE PLACE OF PURCHASE.
1. Grant of License. The software accompanying this license and related documentation (the "Software") is licensed to you, not sold, by Infogrames North America, Inc. ("INA"), and its use is subject to this license. INA grants to you a limited, personal, non-exclusive right to use the Software in the manner described in the user documentation. If the Software is configured for loading onto a hard drive, you may load the Software only onto the hard drive of a single machine and run the Software from only that hard drive. You may permanently transfer all rights INA grants to you in this license, provided you retain no copies, you transfer all of the Software (including all component parts, the media and printed materials, and any upgrades), and the recipient reads and accepts this license. INA reserves all rights not expressly granted to you by this Agreement.
2. Restrictions. INA or its suppliers own the title, copyright, and other intellectual property rights in the Software. -

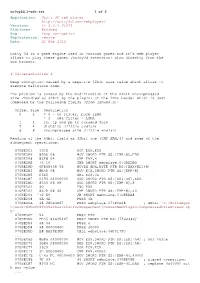

Application: Unity 3D Web Player

unity3d_1-adv.txt
Application: Unity 3D web player http://unity3d.com/webplayer/
Versions: <= 3.2.0.61061
Platforms: Windows
Bug: heap corruption
Exploitation: remote
Date: 21 Feb 2012
Unity 3d is a game engine used in various games, and its web player allows these games (unity3d extension) to be played directly from the web browser.
# Vulnerabilities #
Heap corruption caused by a negative 32bit size value, which allows execution of malicious code. The problem is caused by modifying the 64bit uncompressed size (handled as 32bit by the plugin) in the lzma header, which is composed of just the following fields (from lzma86.h):
  Offset Size Description
    0     1   = 0 - no filter, pure LZMA
              = 1 - x86 filter + LZMA
    1     1   lc, lp and pb in encoded form
    2     4   dictSize (little endian)
    6     8   uncompressed size (little endian)
Reading of the 64bit field as a 32bit one (CMP EAX,4) and some of the subsequent operations:
  070BEDA3  33C0            XOR EAX,EAX
  070BEDA5  895D 08         MOV DWORD PTR SS:[EBP+8],EBX
  070BEDA8  83F8 04         CMP EAX,4
  070BEDAB  73 10           JNB SHORT webplaye.070BEDBD
  070BEDAD  0FB65438 05     MOVZX EDX,BYTE PTR DS:[EAX+EDI+5]
  070BEDB2  8B4D 08         MOV ECX,DWORD PTR SS:[EBP+8]
  070BEDB5  D3E2            SHL EDX,CL
  070BEDB7  0196 A4000000   ADD DWORD PTR DS:[ESI+A4],EDX
  070BEDBD  8345 08 08      ADD DWORD PTR SS:[EBP+8],8
  070BEDC1  40              INC EAX
  070BEDC2  837D 08 40      CMP DWORD PTR SS:[EBP+8],40
  070BEDC6 ^72 E0           JB SHORT webplaye.070BEDA8
  070BEDC8  6A 4A           PUSH 4A
  070BEDCA  68 280A4B07     PUSH webplaye.074B0A28 ; ASCII "C:/BuildAgent/work/b0bcff80449a48aa/PlatformDependent/CommonWebPlugin/CompressedFileStream.cpp"
  070BEDCF  53              PUSH EBX
  070BEDD0  FF35 84635407   PUSH DWORD PTR DS:[7546384]
  070BEDD6  6A 04           PUSH 4
  070BEDD8  68 00000400     PUSH 40000
  070BEDDD  E8 BA29E4FF     CALL webplaye.06F0179C
  ..
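The header layout quoted in the advisory can be illustrated with a small parser. This is only a sketch under stated assumptions: the function name `parse_lzma86_header` and the sample header bytes are hypothetical, and the code does not reproduce the plugin's actual logic. It only shows how a 64-bit size field whose low four bytes are read as a signed 32-bit value can come out negative.

```python
import struct


def parse_lzma86_header(header: bytes) -> dict:
    """Parse the 14-byte header layout listed in the advisory (hypothetical helper)."""
    if len(header) < 14:
        raise ValueError("header must be at least 14 bytes")

    filter_flag = header[0]   # offset 0: 0 = pure LZMA, 1 = x86 filter + LZMA
    props = header[1]         # offset 1: lc, lp and pb in encoded form

    # Standard LZMA property encoding: props = (pb * 5 + lp) * 9 + lc
    lc = props % 9
    lp = (props // 9) % 5
    pb = (props // 9) // 5

    (dict_size,) = struct.unpack_from("<I", header, 2)   # offset 2: 4 bytes, little endian
    (size_64,) = struct.unpack_from("<Q", header, 6)     # offset 6: full 8-byte size

    # Assumption for illustration: only the low 4 bytes are used, as a signed value.
    (size_32_signed,) = struct.unpack_from("<i", header, 6)

    return {
        "filter": filter_flag,
        "lc": lc,
        "lp": lp,
        "pb": pb,
        "dict_size": dict_size,
        "uncompressed_size_64": size_64,
        "uncompressed_size_32_signed": size_32_signed,
    }


# Made-up sample header: x86 filter, props 0x5D, 1 MiB dictionary, size 0xFFFFFFF0.
sample = bytes([1, 0x5D]) + struct.pack("<I", 1 << 20) + struct.pack("<Q", 0xFFFFFFF0)
info = parse_lzma86_header(sample)
print(info["uncompressed_size_64"], info["uncompressed_size_32_signed"])
```

With the sample above, the 64-bit field is a large positive number while the truncated signed view is negative, which is the kind of mismatch the advisory attributes the heap corruption to.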

GOG-API Documentation Release 0.1

GOG-API Documentation Release 0.1 Gabriel Huber Jun 05, 2018
Contents: 1 Contents (1.1 Authentication; 1.2 Account Management; 1.3 Listing; 1.4 Store; 1.5 Reviews; 1.6 GOG Connect; 1.7 Galaxy APIs; 1.8 Game ID List); 2 Links; 3 Contributors; HTTP Routing Table.
Welcome to the unofficial documentation of the APIs used by the GOG website and Galaxy client. It’s a very young project, so don’t be surprised if something is missing. But now get ready for a wild ride into a world where GET and POST don’t mean anything and consistency is a lucky mistake.
CHAPTER 1 Contents
1.1 Authentication
1.1.1 Introduction
All GOG APIs support token authorization, similar to OAuth2. The web domains www.gog.com, embed.gog.com and some of the Galaxy domains support session cookies too. They both have to be obtained using the GOG login page, because a CAPTCHA may be required to complete the login process.
1.1.2 Auth-Flow
1. Use an embedded browser like WebKit, Gecko or CEF to send the user to https://auth.gog.com/auth. An add-on in your desktop browser should work as well. The exact details about the parameters of this request are described below.
2. Once the login process is completed, the user should be redirected to https://www.gog.com/on_login_success with a login “code” appended at the end.