Inlined Reference Monitors: Certification, Concurrency and Tree Based Monitoring

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Computer Demos—What Makes Them Tick?

AALTO UNIVERSITY
School of Science and Technology
Faculty of Information and Natural Sciences
Department of Media Technology

Markku Reunanen
Computer Demos—What Makes Them Tick?
Licentiate Thesis
Helsinki, April 23, 2010
Supervisor: Professor Tapio Takala

ABSTRACT OF LICENTIATE THESIS
Author: Markku Reunanen
Date: April 23, 2010
Pages: 134
Title of thesis: Computer Demos—What Makes Them Tick?
Professorship: Contents Production
Professorship code: T013Z
Supervisor: Professor Tapio Takala
Instructor: -

This licentiate thesis deals with a worldwide community of hobbyists called the demoscene. The activities of the community in question revolve around real-time multimedia demonstrations known as demos. The historical frame of the study spans from the late 1970s, and the advent of affordable home computers, up to 2009. So far little academic research has been conducted on the topic and the number of other publications is almost equally low. The work done by other researchers is discussed and additional connections are made to other related fields of study such as computer history and media research. The material of the study consists principally of demos, contemporary disk magazines and online sources such as community websites and archives. A general overview of the demoscene and its practices is provided to the reader as a foundation for understanding the more in-depth topics. One chapter is dedicated to the analysis of the artifacts produced by the community and another to the discussion of the computer hardware in relation to the creative aspirations of the community members.


Archive and Compressed

Archive and compressed
Main article: List of archive formats
• .?Q? – files compressed by the SQ program
• 7z – 7-Zip compressed file
• AAC – Advanced Audio Coding
• ace – ACE compressed file
• ALZ – ALZip compressed file
• APK – Applications installable on Android
• AT3 – Sony's UMD Data compression
• .bke – BackupEarth.com Data compression
• ARC
• ARJ – ARJ compressed file
• BA – Scifer Archive (.ba), Scifer External Archive Type
• big – Special file compression format used by Electronic Arts for compressing the data for many of EA's games
• BIK (.bik) – Bink Video file. A video compression system developed by RAD Game Tools
• BKF (.bkf) – Microsoft backup created by NTBACKUP.EXE
• bzip2 – (.bz2)
• bld – Skyscraper Simulator Building
• c4 – JEDMICS image files, a DOD system
• cab – Microsoft Cabinet
• cals – JEDMICS image files, a DOD system
• cpt/sea – Compact Pro (Macintosh)
• DAA – Closed-format, Windows-only compressed disk image
• deb – Debian Linux install package
• DMG – an Apple compressed/encrypted format
• DDZ – a file which can only be used by the "daydreamer engine" created by "fever-dreamer", a program similar to RAGS; it's mainly used to make somewhat short games.
• DPE – Package of AVE documents made with Aquafadas digital publishing tools.
• EEA – An encrypted CAB, ostensibly for protecting email attachments
• .egg – Alzip Egg Edition compressed file
• EGT (.egt) – EGT Universal Document, also used to create compressed cabinet files; replaces .ecab
• ECAB (.ECAB, .ezip) – EGT Compressed Folder used in advanced systems to compress entire system folders, replaced by EGT Universal Document
• ESS (.ess) – EGT SmartSense File, detects files compressed using the EGT compression system.
• GHO (.gho, .ghs) – Norton Ghost
• gzip (.gz) – Compressed file
• IPG (.ipg) – Format in which Apple Inc.

TIMES of CHANGE in the DEMOSCENE a Creative Community and Its Relationship with Technology

TIMES OF CHANGE IN THE DEMOSCENE
A Creative Community and Its Relationship with Technology
Markku Reunanen

ACADEMIC DISSERTATION
To be presented, with the permission of the Faculty of Humanities of the University of Turku, for public examination in Classroom 125 University Consortium of Pori, on February 17, 2017, at 12.00

TURUN YLIOPISTON JULKAISUJA – ANNALES UNIVERSITATIS TURKUENSIS
Sarja - ser. B osa - tom. 428 | Humaniora | Turku 2017

University of Turku
Faculty of Humanities
School of History, Culture and Arts Studies
Degree Programme in Cultural Production and Landscape Studies
Digital Culture, Juno Doctoral Programme

Supervisors
Professor Jaakko Suominen, University of Turku, Finland
University lecturer Petri Saarikoski, University of Turku, Finland

Pre-examiners
Professor Nick Montfort, Massachusetts Institute of Technology, United States
Associate professor Olli Sotamaa, University of Tampere, Finland

Opponent
Assistant professor Carl Therrien, University of Montreal, Canada

The originality of this thesis has been checked in accordance with the University of Turku quality assurance system using the Turnitin OriginalityCheck service.

ISBN 978-951-29-6716-2 (PRINT)
ISBN 978-951-29-6717-9 (PDF)
ISSN 0082-6987 (PRINT)
ISSN 2343-3191 (ONLINE)
Cover image: Markku Reunanen
Juvenes Print, Turku, Finland 2017

Abstract
UNIVERSITY OF TURKU, Faculty of Humanities, School of History, Culture and Arts Studies, Degree Programme in Cultural Production and Landscape Studies, Digital Culture
REUNANEN, MARKKU: Times of Change in the Demoscene: A Creative Community and Its Relationship with Technology
Doctoral dissertation, 100 pages, 88 appendix pages
January 17, 2017

The demoscene is a form of digital culture that emerged in the mid-1980s after home computers started becoming commonplace.

Amigaos4 Download

Amigaos4 download click here to download Read more, Desktop Publishing with PageStream. PageStream is a creative and feature-rich desktop publishing/page layout program available for AmigaOS. Read more, AmigaOS Application Development. Download the Software Development Kit now and start developing native applications for AmigaOS. Read more.Where to buy · Supported hardware · Features · SDK. Simple DirectMedia Layer port for AmigaOS 4. This is a port of SDL for AmigaOS 4. Some parts were recycled from older SDL port for AmigaOS 4, such as audio and joystick code. Download it here: www.doorway.ru Thank you James! 19 May , In case you haven't noticed yet. It's possible to upload files to OS4Depot using anonymous FTP. You can read up on how to upload and create the required readme file on this page. 02 Apr , To everyone downloading the Diablo 3 archive, April Fools on. File download command line utility: http, https and ftp. Arguments: URL/A,DEST=DESTINATION=TARGET/K,PORT/N,QUIET/S,USER/K,PASSWORD/K,LIST/S,NOSIZE/S,OVERWRITE/S. URL = Download address DEST = File name / Destination directory PORT = Internet port number QUIET = Do not display progress bar. AmigaOS 4 is a line of Amiga operating systems which runs on PowerPC microprocessors. It is mainly based on AmigaOS source code developed by Commodore, and partially on version developed by Haage & Partner. "The Final Update" (for OS version ) was released on 24 December (originally released Latest release: Final Edition Update 1 / De. Purchasers get a serial number inside their box or by email to register their purchase at our website in order to get access to our restricted download area for the game archive, the The game was originally released in for AmigaOS 68k/WarpOS and in December for AmigaOS 4 by Hyperion Entertainment CVBA. -

Vbcc Compiler System

vbcc compiler system
Volker Barthelmann

Table of Contents
1 General
1.1 Introduction
1.2 Legal
1.3 Installation
1.3.1 Installing for Unix
1.3.2 Installing for DOS/Windows
1.3.3 Installing for AmigaOS
1.4 Tutorial
2 The Frontend
2.1 Usage
2.2 Configuration
3 The Compiler
3.1 General Compiler Options
3.2 Errors and Warnings
3.3 Data Types
3.4 Optimizations
3.4.1 Register Allocation
3.4.2 Flow Optimizations
3.4.3 Common Subexpression Elimination
3.4.4 Copy Propagation
3.4.5 Constant Propagation
3.4.6 Dead Code Elimination
3.4.7 Loop-Invariant Code Motion

Vasm Assembler System

vasm assembler system
Volker Barthelmann, Frank Wille
June 2021

Table of Contents
1 General
1.1 Introduction
1.2 Legal
1.3 Installation
2 The Assembler
2.1 General Assembler Options
2.2 Expressions
2.3 Symbols
2.4 Predefined Symbols
2.5 Include Files
2.6 Macros
2.7 Structures
2.8 Conditional Assembly
2.9 Known Problems
2.10 Credits
2.11 Error Messages
3 Standard Syntax Module
3.1 Legal
3.2 Additional options for this module
3.3 General Syntax
3.4 Directives
3.5 Known Problems

Apov Issue 4 Regulars

amiga point of view - dedicated to the amiga
issue 4 - june 2010 - an abime.net publication
reviews • news • tips • charts

apov issue 4

regulars
• editorial
• news
• who are we?
• charts
• letters
• the back page

reviews
• leander
• dragon's breath
• star trek: 25th anniversary
• operation wolf
• cabal
• cavitas
• pinball fantasies
• akira
• the king of chicago
• wwf wrestlemania
• pd games
• round up

features
• in your face: The first person shooter may not be the first genre that comes to mind when you think of the Amiga, but it's seen plenty of them. Read about every last one in gory detail. “A superimposed map is very useful to give an overview of the levels.”
• emulation station: There are literally thousands of games for the Amiga. Not enough for you? Then fire up an emulator and choose from games for loads of other systems. Wise guy. “More control options than you could shake a joypad at and a large number of memory mappers.”
• sensi and sensibility: Best football game for the Amiga? We'd say so. Read our guide to the myriad versions of Sensi. “The Beckhams had long lived in their estate, in the opulence which their eminence afforded them.”

wham
• into the eagles nest: If you're going to storm a castle full of Nazis you're going to need a plan.
• colorado: Up a creek without a paddle? Read these tips and it'll be smooth sailing.

Donald Kay Munro

Library of the Australian Defence Force Academy, University College, The University of New South Wales
Donor: Donald Kay Munro

PLEASE TYPE
UNIVERSITY OF NEW SOUTH WALES
Thesis/Project Report Sheet
Surname or Family name:
First name: DONALD
Other name/s: MI
Abbreviation for degree as given in the University calendar: Ml NF. S.Q
School: COMPUTER SCIENCE
Faculty:

Farmers conduct their business in a spatial environment. They make decisions about the area of land to place under various crops, the area for livestock and the areas which need to have certain treatments (spraying, ploughing) carried out on them. On domestic personal computers, software such as Word Processors and Spreadsheets can assist them with record keeping and financial records; however, there is no commonly available software that can assist with the spatial decisions and spatial record keeping. Geographic Information Systems (GIS) can provide such support, but the existing software is targeted at an expert specialist user and, while offering powerful processing, is too complex for a novice user to derive benefit. Most of the software has its origins in the scientific or research community and would previously have been running on mainframe or powerful minicomputer environments. Many programs require complex instructions and parameters to be typed at a command line, and learning how to use these instructions would consume months of training. This Project used the Evolutionary Model of Structured Rapid Prototyping to define the user requirements of a DeskTop GIS for use by farmers.
This work involved a repetitive cycle of talking to farmers about the information that they regularly used and the sort of information they would like in the future; finding ways of collecting and storing that data; writing programs that would manipulate and display the information; and making those programs accessible to the farmer in an easy-to-use environment on a domestic personal computer. -

English SCACOM Issue 3

English.SCACOM issue 3 (July 2008)
Issue 3 - www.scacom.de.vu - July 2008

• Gold Quest 4
• Making of Nyaaaah! 11
• Interview with Thorsten Schreck
• Interesting things
• Best C64 Games List
• Baracudas story

Prologue
English SCACOM issue 3 with beautiful background pictures, interesting texts and a good game done by Richard Bayliss! First test of the new 1541U is included as well as the news from the last three months. There are a lot of interesting articles and the story of the new game Gold Quest 4 with an interview of the developer.
But it's sad that there was very little feedback for issue 2. Also, nobody sent us texts or wants to translate things. Due to these problems English.SCACOM is now scheduled every three months. The next one will be released in October 2008!
Please help us: write articles and give feedback. Write an e-mail to [email protected].

Imprint
English SCACOM is a free downloadable PDF magazine. It's scheduled every 3 months.
You can publish the magazine on your homepage without changes and link to www.scacom.de.vu only.
Each author has copyright of articles published in the magazine. Don't use without permission of the author!
The best way to help would be if you write some articles for us.
Please send suggestions, corrections or complaints via e-mail.
Editorial staff: Stefan Egger, Joel Reisinger

Download Issue 14

Biggest Issue Ever!
Issue 14, Spring 2003
£4.00 / 8.00 Euro

Quake 2: Read our comprehensive review of Hyperion's latest port.
Hollywood: Take a seat and enjoy our full review of this exciting new multimedia blockbuster!

Contents
Features

The Show Must Go On!
SEAL's Mick Sutton gives us an insight into the production of WoASE.
Back in the good old days we had World of Amiga shows put on every year, usually at a high profile site (Wembley) and all well attended. Everybody wanted to be there and be seen,
[...] usergroups can afford. To give you an idea a venue capable of holding between 300 and 500 people can cost anywhere from £500 to £1000 (outside London) for a day.
[...] balance between space for the punters and giving the exhibitors the stand space they require (some companies get a real bee in their bonnet about where they are situated). The floorplan goes through many revisions before

Editorial
Welcome to another issue of Total Amiga, as you will no-doubt have noticed this issue is rather late, which is a pity as we had improved our punctuality over the last few issues. Unfortunately the main reason behind the delay was that the SCSI controller and PPC on my

Candy for OS4
Candy Factory is a graphics application designed for making logos and other text graphics with high quality 3D textured effects quickly and
[...] A built-in character generator allows you to add effects to text in any font without leaving the program. You can also load shapes (for example a logo)
[...] texture again based on the light source.

QUAKE 2 (Amiga)

Quake 2 for AmigaOS

Table of Contents
• Quake 2 for AmigaOS
• Licence issues
• Features of this port
• Installation and Gamestart
• The GUI
• Mini-FAQ
• Speed-Tweaking
• Hyperion-Demos
• List of included Quake 2 Mods
• Technical Support
• Quake 2 Web-Links
• Credits
• System Requirements

The GUI
When you doubleclick the Icon you see four tabs:

Gfx
Here you can select between MiniGL/Warp3D and Software Rendering. People with 3D Hardware should select MiniGL/Warp3D. It looks nicer and performs better. The game is also playable in Software-Rendering, though. You can also select between Fullscreen and Window Mode on this Tab.

Audio
On this tab you can choose between AHI and Paula-Sound. You can also set up your CD Drive for CD Audio Replay (or disable it).

Input
On this Tab you can Enable or Disable the Mouse and the Joystick. As to the Joystick, the following Input-Devices are supported:
- CD 32 Pad
- PSX Port
- PC-Analog-Stick, by using a Custom-Made Adapter and wessupport.library (included on CD). The same adaptor which worked for Descent:Freespace and ADescent also works for Quake 2.
For PSX Port and PC-Joysticks you can also manipulate the Joystick-Speed and the Deadzone. In case you use a PC-Joystick, PSX Port and CD32 Pad will be disabled. In case you use PSX Port you can choose to disable all other Joysticks, if wanted.

Game
This is the Tab which comes up when you start up the GUI. Here you can choose between Deathmatch, Cooperative Gaming and CTF (as supported by Mods).

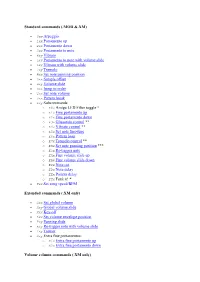

Standard Commands (.MOD &.XM) • 0Xy Arpeggio • 1Xx Portamento Up

Standard commands (.MOD & .XM)
• 0xy Arpeggio
• 1xx Portamento up
• 2xx Portamento down
• 3xx Portamento to note
• 4xy Vibrato
• 5xy Portamento to note with volume slide
• 6xy Vibrato with volume slide
• 7xy Tremolo
• 8xx Set note panning position
• 9xx Sample offset
• Axy Volume slide
• Bxx Jump to order
• Cxx Set note volume
• Dxx Pattern break
• Exy Subcommands:
  o E0x Amiga LED Filter toggle *
  o E1x Fine portamento up
  o E2x Fine portamento down
  o E3x Glissando control **
  o E4x Vibrato control **
  o E5x Set note fine-tune
  o E6x Pattern loop
  o E7x Tremolo control **
  o E8x Set note panning position ***
  o E9x Re-trigger note
  o EAx Fine volume slide up
  o EBx Fine volume slide down
  o ECx Note cut
  o EDx Note delay
  o EEx Pattern delay
  o EFx Funk it! *
• Fxx Set song speed/BPM

Extended commands (.XM only)
• Gxx Set global volume
• Hxy Global volume slide
• Kxx Key-off
• Lxx Set volume envelope position
• Pxy Panning slide
• Rxy Re-trigger note with volume slide
• Txy Tremor
• Xxy Extra fine portamentos:
  o X1x Extra fine portamento up
  o X2x Extra fine portamento down

Volume column commands (.XM only)
• xx Set note volume
• +x Volume slide up
• -x Volume slide down
• Dx Fine volume slide down (displayed as ▼x)
• Lx Panning slide left (displayed as ◀x)
• Mx Portamento to note
• Px Set note panning position
• Rx Panning slide right (displayed as ▶x)
• Sx Set vibrato speed
• Ux Fine volume slide up (displayed as ▲x)
• Vx Vibrato

*) Not implemented, no plans to support
**) Not implemented yet, will be required for feature completeness
***) Not supported on Amiga nor in FT2, effect relocation (8xx, Px) advised

0xy Arpeggio
Syntax: x = semitone offset, y = semitone offset
Example:
C-4 ·1 ·· 037
··· ·· ·· 037
··· ·· ·· 037
··· ·· ·· 037
Arpeggio quickly alters the note pitch between the base note (C-4) and the semitone offsets x (3 = D#4) and y (7 = G-4).
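The semitone arithmetic behind the 0xy example can be sketched in a few lines of Python. This is only an illustration, not code from any tracker: the helper names (`parse_note`, `format_note`, `arpeggio_notes`) are hypothetical, the note spelling follows the `C-4` / `D#4` style used in the example, and the sketch does not model how quickly or in what order a player actually cycles through the three pitches.

```python
# Hypothetical helpers illustrating the 0xy arpeggio offsets; not a tracker API.
NOTE_NAMES = ["C-", "C#", "D-", "D#", "E-", "F-", "F#", "G-", "G#", "A-", "A#", "B-"]


def parse_note(text):
    """Convert tracker notation such as 'C-4' into an absolute semitone index."""
    name, octave = text[:2], int(text[2])
    return octave * 12 + NOTE_NAMES.index(name)


def format_note(index):
    """Convert an absolute semitone index back into tracker notation."""
    octave, step = divmod(index, 12)
    return f"{NOTE_NAMES[step]}{octave}"


def arpeggio_notes(base, effect):
    """Return the base note plus the two pitches named by a 0xy effect.

    `effect` is the three-character effect column, e.g. '037':
    command 0, x = 3 semitones, y = 7 semitones (each one hex digit).
    """
    command, x, y = (int(ch, 16) for ch in effect)
    if command != 0:
        raise ValueError("not an arpeggio (0xy) effect")
    root = parse_note(base)
    return [format_note(root + offset) for offset in (0, x, y)]


print(arpeggio_notes("C-4", "037"))  # ['C-4', 'D#4', 'G-4']
```

With the base note C-4 and the effect 037, the sketch yields C-4, D#4 and G-4, matching the offsets given in the description.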