Introduction to Imaging

Recommended publications

Color Management

Photoshop 5.0 was justifiably praised as a groundbreaking upgrade when it was released in the summer of 1998, although the changes made to the color management setup were less well received in some quarters. This was because the revised system was perceived to be complex and unnecessary. Bruce Fraser once said of the Photoshop 5.0 color management system ‘it’s push-button simple, as long as you know which of the 60 or so buttons to push!’ Attitudes have changed since then (as has the interface) and it is fair to say that most people working today in the pre-press industry are now using ICC color profile managed workflows. The aim of this chapter is to introduce the basic concepts of color management before looking at the color management interface in Photoshop and the various color management settings.

Adobe Photoshop CS6 for Photographers: www.photoshopforphotographers.com

The need for color management

An advertising agency art buyer was once invited to address a meeting of photographers. The chair, Mike Laye, suggested we could ask him anything we wanted, except ‘Would you like to see my book?’ And if he had already seen your book, we couldn’t ask him why he hadn’t called it back in again. And if he had called it in again we were not allowed to ask why we didn’t get the job. And finally, if we did get the job we were absolutely forbidden to ask why the color in the printed ad looked nothing like the original photograph! That in a nutshell is a problem which has bugged many of us throughout our working lives, and it is one which will be familiar to anyone who has ever experienced the difficulty of matching colors on a computer display with the original or a printed output.

Adaptive Quantization Matrices for High Definition Resolutions in Scalable HEVC

Original citation: Prangnell, Lee and Sanchez Silva, Victor (2016) Adaptive quantization matrices for HD and UHD display resolutions in scalable HEVC. In: IEEE Data Compression Conference, Utah, United States, 31 Mar - 01 Apr 2016 Permanent WRAP URL: http://wrap.warwick.ac.uk/73957 Copyright and reuse: The Warwick Research Archive Portal (WRAP) makes this work by researchers of the University of Warwick available open access under the following conditions. Copyright © and all moral rights to the version of the paper presented here belong to the individual author(s) and/or other copyright owners. To the extent reasonable and practicable the material made available in WRAP has been checked for eligibility before being made available. Copies of full items can be used for personal research or study, educational, or not-for profit purposes without prior permission or charge. Provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way. Publisher’s statement: © 2016 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting /republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. A note on versions: The version presented here may differ from the published version or, version of record, if you wish to cite this item you are advised to consult the publisher’s version. -

Dell 24 USB-C Monitor - P2421DC User’s Guide

Dell 24 USB-C Monitor - P2421DC User’s Guide

Monitor Model: P2421DC
Regulatory Model: P2421DCc

NOTE: A NOTE indicates important information that helps you make better use of your computer.
CAUTION: A CAUTION indicates potential damage to hardware or loss of data if instructions are not followed.
WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

Copyright © 2020 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. 2020 – 03 Rev. A01

Contents

About your monitor: Package contents; Product features; Identifying parts and controls; Front view; Back view; Side view; Bottom view; Monitor specifications; Resolution specifications; Supported video modes; Preset display modes; MST Multi-Stream Transport (MST) Modes; Electrical specifications; Physical characteristics; Environmental characteristics; Power management modes; Plug and play capability; LCD monitor quality and pixel policy; Maintenance guidelines; Cleaning your monitor.

Setting up the monitor: Attaching the stand; Connecting your monitor; Connecting the DP cable; Connecting the monitor for DP Multi-Stream Transport (MST) function; Connecting the USB Type-C cable; Connecting the monitor for USB-C Multi-Stream Transport (MST) function; Organizing cables; Removing the stand; Wall mounting (optional).

Operating your monitor: Power on the monitor; USB-C charging options; Using the control buttons; OSD controls; Using the On-Screen Display (OSD) menu

Color Models

Color Models
Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
– Additive (RGB) or subtractive (CMYK)
– Separation of luminance and chromaticity
– Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l’Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• The irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of colors that a display device can represent

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is ‘bloody red’ in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| dye color | absorbs | reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
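The RGB and CMY conversion named in the slides is not spelled out in this excerpt. A minimal sketch of the usual complement relationship follows, assuming components normalized to the range 0 to 1. The function names are illustrative and do not come from the slides.

```python
def rgb_to_cmy(rgb):
    """Convert normalized RGB (each component in [0, 1]) to CMY.

    Assumes the simple complement model: each dye absorbs exactly one
    additive primary, so C = 1 - R, M = 1 - G, Y = 1 - B.
    """
    r, g, b = rgb
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(cmy):
    """Inverse of rgb_to_cmy under the same complement model."""
    c, m, y = cmy
    return (1.0 - c, 1.0 - m, 1.0 - y)


if __name__ == "__main__":
    # Pure red needs no cyan (cyan absorbs red) and full magenta and yellow.
    print(rgb_to_cmy((1.0, 0.0, 0.0)))
```

This matches the absorb/reflect table: magenta absorbs green and yellow absorbs blue, so the two together reflect only red. Real printing workflows use device profiles rather than this idealized formula.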

Color

13 Color

This section describes the toolbox functions that help you work with color image data. Note that “color” includes shades of gray; therefore much of the discussion in this chapter applies to grayscale images as well as color images. Topics covered include

| Topic | Description |
|---|---|
| Terminology | Provides definitions of image processing terms used in this section |
| Working with Different Screen Bit Depths | Describes how to determine the screen bit depth of your system and provides recommendations if you can change the bit depth |
| Reducing the Number of Colors in an Image | Describes how to use imapprox and rgb2ind to reduce the number of colors in an image, including information about dithering |
| Converting to Other Color Spaces | Defines the concept of image color space and describes how to convert images between color spaces |

Terminology

An understanding of the following terms will help you to use this chapter.

| Terms | Definitions |
|---|---|
| Approximation | The method by which the software chooses replacement colors in the event that direct matches cannot be found. The methods of approximation discussed in this chapter are colormap mapping, uniform quantization, and minimum variance quantization. |
| Indexed image | An image whose pixel values are direct indices into an RGB colormap. In MATLAB, an indexed image is represented by an array of class uint8, uint16, or double. The colormap is always an m-by-3 array of class double. We often use the variable name X to represent an indexed image in memory, and map to represent the colormap. |
| Intensity image | An image consisting of intensity (grayscale) values. |
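To make the indexed-image definition concrete, here is a small sketch in plain Python rather than MATLAB. It assumes zero-based indices and a colormap stored as a list of (R, G, B) rows with values between 0 and 1. The names `X` and `cmap` echo the excerpt's convention, and `indexed_to_rgb` is a hypothetical helper, not a toolbox function.

```python
def indexed_to_rgb(X, cmap):
    """Expand an indexed image into an RGB image.

    X    : 2-D list of integer indices (zero-based in this sketch).
    cmap : list of (r, g, b) tuples, one row per palette entry.
    Returns a 2-D list of (r, g, b) tuples looked up from the colormap.
    """
    return [[cmap[index] for index in row] for row in X]


if __name__ == "__main__":
    # A three-entry colormap: black, red, white.
    cmap = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
    X = [[0, 1],
         [2, 1]]
    for row in indexed_to_rgb(X, cmap):
        print(row)
```

Each pixel stores only a small integer, and the color itself lives once in the colormap, which is why reducing the number of colors shrinks the storage per pixel.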

Supported File Types

MyFax Supported File Formats

| Document Type | Versions | Extensions |
|---|---|---|
| Adobe Portable Document Format (PDF) | All Versions | PDF |
| Adobe Postscript | All Versions | PS |
| Adobe Photoshop | v. 3.0 and above | PSD |
| Amiga Interchange File Format (IFF) | Raster Bitmap only | IFF |
| CAD Drawing Exchange Format (DXF) | All AutoCad compatible versions | DXF |
| Comma Separated Values Format | All Versions | CSV |
| Compuserve Graphics Interchange Format | GIF87a, GIF89a | GIF |
| Corel Presentations Slide Show | v. 96 and above | SHW |
| Corel Word Perfect | v. 5.x, 6, 7, 8, 9 | WPD, WP5, WP6 |
| Encapsulated Postscript | All Versions | EPS |
| Hypertext Markup Language | HTML only with base href tag required | HTML, HTM |
| JPEG (Joint Photographic Experts Group) | All Versions | JPG, JPEG |
| Lotus 1-2-3 | v. 2, 3, 4, 5, 96, 97, 9.x | 123, WK1, WK3, WK4 |
| Lotus Word Pro | v. 96, 97, 9.x | LWP |
| Microsoft Excel | v. 5, 95, 97, 2000, 2003, 2007 | XLS, XLSX |
| Microsoft PowerPoint | v. 4 and above | PPT, PPTX |
| Microsoft Publisher | v. 98, 2000, 2002, 2003, 2007 | PUB |
| Microsoft Windows Write | All Versions | WRI |
| Microsoft Word | Win: v. 97, 2000, 2003, 2007; Mac: v. 4, 5.x, 95, 98 | DOC, DOCX |
| Microsoft Word Template | Win: v. 97, 2000, 2003, 2007; Mac: v. 4, 5.x, 95, 98 | DOT, DOTX |
| Microsoft Works Word Processor | v. 4.x, 5, 6, 7, 8.x, 9 | WPS |
| OpenDocument Drawing | All Versions | ODG |
| OpenDocument Presentation | All Versions | ODP |
| OpenDocument Spreadsheet | All Versions | ODS |
| OpenDocument Text | All Versions | ODT |
| PC Paintbrush Graphics (PCX) | All Versions | PCX |
| Plain Text | All Versions | TXT, DOC, LOG, ERR, C, CPP, H |
| Portable Network Graphics (PNG) | All Versions | PNG |
| Quattro Pro | v. | |

Paul Hightower Instrumentation Technology Systems Northridge, CA 91324 [email protected]

COMPRESSION, WHY, WHAT AND COMPROMISES

Authors: Hightower, Paul
Publisher: International Foundation for Telemetering
Journal: International Telemetering Conference Proceedings
Rights: Copyright © held by the author; distribution rights International Foundation for Telemetering
Download date: 06/10/2021 11:41:22
Link to Item: http://hdl.handle.net/10150/631710

Paul Hightower, Instrumentation Technology Systems, Northridge, CA 91324, [email protected]

ABSTRACT

Each 1080 video frame requires 6.2 MB of storage; archiving a one-minute clip requires 22 GB. Playing a 1080p/60 video requires sustained rates of 400 MB/s. These storage and transport parameters pose major technical and cost hurdles. Even the latest technologies would only support one channel of such video. Content creators needed a solution to these roadblocks to enable them to deliver video to viewers and monetize their efforts. Over the past 30 years a pyramid of techniques has been developed to provide ever-increasing compression efficiency. These techniques make it possible to deliver movies on Blu-ray disks, over Wi-Fi and Ethernet. However, there are tradeoffs. Compression introduces latency, image errors and resolution loss. The exact effect may differ from image to image. BER may result in the total loss of strings of frames. We will explore these effects and how they impact test quality and reduce the benefits that HD cameras and lenses bring to telemetry.

INTRODUCTION

Over the past 15 years we have all become accustomed to having television, computers and other video streaming devices show us video in high definition. It has become so commonplace that our community nearly insists that it be brought to the telemetry and test community so that better imagery can be used to better observe and model system behaviors.
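The storage and bandwidth figures in the abstract can be reproduced with simple arithmetic. The sketch below assumes a 1920 x 1080 frame at 24 bits (3 bytes) per pixel and 60 frames per second; the abstract does not state these parameters, so they are assumptions that happen to yield its numbers. Decimal units (1 MB = 10^6 bytes) are also assumed.

```python
# Assumed parameters; not stated explicitly in the abstract.
WIDTH, HEIGHT = 1920, 1080
BYTES_PER_PIXEL = 3          # 24-bit color
FRAMES_PER_SECOND = 60


def frame_bytes(width=WIDTH, height=HEIGHT, bpp=BYTES_PER_PIXEL):
    """Uncompressed size of one frame in bytes."""
    return width * height * bpp


def rate_bytes_per_second(fps=FRAMES_PER_SECOND):
    """Sustained data rate for uncompressed playback."""
    return frame_bytes() * fps


def clip_bytes(seconds, fps=FRAMES_PER_SECOND):
    """Uncompressed size of a clip of the given duration."""
    return rate_bytes_per_second(fps) * seconds


if __name__ == "__main__":
    print(f"frame : {frame_bytes() / 1e6:.1f} MB")
    print(f"rate  : {rate_bytes_per_second() / 1e6:.0f} MB/s")
    print(f"minute: {clip_bytes(60) / 1e9:.1f} GB")
```

Under these assumptions one frame is about 6.2 MB, the sustained rate is about 373 MB/s (which the abstract rounds to 400 MB/s), and one minute is about 22.4 GB.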

Pale Intrusions Into Blue: the Development of a Color Hannah Rose Mendoza

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2004 Pale Intrusions into Blue: The Development of a Color Hannah Rose Mendoza Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY SCHOOL OF VISUAL ARTS AND DANCE PALE INTRUSIONS INTO BLUE: THE DEVELOPMENT OF A COLOR By HANNAH ROSE MENDOZA A Thesis submitted to the Department of Interior Design in partial fulfillment of the requirements for the degree of Master of Fine Arts Degree Awarded: Fall Semester, 2004 The members of the Committee approve the thesis of Hannah Rose Mendoza defended on October 21, 2004. _________________________ Lisa Waxman Professor Directing Thesis _________________________ Peter Munton Committee Member _________________________ Ricardo Navarro Committee Member Approved: ______________________________________ Eric Wiedegreen, Chair, Department of Interior Design ______________________________________ Sally Mcrorie, Dean, School of Visual Arts & Dance The Office of Graduate Studies has verified and approved the above named committee members. ii To Pepe, te amo y gracias. iii ACKNOWLEDGMENTS I want to express my gratitude to Lisa Waxman for her unflagging enthusiasm and sharp attention to detail. I also wish to thank the other members of my committee, Peter Munton and Rick Navarro for taking the time to read my thesis and offer a very helpful critique. I want to acknowledge the support received from my Mom and Dad, whose faith in me helped me get through this. Finally, I want to thank my son Jack, who despite being born as my thesis was nearing completion, saw fit to spit up on the manuscript only once. -

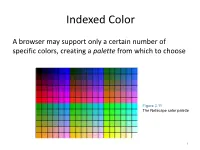

Indexed Color

Indexed Color

A browser may support only a certain number of specific colors, creating a palette from which to choose.

Figure 3.11 The Netscape color palette

QUIZ: How many bits are needed to represent this palette? Show your work.

How to digitize a picture
• Sample it → Represent it as a collection of individual dots called pixels
• Quantize it → Represent each pixel as one of 2^24 possible colors (TrueColor)

Resolution = the number of pixels used to represent a picture

Digitized Images and Graphics
Whole picture
Figure 3.12 A digitized picture composed of many individual pixels

Digitized Images and Graphics
Magnified portion of the picture. See the pixels?
Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800

QUIZ: Images
A low-res image has 200 rows and 300 columns of pixels.
• What is the resolution?
• If the pixels are represented in True-Color, what is the size of the file?
• Same question in High-Color

Two types of image formats
• Raster Graphics = Storage on a pixel-by-pixel basis
• Vector Graphics = Storage in vector (i.e. mathematical) form

Raster Graphics

GIF format
• Each image is made up of only 256 colors (indexed color – similar to palette!)
• But they can be a different 256 for each image!
• Supports animation! Example
• Optimal for line art

PNG format (“ping” = Portable Network Graphics)
Like GIF but achieves greater compression with wider range of color depth
No animations

Bitmap format
Contains the pixel color
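The two quizzes come down to counting bits. The sketch below works through them under stated assumptions: the Netscape palette is taken to be the 216-color web-safe palette (the slide does not give the count), True-Color is 24 bits per pixel, High-Color is 16 bits per pixel, and the file is uncompressed with no header. The function names are illustrative.

```python
def bits_for_palette(num_colors):
    """Smallest number of bits that can index num_colors distinct entries."""
    if num_colors < 1:
        raise ValueError("palette must contain at least one color")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without floats.
    return (num_colors - 1).bit_length()


def raw_image_bytes(rows, cols, bits_per_pixel):
    """Uncompressed pixel data size in bytes, ignoring any file header."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


if __name__ == "__main__":
    print("bits for a 216-color palette:", bits_for_palette(216))
    print("pixels in a 200 x 300 image :", 200 * 300)
    print("True-Color bytes (24 bpp)   :", raw_image_bytes(200, 300, 24))
    print("High-Color bytes (16 bpp)   :", raw_image_bytes(200, 300, 16))
```

With 216 colors, 7 bits (128 values) are too few and 8 bits (256 values) suffice. The 200 x 300 image has 60,000 pixels, so it needs 180,000 bytes in True-Color and 120,000 bytes in High-Color under these assumptions.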

Color Combinations and Contrasts

COLOR COMBINATIONS AND CONTRASTS

Full Value Color Combinations

Above are 18 color combinations tested for visibility, using primary and secondary colors, of full hue and value. Visibility is ranked in the sequence shown, with 1 being the most visible and 18 being the least visible.

Readability

It is essential that outdoor designs are easy to read. Choose colors with high contrast in both hue and value. Contrasting colors are viewed well from great distances, while colors with low contrast will blend together and obscure a message. In fact, research demonstrates that high color contrast can improve outdoor advertising recall by 38 percent.

A standard color wheel clearly illustrates the importance of contrast, hue and value. Opposite colors on the wheel are complementary. An example is red and green (as shown above). They represent a good contrast in hue, but their values are similar. It is difficult for the human eye to process the wavelength variations associated with complementary colors. Therefore, a quivering or optical distortion is sometimes detected when two complementary colors are used in tandem.

Adjacent colors, such as blue and green, make especially poor combinations since their contrast is similar in both hue and value. As a result, adjacent colors create contrast that is hard to discern.

Alternating colors, such as blue and yellow, produce the best combinations since they have good contrast in both hue and value. Black contrasts well with any color of light value and white is a good contrast with colors of dark value. For example, yellow and black are dissimilar in the contrast of both hue and value.

Detectability Model for the Evaluation of Lossy Compression Methods on Radiographic Images Vivek Ramaswami Iowa State University

Iowa State University Capstones, Theses and Dissertations
Retrospective Theses and Dissertations
1996

Detectability model for the evaluation of lossy compression methods on radiographic images
Vivek Ramaswami
Iowa State University

Follow this and additional works at: https://lib.dr.iastate.edu/rtd
Part of the Electrical and Electronics Commons

Recommended Citation
Ramaswami, Vivek, "Detectability model for the evaluation of lossy compression methods on radiographic images" (1996). Retrospective Theses and Dissertations. 250. https://lib.dr.iastate.edu/rtd/250

This Thesis is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected].

Detectability model for the evaluation of lossy compression methods on radiographic images
by Vivek Ramaswami

A thesis submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE
Major: Electrical Engineering
Major Professors: Satish S. Udpa and Joseph N. Gray
Iowa State University, Ames, Iowa, 1996
Copyright © Vivek Ramaswami, 1996. All rights reserved.

Graduate College, Iowa State University
This is to certify that the Master's thesis of Vivek Ramaswami has met the thesis requirements of Iowa State University.
Signature redacted for privacy

TABLE OF CONTENTS
ACKNOWLEDGEMENTS
1 INTRODUCTION
2 IMAGE COMPRESSION METHODS
2.1 Introduction
2.2 Vector quantization
2.2.1 Classified vector quantizer
2.2.2 Coding of shade blocks

Image Resolution

Image resolution

When printing photographs and similar types of image, the size of the file will determine how large the picture can be printed whilst maintaining acceptable quality. This document provides a guide which should help you to judge whether a particular image will reproduce well at the size you want.

What is resolution?

A digital photograph is made up of a number of discrete picture elements, known as “pixels”. We can see these elements if we magnify an image on the screen (see right). Because the number of pixels in the image is fixed, the bigger we print the image, the bigger the pixels will be. If we print the image too big, then the pixels will be visible to the naked eye and the image will appear to be of poor quality.

Let’s take as an example an image from a “5 megapixel” digital camera. Typically this camera at its maximum quality setting will produce images which are 2592 x 1944 pixels. (If we multiply these two figures, we get 5,038,848 pixels, which is approximately 5 million pixels, or 5 megapixels.) Printing this image at various sizes, we can calculate the number of pixels per inch, more commonly referred to as dots per inch (dpi). Note that this measure depends on the image being printed; it is unrelated to the resolution of the printer, which is also expressed in dpi.

Original image size: 2592 x 1944 pixels
Print size (inches): 8 x 6, 16 x 12, 24 x 16, 32 x 24

Small format (up to A3)

When printing images onto A4 or A3 pages, aim for 300dpi if at all possible.
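The pixels-per-inch calculation described above is a single division. The sketch below applies it to the 2592-pixel width of the example image at the listed print widths. The function names are illustrative, and only the horizontal dimension is used because the excerpt's resulting dpi figures are cut off.

```python
def pixels_per_inch(pixels, inches):
    """Image resolution along one dimension when printed at a given size."""
    return pixels / inches


def max_print_inches(pixels, target_dpi=300):
    """Largest print dimension that still meets the target resolution."""
    return pixels / target_dpi


if __name__ == "__main__":
    image_width_px = 2592  # from the 5 megapixel example
    for print_width_in in (8, 16, 24, 32):
        dpi = pixels_per_inch(image_width_px, print_width_in)
        print(f"{print_width_in:>2} in wide -> {dpi:.0f} dpi")
    print(f"max width at 300 dpi: {max_print_inches(image_width_px):.2f} in")
```

Doubling the print width halves the resolution: the 8-inch print works out to 324 dpi, above the 300dpi guideline, while the 16-inch print drops to 162 dpi.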