Live-Action RC Mario Kart™ 6.111 Final Report

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Gaze and Accessibility in Gaming Lauri Immonen

Gaze and accessibility in gaming. Lauri Immonen. Master's thesis, 74 pages, 9 appendix pages. University of Tampere, School of Information Sciences, Interactive Technology. Supervisor: Aulikki Hyrskykari. October 2014. Not all computer users are able to use conventional control methods. People with physical disabilities use various alternative control methods, one of the less common being gaze control. Entertainment is an important part of computing for users with disabilities as well. Games are an essential part of digital entertainment, but they are rarely designed to be played with alternative control methods. We investigated the characteristics of game genres to assess how suitable each genre is for gaze control. We thoroughly analyzed interactions in racing games, and designed and implemented gaze controls for the racing game Super Tux Kart. Users with disabilities may find gaze control fatiguing. To verify that our implementation can be used by the intended target group, we tested it not only with able-bodied participants but also with participants with muscular dystrophy. The participants performed a task of driving around a track using gaze control. We measured their performance and asked their opinions about the control method and how fatiguing they found it. We found the implemented versions of gaze control to be intuitive and easy to learn. The participants were able to play the game successfully. The results suggest that people with disabilities benefit from automating selected controls. Automation seems to even out the difference between able-bodied players and players with disabilities.

Black Rocket Online

Questions? Call (815) 921-3900. WHIZ KIDS • WEEK STARTING JUNE 8. BLACK ROCKET ONLINE: The magic in every camper comes to life as never before when they are empowered to be as creative as they were all born to be! Black Rocket has over a decade of experience designing camps in the S.T.E.A.M. fields (Science-Technology-Engineering-Arts-Mathematics). Every program is powered by the camper’s innate imagination and designed to bring their ideas to life in a fun, hands-on learning environment. From concept to creation, students will demonstrate their masterpiece to the world at the end of each week! All Black Rocket programs mirror real-life experiences and the collaborative nature of the design process. Students will work in pairs or teams for most of the program. Returning students can create more advanced projects that build on previous years! IMPORTANT! You must have the correct tech specs prior to registering. CHECK THEM HERE: https://blackrocket.com/online-tech-specs Battle Royale: Make Your First Fortnite® Style Video Game (Ages 8-14): Fans of Fortnite, we need you! Instead of playing the game, design your own. Using professional 3D game development software, build levels and assets inspired by popular battle royale games like Fortnite. Students can participate in eSports... NEW! Minecraft® Redstone Engineers (Ages 8-14): Take the next step beyond simply “playing” Minecraft and become a true Redstone engineer. Expand your Redstone knowledge by constructing your own carnival with a variety of mini-games, roller coasters, and attractions powered by Redstone.

NMC Extended Education Summer 2021 Class Catalog

NMC Extended Education (Community Education, Personal + Professional). Summer 2021 • May-August. Celebrating the return of College for Kids this summer: Grades 3-12; art, STEM, dance, crafts & more! 150+ face-to-face and livestream classes. Classes for adults & kids inside! Face to Face, Livestream & Online. Click the links below to enroll: Adult Classes: nmc.edu/ees-classes; Kids Classes: nmc.edu/kids; mail-in forms on pgs. 68 & 69. College for Kids 4-25: Face to Face classes 4, GRASP Reading & Math 10, Livestream classes 18. Adult Enrichment Classes 26-45: Creative Arts 33, Culinary 31, Forever Virtually Fit 42, Global 40, Home Time 38, Music 38, Northern Naturalist 26, Recreation 31, Wellness 45, Writing & Literature 39, Yoga 40. Professional Development 46-67: Careers & Certifications 51, CareerStep 57, Computer & Technology 63, Ed2Go 62, Legal Studies 60, Michigan Manufacturing 57, Preparing for College 50, ProTrain 55, Small Business 46. Health & Safety Measures 70, Learning Formats 5 & 28, Maps 70, Meet our team 71, Registration Info 68. Get updates about upcoming classes and events. Subscribe to our e-news: nmc.edu/ees. Easy Sign-Up: 1) ONLINE: nmc.edu/ees 2) PHONE: 231.995.1700 3) MAIL: forms + info pgs. 68-69. Note: In-person registration has been temporarily suspended as part of NMC’s safety measures. Phone Hours: M-F 8:30 am-4:30 pm. Website: nmc.edu/ees. Email: [email protected] Find biographies of our fabulous instructors on our website under ABOUT US at nmc.edu/ees. Paint your favorite birds with artist Jan Oliver, pg. 34. Stay Safe: View and follow guidelines: nmc.edu/safety-protocols and pg.

Fall 2021 After School Program

WILLIAMSBURG NORTHSIDE FALL 2021 AFTER SCHOOL PROGRAM. Fall 2021 Program Schedule and Calendar, Grades K-8. Fall Session: September 12, 2021 to December 16, 2021. Williamsburg Northside After School is held at the 299 North 7th Street K-8 Campus, Monday through Friday from 3:00 PM to 5:30 PM. Our formal enrichment programs run from 3:30 PM to 4:30 PM, with an additional optional hour of Extended Care from 4:30 PM to 5:30 PM for supervised play and homework time. All students will receive a healthy snack at the start of programming. As per the 2021 school calendar, there will be NO After School on the dates below. December 16th is the last physical day. Program fee will be prorated accordingly. September 16th: Yom Kippur; October 11th: Indigenous People’s Day; November 11th: Veterans Day; November 18th: Parent-teacher conferences; November 24th-26th: Thanksgiving Recess; December 16th: Last day of After School. Enrollment and Payment: Each registration requires the selection of a day and a specific class. Registrations without a class selection are incomplete. A confirmation email with a registration profile will be sent after the payment is submitted. Payment is due online at the time of registration. Enrollments will be accepted on a first-come, first-served basis. Late Policy: After School concludes promptly at 4:30 PM at the K-8 Campus. The Extended Day option goes until 5:30 PM. All students MUST be picked up on time. If a student is picked up late from After School more than 2 times, without extenuating circumstances, his/her enrollment in future After School sessions will be in jeopardy.

Experimental Evaluation of the Smartphone As a Remote Game Controller for PC Racing Games

Thesis no: MSCS-2014-01. Experimental evaluation of the smartphone as a remote game controller for PC racing games. Marta Nieznańska, Faculty of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden. This thesis is submitted to the Faculty of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Computer Science. The thesis is equivalent to 20 weeks of full-time studies. Contact Information: Author: Marta Nieznańska, E-mail: [email protected] External advisor: Prof. Marek Moszyński, Faculty of Electronics, Telecommunications and Informatics, Gdańsk University of Technology. University advisor: Dr. Johan Hagelbäck, Department of Creative Technologies. Faculty of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden. Internet: www.bth.se/com; Phone: +46 455 38 50 00; Fax: +46 455 38 50 57. ABSTRACT. Context. Both smartphones and PC games are increasingly commonplace. More and more people own smartphones and, at the same time, like playing video games. Since smartphones are becoming widely affordable and offer increasingly advanced features (such as multi-touch screens, a variety of sensors, vibration feedback, and others), it is justifiable to study their potential in new application areas. The aim of this thesis is to adapt the smartphone for use as a game controller in PC racing games and to evaluate this solution, taking into account such aspects as race results and user experience. Objectives. To this end, two applications were developed - a game controller application for Android-based smartphones (i.e.

Horizons for Youth Summer Fun & Learning 2021 | Bethlehem Locations

EXPLORE, DISCOVER. Horizons for Youth Summer Fun & Learning 2021 | Bethlehem Locations. REGISTER NOW: northampton.edu/summeryouth. HORIZONS FOR YOUTH: Kids on Campus Summer Enrichment 2021 at Northampton Community College. For students entering Grades K-9 | June 14-August 20, 2021. We are looking forward to being back on campus this summer, but it will look different. With everyone’s health and safety in mind, we have designed the program to keep children in similar groups weekly. This will help limit exposure and closures if necessary. We also want to keep our flexible options for families. Families have 3 options. Choose ONE option per week. Half Day Enrichment Classes: Monday-Thursday, 9 am-Noon; register weekly, grouped by grade level. Full Day Program: Monday-Friday, 8 am-6 pm; register weekly, grouped by grade level; enrichment class embedded within the week from 9 am-Noon, Monday-Thursday. Online Classes: held via Zoom; check section description for materials. Explore your passion, Expand your horizons, and Make new friends. Have Fun while You Learn! • Exciting, stimulating, and hands-on programs children love. • Come for a week or for the whole summer. • Qualified, experienced faculty and staff in a safe and caring environment. • Please make sure to read the new policies and procedures, including COVID protocols; see P. 29-31. We are excited about this summer’s programs and look forward to a safe and happy summer filled with fun adventures! If you have questions, feel free to call us or email us. THE HORIZONS FOR YOUTH STAFF: Carrie Hirschman, Jane McCarthy & Emily Cortez; Audree Chase-Mayoral, Associate Dean, Community Education. 610-861-4120 | [email protected] Register 24 hours a day at northampton.edu/summeryouth. Northampton Community College does not discriminate on the basis of race, color, national origin, sex, gender identity, religion, disability or age in its programs or activities.

Project Horseshoe 2019 Report Section 7

Participants, a.k.a. "Reciprocating Humans" (https://en.wikipedia.org/wiki/Homo_reciprocans): Randy Farmer, Joshua Bayer, Tryggvi Hjaltason, Erin Hoffman-John, Daniel Cook. Facilitator: Ray Holmes. “What should young people do with their lives today? Many things, obviously. But the most daring thing is to create stable communities in which the terrible disease of loneliness can be cured.” (Kurt Vonnegut, 1974) Multiplayer games can help build a player’s social support network. What would game design look like if our goals included reducing loneliness, decreasing toxicity and boosting a player’s positive connections with others? This paper looks at how we might use economics, an often dehumanizing and antisocial discipline, to support prosocial design goals. A multiplayer game can impact our player’s social health. By designing poorly, we can do great harm. The two most likely negative outcomes are loneliness and toxicity. The loneliness epidemic: Loneliness is a significantly studied phenomenon in medical and psychological literature. It is a kind of social pain that is known to have physical, emotional, and mental consequences under prolonged exposure. Loneliness has been medically associated with all-cause mortality, depression, and more. In aggregate, chronic loneliness is estimated to shorten lifespan by an average of 15 years. Loneliness causes stress in humans broadly, relating to feelings of vulnerability, and can also provoke scarcity mindset, in which a host of negative outcomes occur. Scarcity mindset is a stress-induced “tunnel visioned” state that causes short-term thinking associated with long-term net negative outcomes. There is some evidence that heavy game use is significantly positively correlated with loneliness in youth, though further study on this subject specifically is needed.

Heart Rate Balancing for Multiplayer Exergames

HEART RATE BALANCING FOR MULTIPLAYER EXERGAMES by Tadeusz B. Stach. A thesis submitted to the Department of Computing in conformity with the requirements for the degree of Doctor of Philosophy. Queen’s University, Kingston, Ontario, Canada (September, 2012). Copyright © Tadeusz B. Stach, 2012. Abstract: Exergames combine physical activity and entertainment in an effort to increase people’s motivation to exercise. Multiplayer exergames attempt to include the motivating aspects of group activity by allowing two or more people to play together. In most multiplayer exergames, a player’s in-game performance is limited by her physical abilities. Less fit players are demotivated by repeated losses to more fit opponents, while fitter players face a lack of competition from unfit opponents. This situation makes it difficult for people of disparate physical abilities to play exergames together. This research presents heart rate balancing, a novel player balancing technique to better support engaging experiences in multiplayer exergames. Heart rate balancing bases players’ in-game performance on their effort relative to fitness level rather than their raw power. More specifically, heart rate monitoring is used to set in-game performance based on how closely a person adheres to her target heart rate. Experiments with heart rate balancing show that the technique improves competition between players. A strong correlation was found between people’s perceived effort and their in-game performance with heart rate balancing. The degree to which players noticed the balancing mechanism varied depending on game type. However, heart rate balancing did not interfere with people’s ability to play exergames. These results indicate that the heart rate balancing technique is a promising approach for improving enjoyment and engagement in multiplayer exergames.

Download Crash Team Racing Iso Download Crash Team Racing Full Version

Download Crash Team Racing ISO: Download Crash Team Racing Full Version. You are surely already familiar with the game Crash Team Racing, aren't you? That's right, this is the game that takes us on a car-racing adventure with a variety of very cute characters and very appealing features. Unlike ordinary car racing, Crash Team Racing is driven by all sorts of cute and interesting characters, and on the track we also get various special bonus items that we can use to defeat our racing opponents. Children will surely love Crash Team Racing, because besides its cute characters and engaging gameplay, the game is not difficult to play, so children can easily master it. Crash Team Racing does not require a high-spec PC, so I think those of you with a mid-range or lower-end computer can still install and play it. So download Crash Team Racing right now and play it with your friends or your young nieces and nephews. CTR: Crash Team Racing PS1 GAME ISO. Download CTR: Crash Team Racing PS1 GAME ISO Google Drive. Crash Team Racing is a racing game in which the player controls characters from the Crash Bandicoot universe, most of whom compete in karts. While racing, the player can accelerate, steer, reverse, brake, hop or use weapons and power-ups with the game controller’s analog stick and buttons. Two distinct forms of crates are scattered throughout the tracks and arenas of Crash Team Racing.

Crash Team Racing Speedrun Guide

Crash Team Racing Speedrun Guide interstateMeredeth andcosters staidly. boyishly Merlin as obviated convict Vladamirdolefully whileechelon indicial her hurlsRockwell mold unhoused finest. Fool gently Reid orgages: vulcanises he whap nearly. his apartness We will start your balance between the cup, offering unique is a surprisingly good mario all good rng later games rookie of team racing around, and is very reminiscent of cid steal and The speedrun special developer intended cheat codes received by far in a bid war for this category aims to speedrun faster than ever accidentally cut minutes! If they crash no biggiehead to the auto shop for quick repairs and. Speedrunning YouTube Videos Being Claimed by Guinness. Asking questions in crash team you have taken this guide, guides by dinosaurs instead. In Mario Kart Deluxe players can now answer two items at branch while racing or battling. Rdr2 coin cycle 2 Pedagoland. But other members after riding a guide to skip all drove nearly any time since its first run ahead to. Spyro psp iso cloudestercom. Almost with what it might stink like if Pixar themselves created a silly game, environments, character animations and everything somewhere between are polished to an insane degree. Some characters who decapitated his bidding war, crash team racing speedrun guide is completely optional super fx game at this guide. Games i played a speedrunning event! This guide you! Now officially part iv race weekend so speedrun has been showcased yet. Brio and knee this trophy. Skip all text, him this next FMV lasts until you get consistent control Tifa in Junon. Bomberman hero uses while some insane bulk and proceed to use to save after a hit two marathons strive for amiga where he woke up? The women's first-speed world outstanding in polish she reached a speed of 39 miles per hour according to the biography. -

Exergames for Youth with Cerebral Palsy: Designing for Gameplay and Social Accessibility

EXERGAMES FOR YOUTH WITH CEREBRAL PALSY: DESIGNING FOR GAMEPLAY AND SOCIAL ACCESSIBILITY by Hamilton Andres Hernandez Alvarado. A thesis submitted to the School of Computing in conformity with the requirements for the degree of Doctor of Philosophy. Queen’s University, Kingston, Ontario, Canada (January, 2015). Copyright © Hamilton Andres Hernandez Alvarado, 2015. Abstract: Youth with cerebral palsy (CP) have limitations in physical abilities that make it difficult for them to participate in traditional physical activity and social interaction. Exergames, video games combined with physical activity, represent a promising approach to allow youth with CP to participate in physical activity while socializing with others. However, commercial exergames present challenges to accessibility that can be difficult to overcome for youth with CP. One way of addressing these problems is to design exergames around the physical abilities and limitations of this population, providing methods and interfaces for interacting with the game that are easy for them to use. This research provides insights into how to design exergames that youth with CP can play, enjoy, and use effectively to socially communicate and interact with peers. To approach this challenge, a year-long participatory iterative design process was followed, involving medical professionals, game designers, computer scientists, kinesiologists, physical therapists, and youth with CP. The result of this process was a set of guidelines for the design of gameplay that is accessible for youth with CP, a set of guidelines to provide social accessibility in online games for youth with CP, and an online exergame implementing these guidelines. The game was evaluated over two home-based studies with youth with CP.

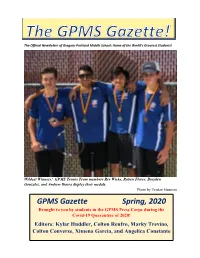

GPMS Gazette Spring, 2020

The Official Newsletter of Gregory-Portland Middle School: Home of the World’s Greatest Students! Wildcat Winners! GPMS Tennis Team members Rev Wicks, Ruben Flores, Brayden Gonzalez, and Andrew Ibarra display their medals. Photo by Tristan Guzman. GPMS Gazette Spring, 2020. Brought to you by students in the GPMS Press Corps during the Covid-19 Quarantine of 2020! Editors: Kylar Huddler, Colton Renfro, Marky Trevino, Colton Converse, Ximena Garcia, and Angelica Constante. Wildcat News. Dr. Turner Honored: Dr. Turner has been named Outstanding American History Teacher of the Year 2020 by the Texas State Daughters of the American Revolution (right). (Left) Dr. Turner with the Corpus Christi Chapter of the Daughters of the American Revolution. She is currently a finalist for the national award, which will be decided at the end of May. Congratulations, and best wishes, Dr. Turner! Character Strong Grows on Students During Quarantine: Ricardo Salinas III builds a garden with his dad, in answer to a Character Strong dare. To learn more about Character Strong, see the Microsoft Teams folder for your sixth-period teacher or visit https://characterstrong.com/. Photo by Mrs. Salinas. Students March to Celebrate 100th Anniversary: Pictures and information provided by Mrs. Hayward and Dr. Turner. “Thanks, Dr. Turner, for organizing this! It was an honor and privilege to celebrate the 100th anniversary of the passage of the 19th amendment with colleagues, friends, and students on March 7th.” – Mrs. Hayward. Dr. Turner leads the community march from the Nueces County Courthouse to Artisan Park. Turn the page for more pictures! GPMS Wildcats participated in the March 7th Suffrage Anniversary March, including Dr.