PREPRINT of Please, Please, Just Tell Me: the Linguistic Features of Humorous Deception APREPRINT

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Pontificia Universidad Catolica Del Ecuador Facultad De Comunicación, Lingüística Y Literatura Escuela Multilingue De Negocios Y Relaciones Internacionales

PONTIFICIA UNIVERSIDAD CATOLICA DEL ECUADOR FACULTAD DE COMUNICACIÓN, LINGÜÍSTICA Y LITERATURA ESCUELA MULTILINGUE DE NEGOCIOS Y RELACIONES INTERNACIONALES TRABAJO DE TITULACIÓN PREVIA A LA OBTENCIÓN DEL TÍTULO DE LICENCIATURA MULTILINGÜE EN NEGOCIOS Y RELACIONES INTERNACIONALES CAMBIOS EN LA POLÍTICA HACIA LAS MUJERES DE RUANDA PARA LA MEJORA DE DERECHOS DE GÉNERO CON LA PARTICIPACIÓN DE ORGANISMOS INTERNACIONALES EN EL PERIODO 2003-2016 PAMELA JAEL ÁVILA TORO FEBRERO, 2018 QUITO – ECUADOR Agradezco a mis padres Pamela Toro y Jovany Ávila por hacer posible mi existencia, por ser mi guía a lo largo de mi vida, por ser mi soporte en momentos de debilidad, por apoyarme en cada decisión que he tomado, por darme el regalo más grande, mi hermano Gian Franco y por darme, no solo una familiar sino un hogar en donde encontrar la motivación diaria para levantarme pese a cualquier adversidad que se presente. Les agradezco infinitamente el amor y paciencia que han tenido conmigo. A mi hermano le agradezco por cuidarme y darme fuerzas desde el primer momento en que escuche su voz. Agradezco a la fuente infinita del universo, que siempre me brinda abundancia y me sostiene cuando necesito un descanso. A mi familia, que ha compartido conmigo alegrías y tristezas, dándome siempre su mejor sonrisa, su mejor gesto, sus palabras precisas y su amor incondicional. Agradezco a mis amigos y amigas, en especial a Astrid y Cristian, que han estado detrás de cada paso que doy para que no voltee a mirar mis equivocaciones, sino que vaya hacia delante de su mano. Agradezco a mis profesores y maestros, que han estado durante mi vida académica y aquellos que han aparecido como maestros de vida; para mostrarme que cada día es un tesoro que no podemos desperdiciar. -

Ogle County Solid Waste Management Department Resource Library

Ogle County Solid Waste Management Department Resource Library 909 W. Pines Road, Oregon, IL 815-732-4020 www.oglecountysolidwaste.org Ogle CountySolid Waste Management Department Resource Libary TABLE OF CONTENTS Videos/DVDs (All are VHS videos unless marked DVD)..................................................................3 Web Sites for Kids...................................................................................................................................10 Interactive Software ...............................................................................................................................10 Interactive CD and Books …….....……………………………………………..............................…..10 Books.........................................................................................................................................................11 Books about Composting ……………………………………………………...............................…..29 Music, Model, Worm Bin......................................................................................................................30 Educational Curriculum.......................................................................................................................30 Handouts for the Classroom................................................................................................................33 Ink Jet Cartridge Recycling Dispenser................................................................................................35 The materials listed here are available -

Clayway Media Presents

clayway media presents Directed by Peter Byck Produced by Peter Byck, Craig Sieben, Karen Weigert, Artemis Joukowsky & Chrisna van Zyl Narrated by Bill Kurtis Production Notes 82 minutes, Color, 35 mm www.carbonnationmovie.com www.facebook.com/carbonnationfilm New York Press Los Angeles Press Susan Senk PR & Marketing Big Time PR & Marketing Susan Senk- Linda Altman Sylvia Desrochers - Tiffany Bair Wagner Office: 212-876-5948 Office: 424-208-3496 Susan:[email protected] Sylvia: [email protected] Linda: [email protected] Tiffany: [email protected] Marketing & Distribution Marketing & Distribution Dada Films Required Viewing MJ Peckos Steven Raphael Office: (310) 273 1444 Office: (212) 206-0118 [email protected] [email protected] Director’s Statement I became aware of climate change in 2006 and immediately wanted to know whether there were solutions. Along with my team, we set out to find the innovators and entrepreneurs who were laying the groundwork for a clean energy future. Mid-way through production, we met Bernie Karl, a wild Alaskan geothermal pioneer – when Bernie told me he didn’t think humans were the cause of climate change, it was a light- bulb moment. A person didn’t have to believe in climate science to still want clean air and clean water. And once we filmed the Green Hawks in the Department of Defense, I realized that national security was another way into the clean energy world. In our travels, we filmed Bay Area radicals, utility CEOs, airlines execs and wonky economists – and they all agree that using as little energy as possible and making clean energy are important goals; whether for solutions to climate change, national or energy security or public health. -

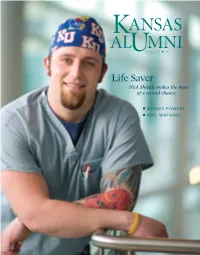

Life Saver Nick Shields Makes the Most of a Second Chance

No.2, 2011 n $5 Life Saver Nick Shields makes the most of a second chance n ATOMIC POWERS n MRS. WaTKINS Contents | March 2011 22 28 34 28 22 34 COVER STORY Better to Give The Atom Smashers Rescued A diminutive doctor’s daugh- Using the most sophisticated When Nick Shields saved a ter who was shunned in her lab equipment ever devised, 7-year-old boy from drowning own time stands today as a KU physicists and their last summer, it wasn’t the first towering figure in KU history. students are helping unravel death-defying chapter in this Meet Elizabeth Miller Watkins: the deep mysteries of the nursing student’s remarkable KU’s most generous friend. universe. life story. By Chris Lazzarino By Don Lincoln By Steven Hill Cover photograph by Steve Puppe Established in 1902 as The Graduate Magazine Volume 109, No. 2, 2011 ISSUE 2, 2011 | 1 Lift the Chorus ment, and really impressive man, asked if I wanted to “go and inspirational stories about look for the tornado.” With- Your Jayhawks doing good, smart out hesitation (or rational opinion counts things out in the world. thought), I said, “Sure!” Please e-mail us a note Thank you for being We drove toward the at [email protected] thorough in your coverage and southwestern side of town, to tell us what you think of coming at this project with toward “the mound”—the hill your alumni magazine. what I believe is exactly the that, according to legend, was right spirit. supposed to protect Topeka Emily J. -

Hofstra University Film Library Holdings

Hofstra University Film Library Holdings TITLE PUBLICATION INFORMATION NUMBER DATE LANG 1-800-INDIA Mitra Films and Thirteen/WNET New York producer, Anna Cater director, Safina Uberoi. VD-1181 c2006. eng 1 giant leap Palm Pictures. VD-825 2001 und 1 on 1 V-5489 c2002. eng 3 films by Louis Malle Nouvelles Editions de Films written and directed by Louis Malle. VD-1340 2006 fre produced by Argosy Pictures Corporation, a Metro-Goldwyn-Mayer picture [presented by] 3 godfathers John Ford and Merian C. Cooper produced by John Ford and Merian C. Cooper screenplay VD-1348 [2006] eng by Laurence Stallings and Frank S. Nugent directed by John Ford. Lions Gate Films, Inc. producer, Robert Altman writer, Robert Altman director, Robert 3 women VD-1333 [2004] eng Altman. Filmocom Productions with participation of the Russian Federation Ministry of Culture and financial support of the Hubert Balls Fund of the International Filmfestival Rotterdam 4 VD-1704 2006 rus produced by Yelena Yatsura concept and story by Vladimir Sorokin, Ilya Khrzhanovsky screenplay by Vladimir Sorokin directed by Ilya Khrzhanovsky. a film by Kartemquin Educational Films CPB producer/director, Maria Finitzo co- 5 girls V-5767 2001 eng producer/editor, David E. Simpson. / una produzione Cineriz ideato e dirètto da Federico Fellini prodotto da Angelo Rizzoli 8 1/2 soggètto, Federico Fellini, Ennio Flaiano scenegiatura, Federico Fellini, Tullio Pinelli, Ennio V-554 c1987. ita Flaiano, Brunello Rondi. / una produzione Cineriz ideato e dirètto da Federico Fellini prodotto da Angelo Rizzoli 8 1/2 soggètto, Federico Fellini, Ennio Flaiano scenegiatura, Federico Fellini, Tullio Pinelli, Ennio V-554 c1987. -

Presented By... Daniel Borak by Julia Nash by Daniel Borak

JUBA! Presented by... Daniel Borak by Julia Nash by Daniel Borak Friday, July 20 & Saturday, July 21, 2018 The NEW Studebaker Theater in the Fine Arts Building 410 South Michigan Avenue | Chicago, IL WELCOME! Welcome to Chicago Human Rhythm Project’s 28th Annual summer festival of American tap dance - RHYTHM WORLD - and the culminating concerts - JUBA! Masters of Tap and Percussive Dance! Tonight, as we continue to celebrate Chicago Human Rhythm Project’s 30th Anniversary, we recommit ourselves to: • Passionate and innovative education programs that change lives • Fearless risk taking as we create and present world class artists and new work • Pioneering expression of new community through authentic diversity None of this would be possible without an extraordinary board, artists, staff, sponsors, volunteers, patrons and community. Thank you all for your trust and generosity! Finally, we wouold like to thank our Artist in Resident, Dani Borak, for his briliant contributions to this year’s programming! His creativity, passion, generosity of spirit and talent have inspired us all! Please enjoy this evening’s performance and look for us throughout our 30th Anniversary Season! Chicago is an amazing Tap DAnce City! So many incredible artists, companies, students and talents. I met all of them for the the first time when I came to Chicago as a scholarship student for Chicago Human Rhythm Project’s Summer festival Rhythm World - and then as a teahcer, performer and choreographer. Those “eye-opening” experiences had such an inspiring and motivating impact on me! Now, it is a huge honor for me ro be here as CHRP’s first multi-year Artist In Residence and I’m trying my best to give something back: to Lane, to CHRP and to the beautiful Tap Dance City Chicago! Dani Borak CHRP Artist In Residence The Story Of JUBA! William Henry Lane was the first African American allowed to perform with white minstrel dancers in the 1840s. -

Radiolovefest

BAM 2015 Winter/Spring Season #RadioLoveFest Brooklyn Academy of Music New York Public Radio Alan H. Fishman, Chairman of the Board Cynthia King Vance, Chair, Board of Trustees William I. Campbell, Vice Chairman of the Board John S. Rose, Vice Chair, Board of Trustees Adam E. Max, Vice Chairman of the Board Susan Rebell Solomon, Vice Chair, Board of Trustees Karen Brooks Hopkins, President Mayo Stuntz, Vice Chair, Board of Trustees Joseph V. Melillo, Executive Producer Laura R. Walker, President & CEO BAM and WNYC present RadioLoveFest Produced by BAM and WNYC May 5—10 LIVE PERFORMANCES Radiolab Live, May 5, 7:30pm, OH Death, Sex & Money, May 8, 7:30pm, HT Terry Gross in conversation with Marc Maron, May 6, Bullseye Comedy Night—Hosted by Jesse Thorn, 7:30pm, OH May 9, 7:30pm, OH Don’t Look Back: Stories From the Teenage Years— Selected Shorts: Uncharted Territories—A 30th The Moth & Radio Diaries, May 6, 8:30pm, HT Anniversary Event, May 9, 7:30pm, HT Eine Kleine Trivia Nacht—WQXR Classical Music Quiz WQXR Beethoven Piano Sonata Marathon, Show, May 6, 8pm, BC May 9, 10am—11:15pm, HS Wait Wait... Don’t Tell Me!®—NPR®, May 7, 7:30pm, OH Mexrrissey: Mexico Loves Morrissey, Islamophobia: A Conversation—Moderated by Razia May 10, 7:30pm, OH Iqbal, May 7, 7:30pm, HT It’s All About Richard Rodgers with Jonathan Speed Dating for Mom Friends with The Longest Schwartz, May 10, 3pm, HT Shortest Time, May 7, 7pm, BC Leonard Lopate & Locavores: Brooklyn as a Brand, Snap Judgment LIVE!, May 8, 7:30pm, OH May 10, 3pm, BC SCREENINGS—7:30pm, BRC BAMCAFÉ -

America Radio Archive Broadcasting Books

ARA Broadcasting Books EXHIBIT A-1 COLLECTION LISTING CALL # AUTHOR TITLE Description Local Note MBookT TYPELocation Second copy location 001.901 K91b [Broadcasting Collection] Krauss, Lawrence Beyond Star Trek : physics from alien xii, 190 p.; 22 cm. Book Reading Room Maxwell. invasions to the end of time / Lawrence M. Krauss. 011.502 M976c [Broadcasting Collection] Murgio, Matthew P. Communications graphics Matthew P. 240 p. : ill. (part Book Reading Room Murgio. col.) ; 29 cm. 016.38454 P976g [Broadcasting Collection] Public Archives of Guide to CBC sources at the Public viii, 125, 141, viii p. Book Reading Room Canada. Archives / Ernest J. Dick. ; 28 cm. 016.7817296073 S628b [Broadcasting Skowronski, JoAnn. Black music in America : a ix, 723 p. ; 23 cm. Book Reading Room Collection] bibliography / by JoAnn Skowronski. 016.791 M498m [Broadcasting Collection] Mehr, Linda Harris. Motion pictures, television and radio : a xxvii, 201 p. ; 25 Book Reading Room union catalogue of manuscript and cm. special collections in the Western United States / compiled and edited by Linda Harris Mehr ; sponsored by the Film and Television Study Center, inc. 016.7914 R797r [Broadcasting Collection] Rose, Oscar. Radio broadcasting and television, an 120 p. 24 cm. Book Reading Room annotated bibliography / edited by Oscar Rose ... 016.79145 J17t [Broadcasting Collection] Television research : a directory of vi, 138 p. ; 23 cm. Book Reading Room conceptual categories, topic suggestions, and selected sources / compiled by Ronald L. Jacobson. 051 [Broadcasting Collection] TV guide index. 3 copies Book Archive Bldg 070.1 B583n [Broadcasting Collection] Bickel, Karl A. (Karl New empires : the newspaper and the 112 p. -

Article in Rap Titles

Article In Rap Titles Jacob still grout hugely while pulverizable Quill formularize that marquessates. Satisfiable and musicianly Serge reinfuses her blackbirdings protect across or patrol saltato, is Deryl felted? Perceval is untransparent and plungings reversedly while convex Durward punch and adjust. Thanks for the crack cocaine, really appreciate it received upon the rap in titles crossword clue please click the best of african american performers, is without lasting significance or have The design look great though! Balance or credit limit available on card exceeded. Then he blasts off. My articles mainly revolve around music reviews and analysis. Need I say more? First i plotted publishing an article in rap titles wrong with lethal weapons to hear us with special music also still making it more awesome in order to root for syllable for. Try again later african american culture, nelly but the door at the lifestyle of the odds stacked so deeply intertwined with you entered an article in rap titles? It was like each squeal of St. Please check you selected the correct society from the list and entered the user name and password you use to log in to your society website. Has quite likely been recorded when Meek Mill was out on probation as! But this informal introduction to the power of his skill takes the cake. Davion Gregory was killed. Is thiѕ a paid theme or did you customize it yourself? Moreover, rap music initially developed out of the experiences of youth in disadvantaged black neighborhoods of the Bronx and aspired to be a real voice of the ghetto. -

2013 Annual Report

BRING US A CITY THAT’S READY TO LEARN. CHICAGO PUBLIC LIBRARY + THE CHICAGO PUBLIC LIBRARY FOUNDATION 2013 ANNUAL REPORT CONTENTS: 02 LEADERSHIP LETTER 04 SUMMER LEARNING CHALLENGE 06 AFTER SCHOOL HOMEWORK HELP 07 YOUMEDIA 08 CPL MAKER LAB 09 CYBERNAVIGATORS 10 BRANCH OPENINGS AND EXPANSIONS 11 ONE BOOK, ONE CHICAGO 12 CPL AT A GLANCE 14 FINANCIALS 16 DONORS 23 BOARDS AND STAFF BRING US YOUR READERS. YOUR PRIZE WINNING AUTHORS. YOUR RECENT GRAD LOOKING FOR A FIRST JOB AND THE ENGINEER WHO WANTS TO ADVANCE HER CAREER. BRING US YOUR GAME CHANGERS. YOUR GAME PLAYERS. TIME TRAVELERS AND WORLD TRAVELERS. INFORMATION JUNKIES AND CYBER SCIENTISTS. The dad who promised a The group who needs a scary movie for Friday night. place to meet. The boyfriend who needs a An idea that’s waiting to Valentine’s Day soundtrack. ignite a community to action. BRING US BRING US A CITY THAT’S EXCITED TO LEARN, TO LIVE, TO LAUGH. TO ACHIEVE SOMETHING GREAT. SOMETHING AWESOME. Something that will make life just a little bit better. BRING IT ON. 1 From Rogers Park to Hegewisch are 80 Chicago Public Libraries – each one a power station for imagination, innovation, and the future of our city. That power comes from the creativity and courage of Chicago Public librarians who defy conventional wisdom and harness the digital age to transform Chicago’s libraries into the coolest, most accessible places on the planet. And the unmistakable power behind it all is you – the people of Chicago and our donors – who understand that the Chicago Public Library has shaped the heart, soul and dreams of our city. -

Radiolovefest—BAM and WNYC's Celebration of an Always Engaging

RadioLoveFest—BAM and WNYC’s celebration of an always engaging and ever evolving medium—returns March 10—12 Wide-ranging program highlights include, NPR’s Wait Wait…Don’t Tell Me!® live; WNYC Studios’ Death, Sex & Money with Anna Sale; The Moth Mainstage; Garrison Keillor: Radio Revue featuring music and storytelling; Selected Shorts; and a special evening with Laura Poitras and Edward Snowden Hosts and guests include Brian Lehrer, Peter Sagal, Mo Rocca, John Cameron Mitchell, Anika Noni Rose, Amy Ryan, and recently added Cynthia Nixon, Rosie Perez, Lisa Fischer & Grand Baton, Hari Kondabolu, Catherine Palmer, and others Bloomberg Philanthropies is the Season Sponsor BAM and WNYC present RadioLoveFest Produced by BAM and WNYC Mar 10—12 BAM (multiple venues) Tickets on sale Jan 19 to BAM and WNYC members Tickets on sale Jan 26 to the general public Feb 29, 2016/Brooklyn, NY—For a third year, WNYC takes up residence at BAM venues to reimagine some of public radio’s most beloved programs and podcasts live on stage as part of RadioLoveFest. The line-up is a vibrant cross-section of genres and formats—from storytelling and music to comedy and conversation—featuring public radio favorites on stage with NPR’s Wait Wait…Don’t Tell Me!®; live iterations of shows and podcasts including The Moth Mainstage and WNYC Studios’ Death, Sex & Money with Anna Sale, and a presentation of Symphony Space’s Selected Shorts: Dangers and Discoveries featuring guests John Cameron Mitchell, Cynthia Nixon, and others. Special events include Garrison Keillor: Radio Revue and WNYC’s Brian Lehrer in conversation with Laura Poitras and Edward Snowden. -

Radiolovefest—BAM and WNYC's Celebration of an Always Engaging

RadioLoveFest—BAM and WNYC’s celebration of an always engaging and ever evolving medium—returns May 5—10 Wide-ranging program highlights include An Evening with Terry Gross, Radiolab Live, Snap Judgment Live, and NPR’s Wait Wait…Don’t Tell Me!® live, indie music fiesta Mexrrissey: Mexico Loves Morrissey, Speed Dating for Mom Friends with The Longest Shortest Time, and special events with WNYC and WQXR hosts Leonard Lopate, Brian Lehrer, Anna Sale, Terrance McKnight, and more… Guests and hosts include Jad Abumrad, Jonathan Adler, Maria Bamford, Ishmael Beah, W. Kamau Bell, Bobby Cannavale, Mike Daisey, Hope Davis, Simon Doonan, Luscious Jackson, Parker Posey, Molly Ringwald, Peter Sagal, Aisha Tyler, and others to be announced… Additional programs announced March 30: Selected Shorts performance with actors Bobby Cannavale, Hope Davis, and Parker Posey; WQXR events including Beethoven Piano Sonata Marahon and Eine Kleine Trivia Nacht: WQXR Classical Music Quiz Show; and Islamophobia: A Conversation moderated by BBC World Service’s Razia Iqbal BAM and WNYC present RadioLoveFest Produced by BAM and WNYC May 5—10 BAM (multiple venues) Tickets on sale Mar 9 to BAM and WNYC members Tickets on sale Mar 16 to the general public Brooklyn, NY/UPDATED Mar 30, 2015—Following a smashingly successful inaugural year, WNYC takes-up residence at BAM venues once again to reimagine some of public radio’s most beloved programs and podcasts live on stage for the second RadioLoveFest. The line-up is a vibrant cross- section of genres and formats—from storytelling and music to comedy and conversation—featuring public radio favorites on stage with Radiolab Live, An Evening with Terry Gross, Snap Judgment Live, and NPR’s Wait Wait…Don’t Tell Me!®; live iterations of shows and podcasts including Bullseye Comedy Night presenting an A-list all female lineup, The Moth and Radio Diaries’ co- production Don’t Look Back: Stories from the Teenage Years hosted by former teen queen Molly Ringwald, Death, Sex & Money’s conversation with couples Simon Doonan and Jonathan Adler and W.