In-Season Prediction of Batting Averages: a Field Test of Empirical Bayes and Bayes Methodologies

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

NCAA Division I Baseball Records

Division I Baseball Records Individual Records .................................................................. 2 Individual Leaders .................................................................. 4 Annual Individual Champions .......................................... 14 Team Records ........................................................................... 22 Team Leaders ............................................................................ 24 Annual Team Champions .................................................... 32 All-Time Winningest Teams ................................................ 38 Collegiate Baseball Division I Final Polls ....................... 42 Baseball America Division I Final Polls ........................... 45 USA Today Baseball Weekly/ESPN/ American Baseball Coaches Association Division I Final Polls ............................................................ 46 National Collegiate Baseball Writers Association Division I Final Polls ............................................................ 48 Statistical Trends ...................................................................... 49 No-Hitters and Perfect Games by Year .......................... 50 2 NCAA BASEBALL DIVISION I RECORDS THROUGH 2011 Official NCAA Division I baseball records began Season Career with the 1957 season and are based on informa- 39—Jason Krizan, Dallas Baptist, 2011 (62 games) 346—Jeff Ledbetter, Florida St., 1979-82 (262 games) tion submitted to the NCAA statistics service by Career RUNS BATTED IN PER GAME institutions -

Sabermetrics: the Past, the Present, and the Future

Sabermetrics: The Past, the Present, and the Future Jim Albert February 12, 2010 Abstract This article provides an overview of sabermetrics, the science of learn- ing about baseball through objective evidence. Statistics and baseball have always had a strong kinship, as many famous players are known by their famous statistical accomplishments such as Joe Dimaggio’s 56-game hitting streak and Ted Williams’ .406 batting average in the 1941 baseball season. We give an overview of how one measures performance in batting, pitching, and fielding. In baseball, the traditional measures are batting av- erage, slugging percentage, and on-base percentage, but modern measures such as OPS (on-base percentage plus slugging percentage) are better in predicting the number of runs a team will score in a game. Pitching is a harder aspect of performance to measure, since traditional measures such as winning percentage and earned run average are confounded by the abilities of the pitcher teammates. Modern measures of pitching such as DIPS (defense independent pitching statistics) are helpful in isolating the contributions of a pitcher that do not involve his teammates. It is also challenging to measure the quality of a player’s fielding ability, since the standard measure of fielding, the fielding percentage, is not helpful in understanding the range of a player in moving towards a batted ball. New measures of fielding have been developed that are useful in measuring a player’s fielding range. Major League Baseball is measuring the game in new ways, and sabermetrics is using this new data to find better mea- sures of player performance. -

Understanding Advanced Baseball Stats: Hitting

Understanding Advanced Baseball Stats: Hitting “Baseball is like church. Many attend few understand.” ~ Leo Durocher Durocher, a 17-year major league vet and Hall of Fame manager, sums up the game of baseball quite brilliantly in the above quote, and it’s pretty ridiculous how much fans really don’t understand about the game of baseball that they watch so much. This holds especially true when you start talking about baseball stats. Sure, most people can tell you what a home run is and that batting average is important, but once you get past the basic stats, the rest is really uncharted territory for most fans. But fear not! This is your crash course in advanced baseball stats, explained in plain English, so that even the most rudimentary of fans can become knowledgeable in the mysterious world of baseball analytics, or sabermetrics as it is called in the industry. Because there are so many different stats that can be covered, I’m just going to touch on the hitting stats in this article and we can save the pitching ones for another piece. So without further ado – baseball stats! The Slash Line The baseball “slash line” typically looks like three different numbers rounded to the thousandth decimal place that are separated by forward slashes (hence the name). We’ll use Mike Trout‘s 2014 slash line as an example; this is what a typical slash line looks like: .287/.377/.561 The first of those numbers represents batting average. While most fans know about this stat, I’ll touch on it briefly just to make sure that I have all of my bases covered (baseball pun intended). -

The Rules of Scoring

THE RULES OF SCORING 2011 OFFICIAL BASEBALL RULES WITH CHANGES FROM LITTLE LEAGUE BASEBALL’S “WHAT’S THE SCORE” PUBLICATION INTRODUCTION These “Rules of Scoring” are for the use of those managers and coaches who want to score a Juvenile or Minor League game or wish to know how to correctly score a play or a time at bat during a Juvenile or Minor League game. These “Rules of Scoring” address the recording of individual and team actions, runs batted in, base hits and determining their value, stolen bases and caught stealing, sacrifices, put outs and assists, when to charge or not charge a fielder with an error, wild pitches and passed balls, bases on balls and strikeouts, earned runs, and the winning and losing pitcher. Unlike the Official Baseball Rules used by professional baseball and many amateur leagues, the Little League Playing Rules do not address The Rules of Scoring. However, the Little League Rules of Scoring are similar to the scoring rules used in professional baseball found in Rule 10 of the Official Baseball Rules. Consequently, Rule 10 of the Official Baseball Rules is used as the basis for these Rules of Scoring. However, there are differences (e.g., when to charge or not charge a fielder with an error, runs batted in, winning and losing pitcher). These differences are based on Little League Baseball’s “What’s the Score” booklet. Those additional rules and those modified rules from the “What’s the Score” booklet are in italics. The “What’s the Score” booklet assigns the Official Scorer certain duties under Little League Regulation VI concerning pitching limits which have not implemented by the IAB (see Juvenile League Rule 12.08.08). -

An Offensive Earned-Run Average for Baseball

OPERATIONS RESEARCH, Vol. 25, No. 5, September-October 1077 An Offensive Earned-Run Average for Baseball THOMAS M. COVER Stanfortl University, Stanford, Californiu CARROLL W. KEILERS Probe fiystenzs, Sunnyvale, California (Received October 1976; accepted March 1977) This paper studies a baseball statistic that plays the role of an offen- sive earned-run average (OERA). The OERA of an individual is simply the number of earned runs per game that he would score if he batted in all nine positions in the line-up. Evaluation can be performed by hand by scoring the sequence of times at bat of a given batter. This statistic has the obvious natural interpretation and tends to evaluate strictly personal rather than team achievement. Some theoretical properties of this statistic are developed, and we give our answer to the question, "Who is the greatest hitter in baseball his- tory?" UPPOSE THAT we are following the history of a certain batter and want some index of his offensive effectiveness. We could, for example, keep track of a running average of the proportion of times he hit safely. This, of course, is the batting average. A more refined estimate ~vouldb e a running average of the total number of bases pcr official time at bat (the slugging average). We might then notice that both averages omit mention of ~valks.P erhaps what is needed is a spectrum of the running average of walks, singles, doublcs, triples, and homcruns per official time at bat. But how are we to convert this six-dimensional variable into a direct comparison of batters? Let us consider another statistic. -

"What Raw Statistics Have the Greatest Effect on Wrc+ in Major League Baseball in 2017?" Gavin D

1 "What raw statistics have the greatest effect on wRC+ in Major League Baseball in 2017?" Gavin D. Sanford University of Minnesota Duluth Honors Capstone Project 2 Abstract Major League Baseball has different statistics for hitters, fielders, and pitchers. The game has followed the same rules for over a century and this has allowed for statistical comparison. As technology grows, so does the game of baseball as there is more areas of the game that people can monitor and track including pitch speed, spin rates, launch angle, exit velocity and directional break. The website QOPBaseball.com is a newer website that attempts to correctly track every pitches horizontal and vertical break and grade it based on these factors (Wilson, 2016). Fangraphs has statistics on the direction players hit the ball and what percentage of the time. The game of baseball is all about quantifying players and being able give a value to their contributions. Sabermetrics have given us the ability to do this in far more depth. Weighted Runs Created Plus (wRC+) is an offensive stat which is attempted to quantify a player’s total offensive value (wRC and wRC+, Fangraphs). It is Era and park adjusted, meaning that the park and year can be compared without altering the statistic further. In this paper, we look at what 2018 statistics have the greatest effect on an individual player’s wRC+. Keywords: Sabermetrics, Econometrics, Spin Rates, Baseball, Introduction Major League Baseball has been around for over a century has given awards out for almost 100 years. The way that these awards are given out is based on statistics accumulated over the season. -

Salary Correlations with Batting Performance

Salary correlations with batting performance By: Jaime Craig, Avery Heilbron, Kasey Kirschner, Luke Rector, Will Kunin Introduction Many teams pay very high prices to acquire the players needed to make that team the best it can be. While it often seems that high budget teams like the New York Yankees are often very successful, is the high price tag worth the improvement in performance? We compared many statistics including batting average, on base percentage, slugging, on base plus slugging, home runs, strike outs, stolen bases, runs created, and BABIP (batting average for balls in play) to salaries. We predicted that higher salaries will correlate to better batting performances. We also divided players into three groups by salary range, with the low salary range going up to $1 million per year, the mid-range salaries from $1 million to $10 million per year, and the high salaries greater than $10 million per year. We expected a stronger correlation between batting performance and salaries for players in the higher salary range than the correlation in the lower salary ranges. Low Salary Below $1 million In figure 1 is a correlation plot between salary and batting statistics. This correlation plot is for players that are making below $1 million. We see in all of the plots that there is not a significant correlation between salary and batting statistics. It is , however, evident that players earning the lowest salaries show the lowest correlations. The overall trend for low salary players--which would be expected--is a negative correlation between salary and batting performance. This negative correlation is likely a result of players getting paid according to their specific performance, or the data are reflecting underpaid rookies who have not bloomed in the major leagues yet. -

Combining Radar and Optical Sensor Data to Measure Player Value in Baseball

sensors Article Combining Radar and Optical Sensor Data to Measure Player Value in Baseball Glenn Healey Department of Electrical Engineering and Computer Science, University of California, Irvine, CA 92617, USA; [email protected] Abstract: Evaluating a player’s talent level based on batted balls is one of the most important and difficult tasks facing baseball analysts. An array of sensors has been installed in Major League Baseball stadiums that capture seven terabytes of data during each game. These data increase interest among spectators, but also can be used to quantify the performances of players on the field. The weighted on base average cube model has been used to generate reliable estimates of batter performance using measured batted-ball parameters, but research has shown that running speed is also a determinant of batted-ball performance. In this work, we used machine learning methods to combine a three-dimensional batted-ball vector measured by Doppler radar with running speed measurements generated by stereoscopic optical sensors. We show that this process leads to an improved model for the batted-ball performances of players. Keywords: Bayesian; baseball analytics; machine learning; radar; intrinsic values; forecasting; sensors; batted ball; statistics; wOBA cube 1. Introduction The expanded presence of sensor systems at sporting events has enhanced the enjoy- ment of fans and supported a number of new applications [1–4]. Measuring skill on batted balls is of fundamental importance in quantifying player value in baseball. Traditional measures for batted-ball skill have been based on outcomes, but these measures have a low Citation: Healey, G. Combining repeatability due to the dependence of outcomes on variables such as the defense, the ball- Radar and Optical Sensor Data to park dimensions, and the atmospheric conditions [5,6]. -

Batter Handedness Project - Herb Wilson

Batter Handedness Project - Herb Wilson Contents Introduction 1 Data Upload 1 Join with Lahman database 1 Change in Proportion of RHP PA by Year 2 MLB-wide differences in BA against LHP vs. RHP 2 Equilibration of Batting Average 4 Individual variation in splits 4 Logistic regression using Batting Average splits. 7 Logistic regressions using weighted On-base Average (wOBA) 11 Summary of Results 17 Introduction This project is an exploration of batter performance against like-handed and opposite-handed pitchers. We have long known that, collectively, batters have higher batting averages against opposite-handed pitchers. Differences in performance against left-handed versus right-handed pitchers will be referred to as splits. The generality of splits favoring opposite-handed pitchers masks variability in the magnitude of batting splits among batters and variability in splits for a single player among seasons. In this contribution, I test the adequacy of split values in predicting batter handedness and then examine individual variability to explore some of the nuances of the relationships. The data used primarily come from Retrosheet events data with the Lahman dataset being used for some biographical information such as full name. I used the R programming language for all statistical testing and for the creation of the graphics. A copy of the code is available on request by contacting me at [email protected] Data Upload The Retrosheet events data are given by year. The first step in the analysis is to upload dataframes for each year, then use the rbind function to stitch datasets together to make a dataframe and use the function colnames to add column names. -

How to Do Stats

EXPLANATION OF STATS IN SCORE BOOK FIELDING STATISTICS COLUMNS DO - Defensive Outs The number of put outs the team participated in while each player was in the line-up. Defensive outs are used in National Championships as a qualification rule. PO - Put out (10.09) A putout shall be credited to each fielder who (1) Catches a fly ball or a line drive, whether fair or foul. (2) Catches a thrown ball, which puts out a batter or a runner. (3) Tags a runner when the runner is off the base to which he is legally entitled. A – Assist (10.10) Any fielder who throws or deflects a battered or thrown ball in such a way that a putout results or would have except for a subsequent error, will be credited with an Assist. E – Error (10.12) An error is scored against any fielder who by any misplay (fumble, muff or wild throw) prolongs the life of the batter or runner or enables a runner to advance. BATTING STATISTICS COLUMNS PA - Plate Appearance Every time the batter completes his time at bat he is credited with a PA. Note: if the third out is made in the field he does not get a PA but is first to bat in the next innings. AB - At Bat (10.02(a)(1)) When a batter has reached 1st base without the aid of an ‘unofficial time at bat’. i.e. do not include Base on Balls, Hit by a Pitched Ball, Sacrifice flies/Bunts and Catches Interference. R – Runs (2.66) every time the runner crosses home plate scoring a run. -

Does the Defensive Shift Employed by an Opposing Team Affect an MLB

Does the Defensive Shift Employed by an Opposing team affect an MLB team’s Batted Ball Quality and Offensive Performance? 11/20/2019 Abstract This project studies proportions of batted ball quality across the 2019 MLB season when facing two different types of defensive alignment. It also attempts to answer if run production is affected by shifts. Batted ball quality is split into six groups (barrel, solid contact, flare, poor (topped), poor (under), and poor(weak)) while defensive alignments are split into two (no shift and shift). Relative statistics come from all balls put in play excluding sacrifice bunts in the 2019 MLB season. The study shows there to be differences in the proportions of batted ball quality relative to defensive alignment. Specifically, the proportion of barrels (balls barreled) against the shift was greater than the proportion of barrels against no shift. Barrels also proved to result in the highest babip (batting average on balls in play) + slg (slugging percentage), where babip + slg then proved to be a good predictor of overall offensive performance measured in woba (weighted on-base average). There appeared to be a strong positive correlation between babip + slg and woba. MLB teams may consider this data when deciding which defensive alignment to play over the course of a game. However, they will most likely want to extend this research by evaluating each player on a case by case basis. 1 Background and Signifigance Do MLB teams hit the ball better when facing a certain type of defensive alignment? As the shift becomes increasingly employed in Major League Baseball these types of questions become more and more important. -

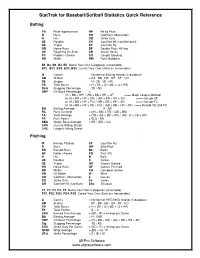

Stattrak for Baseball/Softball Statistics Quick Reference

StatTrak for Baseball/Softball Statistics Quick Reference Batting PA Plate Appearances HP Hit by Pitch R Runs CO Catcher's Obstruction H Hits SO Strike Outs 2B Doubles SH Sacrifice Hit (sacrifice bunt) 3B Triples SF Sacrifice Fly HR Home Runs DP Double Plays Hit Into OE Reaching On-Error SB Stolen Bases FC Fielder’s Choice CS Caught Stealing BB Walks RBI Runs Batted In B1, B2, B3, B4, B5 Name Your Own Categories (renamable) BS1, BS2, BS3, BS4, BS5 Create Your Own Statistics (renamable) G Games = Number of Batting records in database AB At Bats = PA - BB - HP - SH - SF - CO 1B Singles = H - 2B - 3B - HR TB Total Bases = H + 2B + (2 x 3B) + (3 x HR) SLG Slugging Percentage = TB / AB OBP On-Base Percentage = (H + BB + HP) / (AB + BB + HP + SF) <=== Major League Method or (H + BB + HP + OE) / (AB + BB + HP + SF) <=== Include OE or (H + BB + HP + FC) / (AB + BB + HP + SF) <=== Include FC or (H + BB + HP + OE + FC) / (AB + BB + HP + SF) <=== Include OE and FC BA Batting Average = H / AB RC Runs Created = ((H + BB) x TB) / (AB + BB) TA Total Average = (TB + SB + BB + HP) / (AB - H + CS + DP) PP Pure Power = SLG - BA SBA Stolen Base Average = SB / (SB + CS) CHS Current Hitting Streak LHS Longest Hitting Streak Pitching IP Innings Pitched SF Sacrifice Fly R Runs WP Wild Pitch ER Earned-Runs Bk Balks BF Batters Faced PO Pick Offs H Hits B Balls 2B Doubles S Strikes 3B Triples GS Games Started HR Home Runs GF Games Finished BB Walks CG Complete Games HB Hit Batter W Wins CO Catcher's Obstruction L Losses SO Strike Outs Sv Saves SH Sacrifice Hit