A Bayesian Approach to Multi-Drone Source Localization Methods

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Educator Activity Booklet to the Arkansas Inland Maritime Museum

Educator Activity Booklet to the Arkansas Inland Maritime Museum Field trips to the Arkansas Inland Maritime Museum include a guided tour through USS Razorback submarine and an optional age appropriate scavenger hunt through our museum. Students have a limited time at the facility, so completing activities with students at school will help them better understand submarines. There are some activities that introduce students to what submarines are and how they work; while others are follow up activities. There are four main subject areas covered: English Language Arts, Math, Science, and Geography. The activities are organized by grade level then by subject area. Kindergarten—Second Grade……………………………………………………2 Third—Fifth Grade…………………………………………………………………6 Sixth—Eighth Grade………………………………………………………………11 Ninth—Twelfth Grade……………………………………………………………..16 Appendix……………………………………………………………………………18 If your class would like to take a field trip to the Museum please contact us by calling (501) 371-8320 or emailing [email protected]. We offer tours to school groups Wednesday through Saturday 10:00 AM to dusk and Sunday 1:00 PM to dusk. We do give special rates to school groups who book their field trips in advance. Arkansas Inland Maritime Museum | Educator Activity Booklet 1 Kindergarten through Second Grade English Language Arts A fun way to introduce young students to what a submarine is before visiting is by reading The Magic School Bus on the Ocean Floor to your students. The teacher should explain that while the bus turned into a “submarine” it is different from a submarine that is used in warfare. After your class has visited the museum the class can create an anchor chart that shows the new vocabulary they learned. -

Two US Navy's Submarines

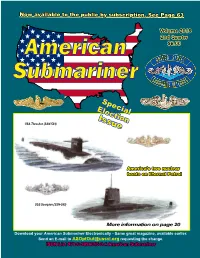

Now available to the public by subscription. See Page 63 Volume 2018 2nd Quarter American $6.00 Submariner Special Election Issue USS Thresher (SSN-593) America’s two nuclear boats on Eternal Patrol USS Scorpion (SSN-589) More information on page 20 Download your American Submariner Electronically - Same great magazine, available earlier. Send an E-mail to [email protected] requesting the change. ISBN List 978-0-9896015-0-4 American Submariner Page 2 - American Submariner Volume 2018 - Issue 2 Page 3 Table of Contents Page Number Article 3 Table of Contents, Deadlines for Submission 4 USSVI National Officers 6 Selected USSVI . Contacts and Committees AMERICAN 6 Veterans Affairs Service Officer 6 Message from the Chaplain SUBMARINER 7 District and Base News This Official Magazine of the United 7 (change of pace) John and Jim States Submarine Veterans Inc. is 8 USSVI Regions and Districts published quarterly by USSVI. 9 Why is a Ship Called a She? United States Submarine Veterans Inc. 9 Then and Now is a non-profit 501 (C) (19) corporation 10 More Base News in the State of Connecticut. 11 Does Anybody Know . 11 “How I See It” Message from the Editor National Editor 12 2017 Awards Selections Chuck Emmett 13 “A Guardian Angel with Dolphins” 7011 W. Risner Rd. 14 Letters to the Editor Glendale, AZ 85308 18 Shipmate Honored Posthumously . (623) 455-8999 20 Scorpion and Thresher - (Our “Nuclears” on EP) [email protected] 22 Change of Command Assistant Editor 23 . Our Brother 24 A Boat Sailor . 100-Year Life Bob Farris (315) 529-9756 26 Election 2018: Bios [email protected] 41 2018 OFFICIAL BALLOT 43 …Presence of a Higher Power Assoc. -

Appendix As Too Inclusive

Color profile: Disabled Composite Default screen Appendix I A Chronological List of Cases Involving the Landing of United States Forces to Protect the Lives and Property of Nationals Abroad Prior to World War II* This Appendix contains a chronological list of pre-World War II cases in which the United States landed troops in foreign countries to pro- tect the lives and property of its nationals.1 Inclusion of a case does not nec- essarily imply that the exercise of forcible self-help was motivated solely, or even primarily, out of concern for US nationals.2 In many instances there is room for disagreement as to what motive predominated, but in all cases in- cluded herein the US forces involved afforded some measure of protection to US nationals or their property. The cases are listed according to the date of the first use of US forces. A case is included only where there was an actual physical landing to protect nationals who were the subject of, or were threatened by, immediate or po- tential danger. Thus, for example, cases involving the landing of troops to punish past transgressions, or for the ostensible purpose of protecting na- tionals at some remote time in the future, have been omitted. While an ef- fort to isolate individual fact situations has been made, there are a good number of situations involving multiple landings closely related in time or context which, for the sake of convenience, have been treated herein as sin- gle episodes. The list of cases is based primarily upon the sources cited following this paragraph. -

Black Sailors During the War of 1812 Lauren Mccormack, 2005 Revised by Kate Monea and Carl Herzog, 2020

Black Sailors During the War of 1812 Lauren McCormack, 2005 Revised by Kate Monea and Carl Herzog, 2020 A publication of the USS Constitution Museum, Boston © 2020 USS Constitution Museum | usscm.org Black Sailors During the War of 1812 Lauren McCormack, 2005 Revised by Kate Monea and Carl Herzog, 2020 CONTENTS Introduction .............................................................1 Free Blacks in the Post-Revolutionary American North ........................2 Free Blacks in Boston, Massachusetts ........................................5 Black Participation in the Maritime Trade ....................................7 Life at Sea for Black Sailors in the early United States Navy ....................10 Black Sailors on USS Constitution ..........................................17 A publication of the USS Constitution Museum, Boston © 2020 USS Constitution Museum | usscm.org Introduction At the beginning of the nineteenth century, free black men from the northeastern United States, struggling to make their way in a highly discriminatory American society, went to sea in the merchant marine and the U.S. Navy, including aboard USS Constitution. By no means did shipboard life completely extract them from the prejudices of a white-dominated culture, but it often provided them with better opportunities than they had on land. Like their fellow white sailors, black seamen in the Early Republic could count on stable pay with the benefit of room and board. For many, sea service and its pay provided a path to a better life ashore. Because race was not specifically noted in U.S. Navy personnel records at the time, much remains unknown about these men. However, a survey of the status of life for free blacks on shore sheds light on why some may have found seafaring an attractive opportunity. -

Albert J. Baciocco, Jr. Vice Admiral, US Navy (Retired)

Albert J. Baciocco, Jr. Vice Admiral, U. S. Navy (Retired) - - - - Vice Admiral Baciocco was born in San Francisco, California, on March 4, 1931. He graduated from Lowell High School and was accepted into Stanford University prior to entering the United States Naval Academy at Annapolis, Maryland, in June 1949. He graduated from the Naval Academy in June 1953 with a Bachelor of Science degree in Engineering, and completed graduate level studies in the field of nuclear engineering in 1958 as part of his training for the naval nuclear propulsion program. Admiral Baciocco served initially in the heavy cruiser USS SAINT PAUL (CA73) during the final days of the Korean War, and then in the diesel submarine USS WAHOO (SS565) until April of 1957 when he became one of the early officer selectees for the Navy's nuclear submarine program. After completion of his nuclear training, he served in the commissioning crews of three nuclear attack submarines: USS SCORPION (SSN589), as Main Propulsion Assistant (1959-1961); USS BARB (SSN596), as Engineer Officer (1961-1962), then as Executive Officer (1963- 1965); and USS GATO (SSN615), as Commanding Officer (1965-1969). Subsequent at-sea assignments, all headquartered in Charleston, South Carolina, included COMMANDER SUBMARINE DIVISION FORTY-TWO (1969-1971), where he was responsible for the operational training readiness of six SSNs; COMMANDER SUBMARINE SQUADRON FOUR (1974-1976), where he was responsible for the operational and material readiness of fifteen SSNs; and COMMANDER SUBMARINE GROUP SIX (1981-1983), where, during the height of the Cold War, he was accountable for the overall readiness of a major portion of the Atlantic Fleet submarine force, including forty SSNs, 20 SSBNs, and various other submarine force commands totaling approximately 20,000 military personnel, among which numbered some forty strategic submarine crews. -

DL 4-1968 Mediterranean Cruise

USS Willis A. Lee (DL-4) Mediterranean Cruise 10 January 1968 - 17 May 1968 LTJG David C. Mader Served Aboard: June 1967 – January 1969 pg. 1 Introduction I received my commission in June of 1967 after graduating from OCS in Newport. After 4 weeks of gunnery school in Dam Neck, VA, I reported aboard the Lee a green Ensign in July. My first billet was that of Ordinance Officer, later Fox Division Officer, and eventually after the Med Cruise, First Lieutenant. After some brief training periods at sea off the East Coast in the fall of 1967, the Lee’s departure for the Med in January of 1968 was an exciting event for me. Memories tend to fade after almost 50 years. At the recent DLA reunion, comparing memories from the Lee’s 1968 Med cruise with shipmates showed some conflicting remembrances of the itinerary and events of that deployment. Short of accessing the ship’s log, documentation is difficult to find. This chronicle is based on the best resource I could find – a series of 10 lengthy letters I wrote to my parents over the course of the cruise. As mothers often do, mine faithfully saved every letter, which I have today. In the letters, I included port calls and dates, shipboard and onshore events of significance, and some personal impressions of my first extended deployment. Hopefully, the shared memories which follow will be of interest to the reader, particularly shipmates who shared the experience with me on the Lee. I should also point out that DLA shipmate Howard Dobson authored a similar commentary on this deployment spanning January, February and March, when he then departed the ship. -

Remembering USS SCORPION (SSN 589) – Lost, May 1968

Remembering USS SCORPION (SSN 589) – Lost, May 1968 USS SCORPION (SSN 589), a 3500-ton Skipjack class nuclear-powered attack submarine built at Groton, Connecticut, was commissioned in July 1960. Assigned to the Atlantic Fleet, she took part in the development of contemporary submarine warfare tactics and made periodic deployments to the Mediterranean Sea and other areas where the presence of a fast and stealthy submarine would be beneficial. SCORPION began another Mediterranean cruise in February 1968. She operated with the 6 th Fleet into May, and then headed west for home. On May 21, SCORPION’s crew indicated their position to be about 50 miles south of the Azores. Six days later she was reported overdue at Norfolk. A search was initiated, but on June 5, SCORPION and all hands were declared “presumed lost.” Her name was struck from the Naval Register on June 30, 1968. In late October 1968, her remains were found on the sea floor over 10,000 feet below the surface by a towed deep-submergence vehicle deployed from USNS MIZAR (T-AGOR-11). Photographs, taken then and later, showed that her hull had suffered fatal damage while she was running submerged, and that even more severe damage occurred as she sank. The cause of the initial damage continues to generate controversy decades later. One of the first photographs of SCORPION (SSN 589), taken on 27 June 1960, off New London, Connecticut, during builder's trials. The trials were under the direction of VADM Hyman G. Rickover, shown on sailplanes with CDR James F. Calvert, former skipper of USS SKATE (SSN 578), who described the performance of the ship and crew as “outstanding.” SCORPION’s commanding officer, CDR Norman B. -

Water Exploration Booklet

Water Exploration Activity Booklet by the Arkansas Inland Maritime Museum Water Exploration activities are designed to be included into educators’ geography lesson plans. These activities can be used by educators throughout the continental United States. Kindergarten through Second Grade…………………………………………………………..2 Third through Fifth Grade………………………………………………………………………..4 Sixth through Eighth Grade……………………………………………………………………...5 Ninth through Twelfth Grade…………………………………………………………………….7 Arkansas Inland Maritime Museum | Water Exploration 1 Kindergarten through Second Grade Kindergarten: Display your state map. First Grade: Display a United States map. Second Grade: Display a world map. Have the students locate their town (state and country). Then students should identify the difference between land and water. What is the closest water source to your town? What rivers are in your state? Some rivers are used to trade goods and raw materials. Use the United States Waterways Map to find out if your state’s river(s) are used for this purpose. These rivers that are used for trade eventually connect to the ocean. Find a river closest to your town and follow it to the ocean. What ocean does your river go to? Locate Arkansas on the United States Waterways Map and explain to students that a United State Naval submarine is on display in Arkansas for people to visit. Have students figure out how the submarine got to North Little Rock, Arkansas. During the map discussion use new terminology with your students such as the cardinal directions (north, south, east, and west) when explaining a location. Arkansas Inland Maritime Museum | Water Exploration 2 “United States Inland Waterways Map” Arkansas Inland Maritime Museum | Water Exploration 3 Third through Fifth Grade Display a United States map and gives students a blank map. -

Salvage Diary from 1 March – 1942 Through 15 November, 1943

Salvage Diary from 1 March – 1942 through 15 November, 1943 INDUSTRIAL DEPARTMENT WAR DIARY COLLECTION It is with deep gratitude to the National Archives and Records Administration (NARA) in San Bruno, California for their kind permission in acquiring and referencing this document. Credit for the reproduction of all or part of its contents should reference NARA and the USS ARIZONA Memorial, National Park Service. Please contact Sharon Woods at the phone # / address below for acknowledgement guidelines. I would like to express my thanks to the Arizona Memorial Museum Association for making this project possible, and to the staff of the USS Arizona Memorial for their assistance and guidance. Invaluable assistance was provided by Stan Melman, who contributed most of the ship classifications, and Zack Anderson, who provided technical guidance and Adobe scans. Most of the Pacific Fleet Salvage that was conducted upon ships impacted by the Japanese attack on Pearl Harbor occurred within the above dates. The entire document will be soon be available through June, 1945 for viewing. This salvage diary can be searched by any full or partial keyword. The Diaries use an abbreviated series of acronyms, most of which are listed below. Their deciphering is work in progress. If you can provide assistance help “fill in the gaps,” please contact: AMMA Archival specialist Sharon Woods (808) 422-7048, or by mail: USS Arizona Memorial #1 Arizona Memorial Place Honolulu, HI 96818 Missing Dates: 1 Dec, 1941-28 Feb, 1942 (entire 3 months) 11 March, 1942 15 Jun -

CONGRESSIONAL RECORD—SENATE March 23, 1999 COMMITTEE on FINANCE SUBCOMMITTEE on INVESTIGATIONS I Ask That a Portion of His Award Win- Mr

5234 CONGRESSIONAL RECORD—SENATE March 23, 1999 COMMITTEE ON FINANCE SUBCOMMITTEE ON INVESTIGATIONS I ask that a portion of his award win- Mr. SMITH of New Hampshire. Mr. Mr. SMITH of New Hampshire. Mr. ning article be printed in the RECORD President, the Finance Committee re- President, I ask unanimous consent on and intend to have the remainder of quests unanimous consent to conduct a behalf of the Permanent Subcommittee the article printed in the RECORD over hearing on Tuesday, March 23, 1999 be- on Investigations of the Governmental the next several days. ginning at 10 a.m. in room 215 Dirksen. Affairs Committee to meet on Tuesday, The material follows: The PRESIDING OFFICER. Without March 23, 1999, for a hearing on the SUBMISS: THE MYSTERIOUS DEATH OF THE objection, it is so ordered. topic of ‘‘Securities Fraud On The U.S.S. ‘‘SCORPION’’ (SSN 589) COMMITTEE ON FOREIGN RELATIONS Internet.’’ (By Mark Bradley) Mr. SMITH of New Hampshire. Mr. The PRESIDING OFFICER. Without At around midnight on May 16, 1968, U.S.S. President, I ask unanimous consent objection, it is so ordered. Scorpion (SSN 589) slipped quietly through that the Committee on Foreign Rela- SUBCOMMITTEE ON TECHNOLOGY, TERRORISM, the Straits of Gibraltar and paused just long tions be authorized to meet during the AND GOVERNMENT INFORMATION enough off the choppy breakwaters of Rota, session of the Senate on Tuesday, Mr. SMITH of New Hampshire. Mr. Spain, to rendezvous with a boat and offload March 23, 1999 at 2:30 p.m. to hold a two crewmen and several messages. -

Combat Losses of Nuclear-Powered Warships: Contamination, Collateral Damage and the Law

Combat Losses of Nuclear-Powered Warships: Contamination, Collateral Damage and the Law Akira Mayama 93 INT’L L. STUD. 132 (2017) Volume 93 2017 Published by the Stockton Center for the Study of International Law ISSN 2375-2831 International Law Studies 2017 Combat Losses of Nuclear-Powered Warships: Contamination, Collateral Damage and the Law Akira Mayama CONTENTS I. Introduction .................................................................................................. 133 II. Collateral Damage and the Significance of Determining Its Scope ..... 135 A. Collateral Damage Based on the Principle of Proportionality ....... 135 B. Legal Issues Stemming from the Combat Loss of Nuclear- Powered Warships ............................................................................. 137 III. Collateral Damage Rule and Theaters of Naval Warfare ..................... 138 A. Neutral Land Territory and Territorial Seas .................................... 138 B. Civilians and Civilian Objects in Belligerent Land Territory and Territorial Seas ................................................................................... 140 C. Belligerent and Neutral Merchant Shipping on the High Seas ..... 141 D. Neutral Exclusive Economic Zones ................................................. 142 IV. Collateral Damage and Nuclear Contamination .................................... 146 A. Nuclear Contamination Not Causing Physical Damage ................ 146 B. Nuclear Contamination of the Natural Environment ................... 149 V. Nuclear Contamination -

Maryland Archeology Month 2018 Sponsors

Maryland Archeo logy Month 2018 CHARTING THE PAST: 30 Y EARS OF EXPLORING MARYLAND’S SUBMERGED HISTORY You are cordially invited to join Maryland Governor Larry Hogan in celebrating April 2018 as “Maryland Archeology Month” April 2018 1 Charting the Past: 30 Years of Exploring Maryland's Submerged History Some of us like to experience our archeological adventures in a corn or soybean field, breathing fresh country air (I confess!), while others prefer to don a wet suit, strap a bottle of canned air on their backs, and dive into the murky and mysterious underwater realm. Crazy? Not at all. Putting aside the lure of sunken treasure, with all of its attendant ethical issues, there is nevertheless something captivating and engrossing about humankind’s use of the sea that is so heroic as to be inspiring, and so frightening as to be thrilling. For thousands of years our kind have plied the seas in pursuit of discovery, trade, and travel. The commodities of the ages have moved across the seas. Most of the time such endeavors ended well, but often enough that a subdiscipline of archeology is devoted to it, ships floundered. These submerged time-capsules represent a frozen moment in history, and can be great fodder for archeological inquiry. In Maryland, we have a rich maritime history that begins thousands of years ago with Native Americans moving steatite and rhyolite from source areas in the Piedmont and Blue Ridge provinces west of the Bay to the Eastern Shore by canoe. From earliest Colonial times, European settlers made their homes along Maryland waters, as seafaring represented cutting edge transportation that allowed communication and commerce with the homes left behind in the Old World.