Delineation of Agents for Games Using Deep Reinforcement Learning [1] Pranav Batra, [2] Sambhav Jain, [3] Asst

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Playvs Announces $30.5M Series B Led by Elysian Park

PLAYVS ANNOUNCES $30.5M SERIES B LED BY ELYSIAN PARK VENTURES, INVESTMENT ARM OF THE LA DODGERS, ADDITIONAL GAME TITLES AND STATE EXPANSIONS FOR INAUGURAL SEASON FEBRUARY 2019 High school esports market leader introduces Rocket League and SMITE to game lineup adds associations within Alabama, Mississippi, and Texas to sanctioned states for Season One and closes a historic round of funding from Diddy, Adidas, Samsung and others EMBARGOED FOR NOVEMBER 20TH AT 10AM EST / 7AM PST LOS ANGELES, CA - November 20th - PlayVS – the startup building the infrastructure and official platform for high school esports - today announced its Series B funding of $30.5 million led by Elysian Park Ventures, the private investment arm of the Los Angeles Dodgers ownership group, with five existing investors doubling down, New Enterprise Associates, Science Inc., Crosscut Ventures, Coatue Management and WndrCo, and new groups Adidas (marking the company’s first esports investment), Samsung NEXT, Plexo Capital, along with angels Sean “Diddy” Combs, David Drummond (early employee at Google and now SVP Corp Dev at Alphabet), Rahul Mehta (Partner at DST Global), Rich Dennis (Founder of Shea Moisture), Michael Dubin (Founder and CEO of Dollar Shave Club), Nat Turner (Founder and CEO of Flatiron Health) and Johnny Hou (Founder and CEO of NZXT). This milestone round comes just five months after PlayVS’ historic $15M Series A funding. “We strive to be at the forefront of innovation in sports, and have been carefully -

Principles of Game Design

Principles of Game Design • To improve the chances for success of a game, there are several principles of Game Design Principles good game design to be followed. – Some of this is common sense. – Some of this is uncommon sense … learn from other people’s experiences and mistakes! • Remember: – Players do not know what they want, but they 1 know it when they see it! 2 Principles of Game Design Player Empathy • To start with, we will take a look at some • A good designer always has an idea of what is of the fundamental principles of game going on in a player’s head. design. – Know what they expect and do not expect. – Anticipate their reactions to different game • Later, we will come back and take a look situations. at how we can properly design a game to • Anticipating what a player wants to do next in a make sure these principles are being video game situation is important. properly met. – Let the player try it, and ensure the game responds intelligently. This makes for a better experience. – If necessary, guide the player to a better course of 3 action. 4 Player Empathy Player Empathy Screen shot from Prince of Persia: The Sands of Time. This well designed game Screen shot from Batman Vengeance. The people making Batman games clearly demonstrates player empathy. The prince will miss this early jump5 do not empathize with fans of this classic comic. Otherwise, Batman games6 over a pit, but will not be punished and instead can try again the correct way. would not be so disappointing on average. -

ROUTE GENERATION ALGORITHM in UT2004 Gregg T

ROUTE GENERATION FOR A SYNTHETIC CHARACTER (BOT) USING A PARTIAL OR INCOMPLETE KNOWLEDGE ROUTE GENERATION ALGORITHM IN UT2004 Gregg T. Hanold, Technical Manager Oracle National Security Group gregg. [email protected] David T. Hanold, Animator DavidHanoldCG [email protected] Abstract. This paper presents a new Route Generation Algorithm that accurately and realistically represents human route planning and navigation for Military Operations in Urban Terrain (MOUT). The accuracy of this algorithm in representing human behavior is measured using the Unreal TournamenFM 2004 (UT2004) Game Engine to provide the simulation environment in which the differences between the routes taken by the human player and those of a Synthetic Agent (BOT) executing the A-star algorithm and the new Route Generation Algorithm can be compared. The new Route Generation Algorithm computes the BOT route based on partial or incomplete knowledge received from the UT2004 game engine during game play. To allow BOT navigation to occur continuously throughout the game play with incomplete knowledge of the terrain, a spatial network model of the UT2004 MOUT terrain is captured and stored in an Oracle 11 9 Spatial Data Object (SOO). The SOO allows a partial data query to be executed to generate continuous route updates based on the terrain knowledge, and stored dynamic BOT, Player and environmental parameters returned by the query. The partial data query permits the dynamic adjustment of the planned routes by the Route Generation Algorithm based on the current state of the environment during a simulation. The dynamic nature of this algorithm more accurately allows the BOT to mimic the routes taken by the human executing under the same conditions thereby improving the realism of the BOT in a MOUT simulation environment. -

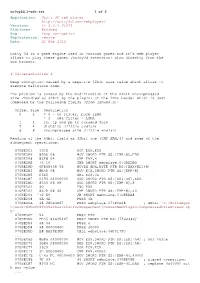

Application: Unity 3D Web Player

unity3d_1-adv.txt 1 of 2 Application: Unity 3D web player http://unity3d.com/webplayer/ Versions: <= 3.2.0.61061 Platforms: Windows Bug: heap corruption Exploitation: remote Date: 21 Feb 2012 Unity 3d is a game engine used in various games and it’s web player allows to play these games (unity3d extension) also directly from the web browser. # Vulnerabilities # Heap corruption caused by a negative 32bit size value which allows to execute malicious code. The problem is caused by the modification of the 64bit uncompressed size (handled as 32bit by the plugin) of the lzma header which is just composed by the following fields (from lzma86.h): Offset Size Description 0 1 = 0 - no filter, pure LZMA = 1 - x86 filter + LZMA 1 1 lc, lp and pb in encoded form 2 4 dictSize (little endian) 6 8 uncompressed size (little endian) Reading of the 64bit field as 32bit one (CMP EAX,4) and some of the subsequent operations: 070BEDA3 33C0 XOR EAX,EAX 070BEDA5 895D 08 MOV DWORD PTR SS:[EBP+8],EBX 070BEDA8 83F8 04 CMP EAX,4 070BEDAB 73 10 JNB SHORT webplaye.070BEDBD 070BEDAD 0FB65438 05 MOVZX EDX,BYTE PTR DS:[EAX+EDI+5] 070BEDB2 8B4D 08 MOV ECX,DWORD PTR SS:[EBP+8] 070BEDB5 D3E2 SHL EDX,CL 070BEDB7 0196 A4000000 ADD DWORD PTR DS:[ESI+A4],EDX 070BEDBD 8345 08 08 ADD DWORD PTR SS:[EBP+8],8 070BEDC1 40 INC EAX 070BEDC2 837D 08 40 CMP DWORD PTR SS:[EBP+8],40 070BEDC6 ^72 E0 JB SHORT webplaye.070BEDA8 070BEDC8 6A 4A PUSH 4A 070BEDCA 68 280A4B07 PUSH webplaye.074B0A28 ; ASCII "C:/BuildAgen t/work/b0bcff80449a48aa/PlatformDependent/CommonWebPlugin/CompressedFileStream.cp p" 070BEDCF 53 PUSH EBX 070BEDD0 FF35 84635407 PUSH DWORD PTR DS:[7546384] 070BEDD6 6A 04 PUSH 4 070BEDD8 68 00000400 PUSH 40000 070BEDDD E8 BA29E4FF CALL webplaye.06F0179C .. -

GOG-API Documentation Release 0.1

GOG-API Documentation Release 0.1 Gabriel Huber Jun 05, 2018 Contents 1 Contents 3 1.1 Authentication..............................................3 1.2 Account Management..........................................5 1.3 Listing.................................................. 21 1.4 Store................................................... 25 1.5 Reviews.................................................. 27 1.6 GOG Connect.............................................. 29 1.7 Galaxy APIs............................................... 30 1.8 Game ID List............................................... 45 2 Links 83 3 Contributors 85 HTTP Routing Table 87 i ii GOG-API Documentation, Release 0.1 Welcome to the unoffical documentation of the APIs used by the GOG website and Galaxy client. It’s a very young project, so don’t be surprised if something is missing. But now get ready for a wild ride into a world where GET and POST don’t mean anything and consistency is a lucky mistake. Contents 1 GOG-API Documentation, Release 0.1 2 Contents CHAPTER 1 Contents 1.1 Authentication 1.1.1 Introduction All GOG APIs support token authorization, similar to OAuth2. The web domains www.gog.com, embed.gog.com and some of the Galaxy domains support session cookies too. They both have to be obtained using the GOG login page, because a CAPTCHA may be required to complete the login process. 1.1.2 Auth-Flow 1. Use an embedded browser like WebKit, Gecko or CEF to send the user to https://auth.gog.com/auth. An add-on in your desktop browser should work as well. The exact details about the parameters of this request are described below. 2. Once the login process is completed, the user should be redirected to https://www.gog.com/on_login_success with a login “code” appended at the end. -

Who Put the Mod in Commodification? –

Who put the mod in commodification? – A descriptive analysis of the First Person Shooter mod culture. David B. Nieborg [email protected] Http://GameSpace.nl April 2004 Index 1. Introduction 3 1.1 Methodology 3 2. Participatory Culture and Co-Created Media & Games 4 2.1 What makes a mod: an introduction 5 2.2 Am I Mod or Not? 6 2.3 Sorts of mods 7 3. ComMODdification of mod-culture: the industry is reaching out 8 3.1 The Unreal Universe 9 4. The Battlefield Universe: looking for trends within a mod-community 11 4.1 Battlefield: IP 12 4.2 Battlefield mods and original IP 13 4.3 Blurring boundaries? The case of Desert Combat 15 5. Extreme Modding (for Dummies) 15 5.1 Extremist (Right) modding 17 5.2 The Trend is death!? 19 6. Conclusion 19 7. Literature 20 8. Ludology 21 Appendix A 23 2 Title of expert video tutorials1 on making (software) modifications (or mods2) for UT2004. Who put the mod in commodification? – A descriptive analysis of the First Person The tutorials cover almost all areas of developing content for UT2004, starting with Shooter mod culture. level design, digging into making machinima, learning to make weapons, mutators, characters and vehicles3. Each area is divided into several sub-areas and than divided Author into several topics, resulting in more than 270 video tutorials. When NY Times David B. Nieborg journalist Marriot (2003) stated: “So far, mod makers say, there is no ‘Mod Making for Dummies’ book”, his statement was only partly true. -

Visual Scripting in Game Development

Kristófer Ívar Knutsen Visual Scripting in Game Development Metropolia University of Applied Sciences Bachelor of Engineering Information Technology Bachelor’s Thesis 10 May 2021 Abstract Author: Kristófer Knutsen Title: Visual Scripting in Game Development Number of Pages: 41 pages + 3 appendices Date: 10 May 2021 Degree: Bachelor of Engineering Degree Programme: Information Technology Professional Major: Mobile Solutions Supervisor: Ulla Sederlöf, Principal Lecturer This thesis explores how visual scripting is used in game development, both in practice and in theory. The topic starts on a broad level but is narrowed down to the two most popular game engines to date, the Unreal Engine and the Unity Engine. Next, the theoretical part covers how the selected game engines have evolved from their infancy and when visual scripting became a part of their respective platform. Finally, the visual scripting tools were used to create mini-games in each engine, and the process was compared and analyzed in the practical part. The hypothesis that visual scripting is inferior to traditional written code was tested by research and in practice. The main emphasis was to look at the visual scripting offerings for the Unreal Engine and the Unity Engine, compare them to their scripting language counterpart and then compare them against each other. The results of both the theoretical and the practical part show that the visual scripting tools are an excellent asset for anyone who wants to use their many benefits, such as quick iteration, fast prototyping, and increased productivity between programmers and designers. However, it also demonstrates some aspects of the game development process that should be taken into consideration while using visual scripting due to some limitations that the tool can show. -

The Way It's Meant to Be Played

The Way It’s Meant to be Played Analyst Day 2004 Mark Daly – VP, Content Development EverQuest®DOOM 3 II Unreal Tournament 2004 Sonyid SoftwareOnline Entertainment Ep ic New content drives DEMAND for new technology Virtual Skipper 3 Digital Jesters/Nadeo Fa r Cr y ©2004 NV IDIA Cor porati on. All rights res erved. ©2004 NV IDIA Cor porati on. All rights resUbiS erved. oft EverQuest®DOOM 3 II Unreal Tournament 2004 Sonyid SoftwareOnline Entertainment Ep ic Virtual Skipper 3 Digital Jesters/Nadeo Fa r Cr y ©2004 NV IDIA Cor porati on. All rights res erved. ©2004 NV IDIA Cor porati on. All rights resUbiS erved. oft The Way It’s Meant To Be Played Titles 350 300 250 200 150 100 50 0 2002 2003 2004 2005 ©2004 NV IDIA Cor porati on. All rights res erved. Dual focus of TWIMTBP Development Demand Generation ©2004 NV IDIA Cor porati on. All rights res erved. NVIDIA Content Team – A World Wide Organization Over 50 graphics experts deployed world wide Backgrounds from SGI, Alias|Wavefront, Pixar, Sony and 15 separate game companies ©2004 NV IDIA Cor porati on. All rights res erved. NVSDK – Software Development Kit ©2004 NV IDIA Cor porati on. All rights res erved. FX Composer Everquest content courtesy of Sony Online Entertainment Inc. ©2004 NV IDIA Cor porati on. All rights res erved. NVPerfHUD – Performance Analyzer TotalTotal••DriverDriverFrameFrame numbernumber Instrumentation: Instrumentation: raterate ofof DrawDraw PrimitivesPrimitives Batches:Batches: •Number of triangles/frame ••Current•Current••NumberTimeTime spentspent of triangles/frame inin FrameFrame •••Value•Value••ElapsedElapsedTimeTime overover spentspent timetime timetime inin inin DriverDriver thethe sessionsession ••DriverDriver waitingwaiting forfor GPUGPU (Spin)(Spin) ••GPUGPU IdleIdle PerformancePerformance CounterCounter CurrentCurrent MemoryMemory footprint:footprint: HistogramHistogram ofof DrawDraw PrimitivesPrimitives BatchesBatches ••AGPAGP ••VideoVideo MemoryMemory Image courtesy of FutureMark Corp. -

![Unreal Tournament 2003 - 2004 [ UT2003 - UT2004 ] - Unreal 2 XMP - Unreal Saga & Unreal Engine Francophone](https://docslib.b-cdn.net/cover/1592/unreal-tournament-2003-2004-ut2003-ut2004-unreal-2-xmp-unreal-saga-unreal-engine-francophone-3301592.webp)

Unreal Tournament 2003 - 2004 [ UT2003 - UT2004 ] - Unreal 2 XMP - Unreal Saga & Unreal Engine Francophone

Unreal.fr : Unreal Tournament 2003 - 2004 [ UT2003 - UT2004 ] - Unreal 2 XMP - Unreal Saga & Unreal Engine Francophone UNREAL TOURNAMENT 2004 / GUIDE MENU FORUMS Plan du guide : Informations Accueil Preview Le forum du site ● Installation Interview La saga Unreal ● Paramètres Unreal Engine ❍ Affichage Galerie Créations ❍ Audio Fiche Clans Histoire ❍ Personnage Armes ❍ Partie Mon compte Equipes ❍ Commandes Mon assistant Items ❍ Armes Maps ❍ HUD Modes de jeu DOSSIERS ● Les fichiers .ini Véhicules ❍ USER.ini ■ Annonces Binds ■ Listes des messages écrit et vocaux préenregistrés Astuces ■ La console et ses commandes FAQ ❍ UT2004.ini Guides ● Benchmark Techniques ● Chercher et choisir les serveurs ● Installer les mods, mutators, maps... ● Prendre une capture d'écran et enregistrer une démo FICHIERS Patch Sources : Bonus Packs - TweakGuides Démos - Keybinding & Aliases Serveur - Le forum Unreal.fr - ...et mon expérience personnelle ;p Maps Modèles Mods Autres : Mutators Skins Réaliser de belles captures d'écran (saVTRonic 16/04/04 ) Utilitaires Guides des cartes Onslaught (saVTRonic 30/03/04 ) Vidéos AS-Convoy (keyboard_junky 23/02/04 ) Voice Packs ONS-Torlan (keyboard_junky 15/02/04 ) Wallpapers Vidéos et Demos mIRC http://www.unreal.fr/unrealtournament2004/guide.php (1 of 2)12/06/2004 15:04:02 Unreal.fr : Unreal Tournament 2003 - 2004 [ UT2003 - UT2004 ] - Unreal 2 XMP - Unreal Saga & Unreal Engine Francophone UNREAL TOURNAMENT 2004 / GUIDE / INSTALLATION MENU FORUMS Informations Accueil Preview Avant de commencer Le forum du site Interview La première à chose à faire après avoir installer votre jeu, vérifier la disponibilité de patch et de bonus La saga Unreal Unreal Engine packs et les installer. Galerie Créations Fiche Clans Histoire Les mutators de compétitions Armes Mon compte Certains serveurs sont également équipés d’outils « dit de compétitions ». -

Evolutionary Interactive Bot for the FPS Unreal Tournament 2004

Evolutionary Interactive Bot for the FPS Unreal Tournament 2004 Jos´eL. Jim´enez1, Antonio M. Mora2, Antonio J. Fern´andez-Leiva1 1 Departamento de Lenguajes y Ciencias de la Computaci´on, Universidad de M´alaga(Spain). [email protected],[email protected] 2 Departamento de Teor´ıade la Se~nal,Telem´aticay Comunicaciones. ETSIIT-CITIC, Universidad de Granada (Spain). [email protected] Abstract. This paper presents an interactive genetic algorithm for gen- erating a human-like autonomous player (bot) for the game Unreal Tour- nament 2004. It is based on a bot modelled from the knowledge of an expert human player. The algorithm provides two types of interaction: by an expert in the game and by an expert in the algorithm. Each one af- fects different aspects of the evolution, directing it towards better results regarding the agent's humanness (objective of this work). It has been con- ducted an analysis of the experts' influence on the performance, showing much better results after these interactions that the non-interactive ver- sion. The best bot were submitted to the BotPrize 2014 competition (which seeks for the best human-like bot), getting the second position. 1 Introduction Unreal Tournament 2004[1], also know as UT2004 or UT2K4, is a first per- son shooter (FPS) game mainly focused on the multiplayer experience, which includes several game modes for promoting this playability. It includes a one- player mode in which the rest of team partners (if so) and rivals are controlled by the machine, thus they are autonomous. They are called bots. -

Troubleshooting Guide

TROUBLESHOOTING GUIDE Solved - Issue with USB devices after Windows 10 update KB4074588 Logitech is aware of a Microsoft update (OS Build 16299.248) which is reported to affect USB support on Windows 10 computers. Support statement from Microsoft "After installing the February 13, 2018 security update, KB4074588 (OS Build 16299.248), some USB devices and onboard devices, such as a built-in laptop camera, keyboard or mouse, may stop working for some users." If you are using Microsoft Windows 10, (OS Build 16299.248) and are having USB-related issues. Microsoft has released a new update KB4090913 (OS Build 16299.251) to resolve this issue. We recommend you follow Microsoft Support recommendations and install the latest Microsoft Windows 10 update: https://support.microsoft.com/en-gb/help/4090913/march5- 2018kb4090913osbuild16299-251. This update was released by Microsoft on March 5th in order to address the USB connection issues and should be downloaded and installed automatically using Windows Update. For instructions on installing the latest Microsoft update, please see below: If you have a working keyboard/mouse If you have a non-working keyboard/mouse If you have a working keyboard/mouse: 1. Download the latest Windows update from Microsoft. 2. If your operating system is 86x-based, click on the second option. If your operating system is 64x-based, click on the third option. 3. Once you have downloaded the update, double-click on the downloaded file and follow the on-screen instructions to complete the update installation. NOTE: If you wish to install the update manually, you can download the 86x and 64x versions of the update from http://www.catalog.update.microsoft.com/Search.aspx?q=KB4090913 If you currently have no working keyboard/mouse: For more information, see the Microsoft article on how to start and use the Windows 10 Recovery Environment (WinRE): https://support.microsoft.com/en-us/help/4091240/usb-devices-may-stop-working-after- installing-the-february-13-2018-upd Do the following: 1. -

2020 Walt Disney World EGF National Championships to Air Rocket League Finals on ESPN2 Three Championship Titles Airing Across ESPN Digital Platforms

2020 Walt Disney World EGF National Championships to air Rocket League Finals on ESPN2 Three Championship Titles airing across ESPN Digital Platforms New York, NY (June 11, 2020) The Electronic Gaming Federation (EGF) today unveiled plans to air the inaugural 2020 Walt Disney World EGF High School National Championships of Rocket League on ESPN2 on Sunday June 14 at 7:30pm. Early rounds of competition will stream live on the ESPN App as well as on EGF Twitch, EGF Mixer, EGF YouTube and EGF Facebook. The 2020 Walt Disney World EGF High School National Championships is the culmination of the EGFH season 5 (fall) and 6 (spring) which has qualified high school players in Rocket League, Overwatch and Super Smash Brothers Ultimate competing June 12-14. For the safety of the players following the onset of the COVID-19 pandemic, the championship tournament, originally planned for ESPN Wide World of Sports Complex at Walt Disney World Resort, was moved to an at-home tournament. Qualifying teams hail from across the United States, including Alaska, Connecticut, New York, Texas and Virginia. “We’re very excited to be able to partner with ESPN and our publishers to provide such a grand stage to showcase and celebrate our best student teams,” says Eric Johnson, CEO, EGF. “We look forward to being back at Walt Disney World Resort and ESPN Wide World of Sports Complex in June 2021.” The esports (competitive video games) industry is on fire: having generated more than $1 billion globally in 2019. Industry analysts have estimated that over two billion people worldwide play or watch esports across all gaming titles.