Procedural Audio for Video Games

Recommended publications

A History of Video Game Consoles Introduction the First Generation

A History of Video Game Consoles. By Terry Amick, Gerald Long, James Schell, Gregory Shehan

Introduction

Today video games are a multibillion dollar industry. They are in practically all American households. They are a major driving force in electronic innovation and development, though you would hardly guess this from their modest beginning. The first video games were played on mainframe computers in the 1950s through the 1960s (Winter, n.d.). Arcade games would be the first glimpse for the general public of video games. Magnavox would produce the first home video game console featuring the popular arcade game Pong for the 1972 Christmas Season, released as Tele-Games Pong (Ellis, n.d.).

The First Generation

Magnavox Odyssey

Rushed into production, the original game did not even have a microprocessor. Games were selected by using toggle switches. At first sales were poor because people mistakenly believed you needed a Magnavox TV to play the game (GameSpy, n.d., para. 11). By 1975 annual sales had reached 300,000 units (Gamester81, 2012). Other manufacturers copied Pong and began producing their own game consoles, which promptly got them sued for copyright infringement (Barton & Loguidice, n.d.).

The Second Generation

Atari 2600

Atari released the 2600 in 1977. Although not the first, the Atari 2600 popularized the use of a microprocessor and game cartridges in video game consoles. The original device had an 8-bit 1.19MHz 6507 microprocessor (“The Atari”, n.d.), two joysticks, a paddle controller, and two game cartridges. Combat and Pac Man were included with the console. In 2007 the Atari 2600 was inducted into the National Toy Hall of Fame (“National Toy”, n.d.).

First Amendment Protection of Artistic Entertainment: Toward Reasonable Municipal Regulation of Video Games

Vanderbilt Law Review, Volume 36, Issue 5 (October 1983), Article 2

First Amendment Protection of Artistic Entertainment: Toward Reasonable Municipal Regulation of Video Games. John E. Sullivan

Follow this and additional works at: https://scholarship.law.vanderbilt.edu/vlr. Part of the First Amendment Commons, and the Intellectual Property Law Commons.

Recommended Citation: John E. Sullivan, First Amendment Protection of Artistic Entertainment: Toward Reasonable Municipal Regulation of Video Games, 36 Vanderbilt Law Review 1223 (1983). Available at: https://scholarship.law.vanderbilt.edu/vlr/vol36/iss5/2

This Note is brought to you for free and open access by Scholarship@Vanderbilt Law. It has been accepted for inclusion in Vanderbilt Law Review by an authorized editor of Scholarship@Vanderbilt Law. For more information, please contact [email protected].

NOTE: First Amendment Protection of Artistic Entertainment: Toward Reasonable Municipal Regulation of Video Games

Outline
I. INTRODUCTION
II. LOCAL GOVERNMENT REGULATION OF VIDEO GAMES
  A. Zoning Regulation
    1. Video Games Permitted by Right
    2. Video Games as Conditional or Special Uses
    3. Video Games as Accessory Uses
    4. Special Standards for Establishments Offering Video Game Entertainment
      a. Age of Players and Hours of Operation Standards
      b. Arcade Space and Structural Standards
      c. Arcade Location Standards
      d. Noise, Litter, and Parking Standards
      e. Adult Supervision Standards
  B. Licensing Regulation
    1. Licensing and Zoning Distinguished
    2. Video Game Licensing Standards
    3. Administration of Video Game Licensing
      a. Denial of a License

What Way Is It Meant to Be Played?

What Way Is It Meant To Be Played? Florian Mihola, March 2020

Abstract: The most commonly used interface between a video game and the human user is a handheld “game controller”, “game pad”, or in some occasions an “arcade stick.” Directional pads, analog sticks and buttons (both digital and analog) are linked to in-game actions. One or multiple simultaneous inputs may be necessary to communicate the intentions of the user. Activating controls may be more or less convenient depending on their position and size. In order to enable the user to perform all inputs which are necessary during gameplay, it is thus imperative to find a mapping between in-game actions and buttons, analog sticks, and so on. We present simple formats for such mappings as well as for the constraints on possible inputs which are either determined by a phys-

and home video game consoles digital inputs were the standard up until the “16-bit” era of the 1990s. Sony PlayStation, Nintendo 64 and Sega Saturn are among the first which brought with them additional analog controls, either at launch or as an updated controller option. And even though modern mass-market offerings include analog sticks and analog triggers, digital buttons and directional pads remain the ubiquitous fundamentals of input. The simple nature and widespread use of digital inputs leads to a degree of interoperability: Game software is not necessarily tied to a single game controller (whether we interpret this as a specific model, a design and protocol available by different manufacturers, or a class of generic controllers) but can be enjoyed using a range of controllers, provided they share at least some common characteristics.

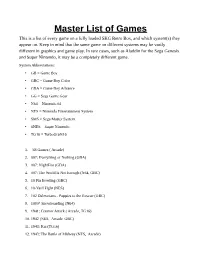

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(s) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:
• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (Arcade, TG16)
10. 1942 (NES, Arcade, GBC)
11. 1943: Kai (TG16)
12. 1943: The Battle of Midway (NES, Arcade)
13. 1944: The Loop Master (Arcade)
14. 1999: Hore, Mitakotoka! Seikimatsu (NES)
15. 19XX: The War Against Destiny (Arcade)
16. 2 on 2 Open Ice Challenge (Arcade)
17. 2010: The Graphic Action Game (Colecovision)
18. 2020 Super Baseball (Arcade, SNES)
19. 21-Emon (TG16)
20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
21. 3 Count Bout (Arcade)
22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
23. 3-D Tic-Tac-Toe (Atari 2600)
24. 3-D Ultra Pinball: Thrillride (GBC)
25. 3-D WorldRunner (NES)
26. 3D Asteroids (Atari 7800)

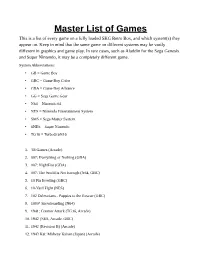

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(s) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:
• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (TG16, Arcade)
10. 1942 (NES, Arcade, GBC)
11. 1942 (Revision B) (Arcade)
12. 1943 Kai: Midway Kaisen (Japan) (Arcade)
13. 1943: Kai (TG16)
14. 1943: The Battle of Midway (NES, Arcade)
15. 1944: The Loop Master (Arcade)
16. 1999: Hore, Mitakotoka! Seikimatsu (NES)
17. 19XX: The War Against Destiny (Arcade)
18. 2 on 2 Open Ice Challenge (Arcade)
19. 2010: The Graphic Action Game (Colecovision)
20. 2020 Super Baseball (SNES, Arcade)
21. 21-Emon (TG16)
22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
23. 3 Count Bout (Arcade)
24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
25. 3-D Tic-Tac-Toe (Atari 2600)
26. 3-D Ultra Pinball: Thrillride (GBC)

Programme Edition

Weekdays 13:00 - 18:00; Weekend 14:00 - 19:00

Day: Friday 18/12 - 19:00, Saturday 19/12, Sunday 20/12, Monday 21/12, Tuesday 22/12
Theme: Science Fiction, Zelda & the J-RPG (Japanese role-playing game), Arcade, Strange Games, Anime

NES / Twin Famicom / MSX: The Legend of Zelda Rainbow Islands Teenage Mutant Hero Turtles
SC 3000 / Master System: Psychic World Streets of Rage Rampage
Super Nintendo: Syndicate Zelda Link to the Past Turtles in Time + Sailor Moon
Megadrive / Mega CD / 32X: Alien Soldier + Robo Aleste Lunar 2 + Soleil Dynamite Headdy EarthWorm Jim + Rocket Knight Adventures Dragon Ball Z + Quackshot
Nintendo 64: Star Wars Shadows of the Empire Furai no Shiren 2 Ridge Racer 64 Buck Bumble
Saturn: Deep Fear Shining Force III Scenario 2 Sky Target Parodius Deluxe Pack + Virtual Hydlide Magic Knight Rayearth + DBZ Shinbutouden
Playstation: Final Fantasy VIII + Saga Frontier 2 Elemental Gearbolt + Gun Blade Arts Tobal n°1
Dreamcast: Ghost Blade Spawn Twinkle Star Sprites Alice's Mom Rescue
Gamecube: F Zero GX Zelda Four Swords (4 players) Bleach
Playstation 2: Earth Defense Force Code Age Commanders / Stella Deus Puyo Pop Fever Earth Defense Force Cowboy Bebop + Berserk
Xbox: Panzer Dragoon Orta Out Run 2 Dead or Alive Xtreme Beach Volleyball
Wii / Wii U / Wii JP: Fragile Dreams Xenoblade Chronicles X Devils Third Samba De Amigo Tatsunoko vs Capcom + The Skycrawlers
Playstation 3: Guilty Gear Xrd Demon's Souls J Stars Victory versus + Catherine Kingdom Hearts 2.5
Xbox 360 / XBOX

CP/M-80 Kaypro

$3.00, June-July 1985, No. 24

TABLE OF CONTENTS
• C'ing Into Turbo Pascal
• Soldering: The First Steps
• Eight Inch Drives On The Kaypro
• Kaypro BIOS Patch
• Alternative Power Supply For The Kaypro
• 48 Lines On A BBI
• Adding An 8" SSSD Drive To A Morrow MD-2
• Review: The Ztime-I
• BDOS Vectors (Mucking Around Inside CP/M)
• The Pascal Runoff

Regular Features
• The S-100 Bus
• In The Public Domain
• C'ing Clearly
• The Xerox 820 Column
• The Slicer Column
• The Kaypro Column
• Pascal Procedures
• FORTH words
• On Your Own
• Technical Tips
• Culture Corner

Future Tense
• Tidbits
• 68000 Vrs. 80X86
• MSX In The USA
• The Last Page

NEW LOWER PRICES! NOW IN "UNKIT"* FORM TOO!

"BIG BOARD II" 4 MHz Z80-A SINGLE BOARD COMPUTER WITH "SASI" HARD-DISK INTERFACE
• $795 ASSEMBLED & TESTED
• $545 "UNKIT"*
• $245 PC BOARD WITH 16 PARTS

SIZE: 8.75" X 15.5"
POWER: +5V @ 3A, +-12V @ 0.1A

Jim Ferguson, the designer of the "Big Board" distributed by Digital Research Computers, has produced a stunning new computer that Cal-Tex Computers has been shipping for a year. Called "Big Board II", it has the following features:
• 4 MHz Z80-A CPU and Peripheral Chips: The new Ferguson computer runs at 4 MHz.
• "SASI" Interface for Winchester Disks: Our "Big Board II" implements the Host portion of the "Shugart Associates Systems Interface." Adding a Winchester disk drive is no harder than attaching a floppy-disk

Video Gaming and Death

Untitled. Photographer: Pawel Kadysz (https://stocksnap.io/photo/OZ4IBMDS8E).

Special Issue: Video Gaming and Death, edited by John W. Borchert. Issue 09 (2018)

articles
• Introduction to a Special Issue on Video Gaming and Death, by John W. Borchert
• Death Narratives: A Typology of Narratological Embeddings of Player's Death in Digital Games, by Frank G. Bosman
• No Sympathy for Devils: What Christian Video Games Can Teach Us About Violence in Family-Friendly Entertainment, by Vincent Gonzalez
• Perilous and Peril-Less Gaming: Representations of Death with Nintendo’s Wolf Link Amiibo, by Rex Barnes
• “You Shouldn’t Have Done That”: “Ben Drowned” and the Uncanny Horror of the Haunted Cartridge, by John Sanders
• Win to Exit: Perma-Death and Resurrection in Sword Art Online and Log Horizon, by David McConeghy
• Death, Fabulation, and Virtual Reality Gaming, by Jordan Brady Loewen
• The Self Across the Gap of Death: Some Christian Constructions of Continued Identity from Athenagoras to Ratzinger and Their Relevance to Digital Reconstitutions, by Joshua Wise

reviews
• Graveyard Keeper. A Review, by Kathrin Trattner

interviews
• Interview with Dr. Beverley Foulks McGuire on Video-Gaming, Buddhism, and Death, by John W. Borchert

reports
• Dying in the Game: A Perceptive of Life, Death and Rebirth Through World of Warcraft, by Wanda Gregory

Perilous and Peril-Less Gaming: Representations of Death with Nintendo’s Wolf Link Amiibo. Rex Barnes

Abstract: This article examines the motif of death in popular electronic games and its imaginative applications when employing the Wolf Link Amiibo in The Legend of Zelda: Breath of the Wild (2017).

The History of Educational Computer Games

Beyond Edutainment: Exploring the Educational Potential of Computer Games. By Simon Egenfeldt-Nielsen

Submitted to the IT-University of Copenhagen as partial fulfilment of the requirements for the PhD degree, February 2005. Candidate: Simon Egenfeldt-Nielsen, Købmagergade 11A, 4. floor, 1150 Copenhagen, +45 40107969, [email protected]. Supervisors: Anker Helms Jørgensen and Carsten Jessen

Abstract: Computer games have attracted much attention over the years, mostly attention of the less flattering kind. This has been true for computer games focused on entertainment, but also for what for years seemed a sure winner, edutainment. This dissertation aims to be a modest contribution to understanding educational use of computer games by building a framework that goes beyond edutainment: a framework that goes beyond the limitations of edutainment, not relying on a narrow perception of computer games in education. The first part of the dissertation outlines the background for building an inclusive and solid framework for educational use of computer games. Such a foundation includes a variety of quite different perspectives, for example educational media and non-electronic games. It is concluded that educational use of computer games remains strongly influenced by educational media, leading to the domination of edutainment. The second part takes up the challenges posed in part 1, looking especially to educational theory and computer games research to present alternatives. By drawing on previous research, three generations of educational computer games are identified. The first generation is edutainment, which perceives the use of computer games as a direct way to change behaviours through repeated action. The second generation puts the spotlight on the relation between computer game and player.

Imitation and Limitation

Fake Bit: Imitation and Limitation. Brett Camper, [email protected]

ABSTRACT: A small but growing trend in video game development uses the “obsolete” graphics and sound of 1980s-era, 8-bit microcomputers to create “fake 8-bit” games on today’s hardware platforms. This paper explores the trend by looking at a specific case study, the platform-adventure game La-Mulana, which was inspired by the Japanese MSX computer platform. Discussion includes the specific aesthetic traits the game adopts (as well as ignores), and the 8-bit technological structures that caused them in their original 1980s MSX incarnation. The role of technology in shaping aesthetics, and the persistence of such effects beyond the lifetime of the originating technologies, is considered as a more general “retro media” phenomenon.

adventure and role-playing games, which are traditionally less action-oriented. Several lesser known NES games contributed to the style early on as well, such as Hudson Soft’s Faxanadu (1989) and Milon’s Secret Castle (1986), as well as Konami’s The Goonies II (1987). In more recent decades, the Castlevania series from Konami has also adopted and advanced the form, from Symphony of the Night (1997) on PlayStation, through Portrait of Ruin (2006) for the Nintendo DS.

La-Mulana is an extremely well made title that ranks among the finest in this genre, displaying unusual craftsmanship and cohesiveness. Its player-protagonist is Professor Lemeza, an archaeologist explorer charting out vast underground ruins in a distant, unspecified corner of the globe (Indiana Jones is an obvious pop culture reference, but also earlier examples like H.

Introduction to Gaming

IWKS 2300, Fall 2019. A (redacted) History of Computer Gaming. John K. Bennett

How many hours per week do you spend gaming? A: None B: Less than 5 C: 5 – 15 D: 15 – 30 E: More than 30

What has been the driving force behind almost all innovations in computer design in the last 50 years? A: defense & military B: health care C: commerce & banking D: gaming

Games have been around for a long time… Senet, circa 3100 B.C. 麻將 (mahjong, ma-jiang), ~500 B.C.

What is a “Digital Game”?
• “a software program in which one or more players make decisions through the control of the game objects and resources in pursuit of a goal” (Dignan, 2010)
1. Goal
2. Rules
3. Feedback loop (extrinsic / intrinsic motivation)
4. Voluntary Participation
McGonigal, J. (2011). Reality is Broken: Why Games Make Us Better and How They Can Change the World. Penguin Press

Early Computer Games: Alan Turing & Claude Shannon, Early Chess-Playing Programs
• In 1948, Turing and David Champernowne wrote “Turochamp”, a paper design of a chess-playing computer program. No computer of that era was powerful enough to host Turochamp.
• In 1950, Shannon published a paper on computer chess entitled “Programming a Computer for Playing Chess”*. The same algorithm has also been used to play blackjack and the stock market (with considerable success).
*Programming a Computer for Playing Chess, Philosophical Magazine, Ser. 7, Vol. 41, No. 314 - March 1950.

OXO – Noughts and Crosses
• PhD work of A.S. Douglas in 1952, University of Cambridge, UK
• Tic-Tac-Toe game on EDSAC computer
• Player used dial

Download 80 PLUS 4983 Horizontal Game List

4 player + 4983 Horizontal

10-Yard Fight (Japan) advmame 2P
10-Yard Fight (USA, Europe) nintendo
1941 - Counter Attack (Japan) supergrafx
1941: Counter Attack (World 900227) mame172 2P sim
1942 (Japan, USA) nintendo
1942 (set 1) advmame 2P alt
1943 Kai (Japan) pcengine
1943 Kai: Midway Kaisen (Japan) mame172 2P sim
1943: The Battle of Midway (Euro) mame172 2P sim
1943 - The Battle of Midway (USA) nintendo
1944: The Loop Master (USA 000620) mame172 2P sim
1945k III advmame 2P sim
19XX: The War Against Destiny (USA 951207) mame172 2P sim
2010 - The Graphic Action Game (USA, Europe) colecovision
2020 Super Baseball (set 1) fba 2P sim
2 On 2 Open Ice Challenge (rev 1.21) mame078 4P sim
36 Great Holes Starring Fred Couples (JU) (32X) [!] sega32x
3 Count Bout / Fire Suplex (NGM-043)(NGH-043) fba 2P sim
3D Crazy Coaster vectrex
3D Mine Storm vectrex
3D Narrow Escape vectrex
3-D WorldRunner (USA) nintendo
3 Ninjas Kick Back (U) [!] megadrive
3 Ninjas Kick Back (U) supernintendo
4-D Warriors advmame 2P alt
4 Fun in 1 advmame 2P alt
4 Player Bowling Alley advmame 4P alt
600 advmame 2P alt
64th. Street - A Detective Story (World) advmame 2P sim
688 Attack Sub (UE) [!] megadrive
720 Degrees (rev 4) advmame 2P alt
720 Degrees (USA) nintendo
7th Saga supernintendo
800 Fathoms mame172 2P alt
'88 Games mame172 4P alt / 2P sim
8 Eyes (USA) nintendo
'99: The Last War advmame 2P alt
AAAHH!!! Real Monsters (E) [!] supernintendo
AAAHH!!! Real Monsters (UE) [!] megadrive
Abadox - The Deadly Inner War (USA) nintendo
A.B.