Result Clustering for Keyword Search on Graphs

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Amfar India to Benefit Amfar, the Foundation for AIDS Research

amfAR India To Benefit amfAR, The Foundation for AIDS Research November 2014 Mumbai, India Event Produced by Andy Boose / AAB Productions A Golden Opportunity amfAR held its inaugural Indian fundraising gala in Mumbai in November 2013. The event was hosted by amfAR Global Fundraising Chairman Sharon Stone, and Bollywood stars Aishwarya Rai Bachchan and Abhishek Bachchan. It was chaired by amfAR Chairman Kenneth Cole, Vikram Chatwal, Anuj Gupta, Rocky Malhotra, and Hilary Swank. Memorable Moments The black-tie event, presented by Dr. Cyrus Poonawalla and held at The Taj Mahal Palace Hotel, featured a cocktail reception, dinner, and an exquisite gold-themed fashion show that showcased three of India’s most important fashion designers -- Rohit Bal, Abu Jani- Sandeep Khosla, and Tarun Tahiliani. The evening concluded with a spirited performance by pop sensation Ke$ha, whose Hilary Swank for amfAR India songs included her hits Animal, We R Who We R, and Tik Tok. Among the Guests Neeta Ambani, Torquhil Campbell, Duke of Argyll, Sonal Chauhan, Venugopal Dhoot, Nargis Fakhri, Sunil Gavaskar, Parmeshwar Godrej, Lisa Haydon, Dimple Kapadia, Tikka Kapurthala, Nandita Mahtani, Rocky The Love Gold Fashion Show for amfAR India Abhishek Bachchan, Sharon Stone, Nita Ambani, and Aishwarya Rai Bachchan for amfAR India Malhotra, Dino Morea, among many others. Kenneth Cole and Sharon Stone for amfAR India Total Media Impressions: 2.4 Billion Total Media Value: $1.6 Million Aishwarya Rai Bachchan for amfAR India The Love Gold Fashion Show for amfAR India A Star-Studded Cast amfAR’s fundraising events are world renowned for their ability to attract a glittering list of top celebrities, entertainment industry elite, and international society. -

FICCI FRAMES: a Brief Introduction the FICCI Media & Entertainment

FICCI FRAMES: A Brief Introduction The FICCI Media & Entertainment Committee, headed by the legendary Late Yash Chopra, one of the prominent film producers in India, comprising visionary thought leaders of the Indian entertainment industry, first met in 1999. An idea was born which crystallized into the first FICCI FRAMES, a platform and a voice for the entire Indian media and entertainment industry. The first ever industry report giving numbers to this industry was brought out by FICCI and is updated every year. Over the last 19 years, FICCI FRAMES, the most definitive annual global convention on the entertainment business in Asia, has grown in strength, stature and horizon. FRAMES not only facilitates communication and exchange between key industry figures, influencers and policy makers, it is also an unparalleled platform for the exchange of ideas and knowledge between individuals, countries and conglomerates. FRAMES has brought together more the 400,000 professionals from India and abroad representing the various sectors of the industry. The three eventful days of FRAMES are pulsating with activity – sessions, keynotes, workshops, masterclasses, policy roundtables, B2B meetings, exhibition, cultural evening, networking events and a meeting of the best minds in the business under a single umbrella. 20 th Edition of FICCI FRAMES: March 12 th -14 th , 2019 FRAMES 2019 will be the landmark 20 th Edition of FRAMES reflecting the richness of the platform which it has acquired over the years. Like every year, the world’s media and entertainment industry will be in attendance at FRAMES 2019, with nearly 2,000 Indian and over 600 foreign delegates representing the entire gamut of the sector, including Film, Broadcast (TV & Radio), Digital Entertainment, Animation, Gaming, VFX, etc. -

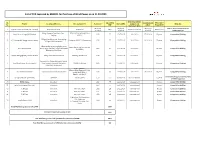

Updated All Sources Contracted & Commissioned- As on 31.10.2020

List of PPA Executed by MSEDCL for Purchase of Wind Power as on 31.10.2020 SCOD (Schedulded Sr. Capacity in Commissionin PPA tenure Vender location of Projects Evacuation level Tariff rate Date of EPA Commercial Remarks No. MW g Date From SCOD Operation date) As per list As per list As per list EPA on Preferrential tariff on 1 Various wind Generators (list attached) As per list attached As per PPA 2479 As per list attached As above list. attached attached attached MERC approval Village Dayapar, Dist Kutch, State:- 765/400/220 kV Bhuj GSS of 2 Adani Green Energy (MP) Limited 2.85 75 17.07.2018 18.01.2020 27.12.2019 25 years Competitive Bidding Gujarat PGCIL Village Kawaldhara and Surrounding 3 KCT Renewable Energy Private Limited Osmanabad MSETCL Substation 2.85 75 17.07.2018 31.07.2019 26.12.2019 25 years Competitive Bidding Villages, Dist Osmanabad Village Kotda, Laxmipar, Bhadra nana, 220KV bay of 765/400/220 KV 4 Inox Wind Limited Muru, Nagviri & others,Tehsil-Lakhpat & 2.86 50 17.07.2018 17.01.2020 25 years Competitive Bidding PGCIL Bhuj Nakhatrana, Dist Kutch 5 Mytrah Energy (India) Private Limited Village Jevli, Dist Osmanabad Naldurg 132/33 S/sn 2.86 100 16.07.2018 27.06.2020 25 years Competitive Bidding Anjanadi Site, Village-Malegaon Thokal, 6 Hero Wind Energy Private Limited Gaur Pimpari, Kannad & Phulambri 132/33 kV Naldurg 2.86 76 17.07.2018 23.06.2020 25 years Competitive Bidding Tehsil, Dist. Aurangabad Ujani - 132/33 / LILO on Ujani-Umarga S/C line 7 Torrent Power Limited Lohara and Koral, Dist Osmanabad at 132/33 kV S/sn. -

Download Download

Kervan – International Journal of Afro-Asiatic Studies n. 21 (2017) Item Girls and Objects of Dreams: Why Indian Censors Agree to Bold Scenes in Bollywood Films Tatiana Szurlej The article presents the social background, which helped Bollywood film industry to develop the so-called “item numbers”, replace them by “dream sequences”, and come back to the “item number” formula again. The songs performed by the film vamp or the character, who takes no part in the story, the musical interludes, which replaced the first way to show on the screen all elements which are theoretically banned, and the guest appearances of film stars on the screen are a very clever ways to fight all the prohibitions imposed by Indian censors. Censors found that film censorship was necessary, because the film as a medium is much more popular than literature or theater, and therefore has an impact on all people. Indeed, the viewers perceive the screen story as the world around them, so it becomes easy for them to accept the screen reality and move it to everyday life. That’s why the movie, despite the fact that even the very process of its creation is much more conventional than, for example, the theater performance, seems to be much more “real” to the audience than any story shown on the stage. Therefore, despite the fact that one of the most dangerous elements on which Indian censorship seems to be extremely sensitive is eroticism, this is also the most desired part of cinema. Moreover, filmmakers, who are tightly constrained, need at the same time to provide pleasure to the audience to get the invested money back, so they invented various tricks by which they manage to bypass censorship. -

F a S Hionab

Bollywood actors Aishwarya Rai Bachchan and hus- band Abhishek Bachchan leave after casting their vote Johnny Galecki, Jim Parsons, Kaley Cuoco, Simon Helberg, Kunal Nayyar, Mayim Bialik and Melissa Rauch from the cast of ”The Big Bang at a polling station during the fourth phase of general Theory” attend their handprint ceremony at the TCL Chinese Theatre IMAX on in Hollywood, California. election in Mumbai FASHION DeVon Franklin and Meagan Good arrive at the Premiere of 'The Intruder' at ArcLight FOCUS Hollywood in Hollywood, California. I O N A H B Actress Lucy Liu speaks during her Walk of Fame ceremony in Hollywood. Bollywood actor Hrithik Roshan leaves after casting his vote at a polling station during the fourth phase of general S L election in Mumbai, A E F Bollywood actor Salman Khan poses for photographs after casting his GLOBE vote at a polling station in Mumbai. Kaley Cuoco from the cast of ”The Big Bang Theory” poses during their hand- print ceremony at the TCL Chinese Thea- tre IMAX in Hollywood, California. Actress Drew Barrymore, Cameron Diaz and Lucy Liu Bollywood actress Preity Zinta poses for photographs as stand on the star during Liu's Walk of Fame ceremony in she leaves a polling station after casting her vote in Hollywood. Mumbai. Bollywood actress Ihana Dhillon and actor Dev Kharoud pose for pho- tographs during the promotion of the upcoming Punjabi film 'Blackia', in Amritsar. Host Kelly Clarkson performs onstage dur- ing the 2019 Billboard Music Awards at MGM Grand Garden Arena in Las Vegas, Nevada. From left, Japan’s new Emperor Naruhito, Empress Masako, Crown Prince Akishino and Crown Princess Kiko arrive to attend a ceremony to Bollywood actress Vidya Balan poses for Princess Alexia, King Willem-Alexander, Princess Ariane, Queen Maxima and Princess Alexia arrive to Amersfoort to cele- receive the first audience after the accession to the throne at the Mat- photographs as she leaves a polling sta- brate Kings Day. -

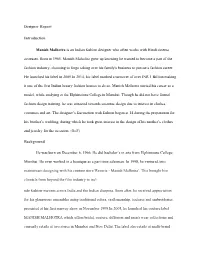

Designer Report Manish Malhotra

Designer Report Introduction Manish Malhotra is an Indian fashion designer who often works with Hindi cinema actresses. Born in 1965, Manish Maholtra grew up knowing he wanted to become a part of the fashion industry, choosing to forgo taking over his family's business to pursue a fashion career. He launched his label in 2005.In 2014, his label marked a turnover of over INR 1 Billion making it one of the first Indian luxury fashion houses to do so. Manish Malhotra started his career as a model, while studying at the Elphinstone College in Mumbai. Though he did not have formal fashion design training, he was attracted towards costume design due to interest in clothes, costumes and art. The designer’s fascination with fashion began at 14 during the preparation for his brother’s wedding, during which he took great interest in the design of his mother’s clothes and jewelry for the occasion. (BoF). Background He was born on December 6, 1966. He did bachelor’s in arts from Elphinstone College, Mumbai. He even worked in a boutique as a part time salesman. In 1998, he ventured into mainstream designing with his couture store 'Reverie - Manish Malhotra’. This brought him clientele from beyond the film industry to incl ude fashion-mavens across India and the Indian diaspora. Soon after, he received appreciation for his glamorous ensembles using traditional colors, craftsmanship, textures and embroideries presented at his first runway show in November 1999.In 2005, he launched his couture label MANISH MALHOTRA which offers bridal, couture, diffusion and men's wear collections and currently retails at two stores in Mumbai and New Delhi. -

The Past; Preparing for the Future

Vol. 5 No. 08 New York August 2020 Sanya Madalsa Malhotra Sharma breakout Angel face with talent a spunky spirit Janhvi Priyanka Kapoor Abhishek chopra Jonas Navigating troubled Desi girl turns Bollywood Bachchan global icon Learning thefrom past Volume 5 - August 2020 Inside Copyright 2020 Bollywood Insider 50 Dhaval Roy Deepali Singh 56 18 www.instagram.com/BollywoodInsiderNY/ Scoops 12 SRK’s plastic act 40 leaves fans curious “I had a deeper connection with Kangana’s grading Exclusives Sushant” system returns to bite 50 Sanjana Sanghi 64 her Learning from past; preparing for future 06 Abhishek Bachchan “I feel like a newcomer” 56 Sushmita Sen From desi girl to a truly global icon 12 Priyanka Chopra Jonas Perspective Angel face with spunky spirit happy 18 Madalsa Sharma 32 Patriotic Films independence day “I couldn’t talk in front of Vidya Balan” 26 Sanya Malhotra 62 Actors on OTT 15TH AUGUST The privileged one 41 44 Janhvi Kapoor PREVIOUS ISSUES FOR ADVERTISEMENT July June May (516) 680-8037 [email protected] CLICK For FREE Subscription Bollywood Insider August 2020 LOWER YOUR PROPERTY TAXES Our fee 40 35%; others charge 50% NO REDUCTION NO FEE A. SINGH, Hicksville sign up for 2021 Serving Homeowners in Nassau County Varinder K Bhalla PropertyTax Former Commissioner, Nassau County ReductionGuru Assessment Review Commission CALL or whatsApp [email protected] 3 Patriotic Fervor Of Bollywood Stars iti Sunshine Bhalla, a young TV host in Williams during 2008 to 2011. In 2012, Shah New York, anchored India Independence Rukh Khan, Anushka Sharma and Sanjay Dutt R Day celebrations which were televised appeared on her show and shared their patriotic in India and 23 countries in Europe. -

Kilimanjaro Enthiran 1080P Hd Wallpapers

1 / 3 Kilimanjaro Enthiran 1080p Hd Wallpapers 30 Eyl 2010 — Dr. Vasi invents a super-powered robot, Chitti, in his own image. ... Rai 1080p HD Enthiran (The Robot) Tamil Song -- Kilimanjaro.flv.. 3 Şub 2021 — Kuttywap Enthiran Tamil Bluray Video Songs 1080p HD . ... Kilimanjaro - (Endhiran) - Bluray - 1080p - x264 - DTS - 5.1,… ... to edit footage .... Images: On/Off Sort By: Name /Date. Search Your Videos: Enthiran HD Video Song ... [+] Kilimanjaro - Enthiran 1080p HD Video Song.mp4 [156.54 MB].. 26 Mar 2021 — Kilimanjaro Enthiran 1080p Hd Wallpapers Skycity Sy-ms8518 Driver CD -- DOWNLOAD (Mirror #1). skycity driverskycity wifi 11n driverskycity .... 29 Mar 2021 — Shankar Music by A. R. Rahman Enthiran full movie hd film Dr. Vasi invents a super- powered robot, Chitti, in his own image. highway 2014 movie .... 27 Mar 2021 — MiniTool Partition Wizard Pro License Key with Torrent Free Download 2020. Kilimanjaro Enthiran 1080p Hd Wallpapers .... Kilimanjaro Enthiran 1080p Hd Wallpapers - tondusttemliの日記. Sahana Sivaji The Boss Bluray Hd Song; Youtube I, All About Music, Full A.R. Rahman .... 13 Mar 2021 — Arima Arima Enthiran 1080p HD Video Song ... Enthiran Kilimanjaro video song HD 5.1 Dolby Atmos surround ... Kilimanjaro Song from Endhiran (Enthiran) Movie and Our Exciting Story! ... In fact, we had packed some spare T-shirts with full sleeves if it was too sunny .... Amazon.com: Kilimanjaro (From "Enthiran"): Javed Ali,Chinmayi: MP3 Downloads.. 12 May 2021 — Kilimanjaro Official Video Song | Enthiran | Rajinikanth | Aishwarya ... 4k | Full HD | Kilimanjaro Song 0.25x Speed 3.47 to 3.53 Aishwarya .... Kilimanjaro Official Video Song | Enthiran | Rajinikanth | Aishwarya Rai | A.R.Rahman .. -

Vasudha Builders Limited

+91-8048371928 Vasudha Builders Limited https://www.indiamart.com/deepas-mehendi/ Deepa Sharma, India,s foremost celebrity mehendi designer. She has always been the first choice of Bollywood celebrities including Aishwariya Rai for her wedding & many other high profile stars marriage ceremonies. About Us The pioneer Mrs. Bhramadevi Sharma began practicing the art of Mehendi at the age of Nine. Her mother, Late Smt. Ayodhya Sharma too was an expert in this field.A winner of several prestigious awards, Bhramadevi's first client was famous designer Pallavi Jaikishen. She has now created a global clientele.In her career of fifty years, her art has won her many laurels. Today, the artistic legacy of Bhramadevi is proudly carried forward, by her daughter Deepa Sharma, who with a concrete backing of two decades of experience is one of the finest Mehendi artist in India and has also showcased her talent in many countries around the world. She belongs to the third generation of a gifted family of Mehendi artists under, which this mother and daughter's designs have adorned, the hands of beautiful women from Nargis Dutt to Aishwarya Rai Bachchan. Creating a unique design is a cakewalk for Deepa as it is a result of her amazing creativity. Designs are created on the spot by her and this is done according to the shape and size of the hands. The uniqueness of each and every design is guaranteed and the mehendi is all set to make the bride look one in a million for that special moment. Not just marriage and engagements Deepa, also offer mehendi services for a wide range of functions and ceremonies such as Godhbharai and Namkaran Ceremony,Chooda Ceremony and Karvachauth Mehendi. -

LET US ENTERTAIN YOU Your Ultimate Guide to This Month’S Superb Selection of Movies, TV, Music and Games

LET US ENTERTAIN YOU Your ultimate guide to this month’s superb selection of movies, TV, music and games All on board and right at your fi ngertips Movies New Releases and Recent Movies p1244––1225 Made in Belgium p126 World Cinema p1227 TV Programmes See the full list p128-129 News and Sports See highlights on p129 Music See the full list on p1330 Games See the full list on p1330 Welcome! Switch on to switch off and relax on your Brussels Airlines fl ight today with our extensive range of top entertainment options. Thiss month’s linne-upp inccluddes Chhristtopphheer Nolaan’s viscerall massterppiecce DUUNKKIRRKK, movving family ddrama THHE GGLAASS CCAASTTLLEE, hilarious Belgiaan moockuumeentaryy KINNG OF THE BELGIANS, and longg-ruunnning Ameerican sitcom THE MIDDDLEE. © 2017 WARNER BROTHERS. ALL RIGHTS RESERVED. ALL BROTHERS. WARNER 2017 © DUNKIRK DECEMBER 2017 123 LLH_123-130H_123-130 IFE.PDV.inddIFE.PDV.indd 123123 223/11/20173/11/2017 110:200:20 FEATURED MOVIES What Happened to Monday (2017) Director Tommy Wirkola Cast Noomi Rapace, Glenn Close, Willem Dafoe Languages EN, FR, DE, ES Noomi Rapace works overtime playing a set of identical septuplets in a dystopian world where families are limited to one child due to overpopulation. The seven siblings – named after the days of the week – have to avoid being put into a long sleep by the government while investigating the disappearance of one of their own. A Family Man (2017) Director Mark Williams Cast Gerard Butler, Alison Brie, Willem Dafoe Languages EN, FR, DE, ES, PT Headhunter Dane Jensen (Gerard Butler), whose entire life revolves around closing deals in a survival-of-the- fi tt est boiler room, batt les his top rival for control of their job placement company. -

Iifa Awards 2011 Popular Awards Nominations

IIFA AWARDS 2011 POPULAR AWARDS NOMINATIONS BEST FILM MUSIC DIRECTION ❑ Band Baaja Baaraat ❑ Salim - Sulaiman — Band Baaja Baaraat ❑ Dabangg ❑ Sajid - Wajid & Lalit Pandit — Dabangg ❑ My Name Is Khan ❑ Vishal - Shekhar — I Hate Luv Storys ❑ Once Upon A Time In Mumbaai ❑ Vishal Bhardwaj — Ishqiya ❑ Raajneeti ❑ Shankar Ehsaan Loy — My Name Is Khan ❑ Pritam — Once Upon A Time In Mumbaai BEST DIRECTION ❑ Maneesh Sharma — Band Baaja Baaraat BEST STORY ❑ Abhinav Kashyap — Dabangg ❑ Maneesh Sharma — Band Baajaa Baaraat ❑ Sanjay Leela Bhansali — Guzaarish ❑ Abhishek Chaubey — Ishqiya ❑ Karan Johar — My Name Is Khan ❑ Shibani Bhatija — My Name Is Khan ❑ Milan Luthria — Once Upon A Time In Mumbaai ❑ Rajat Aroraa — Once Upon A Time In Mumbaai ❑ Vikramaditya Motwane — Udaan ❑ Anurag Kashyap, Vikramaditya Motwane — Udaan PERFORMANCE IN A LEADING ROLE: MALE LYRICS ❑ Salman Khan — Dabangg ❑ Amitabh Bhattacharya — Ainvayi Ainvayi, Band Baajaa Baraat ❑ Hrithik Roshan — Guzaarish ❑ Faaiz Anwar — Tere Mast Mast, Dabangg ❑ Shah Rukh Khan — My Name Is Khan ❑ Gulzar — Dil Toh Bachcha, Ishqiya ❑ Ajay Devgn — Once Upon A Time In Mumbaai ❑ Niranjan Iyengar — Sajdaa, My Name Is Khan ❑ Ranbir Kapoor — Raajneeti ❑ Irshad Kamil — Pee Loon, Once Upon A Time In Mumbaai PERFORMANCE IN A LEADING ROLE: FEMALE PLAYBACK SINGER: MALE ❑ Anushka Sharma — Band Baaja Baaraat ❑ Vishal Dadlani — Adhoore, Break Ke Baad ❑ Kareena Kapoor — Golmaal 3 ❑ Rahat Fateh Ali Khan — Tere Mast Mast Do Nain, Dabangg ❑ Aishwarya Rai Bachchan — Guzaarish ❑ Shafqat Amanat Ali — Bin Tere, I Hate -

Very Rare Old Indian Ads February 8, 2013

VINTAGE: Very Rare old Indian Ads February 8, 2013 Sabun (Soap), coffee, butter, chocolate, chappal, toothpaste and more. Here are some old Indian Ads :) Hope you enjoy this post! (Part 2 coming soon!) http://www.pinkvilla.co m/entertainmenttags/r are/vintage-very-rare- old-indian-ads 5 Star Energy Bar ad 1971. More than a decade later it was selling for a princely sum of Rs. 5. Amul Butter girl, year 1968 Bata Ad, year 1963 Nescafe Coffee ad from 1965 Colgate Dental Cream, 1958 Ad. An iconic ad from 1980s The look that lingers. Shakila, star of K. Amarnath's Baraat Code 10 Tonic for Hair Dressing, 1978 Medimix Soap Ad, 1970s Launched in 1969. The early campaign was...simple and the soap was beach friendly. Hit the Indian market just about the time My Little Pony 'n Friends hit the television. Ad for Lure Beauty Mask. Early 1970s. Model: Either the famous Persis Khambatta or (more probably) Yasmin Daji, crowned Miss India 1966 by Persis Khambatta The famous Cherry Blossom Ad from late 70s, early 80s featuring model Nandini Sen. 'Pyaar andha hota hai', lol. Bith Control means to insure Blind Love Risk. Ovanil. Which assures the public that it insures this blind risk in such an easy and quite harmless way. - Ad for contraceptive pills published in FilmIndia Magazine, August, 1943 The beautiful Mala Sinha Amul's Nutramul Ad. Does the kid look like Jugal Hansraj? Maltova Ad. My dad is always takjing about how he loved this stuff :) This 1955 Brylcreem ad features the inimitable Kishore Kumar It appeared in the pages of Filmfare.